mirror of

https://github.com/huggingface/diffusers.git

synced 2026-01-27 17:22:53 +03:00

[docs] Update training docs (#5512)

* first draft
* try hfoption syntax
* fix hfoption id
* add text2image
* fix tag
* feedback
* feedbacks
* add textual inversion
* DreamBooth
* lora
* controlnet
* instructpix2pix
* custom diffusion
* t2i
* separate training methods and models
* sdxl
* kandinsky
* wuerstchen
* light edits


@@ -100,26 +100,36 @@

|

||||

title: Create a dataset for training

|

||||

- local: training/adapt_a_model

|

||||

title: Adapt a model to a new task

|

||||

- local: training/unconditional_training

|

||||

title: Unconditional image generation

|

||||

- local: training/text_inversion

|

||||

title: Textual Inversion

|

||||

- local: training/dreambooth

|

||||

title: DreamBooth

|

||||

- local: training/text2image

|

||||

title: Text-to-image

|

||||

- local: training/lora

|

||||

title: Low-Rank Adaptation of Large Language Models (LoRA)

|

||||

- local: training/controlnet

|

||||

title: ControlNet

|

||||

- local: training/instructpix2pix

|

||||

title: InstructPix2Pix Training

|

||||

- local: training/custom_diffusion

|

||||

title: Custom Diffusion

|

||||

- local: training/t2i_adapters

|

||||

title: T2I-Adapters

|

||||

- local: training/ddpo

|

||||

title: Reinforcement learning training with DDPO

|

||||

- sections:

|

||||

- local: training/unconditional_training

|

||||

title: Unconditional image generation

|

||||

- local: training/text2image

|

||||

title: Text-to-image

|

||||

- local: training/sdxl

|

||||

title: Stable Diffusion XL

|

||||

- local: training/kandinsky

|

||||

title: Kandinsky 2.2

|

||||

- local: training/wuerstchen

|

||||

title: Wuerstchen

|

||||

- local: training/controlnet

|

||||

title: ControlNet

|

||||

- local: training/t2i_adapters

|

||||

title: T2I-Adapters

|

||||

- local: training/instructpix2pix

|

||||

title: InstructPix2Pix

|

||||

title: Models

|

||||

- sections:

|

||||

- local: training/text_inversion

|

||||

title: Textual Inversion

|

||||

- local: training/dreambooth

|

||||

title: DreamBooth

|

||||

- local: training/lora

|

||||

title: LoRA

|

||||

- local: training/custom_diffusion

|

||||

title: Custom Diffusion

|

||||

- local: training/ddpo

|

||||

title: Reinforcement learning training with DDPO

|

||||

title: Methods

|

||||

title: Training

|

||||

- sections:

|

||||

- local: using-diffusers/other-modalities

|

||||

|

||||

@@ -12,245 +12,247 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# ControlNet

|

||||

|

||||

[Adding Conditional Control to Text-to-Image Diffusion Models](https://arxiv.org/abs/2302.05543) (ControlNet) by Lvmin Zhang and Maneesh Agrawala.

|

||||

[ControlNet](https://hf.co/papers/2302.05543) models are adapters trained on top of another pretrained model. They allow for a greater degree of control over image generation by conditioning the model with an additional input image. The input image can be a Canny edge map, a depth map, a human pose, and many more.

|

||||

|

||||

This example is based on the [training example in the original ControlNet repository](https://github.com/lllyasviel/ControlNet/blob/main/docs/train.md). It trains a ControlNet to fill circles using a [small synthetic dataset](https://huggingface.co/datasets/fusing/fill50k).

|

||||

If you're training on a GPU with limited vRAM, you should try enabling the `gradient_checkpointing`, `gradient_accumulation_steps`, and `mixed_precision` parameters in the training command. You can also reduce your memory footprint by using memory-efficient attention with [xFormers](../optimization/xformers). JAX/Flax training is also supported for efficient training on TPUs and GPUs, but it doesn't support gradient checkpointing or xFormers. You should have a GPU with >30GB of memory if you want to train faster with Flax.

|

||||

|

||||

## Installing the dependencies

|

||||

This guide will explore the [train_controlnet.py](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/train_controlnet.py) training script to help you become familiar with it and show how you can adapt it for your own use case.

|

||||

|

||||

Before running the scripts, make sure to install the library's training dependencies.

|

||||

Before running the script, make sure you install the library from source:

|

||||

|

||||

<Tip warning={true}>

|

||||

|

||||

To successfully run the latest versions of the example scripts, we highly recommend **installing from source** and keeping the installation up to date. We update the example scripts frequently and install example-specific requirements.

|

||||

|

||||

</Tip>

|

||||

|

||||

To do this, execute the following steps in a new virtual environment:

|

||||

```bash

|

||||

git clone https://github.com/huggingface/diffusers

|

||||

cd diffusers

|

||||

pip install -e .

|

||||

pip install .

|

||||

```

|

||||

|

||||

Then navigate into the [example folder](https://github.com/huggingface/diffusers/tree/main/examples/controlnet)

|

||||

Then navigate to the example folder containing the training script and install the required dependencies for the script you're using:

|

||||

|

||||

<hfoptions id="installation">

|

||||

<hfoption id="PyTorch">

|

||||

```bash

|

||||

cd examples/controlnet

|

||||

```

|

||||

|

||||

Now run:

|

||||

```bash

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

</hfoption>

|

||||

<hfoption id="Flax">

|

||||

|

||||

And initialize an [🤗Accelerate](https://github.com/huggingface/accelerate/) environment with:

|

||||

If you have access to a TPU, the Flax training script runs even faster! Let's run the training script on the [Google Cloud TPU VM](https://cloud.google.com/tpu/docs/run-calculation-jax). Create a single TPU v4-8 VM and connect to it:

|

||||

|

||||

```bash
ZONE=us-central2-b
TPU_TYPE=v4-8
VM_NAME=hg_flax

gcloud alpha compute tpus tpu-vm create $VM_NAME \
  --zone $ZONE \
  --accelerator-type $TPU_TYPE \
  --version tpu-vm-v4-base

gcloud alpha compute tpus tpu-vm ssh $VM_NAME --zone $ZONE -- \
```

|

||||

|

||||

Install JAX 0.4.5:

|

||||

|

||||

```bash

|

||||

pip install "jax[tpu]==0.4.5" -f https://storage.googleapis.com/jax-releases/libtpu_releases.html

|

||||

```

|

||||

|

||||

Then install the required dependencies for the Flax script:

|

||||

|

||||

```bash

|

||||

cd examples/controlnet

|

||||

pip install -r requirements_flax.txt

|

||||

```

|

||||

|

||||

</hfoption>

|

||||

</hfoptions>

|

||||

|

||||

<Tip>

|

||||

|

||||

🤗 Accelerate is a library for helping you train on multiple GPUs/TPUs or with mixed-precision. It'll automatically configure your training setup based on your hardware and environment. Take a look at the 🤗 Accelerate [Quick tour](https://huggingface.co/docs/accelerate/quicktour) to learn more.

|

||||

|

||||

</Tip>

|

||||

|

||||

Initialize an 🤗 Accelerate environment:

|

||||

|

||||

```bash

|

||||

accelerate config

|

||||

```

|

||||

|

||||

Or for a default 🤗Accelerate configuration without answering questions about your environment:

|

||||

To set up a default 🤗 Accelerate environment without choosing any configurations:

|

||||

|

||||

```bash

|

||||

accelerate config default

|

||||

```

|

||||

|

||||

Or if your environment doesn't support an interactive shell like a notebook:

|

||||

Or if your environment doesn't support an interactive shell, like a notebook, you can use:

|

||||

|

||||

```python

|

||||

```bash

|

||||

from accelerate.utils import write_basic_config

|

||||

|

||||

write_basic_config()

|

||||

```

|

||||

|

||||

## Circle filling dataset

|

||||

Lastly, if you want to train a model on your own dataset, take a look at the [Create a dataset for training](create_dataset) guide to learn how to create a dataset that works with the training script.

|

||||

|

||||

The original dataset is hosted in the ControlNet [repo](https://huggingface.co/lllyasviel/ControlNet/blob/main/training/fill50k.zip), but we re-uploaded it [here](https://huggingface.co/datasets/fusing/fill50k) to be compatible with 🤗 Datasets so that it can handle the data loading within the training script.

|

||||

<Tip>

|

||||

|

||||

Our training examples use [`runwayml/stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) because that is what the original set of ControlNet models was trained on. However, ControlNet can be trained to augment any compatible Stable Diffusion model (such as [`CompVis/stable-diffusion-v1-4`](https://huggingface.co/CompVis/stable-diffusion-v1-4)) or [`stabilityai/stable-diffusion-2-1`](https://huggingface.co/stabilityai/stable-diffusion-2-1).

|

||||

The following sections highlight parts of the training script that are important for understanding how to modify it, but it doesn't cover every aspect of the script in detail. If you're interested in learning more, feel free to read through the [script](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/train_controlnet.py) and let us know if you have any questions or concerns.

|

||||

|

||||

To use your own dataset, take a look at the [Create a dataset for training](create_dataset) guide.

|

||||

</Tip>

|

||||

|

||||

## Training

|

||||

## Script parameters

|

||||

|

||||

Download the following images to condition our training with:

|

||||

The training script provides many parameters to help you customize your training run. All of the parameters and their descriptions are found in the [`parse_args()`](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/controlnet/train_controlnet.py#L231) function. This function provides default values for each parameter, such as the training batch size and learning rate, but you can also set your own values in the training command if you'd like.

|

||||

|

||||

```sh

|

||||

For example, to speed up training with mixed precision using the fp16 format, add the `--mixed_precision` parameter to the training command:

|

||||

|

||||

```bash
accelerate launch train_controlnet.py \
  --mixed_precision="fp16"
```

|

||||

|

||||

Many of the basic and important parameters are described in the [Text-to-image](text2image#script-parameters) training guide, so this guide just focuses on the relevant parameters for ControlNet:

|

||||

|

||||

- `--max_train_samples`: the number of training samples; this can be lowered for faster training, but if you want to stream really large datasets, you'll need to include this parameter and the `--streaming` parameter in your training command

|

||||

- `--gradient_accumulation_steps`: the number of steps over which gradients are accumulated before an optimizer update is performed; this allows you to train with a bigger effective batch size than your GPU memory can typically handle
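As a rough illustration of how gradient accumulation relates to batch size, the sketch below computes the effective batch size seen by each optimizer update. The function and its `num_processes` argument are hypothetical helpers for this guide, not part of the training script.

```python
def effective_batch_size(
    train_batch_size: int,
    gradient_accumulation_steps: int = 1,
    num_processes: int = 1,  # assumption: one process per GPU
) -> int:
    """Number of samples contributing to a single optimizer update."""
    return train_batch_size * gradient_accumulation_steps * num_processes


# --train_batch_size=1 with --gradient_accumulation_steps=4 reaches the same
# effective batch size as --train_batch_size=4 without accumulation.
print(effective_batch_size(1, 4))
print(effective_batch_size(4))
```

Accumulating over several small batches trades extra training time for lower peak memory, which is why the low-memory commands in this guide pair a batch size of 1 with several accumulation steps.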

|

||||

|

||||

### Min-SNR weighting

|

||||

|

||||

The [Min-SNR](https://huggingface.co/papers/2303.09556) weighting strategy can help with training by rebalancing the loss to achieve faster convergence. The training script supports predicting either `epsilon` (noise) or `v_prediction`, and Min-SNR is compatible with both prediction types. This weighting strategy is only supported by PyTorch and is unavailable in the Flax training script.

|

||||

|

||||

Add the `--snr_gamma` parameter and set it to the recommended value of 5.0:

|

||||

|

||||

```bash
accelerate launch train_controlnet.py \
  --snr_gamma=5.0
```
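To make the effect of `--snr_gamma` more concrete, here is a minimal sketch of the per-timestep loss weight described in the Min-SNR paper. It is written in plain Python for readability and is an illustration under that assumption, not code copied from the training script; `min_snr_weight` is a hypothetical helper name.

```python
def min_snr_weight(snr: float, gamma: float = 5.0, prediction_type: str = "epsilon") -> float:
    """Loss weight for one timestep with signal-to-noise ratio `snr`."""
    if snr <= 0:
        raise ValueError("snr must be positive")
    clipped = min(snr, gamma)  # cap the SNR at gamma
    if prediction_type == "epsilon":
        return clipped / snr
    if prediction_type == "v_prediction":
        return clipped / (snr + 1.0)
    raise ValueError(f"unsupported prediction type: {prediction_type}")


# Low-noise timesteps (high SNR) are down-weighted; noisy ones keep weight 1.
for snr in (0.5, 5.0, 20.0):
    print(snr, min_snr_weight(snr))
```

With `gamma=5.0`, timesteps whose SNR exceeds 5 contribute less to the loss, which is the rebalancing the paper credits for faster convergence.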

|

||||

|

||||

## Training script

|

||||

|

||||

As with the script parameters, a general walkthrough of the training script is provided in the [Text-to-image](text2image#training-script) training guide. Instead, this guide takes a look at the relevant parts of the ControlNet script.

|

||||

|

||||

The training script has a [`make_train_dataset`](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/controlnet/train_controlnet.py#L582) function for preprocessing the dataset with image transforms and caption tokenization. You'll see that in addition to the usual caption tokenization and image transforms, the script also includes transforms for the conditioning image.

|

||||

|

||||

<Tip>

|

||||

|

||||

If you're streaming a dataset on a TPU, performance may be bottlenecked by the 🤗 Datasets library which is not optimized for images. To ensure maximum throughput, you're encouraged to explore other dataset formats like [WebDataset](https://webdataset.github.io/webdataset/), [TorchData](https://github.com/pytorch/data), and [TensorFlow Datasets](https://www.tensorflow.org/datasets/tfless_tfds).

|

||||

|

||||

</Tip>

|

||||

|

||||

```py
conditioning_image_transforms = transforms.Compose(
    [
        transforms.Resize(args.resolution, interpolation=transforms.InterpolationMode.BILINEAR),
        transforms.CenterCrop(args.resolution),
        transforms.ToTensor(),
    ]
)
```
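The following sketch shows the geometry these transforms apply to a conditioning image: the shorter side is resized to the target resolution, then a centered square is cropped. It is plain Python with a hypothetical helper name, and the rounding choices are an assumption that may differ from torchvision by a pixel.

```python
def resize_then_center_crop(width: int, height: int, resolution: int):
    """Return the resized (width, height) and the crop box (left, top, right, bottom)."""
    short, long = min(width, height), max(width, height)
    new_long = int(resolution * long / short)  # keep the aspect ratio
    if width <= height:
        new_w, new_h = resolution, new_long
    else:
        new_w, new_h = new_long, resolution
    left = int(round((new_w - resolution) / 2.0))
    top = int(round((new_h - resolution) / 2.0))
    return (new_w, new_h), (left, top, left + resolution, top + resolution)


# A 1024x768 landscape image at --resolution=512
print(resize_then_center_crop(1024, 768, 512))
```

Because the crop is centered, content near the long edges of a non-square conditioning image is discarded, which is worth keeping in mind when preparing your own dataset.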

|

||||

|

||||

Within the [`main()`](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/controlnet/train_controlnet.py#L713) function, you'll find the code for loading the tokenizer, text encoder, scheduler and models. This is also where the ControlNet model is loaded either from existing weights or randomly initialized from a UNet:

|

||||

|

||||

```py
if args.controlnet_model_name_or_path:
    logger.info("Loading existing controlnet weights")
    controlnet = ControlNetModel.from_pretrained(args.controlnet_model_name_or_path)
else:
    logger.info("Initializing controlnet weights from unet")
    controlnet = ControlNetModel.from_unet(unet)
```

|

||||

|

||||

The [optimizer](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/controlnet/train_controlnet.py#L871) is set up to update the ControlNet parameters:

|

||||

|

||||

```py
params_to_optimize = controlnet.parameters()
optimizer = optimizer_class(
    params_to_optimize,
    lr=args.learning_rate,
    betas=(args.adam_beta1, args.adam_beta2),
    weight_decay=args.adam_weight_decay,
    eps=args.adam_epsilon,
)
```

|

||||

|

||||

Finally, in the [training loop](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/controlnet/train_controlnet.py#L943), the conditioning text embeddings and image are passed to the down and mid-blocks of the ControlNet model:

|

||||

|

||||

```py
encoder_hidden_states = text_encoder(batch["input_ids"])[0]
controlnet_image = batch["conditioning_pixel_values"].to(dtype=weight_dtype)

down_block_res_samples, mid_block_res_sample = controlnet(
    noisy_latents,
    timesteps,
    encoder_hidden_states=encoder_hidden_states,
    controlnet_cond=controlnet_image,
    return_dict=False,
)
```

|

||||

|

||||

If you want to learn more about how the training loop works, check out the [Understanding pipelines, models and schedulers](../using-diffusers/write_own_pipeline) tutorial which breaks down the basic pattern of the denoising process.

|

||||

|

||||

## Launch the script

|

||||

|

||||

Now you're ready to launch the training script! 🚀

|

||||

|

||||

This guide uses the [fusing/fill50k](https://huggingface.co/datasets/fusing/fill50k) dataset, but remember, you can create and use your own dataset if you want (see the [Create a dataset for training](create_dataset) guide).

|

||||

|

||||

Set the environment variable `MODEL_NAME` to a model id on the Hub or a path to a local model and `OUTPUT_DIR` to where you want to save the model.

|

||||

|

||||

Download the following images to condition your training with:

|

||||

|

||||

```bash

|

||||

wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/controlnet_training/conditioning_image_1.png

|

||||

|

||||

wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/controlnet_training/conditioning_image_2.png

|

||||

```

|

||||

|

||||

Specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`pretrained_model_name_or_path`](https://huggingface.co/docs/diffusers/en/api/diffusion_pipeline#diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path) argument.

|

||||

One more thing before you launch the script! Depending on the GPU you have, you may need to enable certain optimizations to train a ControlNet. The default configuration in this script requires ~38GB of vRAM. If you're training on more than one GPU, add the `--multi_gpu` parameter to the `accelerate launch` command.

|

||||

|

||||

The training script creates and saves a `diffusion_pytorch_model.bin` file in your repository.

|

||||

<hfoptions id="gpu-select">

|

||||

<hfoption id="16GB">

|

||||

|

||||

On a 16GB GPU, you can use the bitsandbytes 8-bit optimizer and gradient checkpointing to optimize your training run. Install bitsandbytes:

|

||||

|

||||

```bash

|

||||

pip install bitsandbytes

|

||||

```

|

||||

|

||||

Then, add the following parameters to your training command:

|

||||

|

||||

```bash

|

||||

export MODEL_DIR="runwayml/stable-diffusion-v1-5"

|

||||

export OUTPUT_DIR="path to save model"

|

||||

|

||||

accelerate launch train_controlnet.py \

|

||||

--pretrained_model_name_or_path=$MODEL_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--dataset_name=fusing/fill50k \

|

||||

--resolution=512 \

|

||||

--learning_rate=1e-5 \

|

||||

--validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \

|

||||

--validation_prompt "red circle with blue background" "cyan circle with brown floral background" \

|

||||

--train_batch_size=4 \

|

||||

--push_to_hub

|

||||

--gradient_checkpointing \

|

||||

--use_8bit_adam \

|

||||

```

|

||||

|

||||

This default configuration requires ~38GB VRAM.

|

||||

</hfoption>

|

||||

<hfoption id="12GB">

|

||||

|

||||

By default, the training script logs outputs to tensorboard. Pass `--report_to wandb` to use Weights &

|

||||

Biases.

|

||||

|

||||

Gradient accumulation with a smaller batch size can be used to reduce training requirements to ~20 GB VRAM.

|

||||

On a 12GB GPU, you'll need to use the bitsandbytes 8-bit optimizer, gradient checkpointing, and xFormers, and set the gradients to `None` instead of zero to reduce your memory usage.

|

||||

|

||||

```bash

|

||||

export MODEL_DIR="runwayml/stable-diffusion-v1-5"

|

||||

export OUTPUT_DIR="path to save model"

|

||||

|

||||

accelerate launch train_controlnet.py \

|

||||

--pretrained_model_name_or_path=$MODEL_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--dataset_name=fusing/fill50k \

|

||||

--resolution=512 \

|

||||

--learning_rate=1e-5 \

|

||||

--validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \

|

||||

--validation_prompt "red circle with blue background" "cyan circle with brown floral background" \

|

||||

--train_batch_size=1 \

|

||||

--gradient_accumulation_steps=4 \

|

||||

--push_to_hub

|

||||

--use_8bit_adam \

|

||||

--gradient_checkpointing \

|

||||

--enable_xformers_memory_efficient_attention \

|

||||

--set_grads_to_none \

|

||||

```

|

||||

|

||||

## Training with multiple GPUs

|

||||

</hfoption>

|

||||

<hfoption id="8GB">

|

||||

|

||||

`accelerate` allows for seamless multi-GPU training. Follow the instructions [here](https://huggingface.co/docs/accelerate/basic_tutorials/launch)

|

||||

for running distributed training with `accelerate`. Here is an example command:

|

||||

On an 8GB GPU, you'll need to use [DeepSpeed](https://www.deepspeed.ai/) to offload some of the tensors from the vRAM to either the CPU or NVMe to allow training with less GPU memory.

|

||||

|

||||

```bash

|

||||

export MODEL_DIR="runwayml/stable-diffusion-v1-5"

|

||||

export OUTPUT_DIR="path to save model"

|

||||

|

||||

accelerate launch --mixed_precision="fp16" --multi_gpu train_controlnet.py \

|

||||

--pretrained_model_name_or_path=$MODEL_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--dataset_name=fusing/fill50k \

|

||||

--resolution=512 \

|

||||

--learning_rate=1e-5 \

|

||||

--validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \

|

||||

--validation_prompt "red circle with blue background" "cyan circle with brown floral background" \

|

||||

--train_batch_size=4 \

|

||||

--mixed_precision="fp16" \

|

||||

--tracker_project_name="controlnet-demo" \

|

||||

--report_to=wandb \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

## Example results

|

||||

|

||||

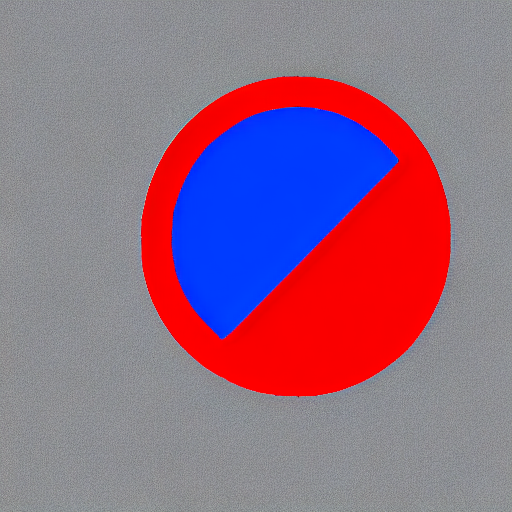

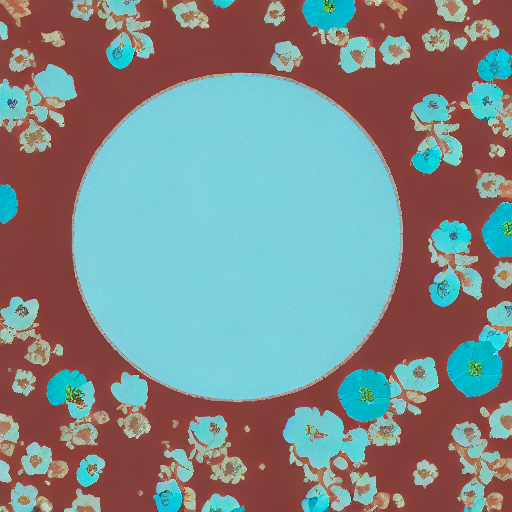

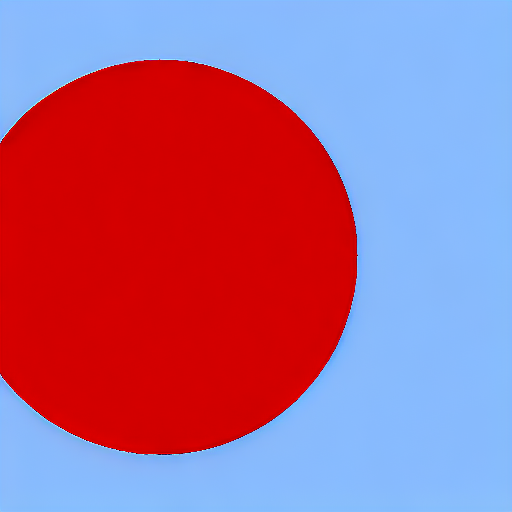

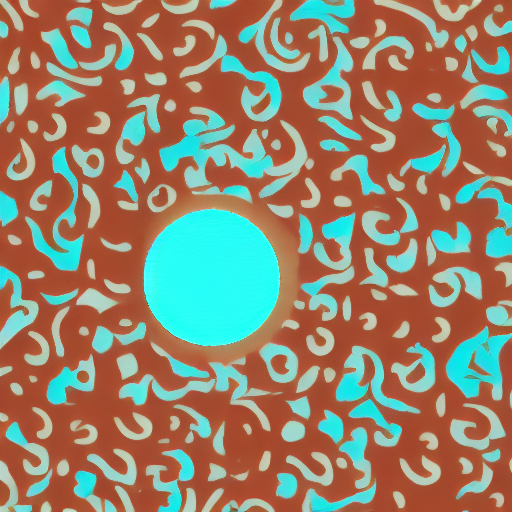

#### After 300 steps with batch size 8

|

||||

|

||||

| | |

|

||||

|-------------------|:-------------------------:|

|

||||

| | red circle with blue background |

|

||||

|  |

|

||||

| | cyan circle with brown floral background |

|

||||

|  |

|

||||

|

||||

|

||||

#### After 6000 steps with batch size 8:

|

||||

|

||||

| | |

|

||||

|-------------------|:-------------------------:|

|

||||

| | red circle with blue background |

|

||||

|  |

|

||||

| | cyan circle with brown floral background |

|

||||

|  |

|

||||

|

||||

## Training on a 16 GB GPU

|

||||

|

||||

Enable the following optimizations to train on a 16GB GPU:

|

||||

|

||||

- Gradient checkpointing

|

||||

- bitsandbytes' 8-bit optimizer (take a look at the [installation](https://github.com/TimDettmers/bitsandbytes#requirements--installation) instructions if you don't already have it installed)

|

||||

|

||||

Now you can launch the training script:

|

||||

Run the following command to configure your 🤗 Accelerate environment:

|

||||

|

||||

```bash

|

||||

export MODEL_DIR="runwayml/stable-diffusion-v1-5"

|

||||

export OUTPUT_DIR="path to save model"

|

||||

|

||||

accelerate launch train_controlnet.py \

|

||||

--pretrained_model_name_or_path=$MODEL_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--dataset_name=fusing/fill50k \

|

||||

--resolution=512 \

|

||||

--learning_rate=1e-5 \

|

||||

--validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \

|

||||

--validation_prompt "red circle with blue background" "cyan circle with brown floral background" \

|

||||

--train_batch_size=1 \

|

||||

--gradient_accumulation_steps=4 \

|

||||

--gradient_checkpointing \

|

||||

--use_8bit_adam \

|

||||

--push_to_hub

|

||||

accelerate config

|

||||

```

|

||||

|

||||

## Training on a 12 GB GPU

|

||||

|

||||

Enable the following optimizations to train on a 12GB GPU:

|

||||

- Gradient checkpointing

|

||||

- bitsandbytes' 8-bit optimizer (take a look at the [installation](https://github.com/TimDettmers/bitsandbytes#requirements--installation) instructions if you don't already have it installed)

|

||||

- xFormers (take a look at the [installation](https://huggingface.co/docs/diffusers/training/optimization/xformers) instructions if you don't already have it installed)

|

||||

- set gradients to `None`

|

||||

During configuration, confirm that you want to use DeepSpeed stage 2. Now it should be possible to train on under 8GB vRAM by combining DeepSpeed stage 2, fp16 mixed precision, and offloading the model parameters and the optimizer state to the CPU. The drawback is that this requires more system RAM (~25 GB). See the [DeepSpeed documentation](https://huggingface.co/docs/accelerate/usage_guides/deepspeed) for more configuration options. Your configuration file should look something like:

|

||||

|

||||

```bash

|

||||

export MODEL_DIR="runwayml/stable-diffusion-v1-5"

|

||||

export OUTPUT_DIR="path to save model"

|

||||

|

||||

accelerate launch train_controlnet.py \

|

||||

--pretrained_model_name_or_path=$MODEL_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--dataset_name=fusing/fill50k \

|

||||

--resolution=512 \

|

||||

--learning_rate=1e-5 \

|

||||

--validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \

|

||||

--validation_prompt "red circle with blue background" "cyan circle with brown floral background" \

|

||||

--train_batch_size=1 \

|

||||

--gradient_accumulation_steps=4 \

|

||||

--gradient_checkpointing \

|

||||

--use_8bit_adam \

|

||||

--enable_xformers_memory_efficient_attention \

|

||||

--set_grads_to_none \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

When using `enable_xformers_memory_efficient_attention`, make sure `xformers` is installed, for example with `pip install xformers`.

|

||||

|

||||

## Training on an 8 GB GPU

|

||||

|

||||

We have not exhaustively tested DeepSpeed support for ControlNet. While the configuration does

|

||||

save memory, we have not confirmed whether the configuration trains successfully. You will very likely

|

||||

have to make changes to the config to have a successful training run.

|

||||

|

||||

Enable the following optimizations to train on an 8GB GPU:

|

||||

- Gradient checkpointing

|

||||

- bitsandbytes' 8-bit optimizer (take a look at the [installation](https://github.com/TimDettmers/bitsandbytes#requirements--installation) instructions if you don't already have it installed)

|

||||

- xFormers (take a look at the [installation](https://huggingface.co/docs/diffusers/training/optimization/xformers) instructions if you don't already have it installed)

|

||||

- set gradients to `None`

|

||||

- DeepSpeed stage 2 with parameter and optimizer offloading

|

||||

- fp16 mixed precision

|

||||

|

||||

[DeepSpeed](https://www.deepspeed.ai/) can offload tensors from VRAM to either

|

||||

CPU or NVMe. This requires significantly more system RAM (about 25 GB).

|

||||

|

||||

You'll have to configure your environment with `accelerate config` to enable DeepSpeed stage 2.

|

||||

|

||||

The configuration file should look like this:

|

||||

|

||||

```yaml

|

||||

compute_environment: LOCAL_MACHINE

|

||||

deepspeed_config:

|

||||

gradient_accumulation_steps: 4

|

||||

@@ -261,73 +263,104 @@ deepspeed_config:

|

||||

distributed_type: DEEPSPEED

|

||||

```

|

||||

|

||||

<Tip>

|

||||

You should also change the default Adam optimizer to DeepSpeed’s optimized version of Adam [`deepspeed.ops.adam.DeepSpeedCPUAdam`](https://deepspeed.readthedocs.io/en/latest/optimizers.html#adam-cpu) for a substantial speedup. Enabling `DeepSpeedCPUAdam` requires your system’s CUDA toolchain version to be the same as the one installed with PyTorch.

|

||||

|

||||

See [documentation](https://huggingface.co/docs/accelerate/usage_guides/deepspeed) for more DeepSpeed configuration options.

|

||||

bitsandbytes 8-bit optimizers don’t seem to be compatible with DeepSpeed at the moment.

|

||||

|

||||

</Tip>

|

||||

That's it! You don't need to add any additional parameters to your training command.

|

||||

|

||||

Changing the default Adam optimizer to DeepSpeed's Adam

|

||||

`deepspeed.ops.adam.DeepSpeedCPUAdam` gives a substantial speedup but

|

||||

it requires a CUDA toolchain with the same version as PyTorch. 8-bit optimizer

|

||||

does not seem to be compatible with DeepSpeed at the moment.

|

||||

</hfoption>

|

||||

</hfoptions>
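The memory-saving flags named for the 16GB and 12GB options can be summarized in a small sketch that assembles the launch command. `MEMORY_FLAGS` and `build_launch_command` are hypothetical helpers for illustration only; the flags themselves are the ones listed in this guide. The 8GB option is left out because it is configured through `accelerate config` rather than through extra flags.

```python
import shlex

# Extra flags per GPU memory tier, as named in the options above.
MEMORY_FLAGS = {
    "16GB": ["--gradient_checkpointing", "--use_8bit_adam"],
    "12GB": [
        "--gradient_checkpointing",
        "--use_8bit_adam",
        "--enable_xformers_memory_efficient_attention",
        "--set_grads_to_none",
    ],
}


def build_launch_command(tier: str, model_dir: str, output_dir: str) -> list:
    """Return the `accelerate launch` argument list for a memory tier."""
    base = [
        "accelerate", "launch", "train_controlnet.py",
        f"--pretrained_model_name_or_path={model_dir}",
        f"--output_dir={output_dir}",
        "--dataset_name=fusing/fill50k",
        "--resolution=512",
        "--learning_rate=1e-5",
    ]
    return base + MEMORY_FLAGS[tier]


cmd = build_launch_command("12GB", "runwayml/stable-diffusion-v1-5", "path/to/save/model")
print(shlex.join(cmd))
```

Building the command as a list keeps each flag separate, so it can be passed directly to `subprocess.run` or printed with `shlex.join` for copying into a shell.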

|

||||

|

||||

<hfoptions id="training-inference">

|

||||

<hfoption id="PyTorch">

|

||||

|

||||

```bash

|

||||

export MODEL_DIR="runwayml/stable-diffusion-v1-5"

|

||||

export OUTPUT_DIR="path to save model"

|

||||

export OUTPUT_DIR="path/to/save/model"

|

||||

|

||||

accelerate launch train_controlnet.py \

|

||||

--pretrained_model_name_or_path=$MODEL_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--dataset_name=fusing/fill50k \

|

||||

--resolution=512 \

|

||||

--learning_rate=1e-5 \

|

||||

--validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \

|

||||

--validation_prompt "red circle with blue background" "cyan circle with brown floral background" \

|

||||

--train_batch_size=1 \

|

||||

--gradient_accumulation_steps=4 \

|

||||

--gradient_checkpointing \

|

||||

--enable_xformers_memory_efficient_attention \

|

||||

--set_grads_to_none \

|

||||

--mixed_precision fp16 \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

## Inference

|

||||

</hfoption>

|

||||

<hfoption id="Flax">

|

||||

|

||||

The trained model can be run with the [`StableDiffusionControlNetPipeline`].

|

||||

Set `base_model_path` and `controlnet_path` to the values `--pretrained_model_name_or_path` and

|

||||

`--output_dir` were respectively set to in the training script.

|

||||

With Flax, you can [profile your code](https://jax.readthedocs.io/en/latest/profiling.html) by adding the `--profile_steps=5` parameter to your training command. Install the TensorBoard profile plugin:

|

||||

|

||||

```bash

|

||||

pip install tensorflow tensorboard-plugin-profile

|

||||

tensorboard --logdir runs/fill-circle-100steps-20230411_165612/

|

||||

```

|

||||

|

||||

Then you can inspect the profile at [http://localhost:6006/#profile](http://localhost:6006/#profile).

|

||||

|

||||

<Tip warning={true}>

|

||||

|

||||

If you run into version conflicts with the plugin, try uninstalling and reinstalling all versions of TensorFlow and TensorBoard. The debugging functionality of the profile plugin is still experimental, and not all views are fully functional. The `trace_viewer` cuts off events after 1M, which can result in all your device traces getting lost if, for example, you profile the compilation step by accident.

|

||||

|

||||

</Tip>

|

||||

|

||||

```bash
python3 train_controlnet_flax.py \
  --pretrained_model_name_or_path=$MODEL_DIR \
  --output_dir=$OUTPUT_DIR \
  --dataset_name=fusing/fill50k \
  --resolution=512 \
  --learning_rate=1e-5 \
  --validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \
  --validation_prompt "red circle with blue background" "cyan circle with brown floral background" \
  --validation_steps=1000 \
  --train_batch_size=2 \
  --revision="non-ema" \
  --from_pt \
  --report_to="wandb" \
  --tracker_project_name=$HUB_MODEL_ID \
  --num_train_epochs=11 \
  --push_to_hub \
  --hub_model_id=$HUB_MODEL_ID
```

|

||||

|

||||

</hfoption>

|

||||

</hfoptions>

|

||||

|

||||

Once training is complete, you can use your newly trained model for inference!

|

||||

|

||||

```py

|

||||

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler

|

||||

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

|

||||

from diffusers.utils import load_image

|

||||

import torch

|

||||

|

||||

base_model_path = "path to model"

|

||||

controlnet_path = "path to controlnet"

|

||||

|

||||

controlnet = ControlNetModel.from_pretrained(controlnet_path, torch_dtype=torch.float16, use_safetensors=True)

|

||||

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

||||

base_model_path, controlnet=controlnet, torch_dtype=torch.float16, use_safetensors=True

|

||||

)

|

||||

|

||||

# speed up diffusion process with faster scheduler and memory optimization

|

||||

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

|

||||

# remove following line if xformers is not installed

|

||||

pipe.enable_xformers_memory_efficient_attention()

|

||||

|

||||

pipe.enable_model_cpu_offload()

|

||||

controlnet = ControlNetModel.from_pretrained("path/to/controlnet", torch_dtype=torch.float16)

|

||||

pipeline = StableDiffusionControlNetPipeline.from_pretrained(

|

||||

"path/to/base/model", controlnet=controlnet, torch_dtype=torch.float16

|

||||

).to("cuda")

|

||||

|

||||

control_image = load_image("./conditioning_image_1.png")

|

||||

prompt = "pale golden rod circle with old lace background"

|

||||

|

||||

# generate image

|

||||

generator = torch.manual_seed(0)

|

||||

image = pipe(prompt, num_inference_steps=20, generator=generator, image=control_image).images[0]

|

||||

|

||||

image.save("./output.png")

|

||||

```

|

||||

|

||||

## Stable Diffusion XL

|

||||

|

||||

Training with [Stable Diffusion XL](https://huggingface.co/papers/2307.01952) is also supported via the `train_controlnet_sdxl.py` script. Please refer to the docs [here](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/README_sdxl.md).

|

||||

Stable Diffusion XL (SDXL) is a powerful text-to-image model that generates high-resolution images, and it adds a second text-encoder to its architecture. Use the [`train_controlnet_sdxl.py`](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/train_controlnet_sdxl.py) script to train a ControlNet adapter for the SDXL model.

|

||||

|

||||

The SDXL training script is discussed in more detail in the [SDXL training](sdxl) guide.

|

||||

|

||||

## Next steps

|

||||

|

||||

Congratulations on training your own ControlNet! To learn more about how to use your new model, the following guides may be helpful:

|

||||

|

||||

- Learn how to [use a ControlNet](../using-diffusers/controlnet) for inference on a variety of tasks.

|

||||

@@ -10,76 +10,233 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# Custom Diffusion training example

|

||||

# Custom Diffusion

|

||||

|

||||

[Custom Diffusion](https://arxiv.org/abs/2212.04488) is a method to customize text-to-image models like Stable Diffusion given just a few (4~5) images of a subject.

|

||||

The `train_custom_diffusion.py` script shows how to implement the training procedure and adapt it for stable diffusion.

|

||||

[Custom Diffusion](https://huggingface.co/papers/2212.04488) is a training technique for personalizing image generation models. Like Textual Inversion, DreamBooth, and LoRA, Custom Diffusion only requires a few (~4-5) example images. This technique works by only training weights in the cross-attention layers, and it uses a special word to represent the newly learned concept. Custom Diffusion is unique because it can also learn multiple concepts at the same time.

|

||||

|

||||

This training example was contributed by [Nupur Kumari](https://nupurkmr9.github.io/) (one of the authors of Custom Diffusion).

|

||||

If you're training on a GPU with limited vRAM, you should try enabling xFormers with `--enable_xformers_memory_efficient_attention` for faster training with lower vRAM requirements (16GB). To save even more memory, add `--set_grads_to_none` in the training argument to set the gradients to `None` instead of zero (this option can cause some issues, so if you experience any, try removing this parameter).

|

||||

|

||||

## Running locally with PyTorch

|

||||

This guide will explore the [train_custom_diffusion.py](https://github.com/huggingface/diffusers/blob/main/examples/custom_diffusion/train_custom_diffusion.py) script to help you become more familiar with it, and how you can adapt it for your own use-case.

|

||||

|

||||

### Installing the dependencies

|

||||

|

||||

Before running the scripts, make sure to install the library's training dependencies:

|

||||

|

||||

**Important**

|

||||

|

||||

To make sure you can successfully run the latest versions of the example scripts, we highly recommend **installing from source** and keeping the install up to date as we update the example scripts frequently and install some example-specific requirements. To do this, execute the following steps in a new virtual environment:

|

||||

Before running the script, make sure you install the library from source:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/huggingface/diffusers

|

||||

cd diffusers

|

||||

pip install -e .

|

||||

pip install .

|

||||

```

|

||||

|

||||

Then cd into the [example folder](https://github.com/huggingface/diffusers/tree/main/examples/custom_diffusion)

|

||||

|

||||

```

|

||||

cd examples/custom_diffusion

|

||||

```

|

||||

|

||||

Now run

|

||||

Navigate to the example folder with the training script and install the required dependencies:

|

||||

|

||||

```bash

|

||||

cd examples/custom_diffusion

|

||||

pip install -r requirements.txt

|

||||

pip install clip-retrieval

|

||||

pip install clip-retrieval

|

||||

```

|

||||

|

||||

And initialize an [🤗Accelerate](https://github.com/huggingface/accelerate/) environment with:

|

||||

<Tip>

|

||||

|

||||

🤗 Accelerate is a library for helping you train on multiple GPUs/TPUs or with mixed-precision. It'll automatically configure your training setup based on your hardware and environment. Take a look at the 🤗 Accelerate [Quick tour](https://huggingface.co/docs/accelerate/quicktour) to learn more.

|

||||

|

||||

</Tip>

|

||||

|

||||

Initialize an 🤗 Accelerate environment:

|

||||

|

||||

```bash

|

||||

accelerate config

|

||||

```

|

||||

|

||||

Or for a default accelerate configuration without answering questions about your environment

|

||||

To set up a default 🤗 Accelerate environment without choosing any configurations:

|

||||

|

||||

```bash

|

||||

accelerate config default

|

||||

```

|

||||

|

||||

Or if your environment doesn't support an interactive shell e.g. a notebook

|

||||

Or if your environment doesn't support an interactive shell, like a notebook, you can use:

|

||||

|

||||

```python

|

||||

```py

|

||||

from accelerate.utils import write_basic_config

|

||||

|

||||

write_basic_config()

|

||||

```

|

||||

### Cat example 😺

|

||||

|

||||

Now let's get our dataset. Download the dataset from [here](https://www.cs.cmu.edu/~custom-diffusion/assets/data.zip) and unzip it. To use your own dataset, take a look at the [Create a dataset for training](create_dataset) guide.

|

||||

Lastly, if you want to train a model on your own dataset, take a look at the [Create a dataset for training](create_dataset) guide to learn how to create a dataset that works with the training script.

|

||||

|

||||

We also collect 200 real images using `clip-retrieval`, which are combined with the target images in the training dataset as regularization. This prevents overfitting to the given target images. The following flags enable the regularization: `with_prior_preservation` and `real_prior`, with `prior_loss_weight=1.`.

|

||||

The `class_prompt` should be the same category name as the target images. The collected real images have text captions similar to the `class_prompt`. The retrieved images are saved in `class_data_dir`. You can disable `real_prior` to use generated images as regularization. To collect the real images, run this command before training.

|

||||

<Tip>

|

||||

|

||||

The following sections highlight parts of the training script that are important for understanding how to modify it, but it doesn't cover every aspect of the script in detail. If you're interested in learning more, feel free to read through the [script](https://github.com/huggingface/diffusers/blob/main/examples/custom_diffusion/train_custom_diffusion.py) and let us know if you have any questions or concerns.

|

||||

|

||||

</Tip>

|

||||

|

||||

## Script parameters

|

||||

|

||||

The training script contains all the parameters to help you customize your training run. These are found in the [`parse_args()`](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/custom_diffusion/train_custom_diffusion.py#L319) function. The function comes with default values, but you can also set your own values in the training command if you'd like.

|

||||

|

||||

For example, to change the resolution of the input image:

|

||||

|

||||

```bash

|

||||

accelerate launch train_custom_diffusion.py \

|

||||

--resolution=256

|

||||

```

|

||||

|

||||

Many of the basic parameters are described in the [DreamBooth](dreambooth#script-parameters) training guide, so this guide focuses on the parameters unique to Custom Diffusion:

|

||||

|

||||

- `--freeze_model`: freezes the key and value parameters in the cross-attention layer; the default is `crossattn_kv`, but you can set it to `crossattn` to train all the parameters in the cross-attention layer

|

||||

- `--concepts_list`: to learn multiple concepts, provide a path to a JSON file containing the concepts

|

||||

- `--modifier_token`: a special word used to represent the learned concept

|

||||

- `--initializer_token`: the token whose embeddings are used to initialize the `modifier_token` embeddings
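
To make the relationship between defaults and command-line overrides concrete, here is a minimal sketch of how such a parser behaves. It is a toy stand-in, not the script's actual `parse_args()`, which defines many more arguments. The `crossattn_kv` default comes from this guide; the `--resolution` default of 512 is an assumption for illustration.

```python
import argparse


def parse_args(input_args=None):
    # Toy parser covering a few of the parameters discussed above.
    parser = argparse.ArgumentParser(description="Illustrative subset of training arguments.")
    parser.add_argument("--resolution", type=int, default=512)  # assumed default
    parser.add_argument(
        "--freeze_model",
        type=str,
        default="crossattn_kv",
        choices=["crossattn_kv", "crossattn"],
    )
    parser.add_argument("--modifier_token", type=str, default=None)
    return parser.parse_args(input_args)


# No flags: every parameter falls back to its default.
defaults = parse_args([])

# Flags passed on the command line override the defaults.
custom = parse_args(["--resolution=256", "--freeze_model", "crossattn"])

print(defaults.resolution, defaults.freeze_model)
print(custom.resolution, custom.freeze_model)
```

Anything you don't pass explicitly keeps its default, so a training command only needs to list the values you want to change.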

|

||||

|

||||

### Prior preservation loss

|

||||

|

||||

Prior preservation loss is a method that uses a model's own generated samples to help it learn how to generate more diverse images. Because these generated sample images belong to the same class as the images you provided, they help the model retain what it has learned about the class and how it can use what it already knows about the class to make new compositions.

|

||||

|

||||

Many of the parameters for prior preservation loss are described in the [DreamBooth](dreambooth#prior-preservation-loss) training guide.
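
As a rough illustration of how `--prior_loss_weight` enters the objective, the sketch below combines a loss on the target images with a weighted loss on the class (regularization) images. It uses plain Python lists and a hand-written mean squared error purely for readability; the helper names are hypothetical, and the real script computes these terms on tensors.

```python
def mse(pred, target):
    # Mean squared error over two equally sized sequences of floats.
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def loss_with_prior(instance_pred, instance_target, prior_pred, prior_target, prior_loss_weight=1.0):
    # Loss on the images of the new concept.
    instance_loss = mse(instance_pred, instance_target)
    # Loss on the class images, which keeps the model close to what it already knows.
    prior_loss = mse(prior_pred, prior_target)
    return instance_loss + prior_loss_weight * prior_loss


total = loss_with_prior(
    instance_pred=[1.0, 2.0],
    instance_target=[0.0, 2.0],
    prior_pred=[1.0, 1.0],
    prior_target=[1.0, 3.0],
    prior_loss_weight=0.5,
)
print(total)
```

A larger weight pulls the model harder toward the class images, while a weight of zero reduces the objective to the loss on the target images alone.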

|

||||

|

||||

### Regularization

|

||||

|

||||

Custom Diffusion trains on the target images together with a small set of real images to prevent overfitting, which happens easily when you're only training on a few images. Download 200 real images with `clip-retrieval`. The `class_prompt` should be the same category as the target images. These images are stored in `class_data_dir`.

|

||||

|

||||

```bash

|

||||

pip install clip-retrieval

|

||||

python retrieve.py --class_prompt cat --class_data_dir real_reg/samples_cat --num_class_images 200

|

||||

```

|

||||

|

||||

**___Note: Change the `resolution` to 768 if you are using the [stable-diffusion-2](https://huggingface.co/stabilityai/stable-diffusion-2) 768x768 model.___**

|

||||

To enable regularization, add the following parameters:

|

||||

|

||||

The script creates and saves model checkpoints and a `pytorch_custom_diffusion_weights.bin` file in your repository.

|

||||

- `--with_prior_preservation`: whether to use prior preservation loss

|

||||

- `--prior_loss_weight`: controls the influence of the prior preservation loss on the model

|

||||

- `--real_prior`: whether to use a small set of real images to prevent overfitting

|

||||

|

||||

```bash

|

||||

accelerate launch train_custom_diffusion.py \

|

||||

--with_prior_preservation \

|

||||

--prior_loss_weight=1.0 \

|

||||

--class_data_dir="./real_reg/samples_cat" \

|

||||

--class_prompt="cat" \

|

||||

--real_prior

|

||||

```

|

||||

|

||||

## Training script

|

||||

|

||||

<Tip>

|

||||

|

||||

A lot of the code in the Custom Diffusion training script is similar to the [DreamBooth](dreambooth#training-script) script. This guide instead focuses on the code that is relevant to Custom Diffusion.

|

||||

|

||||

</Tip>

|

||||

|

||||

The Custom Diffusion training script has two dataset classes:

|

||||

|

||||

- [`CustomDiffusionDataset`](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/custom_diffusion/train_custom_diffusion.py#L165): preprocesses the images, class images, and prompts for training

|

||||

- [`PromptDataset`](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/custom_diffusion/train_custom_diffusion.py#L148): prepares the prompts for generating class images

|

||||

|

||||

Next, the `modifier_token` is [added to the tokenizer](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/custom_diffusion/train_custom_diffusion.py#L811), converted to token ids, and the token embeddings are resized to account for the new `modifier_token`. Then the `modifier_token` embeddings are initialized with the embeddings of the `initializer_token`. All parameters in the text encoder are frozen, except for the token embeddings since this is what the model is trying to learn to associate with the concepts.

|

||||

|

||||

```py

|

||||

params_to_freeze = itertools.chain(

|

||||

text_encoder.text_model.encoder.parameters(),

|

||||

text_encoder.text_model.final_layer_norm.parameters(),

|

||||

text_encoder.text_model.embeddings.position_embedding.parameters(),

|

||||

)

|

||||

freeze_params(params_to_freeze)

|

||||

```

|

||||

|

||||

Now you'll need to add the [Custom Diffusion weights](https://github.com/huggingface/diffusers/blob/64603389da01082055a901f2883c4810d1144edb/examples/custom_diffusion/train_custom_diffusion.py#L911C3-L911C3) to the attention layers. This is a really important step for getting the shape and size of the attention weights correct, and for setting the appropriate number of attention processors in each UNet block.

|

||||

|

||||

```py

|

||||

st = unet.state_dict()

|

||||

for name, _ in unet.attn_processors.items():

|

||||

cross_attention_dim = None if name.endswith("attn1.processor") else unet.config.cross_attention_dim

|

||||

if name.startswith("mid_block"):

|

||||

hidden_size = unet.config.block_out_channels[-1]

|

||||

elif name.startswith("up_blocks"):

|

||||

block_id = int(name[len("up_blocks.")])

|

||||

hidden_size = list(reversed(unet.config.block_out_channels))[block_id]

|

||||

elif name.startswith("down_blocks"):

|

||||

block_id = int(name[len("down_blocks.")])

|

||||

hidden_size = unet.config.block_out_channels[block_id]

|

||||

layer_name = name.split(".processor")[0]

|

||||

weights = {

|

||||

"to_k_custom_diffusion.weight": st[layer_name + ".to_k.weight"],

|

||||

"to_v_custom_diffusion.weight": st[layer_name + ".to_v.weight"],

|

||||

}

|

||||

if train_q_out:

|

||||

weights["to_q_custom_diffusion.weight"] = st[layer_name + ".to_q.weight"]

|

||||

weights["to_out_custom_diffusion.0.weight"] = st[layer_name + ".to_out.0.weight"]

|

||||

weights["to_out_custom_diffusion.0.bias"] = st[layer_name + ".to_out.0.bias"]

|

||||

if cross_attention_dim is not None:

|

||||

custom_diffusion_attn_procs[name] = attention_class(

|

||||

train_kv=train_kv,

|

||||

train_q_out=train_q_out,

|

||||

hidden_size=hidden_size,

|

||||

cross_attention_dim=cross_attention_dim,

|

||||

).to(unet.device)

|

||||

custom_diffusion_attn_procs[name].load_state_dict(weights)

|

||||

else:

|

||||

custom_diffusion_attn_procs[name] = attention_class(

|

||||

train_kv=False,

|

||||

train_q_out=False,

|

||||

hidden_size=hidden_size,

|

||||

cross_attention_dim=cross_attention_dim,

|

||||

)

|

||||

del st

|

||||

unet.set_attn_processor(custom_diffusion_attn_procs)

|

||||

custom_diffusion_layers = AttnProcsLayers(unet.attn_processors)

|

||||

```
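
The name-to-size logic in the excerpt above can be isolated into a small function to see what it produces. This is a sketch under assumptions: `hidden_size_for` is a hypothetical helper, the channel tuple is an example configuration, and the processor names are illustrative rather than read from a real UNet.

```python
def hidden_size_for(name, block_out_channels):
    """Return the channel width of the UNet block that owns the processor `name`."""
    if name.startswith("mid_block"):
        # The middle block runs at the deepest (last) channel width.
        return block_out_channels[-1]
    if name.startswith("up_blocks"):
        # Up blocks walk the channel list in reverse order.
        block_id = int(name[len("up_blocks.")])
        return list(reversed(block_out_channels))[block_id]
    if name.startswith("down_blocks"):
        block_id = int(name[len("down_blocks.")])
        return block_out_channels[block_id]
    raise ValueError(f"unexpected processor name: {name}")


def is_self_attention(name):
    # `attn1` processors are self-attention, so they get no cross_attention_dim.
    return name.endswith("attn1.processor")


channels = (320, 640, 1280, 1280)  # example values, assumed for illustration
names = [
    "down_blocks.1.attentions.0.transformer_blocks.0.attn2.processor",
    "mid_block.attentions.0.transformer_blocks.0.attn1.processor",
    "up_blocks.3.attentions.0.transformer_blocks.0.attn2.processor",
]
for name in names:
    print(name, hidden_size_for(name, channels), is_self_attention(name))
```

Note that the block index is parsed from a single character after the prefix, so this approach assumes fewer than ten blocks per direction.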

|

||||

|

||||

The [optimizer](https://github.com/huggingface/diffusers/blob/84cd9e8d01adb47f046b1ee449fc76a0c32dc4e2/examples/custom_diffusion/train_custom_diffusion.py#L982) is initialized to update the cross-attention layer parameters:

|

||||

|

||||

```py

|

||||

optimizer = optimizer_class(

|

||||

itertools.chain(text_encoder.get_input_embeddings().parameters(), custom_diffusion_layers.parameters())

|

||||

if args.modifier_token is not None

|

||||

else custom_diffusion_layers.parameters(),

|

||||

lr=args.learning_rate,

|

||||

betas=(args.adam_beta1, args.adam_beta2),

|

||||

weight_decay=args.adam_weight_decay,

|

||||

eps=args.adam_epsilon,

|

||||

)

|

||||

```

|

||||

|

||||

In the [training loop](https://github.com/huggingface/diffusers/blob/84cd9e8d01adb47f046b1ee449fc76a0c32dc4e2/examples/custom_diffusion/train_custom_diffusion.py#L1048), it is important to only update the embeddings for the concept you're trying to learn. This means setting the gradients of all the other token embeddings to zero:

|

||||

|

||||

```py

|

||||

if args.modifier_token is not None:

|

||||

if accelerator.num_processes > 1:

|

||||

grads_text_encoder = text_encoder.module.get_input_embeddings().weight.grad

|

||||

else:

|

||||

grads_text_encoder = text_encoder.get_input_embeddings().weight.grad

|

||||

index_grads_to_zero = torch.arange(len(tokenizer)) != modifier_token_id[0]

|

||||

for i in range(len(modifier_token_id[1:])):

|

||||

index_grads_to_zero = index_grads_to_zero & (

|

||||

torch.arange(len(tokenizer)) != modifier_token_id[i]

|

||||

)

|

||||

grads_text_encoder.data[index_grads_to_zero, :] = grads_text_encoder.data[

|

||||

index_grads_to_zero, :

|

||||

].fill_(0)

|

||||

```
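
The same idea can be tried in isolation on a toy gradient tensor. The sketch below is not the script's code: it builds the mask from every modifier token id in one call with `torch.isin`, and the vocabulary size, embedding width, and token ids are made-up values for illustration.

```python
import torch

vocab_size = 10  # stand-in for len(tokenizer)
embedding_dim = 4
modifier_token_id = [7, 9]  # hypothetical ids of the newly added tokens

# Pretend this is the gradient of the token embedding matrix after backward().
grads = torch.ones(vocab_size, embedding_dim)

# True for every embedding row that must stay unchanged.
keep_frozen = ~torch.isin(torch.arange(vocab_size), torch.tensor(modifier_token_id))

# Zero the gradients of all rows except the modifier tokens.
grads[keep_frozen, :] = 0

# Rows that still carry a gradient and will be updated by the optimizer step.
trainable_rows = grads.abs().sum(dim=1).nonzero().flatten().tolist()
print(trainable_rows)
```

Only the rows belonging to the modifier tokens keep a non-zero gradient, so the optimizer leaves the rest of the vocabulary untouched (assuming no weight decay is applied to those rows).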

|

||||

|

||||

## Launch the script

|

||||

|

||||

Once you’ve made all your changes or you’re okay with the default configuration, you’re ready to launch the training script! 🚀

|

||||

|

||||

In this guide, you'll download and use these example [cat images](https://www.cs.cmu.edu/~custom-diffusion/assets/data.zip). You can also create and use your own dataset if you want (see the [Create a dataset for training](create_dataset) guide).

|

||||

|

||||

Set the environment variable `MODEL_NAME` to a model id on the Hub or a path to a local model, `INSTANCE_DIR` to the path where you just downloaded the cat images to, and `OUTPUT_DIR` to where you want to save the model. You'll use `<new1>` as the special word to tie the newly learned embeddings to. The script creates and saves model checkpoints and a `pytorch_custom_diffusion_weights.bin` file to your repository.

|

||||

|

||||

To monitor training progress with Weights and Biases, add the `--report_to=wandb` parameter to the training command and specify a validation prompt with `--validation_prompt`. This is useful for debugging and saving intermediate results.

|

||||

|

||||

<Tip>

|

||||

|

||||

If you're training on human faces, the Custom Diffusion team has found the following parameters to work well:

|

||||

|

||||

- `--learning_rate=5e-6`

|

||||

- `--max_train_steps` can be anywhere between 1000 and 2000

|

||||

- `--freeze_model=crossattn`

|

||||

- use at least 15-20 images to train with

|

||||

|

||||

</Tip>

|

||||

|

||||

<hfoptions id="training-inference">

|

||||

<hfoption id="single concept">

|

||||

|

||||

```bash

|

||||

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

||||

@@ -91,68 +248,38 @@ accelerate launch train_custom_diffusion.py \

|

||||

--instance_data_dir=$INSTANCE_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--class_data_dir=./real_reg/samples_cat/ \

|

||||

--with_prior_preservation --real_prior --prior_loss_weight=1.0 \

|

||||

--class_prompt="cat" --num_class_images=200 \

|

||||

--with_prior_preservation \

|

||||

--real_prior \

|

||||

--prior_loss_weight=1.0 \

|

||||

--class_prompt="cat" \

|

||||

--num_class_images=200 \

|

||||

--instance_prompt="photo of a <new1> cat" \

|

||||

--resolution=512 \

|

||||

--train_batch_size=2 \

|

||||

--learning_rate=1e-5 \

|

||||

--lr_warmup_steps=0 \

|

||||

--max_train_steps=250 \

|

||||

--scale_lr --hflip \

|

||||

--modifier_token "<new1>" \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

**Use `--enable_xformers_memory_efficient_attention` for faster training with lower VRAM requirement (16GB per GPU). Follow [this guide](https://github.com/facebookresearch/xformers) for installation instructions.**

|

||||

|

||||

To track your experiments using Weights and Biases (`wandb`) and to save intermediate results (which we HIGHLY recommend), follow these steps:

|

||||

|

||||

* Install `wandb`: `pip install wandb`.

|

||||

* Authorize: `wandb login`.

|

||||

* Then specify a `validation_prompt` and set `report_to` to `wandb` while launching training. You can also configure the following related arguments:

|

||||

* `num_validation_images`

|

||||

* `validation_steps`

|

||||

|

||||

Here is an example command:

|

||||

|

||||

```bash

|

||||

accelerate launch train_custom_diffusion.py \

|

||||

--pretrained_model_name_or_path=$MODEL_NAME \

|

||||

--instance_data_dir=$INSTANCE_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--class_data_dir=./real_reg/samples_cat/ \

|

||||

--with_prior_preservation --real_prior --prior_loss_weight=1.0 \

|

||||

--class_prompt="cat" --num_class_images=200 \

|

||||

--instance_prompt="photo of a <new1> cat" \

|

||||

--resolution=512 \

|

||||

--train_batch_size=2 \

|

||||

--learning_rate=1e-5 \

|

||||

--lr_warmup_steps=0 \

|

||||

--max_train_steps=250 \

|

||||

--scale_lr --hflip \

|

||||

--scale_lr \

|

||||

--hflip \

|

||||

--modifier_token "<new1>" \

|

||||

--validation_prompt="<new1> cat sitting in a bucket" \

|

||||

--report_to="wandb" \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

Here is an example [Weights and Biases page](https://wandb.ai/sayakpaul/custom-diffusion/runs/26ghrcau) where you can check out the intermediate results along with other training details.

|

||||

</hfoption>

|

||||

<hfoption id="multiple concepts">

|

||||

|

||||

If you specify `--push_to_hub`, the learned parameters will be pushed to a repository on the Hugging Face Hub. Here is an [example repository](https://huggingface.co/sayakpaul/custom-diffusion-cat).

|

||||

Custom Diffusion can also learn multiple concepts if you provide a [JSON](https://github.com/adobe-research/custom-diffusion/blob/main/assets/concept_list.json) file with some details about each concept it should learn.
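
For orientation, a concepts file could be generated along these lines. The key names (`instance_prompt`, `class_prompt`, `instance_data_dir`, `class_data_dir`) and all paths are assumptions made for this sketch, so check them against the linked JSON file and the training script before relying on them.

```python
import json
import tempfile
from pathlib import Path

# One entry per concept; keys and paths here are assumed, not taken from the script.
concepts = [
    {
        "instance_prompt": "photo of a <new1> cat",
        "class_prompt": "cat",
        "instance_data_dir": "./data/cat",
        "class_data_dir": "./real_reg/samples_cat/",
    },
    {
        "instance_prompt": "photo of a <new2> wooden pot",
        "class_prompt": "wooden pot",
        "instance_data_dir": "./data/wooden_pot",
        "class_data_dir": "./real_reg/samples_wooden_pot/",
    },
]

# Written to a temporary directory so the sketch doesn't touch your project files.
concepts_path = Path(tempfile.mkdtemp()) / "concept_list.json"
concepts_path.write_text(json.dumps(concepts, indent=2))

# Read it back to confirm the file is valid JSON with one entry per concept.
loaded = json.loads(concepts_path.read_text())
print(len(loaded), sorted(loaded[0]))
```

Each entry pairs one modifier token with its own class prompt and regularization folder, which is why the real images need to be collected once per concept.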

|

||||

|

||||

### Training on multiple concepts 🐱🪵

|

||||

|

||||

Provide a [json](https://github.com/adobe-research/custom-diffusion/blob/main/assets/concept_list.json) file with the info about each concept, similar to [this](https://github.com/ShivamShrirao/diffusers/blob/main/examples/dreambooth/train_dreambooth.py).

|

||||

|

||||

To collect the real images run this command for each concept in the json file.

|

||||

Run clip-retrieval to collect some real images to use for regularization:

|

||||

|

||||

```bash

|

||||

pip install clip-retrieval

|

||||

python retrieve.py --class_prompt {} --class_data_dir {} --num_class_images 200

|

||||

```

|

||||

|

||||

And then we're ready to start training!

|

||||

Then you can launch the script:

|

||||

|

||||

```bash

|

||||

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

||||

@@ -162,73 +289,40 @@ accelerate launch train_custom_diffusion.py \

|

||||

--pretrained_model_name_or_path=$MODEL_NAME \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--concepts_list=./concept_list.json \

|

||||

--with_prior_preservation --real_prior --prior_loss_weight=1.0 \

|

||||

--with_prior_preservation \

|

||||

--real_prior \

|

||||

--prior_loss_weight=1.0 \

|

||||

--resolution=512 \

|

||||

--train_batch_size=2 \

|

||||

--learning_rate=1e-5 \

|

||||

--lr_warmup_steps=0 \

|

||||

--max_train_steps=500 \

|

||||

--num_class_images=200 \

|

||||

--scale_lr --hflip \

|

||||

--scale_lr \

|

||||

--hflip \

|

||||

--modifier_token "<new1>+<new2>" \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

Here is an example [Weights and Biases page](https://wandb.ai/sayakpaul/custom-diffusion/runs/3990tzkg) where you can check out the intermediate results along with other training details.

|

||||

</hfoption>

|

||||

</hfoptions>

|

||||

|

||||

### Training on human faces

|

||||

Once training is finished, you can use your new Custom Diffusion model for inference.

|

||||

|

||||

For fine-tuning on human faces we found the following configuration to work better: `learning_rate=5e-6`, `max_train_steps=1000 to 2000`, and `freeze_model=crossattn` with at least 15-20 images.

|

||||

<hfoptions id="training-inference">

|

||||

<hfoption id="single concept">

|

||||

|

||||

To collect the real images use this command first before training.

|

||||

|

||||

```bash

|

||||

pip install clip-retrieval

|

||||

python retrieve.py --class_prompt person --class_data_dir real_reg/samples_person --num_class_images 200

|

||||

```

|

||||

|

||||

Then start training!

|

||||

|

||||

```bash

|

||||

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

||||

export OUTPUT_DIR="path-to-save-model"

|

||||

export INSTANCE_DIR="path-to-images"

|

||||

|

||||

accelerate launch train_custom_diffusion.py \

|

||||

--pretrained_model_name_or_path=$MODEL_NAME \

|

||||

--instance_data_dir=$INSTANCE_DIR \

|

||||

--output_dir=$OUTPUT_DIR \

|

||||

--class_data_dir=./real_reg/samples_person/ \

|

||||

--with_prior_preservation --real_prior --prior_loss_weight=1.0 \

|

||||

--class_prompt="person" --num_class_images=200 \

|

||||

--instance_prompt="photo of a <new1> person" \

|

||||

--resolution=512 \

|

||||

--train_batch_size=2 \

|

||||

--learning_rate=5e-6 \

|

||||

--lr_warmup_steps=0 \

|

||||

--max_train_steps=1000 \

|

||||

--scale_lr --hflip --noaug \

|

||||

--freeze_model crossattn \

|

||||

--modifier_token "<new1>" \

|

||||

--enable_xformers_memory_efficient_attention \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

## Inference

|

||||

|

||||

Once you have trained a model using the above command, you can run inference using the below command. Make sure to include the `modifier token` (e.g. \<new1\> in above example) in your prompt.

|

||||

|

||||

```python

|

||||

```py

|

||||

import torch

|

||||

from diffusers import DiffusionPipeline

|

||||

|

||||

pipe = DiffusionPipeline.from_pretrained(

|

||||

"CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16, use_safetensors=True

|

||||

pipeline = DiffusionPipeline.from_pretrained(

|

||||

"CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16,

|

||||

).to("cuda")

|

||||

pipe.unet.load_attn_procs("path-to-save-model", weight_name="pytorch_custom_diffusion_weights.bin")

|

||||

pipe.load_textual_inversion("path-to-save-model", weight_name="<new1>.bin")

|

||||

pipeline.unet.load_attn_procs("path-to-save-model", weight_name="pytorch_custom_diffusion_weights.bin")

|

||||

pipeline.load_textual_inversion("path-to-save-model", weight_name="<new1>.bin")

|

||||

|

||||

image = pipe(

|

||||

image = pipeline(

|

||||

"<new1> cat sitting in a bucket",

|

||||

num_inference_steps=100,

|

||||

guidance_scale=6.0,

|

||||

@@ -237,47 +331,20 @@ image = pipe(

|

||||

image.save("cat.png")

|

||||

```

|

||||

|

||||

It's possible to directly load these parameters from a Hub repository:

|

||||

</hfoption>

|

||||

<hfoption id="multiple concepts">

|

||||

|

||||

```python

|

||||

```py

|

||||

import torch

|

||||

from huggingface_hub.repocard import RepoCard

|

||||

from diffusers import DiffusionPipeline

|

||||

|

||||

model_id = "sayakpaul/custom-diffusion-cat"

|

||||

card = RepoCard.load(model_id)

|

||||

base_model_id = card.data.to_dict()["base_model"]

|

||||

pipeline = DiffusionPipeline.from_pretrained("sayakpaul/custom-diffusion-cat-wooden-pot", torch_dtype=torch.float16).to("cuda")

|

||||

pipeline.unet.load_attn_procs(model_id, weight_name="pytorch_custom_diffusion_weights.bin")

|

||||

pipeline.load_textual_inversion(model_id, weight_name="<new1>.bin")

|

||||

pipeline.load_textual_inversion(model_id, weight_name="<new2>.bin")

|

||||

|

||||

pipe = DiffusionPipeline.from_pretrained(base_model_id, torch_dtype=torch.float16, use_safetensors=True).to("cuda")

|

||||

pipe.unet.load_attn_procs(model_id, weight_name="pytorch_custom_diffusion_weights.bin")

|

||||

pipe.load_textual_inversion(model_id, weight_name="<new1>.bin")

|

||||

|

||||

image = pipe(

|

||||

"<new1> cat sitting in a bucket",

|

||||

num_inference_steps=100,

|

||||

guidance_scale=6.0,

|

||||

eta=1.0,

|

||||

).images[0]

|

||||

image.save("cat.png")

|

||||

```

|

||||

|

||||

Here is an example of performing inference with multiple concepts:

|

||||

|

||||

```python

|

||||

import torch

|

||||

from huggingface_hub.repocard import RepoCard

|

||||

from diffusers import DiffusionPipeline

|

||||

|

||||

model_id = "sayakpaul/custom-diffusion-cat-wooden-pot"

|

||||

card = RepoCard.load(model_id)

|

||||

base_model_id = card.data.to_dict()["base_model"]

|

||||

|

||||

pipe = DiffusionPipeline.from_pretrained(base_model_id, torch_dtype=torch.float16, use_safetensors=True).to("cuda")

|

||||

pipe.unet.load_attn_procs(model_id, weight_name="pytorch_custom_diffusion_weights.bin")

|

||||

pipe.load_textual_inversion(model_id, weight_name="<new1>.bin")

|

||||

pipe.load_textual_inversion(model_id, weight_name="<new2>.bin")

|

||||

|

||||

image = pipe(

|

||||

image = pipeline(

|

||||

"the <new1> cat sculpture in the style of a <new2> wooden pot",

|

||||

num_inference_steps=100,

|

||||

guidance_scale=6.0,

|

||||

@@ -286,20 +353,11 @@ image = pipe(

|

||||

image.save("multi-subject.png")

|

||||

```

|

||||

|

||||

Here, `cat` and `wooden pot` refer to the multiple concepts.

|

||||

</hfoption>

|

||||

</hfoptions>

|

||||

|

||||