mirror of

https://github.com/huggingface/diffusers.git

synced 2026-01-27 17:22:53 +03:00

Errata (#8322)

* Fix typos

* Trim trailing whitespaces

* Remove a trailing whitespace

* chore: Update MarigoldDepthPipeline checkpoint to prs-eth/marigold-lcm-v1-0

* Revert "chore: Update MarigoldDepthPipeline checkpoint to prs-eth/marigold-lcm-v1-0"

This reverts commit fd742b30b4.

* pokemon -> naruto

* `DPMSolverMultistep` -> `DPMSolverMultistepScheduler`

* Improve Markdown stylization

* Improve style

* Improve style

* Refactor pipeline variable names for consistency

* up style


@@ -16,7 +16,7 @@ aMUSEd was introduced in [aMUSEd: An Open MUSE Reproduction](https://huggingface

|

||||

|

||||

Amused is a lightweight text to image model based off of the [MUSE](https://arxiv.org/abs/2301.00704) architecture. Amused is particularly useful in applications that require a lightweight and fast model such as generating many images quickly at once.

|

||||

|

||||

Amused is a vqvae token based transformer that can generate an image in fewer forward passes than many diffusion models. In contrast with muse, it uses the smaller text encoder CLIP-L/14 instead of t5-xxl. Due to its small parameter count and few forward pass generation process, amused can generate many images quickly. This benefit is seen particularly at larger batch sizes.

|

||||

|

||||

The abstract from the paper is:

|

||||

|

||||

|

||||

@@ -11,12 +11,12 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

Kandinsky 3 is created by [Vladimir Arkhipkin](https://github.com/oriBetelgeuse), [Anastasia Maltseva](https://github.com/NastyaMittseva), [Igor Pavlov](https://github.com/boomb0om), [Andrei Filatov](https://github.com/anvilarth), [Arseniy Shakhmatov](https://github.com/cene555), [Andrey Kuznetsov](https://github.com/kuznetsoffandrey), [Denis Dimitrov](https://github.com/denndimitrov), and [Zein Shaheen](https://github.com/zeinsh).

|

||||

|

||||

The description from its GitHub page:

|

||||

|

||||

*Kandinsky 3.0 is an open-source text-to-image diffusion model built upon the Kandinsky2-x model family. In comparison to its predecessors, enhancements have been made to the text understanding and visual quality of the model, achieved by increasing the size of the text encoder and Diffusion U-Net models, respectively.*

|

||||

|

||||

Its architecture includes 3 main components:

|

||||

1. [FLAN-UL2](https://huggingface.co/google/flan-ul2), which is an encoder-decoder model based on the T5 architecture.

|

||||

2. New U-Net architecture featuring BigGAN-deep blocks doubles depth while maintaining the same number of parameters.

|

||||

3. Sber-MoVQGAN is a decoder proven to have superior results in image restoration.

|

||||

|

||||

|

||||

@@ -25,11 +25,11 @@ You can find additional information about LEDITS++ on the [project page](https:/

|

||||

</Tip>

|

||||

|

||||

<Tip warning={true}>

|

||||

Due to some backward compatibility issues with the current diffusers implementation of [`~schedulers.DPMSolverMultistepScheduler`], this implementation of LEdits++ can no longer guarantee perfect inversion.
This issue is unlikely to have any noticeable effects on applied use-cases. However, we provide an alternative implementation that guarantees perfect inversion in a dedicated [GitHub repo](https://github.com/ml-research/ledits_pp).

|

||||

</Tip>

|

||||

|

||||

We provide two distinct pipelines based on different pre-trained models.

|

||||

|

||||

## LEditsPPPipelineStableDiffusion

|

||||

[[autodoc]] pipelines.ledits_pp.LEditsPPPipelineStableDiffusion

|

||||

|

||||

@@ -14,10 +14,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

|

||||

|

||||

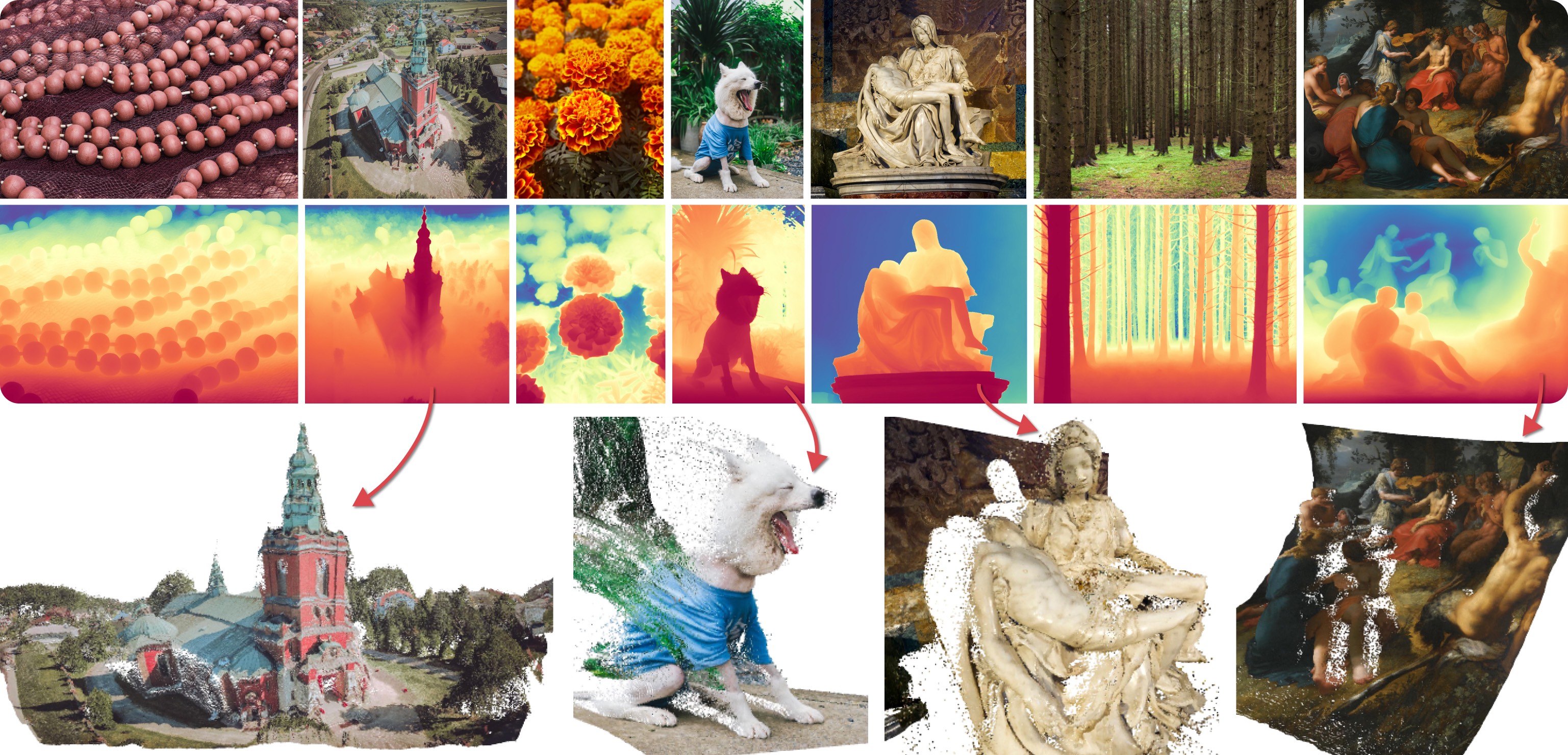

Marigold was proposed in [Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation](https://huggingface.co/papers/2312.02145), a CVPR 2024 Oral paper by [Bingxin Ke](http://www.kebingxin.com/), [Anton Obukhov](https://www.obukhov.ai/), [Shengyu Huang](https://shengyuh.github.io/), [Nando Metzger](https://nandometzger.github.io/), [Rodrigo Caye Daudt](https://rcdaudt.github.io/), and [Konrad Schindler](https://scholar.google.com/citations?user=FZuNgqIAAAAJ&hl=en).

|

||||

The idea is to repurpose the rich generative prior of Text-to-Image Latent Diffusion Models (LDMs) for traditional computer vision tasks.

|

||||

Initially, this idea was explored to fine-tune Stable Diffusion for Monocular Depth Estimation, as shown in the teaser above.

|

||||

Later,

|

||||

- [Tianfu Wang](https://tianfwang.github.io/) trained the first Latent Consistency Model (LCM) of Marigold, which unlocked fast single-step inference;

|

||||

- [Kevin Qu](https://www.linkedin.com/in/kevin-qu-b3417621b/?locale=en_US) extended the approach to Surface Normals Estimation;

|

||||

- [Anton Obukhov](https://www.obukhov.ai/) contributed the pipelines and documentation into diffusers (enabled and supported by [YiYi Xu](https://yiyixuxu.github.io/) and [Sayak Paul](https://sayak.dev/)).

|

||||

@@ -28,7 +28,7 @@ The abstract from the paper is:

|

||||

|

||||

## Available Pipelines

|

||||

|

||||

Each pipeline supports one Computer Vision task, which takes an RGB image as input and produces a *prediction* of the modality of interest, such as a depth map of the input image.

|

||||

Currently, the following tasks are implemented:

|

||||

|

||||

| Pipeline | Predicted Modalities | Demos |

|

||||

@@ -39,7 +39,7 @@ Currently, the following tasks are implemented:

|

||||

|

||||

## Available Checkpoints

|

||||

|

||||

The original checkpoints can be found under the [PRS-ETH](https://huggingface.co/prs-eth/) Hugging Face organization.

|

||||

|

||||

<Tip>

|

||||

|

||||

@@ -49,11 +49,11 @@ Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers)

|

||||

|

||||

<Tip warning={true}>

|

||||

|

||||

Marigold pipelines were designed and tested only with `DDIMScheduler` and `LCMScheduler`.

|

||||

Depending on the scheduler, the number of inference steps required to get reliable predictions varies, and there is no universal value that works best across schedulers.

|

||||

Because of that, the default value of `num_inference_steps` in the `__call__` method of the pipeline is set to `None` (see the API reference).

|

||||

Unless set explicitly, its value will be taken from the checkpoint configuration `model_index.json`.

|

||||

This is done to ensure high-quality predictions when calling the pipeline with just the `image` argument.

|

||||

|

||||

</Tip>
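The fallback described in the tip above can be pictured with a small, library-free sketch. It is not the pipeline's actual code: the function name `resolve_num_inference_steps`, the configuration key `default_denoising_steps`, and the step values are assumptions made for illustration.

```python
from typing import Optional

# Hypothetical stand-ins for two checkpoint configurations. The key name and
# the values are assumptions for illustration only.
DDIM_CHECKPOINT_CONFIG = {"default_denoising_steps": 10}
LCM_CHECKPOINT_CONFIG = {"default_denoising_steps": 4}


def resolve_num_inference_steps(requested: Optional[int], checkpoint_config: dict) -> int:
    """Return the explicit value if one was given, else the checkpoint default."""
    if requested is None:
        # No explicit value: fall back to what the checkpoint ships with.
        requested = checkpoint_config.get("default_denoising_steps")
    if requested is None:
        raise ValueError("num_inference_steps was not given and the checkpoint has no default")
    if requested < 1:
        raise ValueError("num_inference_steps must be at least 1")
    return requested


print(resolve_num_inference_steps(None, LCM_CHECKPOINT_CONFIG))
print(resolve_num_inference_steps(None, DDIM_CHECKPOINT_CONFIG))
print(resolve_num_inference_steps(2, LCM_CHECKPOINT_CONFIG))
```

An explicit value always wins; `None` defers to whatever default the checkpoint provides.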

|

||||

|

||||

|

||||

@@ -37,7 +37,7 @@ Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers.m

|

||||

|

||||

## Inference with under 8GB GPU VRAM

|

||||

|

||||

Run the [`PixArtAlphaPipeline`] with under 8GB GPU VRAM by loading the text encoder in 8-bit precision. Let's walk through a full-fledged example.
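To see why 8-bit loading matters, here is a rough back-of-the-envelope sketch of the memory needed for the weights alone. The parameter count is a hypothetical round figure, not a measured value, and the estimate ignores activations and any quantization overhead.

```python
def weight_memory_gib(num_parameters: int, bits_per_parameter: int) -> float:
    """Memory needed to hold the weights alone, in GiB."""
    return num_parameters * bits_per_parameter / 8 / 1024**3


# Hypothetical parameter count, chosen only to make the arithmetic concrete.
ASSUMED_TEXT_ENCODER_PARAMETERS = 4_700_000_000

fp16_gib = weight_memory_gib(ASSUMED_TEXT_ENCODER_PARAMETERS, 16)
int8_gib = weight_memory_gib(ASSUMED_TEXT_ENCODER_PARAMETERS, 8)

print(f"16-bit weights: {fp16_gib:.1f} GiB")
print(f"8-bit weights:  {int8_gib:.1f} GiB")
```

Under this assumption, halving the bits per weight halves the footprint, which is what brings a large text encoder under an 8 GB budget.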

|

||||

|

||||

First, install the [bitsandbytes](https://github.com/TimDettmers/bitsandbytes) library:

|

||||

|

||||

@@ -75,10 +75,10 @@ with torch.no_grad():

|

||||

prompt_embeds, prompt_attention_mask, negative_embeds, negative_prompt_attention_mask = pipe.encode_prompt(prompt)

|

||||

```

|

||||

|

||||

-Since text embeddings have been computed, remove the `text_encoder` and `pipe` from the memory, and free up som GPU VRAM:
+Since text embeddings have been computed, remove the `text_encoder` and `pipe` from the memory, and free up some GPU VRAM:

|

||||

|

||||

```python

|

||||

import gc

def flush():
    gc.collect()

|

||||

@@ -99,7 +99,7 @@ pipe = PixArtAlphaPipeline.from_pretrained(

|

||||

).to("cuda")

|

||||

|

||||

latents = pipe(
    negative_prompt=None,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    prompt_attention_mask=prompt_attention_mask,

|

||||

@@ -146,4 +146,3 @@ While loading the `text_encoder`, you set `load_in_8bit` to `True`. You could al

|

||||

[[autodoc]] PixArtAlphaPipeline

|

||||

- all

|

||||

- __call__

|

||||

|

||||

@@ -39,7 +39,7 @@ Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers)

|

||||

|

||||

## Inference with under 8GB GPU VRAM

|

||||

|

||||

Run the [`PixArtSigmaPipeline`] with under 8GB GPU VRAM by loading the text encoder in 8-bit precision. Let's walk through a full-fledged example.

|

||||

|

||||

First, install the [bitsandbytes](https://github.com/TimDettmers/bitsandbytes) library:

|

||||

|

||||

@@ -59,7 +59,6 @@ text_encoder = T5EncoderModel.from_pretrained(

|

||||

    subfolder="text_encoder",
    load_in_8bit=True,
    device_map="auto",
)
pipe = PixArtSigmaPipeline.from_pretrained(
    "PixArt-alpha/PixArt-Sigma-XL-2-1024-MS",

|

||||

@@ -77,10 +76,10 @@ with torch.no_grad():

|

||||

prompt_embeds, prompt_attention_mask, negative_embeds, negative_prompt_attention_mask = pipe.encode_prompt(prompt)

|

||||

```

|

||||

|

||||

-Since text embeddings have been computed, remove the `text_encoder` and `pipe` from the memory, and free up som GPU VRAM:
+Since text embeddings have been computed, remove the `text_encoder` and `pipe` from the memory, and free up some GPU VRAM:

|

||||

|

||||

```python

|

||||

import gc

def flush():
    gc.collect()

|

||||

@@ -101,7 +100,7 @@ pipe = PixArtSigmaPipeline.from_pretrained(

|

||||

).to("cuda")

|

||||

|

||||

latents = pipe(
    negative_prompt=None,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    prompt_attention_mask=prompt_attention_mask,

|

||||

@@ -148,4 +147,3 @@ While loading the `text_encoder`, you set `load_in_8bit` to `True`. You could al

|

||||

[[autodoc]] PixArtSigmaPipeline

|

||||

- all

|

||||

- __call__

|

||||

|

||||

@@ -177,7 +177,7 @@ inpaint = StableDiffusionInpaintPipeline(**text2img.components)

|

||||

|

||||

The Stable Diffusion pipelines are automatically supported in [Gradio](https://github.com/gradio-app/gradio/), a library that makes creating beautiful and user-friendly machine learning apps on the web a breeze. First, make sure you have Gradio installed:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install -U gradio

|

||||

```

|

||||

|

||||

@@ -209,4 +209,4 @@ gr.Interface.from_pipeline(pipe).launch()

|

||||

```

|

||||

|

||||

By default, the web demo runs on a local server. If you'd like to share it with others, you can generate a temporary public
link by setting `share=True` in `launch()`. Or, you can host your demo on [Hugging Face Spaces](https://huggingface.co/spaces) for a permanent link.

|

||||

@@ -12,7 +12,7 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# EDMDPMSolverMultistepScheduler

|

||||

|

||||

-`EDMDPMSolverMultistepScheduler` is a [Karras formulation](https://huggingface.co/papers/2206.00364) of `DPMSolverMultistep`, a multistep scheduler from [DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps](https://huggingface.co/papers/2206.00927) and [DPM-Solver++: Fast Solver for Guided Sampling of Diffusion Probabilistic Models](https://huggingface.co/papers/2211.01095) by Cheng Lu, Yuhao Zhou, Fan Bao, Jianfei Chen, Chongxuan Li, and Jun Zhu.
+`EDMDPMSolverMultistepScheduler` is a [Karras formulation](https://huggingface.co/papers/2206.00364) of `DPMSolverMultistepScheduler`, a multistep scheduler from [DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps](https://huggingface.co/papers/2206.00927) and [DPM-Solver++: Fast Solver for Guided Sampling of Diffusion Probabilistic Models](https://huggingface.co/papers/2211.01095) by Cheng Lu, Yuhao Zhou, Fan Bao, Jianfei Chen, Chongxuan Li, and Jun Zhu.

|

||||

|

||||

DPMSolver (and the improved version DPMSolver++) is a fast dedicated high-order solver for diffusion ODEs with convergence order guarantee. Empirically, DPMSolver sampling with only 20 steps can generate high-quality

|

||||

samples, and it can generate quite good samples even in 10 steps.
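One ingredient of the Karras et al. formulation is its noise-level schedule, which spaces the sigmas so that more steps are spent at low noise. The sketch below computes that schedule in plain Python. The function name `karras_sigmas` is hypothetical, the default values for `sigma_min`, `sigma_max`, and `rho` are the ones suggested in that paper, and nothing here is claimed to match the scheduler's internal code.

```python
from typing import List


def karras_sigmas(
    num_steps: int,
    sigma_min: float = 0.002,
    sigma_max: float = 80.0,
    rho: float = 7.0,
) -> List[float]:
    """Noise levels from sigma_max down to sigma_min, spaced in sigma**(1/rho)."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    min_inv_rho = sigma_min ** (1.0 / rho)
    max_inv_rho = sigma_max ** (1.0 / rho)
    sigmas = []
    for i in range(num_steps):
        # Linear interpolation in the warped space, then warp back.
        ramp = i / (num_steps - 1)
        sigmas.append((max_inv_rho + ramp * (min_inv_rho - max_inv_rho)) ** rho)
    return sigmas


for sigma in karras_sigmas(10):
    print(f"{sigma:.4f}")
```

The printed values start at `sigma_max`, end at `sigma_min`, and shrink quickly at first, so most of the ten steps land in the low-noise region.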

|

||||

|

||||

@@ -12,7 +12,7 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# DPMSolverMultistepScheduler

|

||||

|

||||

-`DPMSolverMultistep` is a multistep scheduler from [DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps](https://huggingface.co/papers/2206.00927) and [DPM-Solver++: Fast Solver for Guided Sampling of Diffusion Probabilistic Models](https://huggingface.co/papers/2211.01095) by Cheng Lu, Yuhao Zhou, Fan Bao, Jianfei Chen, Chongxuan Li, and Jun Zhu.
+`DPMSolverMultistepScheduler` is a multistep scheduler from [DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps](https://huggingface.co/papers/2206.00927) and [DPM-Solver++: Fast Solver for Guided Sampling of Diffusion Probabilistic Models](https://huggingface.co/papers/2211.01095) by Cheng Lu, Yuhao Zhou, Fan Bao, Jianfei Chen, Chongxuan Li, and Jun Zhu.

|

||||

|

||||

DPMSolver (and the improved version DPMSolver++) is a fast dedicated high-order solver for diffusion ODEs with convergence order guarantee. Empirically, DPMSolver sampling with only 20 steps can generate high-quality

|

||||

samples, and it can generate quite good samples even in 10 steps.

|

||||

|

||||

@@ -36,7 +36,7 @@ Then load and enable the [`DeepCacheSDHelper`](https://github.com/horseee/DeepCa

|

||||

image = pipe("a photo of an astronaut on a moon").images[0]

|

||||

```

|

||||

|

||||

The `set_params` method accepts two arguments: `cache_interval` and `cache_branch_id`. `cache_interval` means the frequency of feature caching, specified as the number of steps between each cache operation. `cache_branch_id` identifies which branch of the network (ordered from the shallowest to the deepest layer) is responsible for executing the caching processes.

|

||||

Opting for a lower `cache_branch_id` or a larger `cache_interval` can lead to faster inference speed at the expense of reduced image quality (ablation experiments of these two hyperparameters can be found in the [paper](https://arxiv.org/abs/2312.00858)). Once those arguments are set, use the `enable` or `disable` methods to activate or deactivate the `DeepCacheSDHelper`.
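The effect of `cache_interval` can be pictured with a simplified model of uniform caching. The sketch assumes that cached features are refreshed at every step whose index is divisible by `cache_interval` and reused in between. The function name `full_forward_steps` is hypothetical, and the real helper may schedule its cache updates differently.

```python
from typing import List


def full_forward_steps(num_inference_steps: int, cache_interval: int) -> List[int]:
    """Steps at which the cache is refreshed under the assumed uniform schedule."""
    if cache_interval < 1:
        raise ValueError("cache_interval must be at least 1")
    return [step for step in range(num_inference_steps) if step % cache_interval == 0]


for interval in (1, 3, 5):
    steps = full_forward_steps(50, interval)
    print(f"cache_interval={interval}: {len(steps)} of 50 steps refresh the cache")
```

A larger interval leaves fewer refresh steps, which is where the speed-up comes from, at the cost of reusing staler features.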

|

||||

|

||||

<div class="flex justify-center">

|

||||

|

||||

@@ -188,7 +188,7 @@ def latents_to_rgb(latents):

|

||||

```py

|

||||

def decode_tensors(pipe, step, timestep, callback_kwargs):
    latents = callback_kwargs["latents"]

    image = latents_to_rgb(latents)
    image.save(f"{step}.png")

|

||||

|

||||

|

||||

@@ -138,15 +138,15 @@ Because Marigold's latent space is compatible with the base Stable Diffusion, it

|

||||

```diff

|

||||

  import diffusers
  import torch

  pipe = diffusers.MarigoldDepthPipeline.from_pretrained(
      "prs-eth/marigold-depth-lcm-v1-0", variant="fp16", torch_dtype=torch.float16
  ).to("cuda")

+ pipe.vae = diffusers.AutoencoderTiny.from_pretrained(
+     "madebyollin/taesd", torch_dtype=torch.float16
+ ).cuda()

  image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")
  depth = pipe(image)

|

||||

```

|

||||

@@ -156,13 +156,13 @@ As suggested in [Optimizations](../optimization/torch2.0#torch.compile), adding

|

||||

```diff

|

||||

  import diffusers
  import torch

  pipe = diffusers.MarigoldDepthPipeline.from_pretrained(
      "prs-eth/marigold-depth-lcm-v1-0", variant="fp16", torch_dtype=torch.float16
  ).to("cuda")

+ pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

  image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")
  depth = pipe(image)

|

||||

```

|

||||

@@ -208,7 +208,7 @@ model_paper_kwargs = {

|

||||

    diffusers.schedulers.LCMScheduler: {
        "num_inference_steps": 4,
        "ensemble_size": 5,
    },
}

image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

|

||||

@@ -261,7 +261,7 @@ model_paper_kwargs = {

|

||||

    diffusers.schedulers.LCMScheduler: {
        "num_inference_steps": 4,
        "ensemble_size": 10,
    },
}

image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

|

||||

@@ -415,7 +415,7 @@ image = diffusers.utils.load_image(

|

||||

|

||||

pipe = diffusers.MarigoldDepthPipeline.from_pretrained(
    "prs-eth/marigold-depth-lcm-v1-0", torch_dtype=torch.float16, variant="fp16"
-).to("cuda")
+).to(device)

depth_image = pipe(image, generator=generator).prediction
depth_image = pipe.image_processor.visualize_depth(depth_image, color_map="binary")

|

||||

@@ -423,10 +423,10 @@ depth_image[0].save("motorcycle_controlnet_depth.png")

|

||||

|

||||

controlnet = diffusers.ControlNetModel.from_pretrained(
    "diffusers/controlnet-depth-sdxl-1.0", torch_dtype=torch.float16, variant="fp16"
-).to("cuda")
+).to(device)
pipe = diffusers.StableDiffusionXLControlNetPipeline.from_pretrained(
    "SG161222/RealVisXL_V4.0", torch_dtype=torch.float16, variant="fp16", controlnet=controlnet
-).to("cuda")
+).to(device)
pipe.scheduler = diffusers.DPMSolverMultistepScheduler.from_config(pipe.scheduler.config, use_karras_sigmas=True)

controlnet_out = pipe(

|

||||

|

||||

@@ -134,7 +134,7 @@ sigmas = [14.615, 6.315, 3.771, 2.181, 1.342, 0.862, 0.555, 0.380, 0.234, 0.113,

|

||||

prompt = "anthropomorphic capybara wearing a suit and working with a computer"
generator = torch.Generator(device='cuda').manual_seed(123)
image = pipeline(
    prompt=prompt,
    num_inference_steps=10,
    sigmas=sigmas,
    generator=generator

|

||||

|

||||

@@ -34,7 +34,7 @@ Stable Diffusion XL은 Dustin Podell, Zion English, Kyle Lacey, Andreas Blattman

|

||||

Before using SDXL, install `transformers`, `accelerate`, `safetensors`, and `invisible_watermark`.
You can install the libraries as follows:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install transformers

|

||||

pip install accelerate

|

||||

pip install safetensors

|

||||

@@ -46,7 +46,7 @@ pip install invisible-watermark>=0.2.0

|

||||

We recommend adding an invisible watermark to images generated with Stable Diffusion XL, because it can help downstream applications identify whether an image was machine-synthesized. To do so, install the [invisible_watermark library](https://pypi.org/project/invisible-watermark/):

|

||||

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install invisible-watermark>=0.2.0

|

||||

```

|

||||

|

||||

@@ -75,11 +75,11 @@ prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"

|

||||

image = pipe(prompt=prompt).images[0]

|

||||

```

|

||||

|

||||

### Image-to-image

|

||||

|

||||

You can use SDXL for *image-to-image* as follows:

|

||||

|

||||

```py

|

||||

```py

|

||||

import torch

|

||||

from diffusers import StableDiffusionXLImg2ImgPipeline

|

||||

from diffusers.utils import load_image

|

||||

@@ -99,7 +99,7 @@ image = pipe(prompt, image=init_image).images[0]

|

||||

|

||||

You can use SDXL for *inpainting* as follows:

|

||||

|

||||

```py

|

||||

```py

|

||||

import torch

|

||||

from diffusers import StableDiffusionXLInpaintPipeline

|

||||

from diffusers.utils import load_image

|

||||

@@ -352,7 +352,7 @@ out-of-memory 에러가 난다면, [`StableDiffusionXLPipeline.enable_model_cpu_

|

||||

|

||||

**Note** If you want to run Stable Diffusion XL with a `torch` version below 2.0, use xformers attention:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install xformers

|

||||

```

|

||||

|

||||

|

||||

@@ -93,13 +93,13 @@ cd diffusers

|

||||

|

||||

**For PyTorch**

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install -e ".[torch]"

|

||||

```

|

||||

|

||||

**For Flax**

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install -e ".[flax]"

|

||||

```

|

||||

|

||||

|

||||

@@ -19,13 +19,13 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

Install 🤗 Optimum with ONNX Runtime support using the following command:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install optimum["onnxruntime"]

|

||||

```

|

||||

|

||||

## Stable Diffusion inference

|

||||

|

||||

The code below shows how to use ONNX Runtime. You need to use `OnnxStableDiffusionPipeline` instead of `StableDiffusionPipeline`.
To load a PyTorch model and convert it to the ONNX format on the fly, set `export=True`.

|

||||

|

||||

```python

|

||||

@@ -38,7 +38,7 @@ images = pipe(prompt).images[0]

|

||||

pipe.save_pretrained("./onnx-stable-diffusion-v1-5")

|

||||

```

|

||||

|

||||

To export the pipeline to the ONNX format offline and use it later for inference,
you can use the [`optimum-cli export`](https://huggingface.co/docs/optimum/main/en/exporters/onnx/usage_guides/export_a_model#exporting-a-model-to-onnx-using-the-cli) command:

|

||||

|

||||

```bash

|

||||

@@ -47,7 +47,7 @@ optimum-cli export onnx --model runwayml/stable-diffusion-v1-5 sd_v15_onnx/

|

||||

|

||||

Then run inference:

|

||||

|

||||

```python

|

||||

```python

|

||||

from optimum.onnxruntime import ORTStableDiffusionPipeline

|

||||

|

||||

model_id = "sd_v15_onnx"

|

||||

|

||||

@@ -19,7 +19,7 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

Install 🤗 Optimum with the following command:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install optimum["openvino"]

|

||||

```

|

||||

|

||||

|

||||

@@ -59,7 +59,7 @@ image

|

||||

|

||||

First, you need to install the `compel` library:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install compel

|

||||

```

|

||||

|

||||

|

||||

@@ -95,13 +95,13 @@ cd diffusers

|

||||

|

||||

**PyTorch**

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install -e ".[torch]"

|

||||

```

|

||||

|

||||

**Flax**

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install -e ".[flax]"

|

||||

```

|

||||

|

||||

|

||||

@@ -25,7 +25,7 @@ from diffusers.utils.torch_utils import randn_tensor

|

||||

|

||||

EXAMPLE_DOC_STRING = """

|

||||

Examples:

|

||||

```

|

||||

```py

|

||||

from io import BytesIO

|

||||

|

||||

import requests

|

||||

|

||||

@@ -113,9 +113,9 @@ accelerate launch train_lcm_distill_lora_sdxl_wds.py \

|

||||

--push_to_hub \

|

||||

```

|

||||

|

||||

We provide another version for LCM LoRA SDXL that follows best practices of `peft` and leverages the `datasets` library for quick experimentation. Unlike `train_lcm_distill_lora_sdxl_wds.py`, the script doesn't load two UNets, which reduces the memory requirements quite a bit.

|

||||

|

||||

-Below is an example training command that trains an LCM LoRA on the [Pokemons dataset](https://huggingface.co/datasets/lambdalabs/naruto-blip-captions):
+Below is an example training command that trains an LCM LoRA on the [Narutos dataset](https://huggingface.co/datasets/lambdalabs/naruto-blip-captions):

|

||||

|

||||

```bash

|

||||

export MODEL_NAME="stabilityai/stable-diffusion-xl-base-1.0"

|

||||

@@ -125,7 +125,7 @@ export VAE_PATH="madebyollin/sdxl-vae-fp16-fix"

|

||||

accelerate launch train_lcm_distill_lora_sdxl.py \
  --pretrained_teacher_model=${MODEL_NAME} \
  --pretrained_vae_model_name_or_path=${VAE_PATH} \
-  --output_dir="pokemons-lora-lcm-sdxl" \
+  --output_dir="narutos-lora-lcm-sdxl" \
  --mixed_precision="fp16" \
  --dataset_name=$DATASET_NAME \
  --resolution=1024 \

|

||||

|

||||

@@ -101,7 +101,7 @@ accelerate launch train_controlnet.py \

|

||||

`accelerate` allows for seamless multi-GPU training. Follow the instructions [here](https://huggingface.co/docs/accelerate/basic_tutorials/launch)

|

||||

for running distributed training with `accelerate`. Here is an example command:

|

||||

|

||||

```bash

|

||||

```bash

|

||||

export MODEL_DIR="runwayml/stable-diffusion-v1-5"

|

||||

export OUTPUT_DIR="path to save model"

|

||||

|

||||

@@ -123,21 +123,21 @@ accelerate launch --mixed_precision="fp16" --multi_gpu train_controlnet.py \

|

||||

|

||||

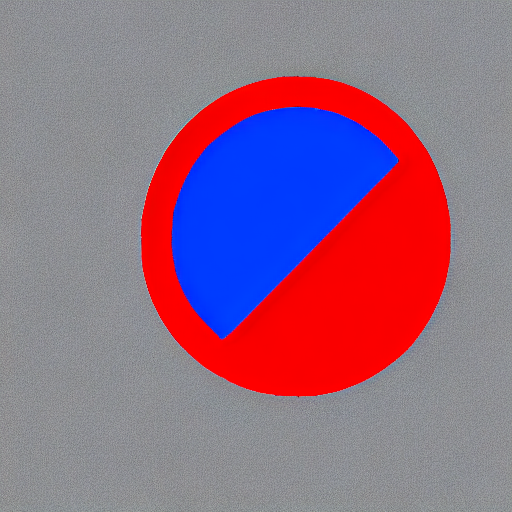

#### After 300 steps with batch size 8

|

||||

|

||||

| | |
|-------------------|:-------------------------:|
| | red circle with blue background |
|  |

|

||||

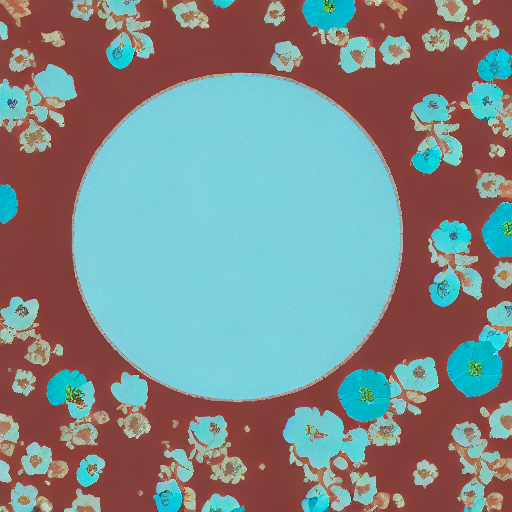

| | cyan circle with brown floral background |
|  |

|

||||

|

||||

|

||||

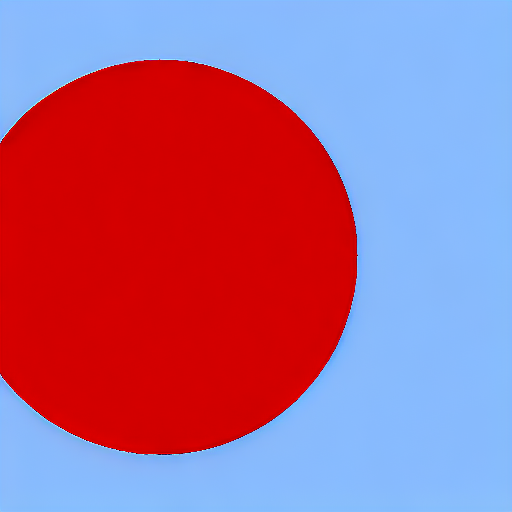

#### After 6000 steps with batch size 8:

|

||||

|

||||

| | |
|-------------------|:-------------------------:|
| | red circle with blue background |
|  |
| | cyan circle with brown floral background |
|  |

|

||||

|

||||

## Training on a 16 GB GPU

|

||||

@@ -194,7 +194,7 @@ accelerate launch train_controlnet.py \

|

||||

--set_grads_to_none

|

||||

```

|

||||

|

||||

When using `enable_xformers_memory_efficient_attention`, make sure to install `xformers` with `pip install xformers`.

|

||||

|

||||

## Training on an 8 GB GPU

|

||||

|

||||

@@ -209,7 +209,7 @@ Optimizations:

|

||||

- DeepSpeed stage 2 with parameter and optimizer offloading

|

||||

- fp16 mixed precision

|

||||

|

||||

[DeepSpeed](https://www.deepspeed.ai/) can offload tensors from VRAM to either
CPU or NVMe. This requires significantly more RAM (about 25 GB).

|

||||

|

||||

Use `accelerate config` to enable DeepSpeed stage 2.

|

||||

@@ -256,7 +256,7 @@ accelerate launch train_controlnet.py \

|

||||

## Performing inference with the trained ControlNet

|

||||

|

||||

The trained model can be run the same as the original ControlNet pipeline with the newly trained ControlNet.

|

||||

Set `base_model_path` and `controlnet_path` to the values that `--pretrained_model_name_or_path` and
`--output_dir`, respectively, were set to in the training script.

|

||||

|

||||

```py

|

||||

@@ -315,13 +315,13 @@ gcloud alpha compute tpus tpu-vm ssh $VM_NAME --zone $ZONE -- \

|

||||

|

||||

When connected, install JAX `0.4.5`:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install "jax[tpu]==0.4.5" -f https://storage.googleapis.com/jax-releases/libtpu_releases.html

|

||||

```

|

||||

|

||||

To verify that JAX was correctly installed, you can run the following command:

|

||||

|

||||

```

|

||||

```py

|

||||

import jax

|

||||

jax.device_count()

|

||||

```

|

||||

@@ -351,14 +351,14 @@ pip install wandb

|

||||

|

||||

Now let's download two conditioning images that we will use to run validation during training in order to track our progress:

|

||||

|

||||

```

|

||||

```sh

|

||||

wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/controlnet_training/conditioning_image_1.png

|

||||

wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/controlnet_training/conditioning_image_2.png

|

||||

```

|

||||

|

||||

We encourage you to store or share your model with the community. To use the Hugging Face Hub, please log in to your Hugging Face account (or [create one](https://hf.co/join) if you don’t have one already):

|

||||

|

||||

```

|

||||

```sh

|

||||

huggingface-cli login

|

||||

```

|

||||

|

||||

@@ -429,12 +429,12 @@ When work with a larger dataset, you may need to run training process for a long

|

||||

```bash

|

||||

--checkpointing_steps=500

|

||||

```

|

||||

This will save the trained model in subfolders of your `output_dir`. Each subfolder is named after the number of steps performed so far; for example, a checkpoint saved after 500 training steps would be saved in a subfolder named `500`.
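To resume from the most recent checkpoint without hard-coding the step count, the numeric subfolder names described above can be compared as integers. The sketch below is only an illustration under that naming assumption; `latest_checkpoint` is a hypothetical helper, not part of the training script, and the temporary directory merely stands in for a real `output_dir`.

```python
import os
import tempfile


def latest_checkpoint(output_dir):
    """Return the path of the numerically largest step subfolder, or None if there is none."""
    steps = [
        name
        for name in os.listdir(output_dir)
        if name.isdigit() and os.path.isdir(os.path.join(output_dir, name))
    ]
    if not steps:
        return None
    # Compare as integers so that "1000" sorts after "500".
    return os.path.join(output_dir, max(steps, key=int))


# Demo: a throwaway directory standing in for a real output_dir.
with tempfile.TemporaryDirectory() as demo_dir:
    for step in ("500", "1000", "1500"):
        os.mkdir(os.path.join(demo_dir, step))
    latest_name = os.path.basename(latest_checkpoint(demo_dir))
    print(latest_name)
```

The returned path is what you would pass as the checkpoint location when restarting training.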

|

||||

|

||||

You can then start your training from this saved checkpoint with

|

||||

You can then start your training from this saved checkpoint with

|

||||

|

||||

```bash

|

||||

--controlnet_model_name_or_path="./control_out/500"

|

||||

--controlnet_model_name_or_path="./control_out/500"

|

||||

```

|

||||

|

||||

We support training with the Min-SNR weighting strategy proposed in [Efficient Diffusion Training via Min-SNR Weighting Strategy](https://arxiv.org/abs/2303.09556) which helps to achieve faster convergence by rebalancing the loss. To use it, one needs to set the `--snr_gamma` argument. The recommended value when using it is `5.0`.
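As a rough illustration of what the `--snr_gamma` rebalancing does, the sketch below computes the per-timestep loss weight `min(SNR, gamma) / SNR` described in the Min-SNR paper for a noise-prediction objective. The helper name `min_snr_loss_weight` is hypothetical, and the training script's actual implementation may differ (for example, for v-prediction models).

```python
def min_snr_loss_weight(snr, gamma=5.0):
    """Min-SNR weight for a noise-prediction loss: min(snr, gamma) / snr."""
    if snr <= 0:
        raise ValueError("snr must be positive")
    return min(snr, gamma) / snr


# Low-noise timesteps (high SNR) are down-weighted; noisier ones keep weight 1.
weights = [min_snr_loss_weight(snr) for snr in (0.5, 5.0, 50.0)]
print(weights)
```

With the recommended `gamma` of `5.0`, any timestep whose SNR exceeds 5 contributes proportionally less to the loss, which is the rebalancing the paragraph above refers to.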

|

||||

|

||||

@@ -43,7 +43,7 @@ from accelerate.utils import write_basic_config

|

||||

write_basic_config()

|

||||

```

|

||||

|

||||

When running `accelerate config`, setting torch compile mode to True can yield dramatic speedups.

Note also that we use the PEFT library as the backend for LoRA training, so make sure `peft>=0.6.0` is installed in your environment.

|

||||

|

||||

### Dog toy example

|

||||

@@ -231,7 +231,7 @@ accelerate launch --mixed_precision="fp16" train_dreambooth.py \

|

||||

|

||||

### Fine-tune text encoder with the UNet.

|

||||

|

||||

The script also allows you to fine-tune the `text_encoder` along with the `unet`. It has been observed experimentally that fine-tuning the `text_encoder` gives much better results, especially on faces.

|

||||

Pass the `--train_text_encoder` argument to the script to enable training `text_encoder`.

|

||||

|

||||

___Note: Training the text encoder requires more memory; with this option, training won't fit on a 16GB GPU. It needs at least 24GB of VRAM.___

|

||||

@@ -303,7 +303,7 @@ In a nutshell, LoRA allows to adapt pretrained models by adding pairs of rank-de

|

||||

- Rank-decomposition matrices have significantly fewer parameters than the original model, which means that trained LoRA weights are easily portable.

|

||||

- LoRA attention layers let you control the extent to which the model is adapted toward new training images via a `scale` parameter.
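The two points above can be made concrete with a small, library-free sketch. It assumes the usual LoRA formulation, in which a frozen weight `W` is adapted as `W + scale * (B @ A)` with low-rank factors `B` and `A`. All function names here are hypothetical and are not the diffusers or PEFT API.

```python
def lora_param_count(d_out, d_in, rank):
    """Parameters in the two low-rank factors: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(weight, lora_b, lora_a, scale=1.0):
    """Return weight + scale * (lora_b @ lora_a) without modifying the inputs."""
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# A 768 x 768 projection has 589,824 weights; its rank-4 LoRA factors have 6,144.
full_count = 768 * 768
lora_count = lora_param_count(768, 768, rank=4)

weight = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]  # 2 x 1
lora_a = [[0.5, 0.5]]    # 1 x 2
unchanged = merge_lora(weight, lora_b, lora_a, scale=0.0)  # scale 0 keeps the base weights
half = merge_lora(weight, lora_b, lora_a, scale=0.5)       # partial adaptation
print(full_count, lora_count, half)
```

Setting `scale` to 0 recovers the original weights, while larger values move the layer further toward the fine-tuned behavior.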

|

||||

|

||||

[cloneofsimo](https://github.com/cloneofsimo) was the first to try out LoRA training for Stable Diffusion in

|

||||

[cloneofsimo](https://github.com/cloneofsimo) was the first to try out LoRA training for Stable Diffusion in

|

||||

the popular [lora](https://github.com/cloneofsimo/lora) GitHub repository.

|

||||

|

||||

### Training

|

||||

@@ -326,7 +326,7 @@ export INSTANCE_DIR="dog"

|

||||

export OUTPUT_DIR="path-to-save-model"

|

||||

```

|

||||

|

||||

For this example we want to directly store the trained LoRA embeddings on the Hub, so

|

||||

For this example we want to directly store the trained LoRA embeddings on the Hub, so

|

||||

we need to be logged in and add the `--push_to_hub` flag.

|

||||

|

||||

```bash

|

||||

@@ -356,7 +356,7 @@ accelerate launch train_dreambooth_lora.py \

|

||||

--push_to_hub

|

||||

```

|

||||

|

||||

**___Note: When using LoRA we can use a much higher learning rate compared to vanilla dreambooth. Here we

|

||||

**___Note: When using LoRA we can use a much higher learning rate compared to vanilla dreambooth. Here we

|

||||

use *1e-4* instead of the usual *2e-6*.___**

|

||||

|

||||

The final LoRA embedding weights have been uploaded to [patrickvonplaten/lora_dreambooth_dog_example](https://huggingface.co/patrickvonplaten/lora_dreambooth_dog_example). **___Note: [The final weights](https://huggingface.co/patrickvonplaten/lora/blob/main/pytorch_attn_procs.bin) are only 3 MB in size, which is orders of magnitude smaller than the original model.___**

|

||||

@@ -365,14 +365,14 @@ The training results are summarized [here](https://api.wandb.ai/report/patrickvo

|

||||

You can use the `Step` slider to see how the model learned the features of our subject while the model trained.

|

||||

|

||||

Optionally, we can also train additional LoRA layers for the text encoder. Specify the `--train_text_encoder` argument above for that. If you're interested in knowing more about how we

enable this support, check out this [PR](https://github.com/huggingface/diffusers/pull/2918).

|

||||

|

||||

With the default hyperparameters from the above, the training seems to go in a positive direction. Check out [this panel](https://wandb.ai/sayakpaul/dreambooth-lora/reports/test-23-04-17-17-00-13---Vmlldzo0MDkwNjMy). The trained LoRA layers are available [here](https://huggingface.co/sayakpaul/dreambooth).

|

||||

|

||||

|

||||

### Inference

|

||||

|

||||

After training, LoRA weights can be loaded very easily into the original pipeline. First, you need to

|

||||

After training, LoRA weights can be loaded very easily into the original pipeline. First, you need to

|

||||

load the original pipeline:

|

||||

|

||||

```python

|

||||

@@ -394,9 +394,9 @@ image = pipe("A picture of a sks dog in a bucket", num_inference_steps=25).image

|

||||

|

||||

If you are loading the LoRA parameters from the Hub and if the Hub repository has

|

||||

a `base_model` tag (such as [this](https://huggingface.co/patrickvonplaten/lora_dreambooth_dog_example/blob/main/README.md?code=true#L4)), then

|

||||

you can do:

|

||||

you can do:

|

||||

|

||||

```py

|

||||

```py

|

||||

from huggingface_hub.repocard import RepoCard

|

||||

|

||||

lora_model_id = "patrickvonplaten/lora_dreambooth_dog_example"

|

||||

@@ -413,7 +413,7 @@ weights. For example:

|

||||

```python

|

||||

from huggingface_hub.repocard import RepoCard

|

||||

from diffusers import StableDiffusionPipeline

|

||||

import torch

|

||||

import torch

|

||||

|

||||

lora_model_id = "sayakpaul/dreambooth-text-encoder-test"

|

||||

card = RepoCard.load(lora_model_id)

|

||||

@@ -430,7 +430,7 @@ Note that the use of [`LoraLoaderMixin.load_lora_weights`](https://huggingface.c

|

||||

|

||||

* LoRA parameters that don't have separate identifiers for the UNet and the text encoder (such as [`"patrickvonplaten/lora_dreambooth_dog_example"`](https://huggingface.co/patrickvonplaten/lora_dreambooth_dog_example)). So, you can just do:

|

||||

|

||||

```py

|

||||

```py

|

||||

pipe.load_lora_weights(lora_model_path)

|

||||

```

|

||||

|

||||

@@ -529,11 +529,11 @@ To save even more memory, pass the `--set_grads_to_none` argument to the script.

|

||||

More info: https://pytorch.org/docs/stable/generated/torch.optim.Optimizer.zero_grad.html

|

||||

|

||||

### Experimental results

|

||||

You can refer to [this blog post](https://huggingface.co/blog/dreambooth) that discusses some of the DreamBooth experiments in detail. Specifically, it recommends a set of DreamBooth-specific tips and tricks that we have found to work well for a variety of subjects.

|

||||

|

||||

## IF

|

||||

|

||||

You can use the lora and full dreambooth scripts to train the text to image [IF model](https://huggingface.co/DeepFloyd/IF-I-XL-v1.0) and the stage II upscaler

|

||||

You can use the lora and full dreambooth scripts to train the text to image [IF model](https://huggingface.co/DeepFloyd/IF-I-XL-v1.0) and the stage II upscaler

|

||||

[IF model](https://huggingface.co/DeepFloyd/IF-II-L-v1.0).

|

||||

|

||||

Note that IF has a predicted variance, and our finetuning scripts only train the model's predicted error, so for finetuned IF models we switch to a fixed

|

||||

@@ -553,7 +553,7 @@ pipe.scheduler = pipe.scheduler.__class__.from_config(pipe.scheduler.config, var

|

||||

|

||||

Additionally, a few alternative cli flags are needed for IF.

|

||||

|

||||

`--resolution=64`: IF is a pixel space diffusion model. In order to operate on un-compressed pixels, the input images are of a much smaller resolution.

|

||||

`--resolution=64`: IF is a pixel space diffusion model. In order to operate on un-compressed pixels, the input images are of a much smaller resolution.

|

||||

|

||||

`--pre_compute_text_embeddings`: IF uses [T5](https://huggingface.co/docs/transformers/model_doc/t5) for its text encoder. In order to save GPU memory, we precompute all text embeddings and then de-allocate

|

||||

T5.

|

||||

@@ -568,7 +568,7 @@ We find LoRA to be sufficient for finetuning the stage I model as the low resolu

|

||||

For common and/or not-visually complex object concepts, you can get away without finetuning the upscaler. Just be sure to adjust the prompt passed to the

|

||||

upscaler to remove the new token from the instance prompt. I.e. if your stage I prompt is "a sks dog", use "a dog" for your stage II prompt.
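The prompt adjustment described above can be sketched as a small string helper. This is only an illustration; `stage_two_prompt` is a hypothetical name, and it assumes the new token appears as a standalone whitespace-separated word in the stage I prompt.

```python
def stage_two_prompt(stage_one_prompt, new_token):
    """Drop the newly learned token so the upscaler sees only the generic concept."""
    words = [word for word in stage_one_prompt.split() if word != new_token]
    return " ".join(words)


upscaler_prompt = stage_two_prompt("a sks dog", "sks")
print(upscaler_prompt)
```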

|

||||

|

||||

For finegrained detail like faces that aren't present in the original training set, we find that full finetuning of the stage II upscaler is better than

LoRA finetuning of stage II.

|

||||

|

||||

For finegrained detail like faces, we find that lower learning rates along with larger batch sizes work best.

|

||||

@@ -647,7 +647,7 @@ python train_dreambooth_lora.py \

|

||||

--resolution=256 \

|

||||

--train_batch_size=4 \

|

||||

--gradient_accumulation_steps=1 \

|

||||

--learning_rate=1e-6 \

|

||||

--learning_rate=1e-6 \

|

||||

--max_train_steps=2000 \

|

||||

--validation_prompt="a sks dog" \

|

||||

--validation_epochs=100 \

|

||||

@@ -663,9 +663,9 @@ python train_dreambooth_lora.py \

|

||||

`--skip_save_text_encoder`: When training the full model, this will skip saving the entire T5 with the finetuned model. You can still load the pipeline

|

||||

with a T5 loaded from the original model.

|

||||

|

||||

`--use_8bit_adam`: Due to the size of the optimizer states, we recommend training the full XL IF model with 8-bit Adam.

|

||||

|

||||

`--learning_rate=1e-7`: For full dreambooth, IF requires very low learning rates. With higher learning rates model quality will degrade. Note that it is

|

||||

`--learning_rate=1e-7`: For full dreambooth, IF requires very low learning rates. With higher learning rates model quality will degrade. Note that it is

|

||||

likely the learning rate can be increased with larger batch sizes.

|

||||

|

||||

Using 8bit adam and a batch size of 4, the model can be trained in ~48 GB VRAM.

|

||||

@@ -741,4 +741,4 @@ accelerate launch train_dreambooth.py \

|

||||

|

||||

## Stable Diffusion XL

|

||||

|

||||

We support fine-tuning of the UNet shipped in [Stable Diffusion XL](https://huggingface.co/papers/2307.01952) with DreamBooth and LoRA via the `train_dreambooth_lora_sdxl.py` script. Please refer to the docs [here](./README_sdxl.md).

|

||||

We support fine-tuning of the UNet shipped in [Stable Diffusion XL](https://huggingface.co/papers/2307.01952) with DreamBooth and LoRA via the `train_dreambooth_lora_sdxl.py` script. Please refer to the docs [here](./README_sdxl.md).

|

||||

|

||||

@@ -34,7 +34,7 @@ For this example we want to directly store the trained LoRA embeddings on the Hu

|

||||

|

||||

___

|

||||

|

||||

### Pokemon example

|

||||

### Naruto example

|

||||

|

||||

For all our examples, we will directly store the trained weights on the Hub, so we need to be logged in and add the `--push_to_hub` flag. In order to do that, you have to be a registered user on the 🤗 Hugging Face Hub, and you'll also need to use an access token for the code to work. For more information on access tokens, please refer to the [User Access Tokens](https://huggingface.co/docs/hub/security-tokens) guide.

|

||||

|

||||

@@ -44,13 +44,13 @@ Run the following command to authenticate your token

|

||||

huggingface-cli login

|

||||

```

|

||||

|

||||

We also use [Weights and Biases](https://docs.wandb.ai/quickstart) logging by default, because it is really useful to monitor the training progress by regularly generating sample images during training. To install wandb, run

|

||||

We also use [Weights and Biases](https://docs.wandb.ai/quickstart) logging by default, because it is really useful to monitor the training progress by regularly generating sample images during training. To install wandb, run

|

||||

|

||||

```bash

|

||||

pip install wandb

|

||||

```

|

||||

|

||||

To disable wandb logging, remove the `--report_to="wandb"` and `--validation_prompts="A robot pokemon, 4k photo"` flags from the examples below.

|

||||

To disable wandb logging, remove the `--report_to="wandb"` and `--validation_prompts="A robot naruto, 4k photo"` flags from the examples below.

|

||||

|

||||

#### Fine-tune decoder

|

||||

<br>

|

||||

@@ -70,10 +70,10 @@ accelerate launch --mixed_precision="fp16" train_text_to_image_decoder.py \

|

||||

--max_grad_norm=1 \

|

||||

--checkpoints_total_limit=3 \

|

||||

--lr_scheduler="constant" --lr_warmup_steps=0 \

|

||||

--validation_prompts="A robot pokemon, 4k photo" \

|

||||

--validation_prompts="A robot naruto, 4k photo" \

|

||||

--report_to="wandb" \

|

||||

--push_to_hub \

|

||||

--output_dir="kandi2-decoder-pokemon-model"

|

||||

--output_dir="kandi2-decoder-naruto-model"

|

||||

```

|

||||

<!-- accelerate_snippet_end -->

|

||||

|

||||

@@ -95,14 +95,14 @@ accelerate launch --mixed_precision="fp16" train_text_to_image_decoder.py \

|

||||

--max_grad_norm=1 \

|

||||

--checkpoints_total_limit=3 \

|

||||

--lr_scheduler="constant" --lr_warmup_steps=0 \

|

||||

--validation_prompts="A robot pokemon, 4k photo" \

|

||||

--validation_prompts="A robot naruto, 4k photo" \

|

||||

--report_to="wandb" \

|

||||

--push_to_hub \

|

||||

--output_dir="kandi22-decoder-pokemon-model"

|

||||

--output_dir="kandi22-decoder-naruto-model"

|

||||

```

|

||||

|

||||

|

||||

Once the training is finished the model will be saved in the `output_dir` specified in the command. In this example it's `kandi22-decoder-pokemon-model`. To load the fine-tuned model for inference just pass that path to `AutoPipelineForText2Image`

|

||||

Once the training is finished the model will be saved in the `output_dir` specified in the command. In this example it's `kandi22-decoder-naruto-model`. To load the fine-tuned model for inference just pass that path to `AutoPipelineForText2Image`

|

||||

|

||||

```python

|

||||

from diffusers import AutoPipelineForText2Image

|

||||

@@ -111,9 +111,9 @@ import torch

|

||||

pipe = AutoPipelineForText2Image.from_pretrained(output_dir, torch_dtype=torch.float16)

|

||||

pipe.enable_model_cpu_offload()

|

||||

|

||||

prompt='A robot pokemon, 4k photo'

|

||||

prompt='A robot naruto, 4k photo'

|

||||

images = pipe(prompt=prompt).images

|

||||

images[0].save("robot-pokemon.png")

|

||||

images[0].save("robot-naruto.png")

|

||||

```

|

||||

|

||||

Checkpoints only save the unet, so to run inference from a checkpoint, just load the unet

|

||||

@@ -127,11 +127,11 @@ unet = UNet2DConditionModel.from_pretrained(model_path + "/checkpoint-<N>/unet")

|

||||

pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", unet=unet, torch_dtype=torch.float16)

|

||||

pipe.enable_model_cpu_offload()

|

||||

|

||||

image = pipe(prompt="A robot pokemon, 4k photo").images[0]

|

||||

image.save("robot-pokemon.png")

|

||||

image = pipe(prompt="A robot naruto, 4k photo").images[0]

|

||||

image.save("robot-naruto.png")

|

||||

```

|

||||

|

||||

#### Fine-tune prior

|

||||

#### Fine-tune prior

|

||||

|

||||

You can fine-tune the Kandinsky prior model with `train_text_to_image_prior.py` script. Note that we currently do not support `--gradient_checkpointing` for prior model fine-tuning.

|

||||

|

||||

@@ -151,15 +151,15 @@ accelerate launch --mixed_precision="fp16" train_text_to_image_prior.py \

|

||||

--max_grad_norm=1 \

|

||||

--checkpoints_total_limit=3 \

|

||||

--lr_scheduler="constant" --lr_warmup_steps=0 \

|

||||

--validation_prompts="A robot pokemon, 4k photo" \

|

||||

--validation_prompts="A robot naruto, 4k photo" \

|

||||

--report_to="wandb" \

|

||||

--push_to_hub \

|

||||

--output_dir="kandi2-prior-pokemon-model"

|

||||

--output_dir="kandi2-prior-naruto-model"

|

||||

```

|

||||

<!-- accelerate_snippet_end -->

|

||||

|

||||

|

||||

To perform inference with the fine-tuned prior model, you will need to first create a prior pipeline by passing the `output_dir` to `DiffusionPipeline`. Then create a `KandinskyV22CombinedPipeline` from a pretrained or fine-tuned decoder checkpoint along with all the modules of the prior pipeline you just created.

|

||||

To perform inference with the fine-tuned prior model, you will need to first create a prior pipeline by passing the `output_dir` to `DiffusionPipeline`. Then create a `KandinskyV22CombinedPipeline` from a pretrained or fine-tuned decoder checkpoint along with all the modules of the prior pipeline you just created.

|

||||

|

||||

```python

|

||||

from diffusers import AutoPipelineForText2Image, DiffusionPipeline

|

||||

@@ -170,12 +170,12 @@ prior_components = {"prior_" + k: v for k,v in pipe_prior.components.items()}

|

||||

pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", **prior_components, torch_dtype=torch.float16)

|

||||

|

||||

pipe.enable_model_cpu_offload()

|

||||

prompt='A robot pokemon, 4k photo'

|

||||

prompt='A robot naruto, 4k photo'

|

||||

images = pipe(prompt=prompt, negative_prompt=negative_prompt).images

|

||||

images[0]

|

||||

```

|

||||

|

||||

If you want to use a fine-tuned decoder checkpoint along with your fine-tuned prior checkpoint, you can simply replace the "kandinsky-community/kandinsky-2-2-decoder" in the above code with your custom model repo name. Note that in order to be able to create a `KandinskyV22CombinedPipeline`, your model repository needs to have a prior tag. If you have created your model repo using our training script, the prior tag is automatically included.

|

||||

|

||||

#### Training with multiple GPUs

|

||||

|

||||

@@ -196,10 +196,10 @@ accelerate launch --mixed_precision="fp16" --multi_gpu train_text_to_image_deco

|

||||

--max_grad_norm=1 \

|

||||

--checkpoints_total_limit=3 \

|

||||

--lr_scheduler="constant" --lr_warmup_steps=0 \

|

||||

--validation_prompts="A robot pokemon, 4k photo" \

|

||||

--validation_prompts="A robot naruto, 4k photo" \

|

||||

--report_to="wandb" \

|

||||

--push_to_hub \

|

||||

--output_dir="kandi2-decoder-pokemon-model"

|

||||

--output_dir="kandi2-decoder-naruto-model"

|

||||

```

|

||||

|

||||

|

||||

@@ -227,10 +227,10 @@ on consumer GPUs like Tesla T4, Tesla V100.

|

||||

|

||||

### Training

|

||||

|

||||

First, you need to set up your development environment as explained in the [installation](#installing-the-dependencies). Make sure to set the `MODEL_NAME` and `DATASET_NAME` environment variables. Here, we will use [Kandinsky 2.2](https://huggingface.co/kandinsky-community/kandinsky-2-2-decoder) and the [Pokemons dataset](https://huggingface.co/datasets/lambdalabs/naruto-blip-captions).

|

||||

First, you need to set up your development environment as explained in the [installation](#installing-the-dependencies). Make sure to set the `MODEL_NAME` and `DATASET_NAME` environment variables. Here, we will use [Kandinsky 2.2](https://huggingface.co/kandinsky-community/kandinsky-2-2-decoder) and the [Narutos dataset](https://huggingface.co/datasets/lambdalabs/naruto-blip-captions).

|

||||

|

||||

|

||||

#### Train decoder

|

||||

#### Train decoder

|

||||

|

||||

```bash

|

||||

export DATASET_NAME="lambdalabs/naruto-blip-captions"

|

||||

@@ -244,7 +244,7 @@ accelerate launch --mixed_precision="fp16" train_text_to_image_decoder_lora.py \

|

||||

--seed=42 \

|

||||

--rank=4 \

|

||||

--gradient_checkpointing \

|

||||

--output_dir="kandi22-decoder-pokemon-lora" \

|

||||

--output_dir="kandi22-decoder-naruto-lora" \

|

||||

--validation_prompt="cute dragon creature" --report_to="wandb" \

|

||||

--push_to_hub \

|

||||

```

|

||||

@@ -262,7 +262,7 @@ accelerate launch --mixed_precision="fp16" train_text_to_image_prior_lora.py \

|

||||

--learning_rate=1e-04 --lr_scheduler="constant" --lr_warmup_steps=0 \

|

||||

--seed=42 \

|

||||

--rank=4 \

|

||||

--output_dir="kandi22-prior-pokemon-lora" \

|

||||

--output_dir="kandi22-prior-naruto-lora" \

|

||||

--validation_prompt="cute dragon creature" --report_to="wandb" \

|

||||

--push_to_hub \

|

||||

```

|

||||

@@ -274,7 +274,7 @@ accelerate launch --mixed_precision="fp16" train_text_to_image_prior_lora.py \

|

||||

|

||||

#### Inference using fine-tuned LoRA checkpoint for decoder

|

||||

|

||||

Once you have trained a Kandinsky decoder model using the above command, inference can be done with the `AutoPipelineForText2Image` after loading the trained LoRA weights. You need to pass the `output_dir` for loading the LoRA weights, which in this case is `kandi22-decoder-pokemon-lora`.

|

||||

Once you have trained a Kandinsky decoder model using the above command, inference can be done with the `AutoPipelineForText2Image` after loading the trained LoRA weights. You need to pass the `output_dir` for loading the LoRA weights, which in this case is `kandi22-decoder-naruto-lora`.

|

||||

|

||||

|

||||

```python

|

||||

@@ -285,9 +285,9 @@ pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-

|

||||

pipe.unet.load_attn_procs(output_dir)

|

||||

pipe.enable_model_cpu_offload()

|

||||

|

||||

prompt='A robot pokemon, 4k photo'

|

||||

prompt='A robot naruto, 4k photo'

|

||||

image = pipe(prompt=prompt).images[0]

|

||||

image.save("robot_pokemon.png")

|

||||

image.save("robot_naruto.png")

|

||||

```

|

||||

|

||||

#### Inference using fine-tuned LoRA checkpoint for prior

|

||||

@@ -300,9 +300,9 @@ pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-

|

||||

pipe.prior_prior.load_attn_procs(output_dir)

|

||||

pipe.enable_model_cpu_offload()

|

||||

|

||||

prompt='A robot pokemon, 4k photo'

|

||||

prompt='A robot naruto, 4k photo'

|

||||

image = pipe(prompt=prompt).images[0]

|

||||

image.save("robot_pokemon.png")

|

||||

image.save("robot_naruto.png")

|

||||

image

|

||||

```

|

||||

|

||||

|

||||

@@ -1,16 +1,16 @@

|

||||

# Overview

|

||||

|

||||

These examples show how to run [Diffuser](https://arxiv.org/abs/2205.09991) in Diffusers.

|

||||

These examples show how to run [Diffuser](https://arxiv.org/abs/2205.09991) in Diffusers.

|

||||

There are two ways to use the script, `run_diffuser_locomotion.py`.

|

||||

|

||||

The key option is a change of the variable `n_guide_steps`.

|

||||

The key option is a change of the variable `n_guide_steps`.

|

||||

When `n_guide_steps=0`, the trajectories are sampled from the diffusion model, but not fine-tuned to maximize reward in the environment.

|

||||

By default, `n_guide_steps=2` to match the original implementation.

|

||||

|

||||

|

||||

|

||||

You will need some RL specific requirements to run the examples:

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install -f https://download.pytorch.org/whl/torch_stable.html \

|

||||

free-mujoco-py \

|

||||

einops \

|

||||

|

||||

@@ -6,7 +6,7 @@ Updating them to the most recent version of the library will require some work.

|

||||

|

||||

To use any of them, just run the command

|

||||

|

||||

```

|

||||

```sh

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

inside the folder of your choice.

|

||||

|

||||

@@ -19,7 +19,7 @@ on consumer GPUs like Tesla T4, Tesla V100.

|

||||

|

||||

### Training

|

||||

|

||||

First, you need to set up your development environment as is explained in the [installation section](#installing-the-dependencies). Make sure to set the `MODEL_NAME` and `DATASET_NAME` environment variables. Here, we will use [Stable Diffusion v1-4](https://hf.co/CompVis/stable-diffusion-v1-4) and the [Pokemons dataset](https://huggingface.co/datasets/lambdalabs/naruto-blip-captions).

|

||||

First, you need to set up your development environment as is explained in the [installation section](#installing-the-dependencies). Make sure to set the `MODEL_NAME` and `DATASET_NAME` environment variables. Here, we will use [Stable Diffusion v1-4](https://hf.co/CompVis/stable-diffusion-v1-4) and the [Narutos dataset](https://huggingface.co/datasets/lambdalabs/naruto-blip-captions).

|

||||

|

||||

**___Note: Change the `resolution` to 768 if you are using the [stable-diffusion-2](https://huggingface.co/stabilityai/stable-diffusion-2) 768x768 model.___**

|

||||

|

||||

@@ -30,7 +30,7 @@ export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

||||

export DATASET_NAME="lambdalabs/naruto-blip-captions"

|

||||

```

|

||||

|

||||

For this example we want to directly store the trained LoRA embeddings on the Hub, so

|

||||

For this example we want to directly store the trained LoRA embeddings on the Hub, so

|

||||

we need to be logged in and add the `--push_to_hub` flag.

|

||||

|

||||

```bash

|

||||

@@ -48,7 +48,7 @@ accelerate launch --mixed_precision="fp16" train_text_to_image_lora.py \

|

||||

--num_train_epochs=100 --checkpointing_steps=5000 \

|

||||

--learning_rate=1e-04 --lr_scheduler="constant" --lr_warmup_steps=0 \

|

||||

--seed=42 \

|

||||

--output_dir="sd-pokemon-model-lora" \

|

||||

--output_dir="sd-naruto-model-lora" \

|

||||

--validation_prompt="cute dragon creature" --report_to="wandb"

|

||||

--use_peft \

|

||||

--lora_r=4 --lora_alpha=32 \

|

||||

@@ -61,12 +61,12 @@ The above command will also run inference as fine-tuning progresses and log the

|

||||

|

||||

The final LoRA embedding weights have been uploaded to [sayakpaul/sd-model-finetuned-lora-t4](https://huggingface.co/sayakpaul/sd-model-finetuned-lora-t4). **___Note: [The final weights](https://huggingface.co/sayakpaul/sd-model-finetuned-lora-t4/blob/main/pytorch_lora_weights.bin) are only 3 MB in size, which is orders of magnitudes smaller than the original model.___**

|

||||

|

||||

You can check some inference samples that were logged during the course of the fine-tuning process [here](https://wandb.ai/sayakpaul/text2image-fine-tune/runs/q4lc0xsw).

|

||||

You can check some inference samples that were logged during the course of the fine-tuning process [here](https://wandb.ai/sayakpaul/text2image-fine-tune/runs/q4lc0xsw).

|

||||

|

||||

### Inference

|

||||

|

||||

Once you have trained a model using the above command, inference can be done simply using the `StableDiffusionPipeline` after loading the trained LoRA weights. You

|

||||

need to pass the `output_dir` for loading the LoRA weights which, in this case, is `sd-pokemon-model-lora`.

|

||||

Once you have trained a model using the above command, inference can be done simply using the `StableDiffusionPipeline` after loading the trained LoRA weights. You

|

||||

need to pass the `output_dir` for loading the LoRA weights which, in this case, is `sd-naruto-model-lora`.

|

||||

|

||||

```python

|

||||

from diffusers import StableDiffusionPipeline

|

||||

@@ -77,7 +77,7 @@ pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4",

|

||||

pipe.unet.load_attn_procs(model_path)

|

||||

pipe.to("cuda")

|

||||

|

||||

prompt = "A pokemon with green eyes and red legs."

|

||||

prompt = "A naruto with green eyes and red legs."

|

||||

image = pipe(prompt, num_inference_steps=30, guidance_scale=7.5).images[0]

|

||||

image.save("pokemon.png")

|

||||

image.save("naruto.png")

|

||||

```

|

||||

@@ -32,7 +32,7 @@ And initialize an [🤗Accelerate](https://github.com/huggingface/accelerate/) e

|

||||

accelerate config

|

||||

```

|

||||

|

||||

### Pokemon example

|

||||

### Naruto example

|

||||

|

||||

You need to accept the model license before downloading or using the weights. In this example we'll use model version `v1-4`, so you'll need to visit [its card](https://huggingface.co/CompVis/stable-diffusion-v1-4), read the license and tick the checkbox if you agree.

|

||||

|

||||

@@ -51,7 +51,7 @@ If you have already cloned the repo, then you won't need to go through these ste

|

||||

## Use ONNXRuntime to accelerate training

|

||||

In order to leverage onnxruntime to accelerate training, please use train_text_to_image.py

|

||||

|

||||

The command to train a DDPM UNetCondition model on the Pokemon dataset with onnxruntime:

|

||||