mirror of

https://github.com/huggingface/diffusers.git

synced 2026-01-27 17:22:53 +03:00

[Docs] Korean translation update (#4022)

* feat) optimization kr translation
* fix) typo, italic setting
* feat) dreambooth, text2image kr
* feat) lora kr
* fix) LoRA
* fix) fp16 fix
* fix) doc-builder style
* fix) fp16: revise some wording
* fix) fp16 style fix
* fix) opt, training docs update
* merge conflict
* Fix community pipelines (#3266)
* Allow disabling torch 2_0 attention (#3273)
* Allow disabling torch 2_0 attention
* make style
* Update src/diffusers/models/attention.py
* Release: v0.16.1
* feat) toctree update
* feat) toctree update
* Fix custom releases (#3708)
* Fix custom releases
* make style
* Fix loading if unexpected keys are present (#3720)
* Fix loading
* make style
* Release: v0.17.0
* opt_overview
* commit
* Create pipeline_overview.mdx
* unconditional_image_generatoin_1stDraft
* ✨ Add translation for write_own_pipeline.mdx
* conditional: literal translation; unconditional
* unconditional_image_generation first draft
* reviese
* Update pipeline_overview.mdx
* revise-2
* ♻️ translation fixed for write_own_pipeline.mdx
* complete translate basic_training.mdx
* other-formats.mdx translation complete
* fix tutorials/basic_training.mdx
* other-formats revision
* inpaint Korean translation
* depth2img translation
* translate training/adapt-a-model.mdx
* revised_all
* feedback taken
* using_safetensors.mdx_first_draft
* custom_pipeline_examples.mdx_first_draft
* img2img Korean translation complete
* tutorial_overview edit
* reusing_seeds
* torch2.0
* translate complete
* fix) apply the terminology unification conventions
* [fix] revise translation based on feedback
* fix typos + fix parts that violated conventions
* typo, style fix
* toctree update
* copyright fix
* toctree fix
* Update _toctree.yml

---------

Co-authored-by: Chanran Kim <seriousran@gmail.com>
Co-authored-by: apolinário <joaopaulo.passos@gmail.com>
Co-authored-by: Patrick von Platen <patrick.v.platen@gmail.com>
Co-authored-by: Lee, Hongkyu <75282888+howsmyanimeprofilepicture@users.noreply.github.com>
Co-authored-by: hyeminan <adios9709@gmail.com>
Co-authored-by: movie5 <oyh5800@naver.com>
Co-authored-by: idra79haza <idra79haza@github.com>
Co-authored-by: Jihwan Kim <cuchoco@naver.com>
Co-authored-by: jungwoo <boonkoonheart@gmail.com>
Co-authored-by: jjuun0 <jh061993@gmail.com>
Co-authored-by: szjung-test <93111772+szjung-test@users.noreply.github.com>
Co-authored-by: idra79haza <37795618+idra79haza@users.noreply.github.com>
Co-authored-by: howsmyanimeprofilepicture <howsmyanimeprofilepicture@gmail.com>
Co-authored-by: hoswmyanimeprofilepicture <hoswmyanimeprofilepicture@gmail.com>


@@ -8,14 +8,69 @@

|

||||

- local: installation

|

||||

title: "Installation"

|

||||

title: "Get started"

|

||||

|

||||

- sections:

|

||||

- local: tutorials/tutorial_overview

|

||||

title: Overview

|

||||

- local: using-diffusers/write_own_pipeline

|

||||

title: Understanding models and schedulers

|

||||

- local: tutorials/basic_training

|

||||

title: Train a diffusion model

|

||||

title: Tutorials

|

||||

- sections:

|

||||

- sections:

|

||||

- local: in_translation

|

||||

title: Overview

|

||||

- local: in_translation

|

||||

- local: using-diffusers/loading

|

||||

title: Loading pipelines, models, and schedulers

|

||||

- local: using-diffusers/schedulers

|

||||

title: Loading and comparing different schedulers

|

||||

- local: using-diffusers/custom_pipeline_overview

|

||||

title: Loading community pipelines

|

||||

- local: using-diffusers/using_safetensors

|

||||

title: Loading safetensors

|

||||

- local: using-diffusers/other-formats

|

||||

title: Loading Stable Diffusion in other formats

|

||||

title: Loading & Hub

|

||||

- sections:

|

||||

- local: using-diffusers/pipeline_overview

|

||||

title: Overview

|

||||

- local: using-diffusers/unconditional_image_generation

|

||||

title: Unconditional image generation

|

||||

- local: in_translation

|

||||

title: Text-to-image generation

|

||||

- local: using-diffusers/img2img

|

||||

title: Text-guided image-to-image

|

||||

- local: using-diffusers/inpaint

|

||||

title: Text-guided image inpainting

|

||||

- local: using-diffusers/depth2img

|

||||

title: Text-guided depth-to-image

|

||||

- local: in_translation

|

||||

title: Textual inversion

|

||||

- local: in_translation

|

||||

title: Distributed inference with multiple GPUs

|

||||

- local: using-diffusers/reusing_seeds

|

||||

title: Improving image quality with deterministic generation

|

||||

- local: in_translation

|

||||

title: Creating reproducible pipelines

|

||||

- local: using-diffusers/custom_pipeline_examples

|

||||

title: Community pipelines

|

||||

- local: in_translation

|

||||

title: How to contribute a community pipeline

|

||||

- local: in_translation

|

||||

title: JAX/Flax에서의 Stable Diffusion

|

||||

- local: in_translation

|

||||

title: Weighting Prompts

|

||||

title: Pipelines for inference

|

||||

- sections:

|

||||

- local: training/overview

|

||||

title: Overview

|

||||

- local: in_translation

|

||||

title: Creating a dataset for training

|

||||

- local: training/adapt_a_model

|

||||

title: Adapting a model to a new task

|

||||

- local: training/unconditional_training

|

||||

title: Unconditional image generation

|

||||

- local: training/text_inversion

|

||||

title: Textual Inversion

|

||||

- local: training/dreambooth

|

||||

title: DreamBooth

|

||||

@@ -27,13 +82,16 @@

|

||||

title: ControlNet

|

||||

- local: in_translation

|

||||

title: InstructPix2Pix training

|

||||

title: Training

|

||||

- local: in_translation

|

||||

title: Custom Diffusion

|

||||

title: Training

|

||||

title: Using Diffusers

|

||||

- sections:

|

||||

- local: in_translation

|

||||

- local: optimization/opt_overview

|

||||

title: Overview

|

||||

- local: optimization/fp16

|

||||

title: Memory and speed

|

||||

- local: in_translation

|

||||

- local: optimization/torch2.0

|

||||

title: Torch 2.0 support

|

||||

- local: optimization/xformers

|

||||

title: xFormers

|

||||

@@ -41,8 +99,12 @@

|

||||

title: ONNX

|

||||

- local: optimization/open_vino

|

||||

title: OpenVINO

|

||||

- local: in_translation

|

||||

title: Core ML

|

||||

- local: optimization/mps

|

||||

title: MPS

|

||||

- local: optimization/habana

|

||||

title: Habana Gaudi

|

||||

- local: in_translation

|

||||

title: Token Merging

|

||||

title: Optimization/special hardware

|

||||

|

||||

@@ -59,7 +59,7 @@ torch.backends.cuda.matmul.allow_tf32 = True

|

||||

|

||||

## Half-precision weights

|

||||

|

||||

To save more GPU memory and get more speed, you can load and run the model weights directly in half precision.
This involves loading the float16 version of the weights, which is saved in a branch named `fp16`, and telling PyTorch to use the `float16` type when loading them.

|

||||

|

||||

```Python

|

||||

|

||||

17

docs/source/ko/optimization/opt_overview.mdx

Normal file


@@ -0,0 +1,17 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

||||

the License. You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

|

||||

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# Overview

Generating high-quality outputs is computationally intensive: each iterative step, which goes from a noisier output to a less noisy one, requires a lot of computation. One of 🧨 Diffusers' goals is to make this technology widely accessible to everyone, which includes enabling fast inference on consumer and specialized hardware.

This section covers tips and tricks, such as half-precision weights and sliced attention, for optimizing inference speed and reducing memory consumption. You can also learn how to speed up your PyTorch code with [`torch.compile`](https://pytorch.org/tutorials/intermediate/torch_compile_tutorial.html) or [ONNX Runtime](https://onnxruntime.ai/docs/), and how to enable memory-efficient attention with [xFormers](https://facebookresearch.github.io/xformers/). There are also guides for running inference on specific hardware such as Apple Silicon, Intel, or Habana processors.

|

||||

445

docs/source/ko/optimization/torch2.0.mdx

Normal file


@@ -0,0 +1,445 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

||||

the License. You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

|

||||

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# Accelerated PyTorch 2.0 support in Diffusers

Starting from version `0.13.0`, Diffusers supports the latest optimizations from [PyTorch 2.0](https://pytorch.org/get-started/pytorch-2.0/). These include:

1. Support for accelerated transformers with memory-efficient attention, with no extra dependencies such as `xformers` required
2. Support for [torch.compile](https://pytorch.org/tutorials/intermediate/torch_compile_tutorial.html), which compiles individual models for additional performance gains

|

||||

|

||||

|

||||

## Installation

To use the accelerated attention implementation and `torch.compile()`, make sure you have the latest version of PyTorch 2.0 installed from pip and diffusers 0.13.0 or later. As explained below, diffusers uses the optimized attention processor ([`AttnProcessor2_0`](https://github.com/huggingface/diffusers/blob/1a5797c6d4491a879ea5285c4efc377664e0332d/src/diffusers/models/attention_processor.py#L798)) when PyTorch 2.0 is available.

|

||||

|

||||

```bash

|

||||

pip install --upgrade torch diffusers

|

||||

```
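Before relying on these optimizations, it can help to confirm that the environment actually provides them. The following is a minimal sketch and not part of the Diffusers documentation: the helper names `version_at_least` and `sdpa_available` are hypothetical, and the check assumes only that PyTorch exposes `torch.__version__` and `torch.nn.functional.scaled_dot_product_attention`.

```python
import importlib.util
import re


def version_at_least(version: str, minimum: tuple) -> bool:
    """Compare the leading numeric components of a version string such as '2.0.1+cu118'."""
    public_part = version.split("+")[0]  # drop local build tags like '+cu118'
    numbers = tuple(int(n) for n in re.findall(r"\d+", public_part)[: len(minimum)])
    return numbers >= minimum


def sdpa_available() -> bool:
    """Return True if PyTorch >= 2.0 with scaled_dot_product_attention can be imported."""
    if importlib.util.find_spec("torch") is None:
        return False  # PyTorch is not installed in this environment
    import torch

    return version_at_least(torch.__version__, (2, 0)) and hasattr(
        torch.nn.functional, "scaled_dot_product_attention"
    )


if __name__ == "__main__":
    print("PyTorch 2.0 attention available:", sdpa_available())
```

If the check returns `False`, upgrading with the `pip` command above is the first thing to try.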

|

||||

|

||||

## Using accelerated transformers and `torch.compile`

1. **Accelerated transformers implementation**

PyTorch 2.0 includes an optimized and memory-efficient attention implementation through the [`torch.nn.functional.scaled_dot_product_attention`](https://pytorch.org/docs/master/generated/torch.nn.functional.scaled_dot_product_attention) function, which automatically enables several optimizations depending on the inputs and the GPU type. This is similar to the `memory_efficient_attention` from [xFormers](https://github.com/facebookresearch/xformers), but built natively into PyTorch.

These optimizations are enabled by default in Diffusers if PyTorch 2.0 is installed and `torch.nn.functional.scaled_dot_product_attention` is available. To use them, just install `torch 2.0` and use the pipeline. For example:

|

||||

|

||||

```Python
import torch
from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)
pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"
image = pipe(prompt).images[0]
```

|

||||

|

||||

To enable it explicitly (which is not required), you can do so as shown below.

|

||||

|

||||

```diff
  import torch
  from diffusers import DiffusionPipeline
+ from diffusers.models.attention_processor import AttnProcessor2_0

  pipe = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16).to("cuda")
+ pipe.unet.set_attn_processor(AttnProcessor2_0())

  prompt = "a photo of an astronaut riding a horse on mars"
  image = pipe(prompt).images[0]
```

|

||||

|

||||

This should be as fast and memory-efficient as `xFormers`. See the [benchmark](#benchmark) for details.

If you want to make the pipeline more deterministic, or if you need to convert a fine-tuned model to another format such as [Core ML](https://huggingface.co/docs/diffusers/v0.16.0/en/optimization/coreml#how-to-run-stable-diffusion-with-core-ml), you can revert to the vanilla attention processor ([`AttnProcessor`](https://github.com/huggingface/diffusers/blob/1a5797c6d4491a879ea5285c4efc377664e0332d/src/diffusers/models/attention_processor.py#L402)). To use the regular attention processor, you can use the [`~diffusers.UNet2DConditionModel.set_default_attn_processor`] function:

|

||||

|

||||

```Python
import torch
from diffusers import DiffusionPipeline
from diffusers.models.attention_processor import AttnProcessor

pipe = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16).to("cuda")
pipe.unet.set_default_attn_processor()

prompt = "a photo of an astronaut riding a horse on mars"
image = pipe(prompt).images[0]
```

|

||||

|

||||

2. **torch.compile**

|

||||

|

||||

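For an additional speed-up, you can use the new `torch.compile` feature. Since the UNet of the pipeline is usually the most computationally expensive component, we wrap the `unet` with `torch.compile` and leave the remaining sub-models (text encoder and VAE) as they are. For more information and other options, refer to the [torch compile docs](https://pytorch.org/tutorials/intermediate/torch_compile_tutorial.html).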

|

||||

|

||||

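```python
# `pipe` and `prompt` come from the examples above; `steps` and `batch_size`
# are placeholders that you need to define before running this snippet.
pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)
images = pipe(prompt, num_inference_steps=steps, num_images_per_prompt=batch_size).images
```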

|

||||

|

||||

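Depending on the GPU type, `compile()` can yield an _additional speed-up_ of **5% - 300%** on top of the accelerated transformers optimizations. Note, however, that compilation is able to squeeze more performance gains out of newer GPU architectures such as Ampere (A100, 3090), Ada (4090), and Hopper (H100).

Compilation takes some time to complete, so it is best suited to situations where you prepare your pipeline once and then perform the same type of inference operations multiple times. Calling the compiled pipeline on a different image size retriggers compilation, which can be expensive.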
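Because the first call to a compiled model pays the compilation cost, it is worth timing the warm-up call separately from the calls that follow. The sketch below illustrates the idea with plain Python and a stand-in workload; `time_calls`, `split_warmup`, and `fake_inference` are hypothetical names, not Diffusers or PyTorch APIs.

```python
import time


def time_calls(fn, num_calls: int) -> list:
    """Call `fn` repeatedly and return the wall-clock duration of each call in seconds."""
    durations = []
    for _ in range(num_calls):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def split_warmup(durations: list) -> tuple:
    """Separate the first (warm-up) call from the average of the remaining calls."""
    warmup, rest = durations[0], durations[1:]
    steady = sum(rest) / len(rest) if rest else float("nan")
    return warmup, steady


if __name__ == "__main__":
    # Stand-in workload: a slow first call (simulating compilation), then fast calls.
    state = {"first": True}

    def fake_inference():
        time.sleep(0.05 if state["first"] else 0.005)
        state["first"] = False

    warmup, steady = split_warmup(time_calls(fake_inference, 4))
    print(f"warm-up call: {warmup:.3f}s, steady-state call: {steady:.3f}s")
```

With a real pipeline, `fake_inference` would be replaced by something like `lambda: pipe(prompt)`, and on a GPU you would typically synchronize the device before reading the clock so that asynchronous kernels are included in the measurement.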

|

||||

|

||||

|

||||

## Benchmark

We conducted a comprehensive benchmark of PyTorch 2.0's efficient attention implementation and `torch.compile` across different GPUs and batch sizes for five of the most used pipelines. We used `diffusers 0.17.0.dev0`, which [makes sure `torch.compile()` is leveraged optimally](https://github.com/huggingface/diffusers/pull/3313).

### Benchmarking code

|

||||

|

||||

#### Stable Diffusion text-to-image

|

||||

|

||||

```python
from diffusers import DiffusionPipeline
import torch

path = "runwayml/stable-diffusion-v1-5"

run_compile = True  # Set True / False

pipe = DiffusionPipeline.from_pretrained(path, torch_dtype=torch.float16)
pipe = pipe.to("cuda")
pipe.unet.to(memory_format=torch.channels_last)

if run_compile:
    print("Run torch compile")
    pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

prompt = "ghibli style, a fantasy landscape with castles"

for _ in range(3):
    images = pipe(prompt=prompt).images
```

|

||||

|

||||

#### Stable Diffusion image-to-image

|

||||

|

||||

```python
from diffusers import StableDiffusionImg2ImgPipeline
import requests
import torch
from PIL import Image
from io import BytesIO

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

response = requests.get(url)
init_image = Image.open(BytesIO(response.content)).convert("RGB")
init_image = init_image.resize((512, 512))

path = "runwayml/stable-diffusion-v1-5"

run_compile = True  # Set True / False

pipe = StableDiffusionImg2ImgPipeline.from_pretrained(path, torch_dtype=torch.float16)
pipe = pipe.to("cuda")
pipe.unet.to(memory_format=torch.channels_last)

if run_compile:
    print("Run torch compile")
    pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

prompt = "ghibli style, a fantasy landscape with castles"

for _ in range(3):
    image = pipe(prompt=prompt, image=init_image).images[0]
```

|

||||

|

||||

#### Stable Diffusion - inpainting

|

||||

|

||||

```python
from diffusers import StableDiffusionInpaintPipeline
import requests
import torch
from PIL import Image
from io import BytesIO

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

def download_image(url):
    response = requests.get(url)
    return Image.open(BytesIO(response.content)).convert("RGB")


img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"
mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

init_image = download_image(img_url).resize((512, 512))
mask_image = download_image(mask_url).resize((512, 512))

path = "runwayml/stable-diffusion-inpainting"

run_compile = True  # Set True / False

pipe = StableDiffusionInpaintPipeline.from_pretrained(path, torch_dtype=torch.float16)
pipe = pipe.to("cuda")
pipe.unet.to(memory_format=torch.channels_last)

if run_compile:
    print("Run torch compile")
    pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

prompt = "ghibli style, a fantasy landscape with castles"

for _ in range(3):
    image = pipe(prompt=prompt, image=init_image, mask_image=mask_image).images[0]
```

|

||||

|

||||

#### ControlNet

|

||||

|

||||

```python
from diffusers import StableDiffusionControlNetPipeline, ControlNetModel
import requests
import torch
from PIL import Image
from io import BytesIO

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

response = requests.get(url)
init_image = Image.open(BytesIO(response.content)).convert("RGB")
init_image = init_image.resize((512, 512))

path = "runwayml/stable-diffusion-v1-5"

run_compile = True  # Set True / False
controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)
pipe = StableDiffusionControlNetPipeline.from_pretrained(
    path, controlnet=controlnet, torch_dtype=torch.float16
)

pipe = pipe.to("cuda")
pipe.unet.to(memory_format=torch.channels_last)
pipe.controlnet.to(memory_format=torch.channels_last)

if run_compile:
    print("Run torch compile")
    pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)
    pipe.controlnet = torch.compile(pipe.controlnet, mode="reduce-overhead", fullgraph=True)

prompt = "ghibli style, a fantasy landscape with castles"

for _ in range(3):
    image = pipe(prompt=prompt, image=init_image).images[0]
```

|

||||

|

||||

#### IF text-to-image + upscaling

|

||||

|

||||

```python
from diffusers import DiffusionPipeline
import torch

run_compile = True  # Set True / False

pipe = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-M-v1.0", variant="fp16", text_encoder=None, torch_dtype=torch.float16)
pipe.to("cuda")
pipe_2 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-II-M-v1.0", variant="fp16", text_encoder=None, torch_dtype=torch.float16)
pipe_2.to("cuda")
pipe_3 = DiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-x4-upscaler", torch_dtype=torch.float16)
pipe_3.to("cuda")


pipe.unet.to(memory_format=torch.channels_last)
pipe_2.unet.to(memory_format=torch.channels_last)
pipe_3.unet.to(memory_format=torch.channels_last)

if run_compile:
    pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)
    pipe_2.unet = torch.compile(pipe_2.unet, mode="reduce-overhead", fullgraph=True)
    pipe_3.unet = torch.compile(pipe_3.unet, mode="reduce-overhead", fullgraph=True)

prompt = "the blue hulk"

prompt_embeds = torch.randn((1, 2, 4096), dtype=torch.float16)
neg_prompt_embeds = torch.randn((1, 2, 4096), dtype=torch.float16)

for _ in range(3):
    image = pipe(prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images
    image_2 = pipe_2(image=image, prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images
    image_3 = pipe_3(prompt=prompt, image=image, noise_level=100).images
```

|

||||

|

||||

To give a sense of the speed-ups that can be obtained with PyTorch 2.0 and `torch.compile()`, the following chart shows the relative speed-up of the [Stable Diffusion text-to-image pipeline](StableDiffusionPipeline) across five different GPU families (with a batch size of 4):

|

||||

|

||||

|

||||

|

||||

To give you an even better idea of how this speed-up holds for the other pipelines presented above, consider the following chart, which shows the benchmarking numbers from an A100 across three different batch sizes (with PyTorch 2.0 nightly and `torch.compile()`):

|

||||

|

||||

|

||||

|

||||

_(The benchmark metric for the charts above is **iterations/second**)_

However, in the interest of transparency, we publish all the benchmarking numbers!

The following tables report the results in terms of the **_number of iterations processed per second_**.
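For reference, an iterations-per-second figure can be derived from a timed run as sketched below. This assumes the metric counts denoising steps completed per second of wall-clock time; the source does not spell out how the numbers were collected, and the helper name and the sample values are hypothetical.

```python
def iterations_per_second(num_inference_steps: int, elapsed_seconds: float) -> float:
    """Throughput of a run: denoising steps completed per second of wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return num_inference_steps / elapsed_seconds


if __name__ == "__main__":
    # Hypothetical run: 50 denoising steps measured at 2.5 seconds in total.
    print(f"{iterations_per_second(50, 2.5):.2f} it/s")
```

Higher values are better for this metric, which is the opposite of a seconds-per-iteration reading.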

|

||||

|

||||

### A100 (batch size: 1)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 21.66 | 23.13 | 44.03 | 49.74 |
| SD - img2img | 21.81 | 22.40 | 43.92 | 46.32 |
| SD - inpaint | 22.24 | 23.23 | 43.76 | 49.25 |
| SD - controlnet | 15.02 | 15.82 | 32.13 | 36.08 |
| IF | 20.21 / <br>13.84 / <br>24.00 | 20.12 / <br>13.70 / <br>24.03 | ❌ | 97.34 / <br>27.23 / <br>111.66 |
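To read a table like the one above as a relative gain, divide the compiled throughput by the uncompiled one. The sketch below does this for two rows of the A100 (batch size: 1) table, using the "torch 2.0 - no compile" and "torch 2.0 - compile" columns; the helper name `speedup_percent` is hypothetical.

```python
def speedup_percent(baseline_its: float, optimized_its: float) -> float:
    """Relative throughput gain of `optimized_its` over `baseline_its`, in percent."""
    return (optimized_its / baseline_its - 1.0) * 100.0


# (torch 2.0 no compile, torch 2.0 compile) in iterations/second, copied from the table above.
a100_batch_1 = {
    "SD - txt2img": (21.66, 44.03),
    "SD - controlnet": (15.02, 32.13),
}

if __name__ == "__main__":
    for name, (no_compile, compiled) in a100_batch_1.items():
        print(f"{name}: {speedup_percent(no_compile, compiled):.1f}% faster with compile")
```

The same helper applies to any pair of columns in the tables that follow, as long as both values are in iterations/second.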

|

||||

|

||||

### A100 (batch size: 4)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 11.6 | 13.12 | 14.62 | 17.27 |
| SD - img2img | 11.47 | 13.06 | 14.66 | 17.25 |
| SD - inpaint | 11.67 | 13.31 | 14.88 | 17.48 |
| SD - controlnet | 8.28 | 9.38 | 10.51 | 12.41 |
| IF | 25.02 | 18.04 | ❌ | 48.47 |

|

||||

|

||||

### A100 (batch size: 16)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 3.04 | 3.6 | 3.83 | 4.68 |
| SD - img2img | 2.98 | 3.58 | 3.83 | 4.67 |
| SD - inpaint | 3.04 | 3.66 | 3.9 | 4.76 |
| SD - controlnet | 2.15 | 2.58 | 2.74 | 3.35 |
| IF | 8.78 | 9.82 | ❌ | 16.77 |

|

||||

|

||||

### V100 (batch size: 1)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 18.99 | 19.14 | 20.95 | 22.17 |
| SD - img2img | 18.56 | 19.18 | 20.95 | 22.11 |
| SD - inpaint | 19.14 | 19.06 | 21.08 | 22.20 |
| SD - controlnet | 13.48 | 13.93 | 15.18 | 15.88 |
| IF | 20.01 / <br>9.08 / <br>23.34 | 19.79 / <br>8.98 / <br>24.10 | ❌ | 55.75 / <br>11.57 / <br>57.67 |

|

||||

|

||||

### V100 (batch size: 4)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 5.96 | 5.89 | 6.83 | 6.86 |
| SD - img2img | 5.90 | 5.91 | 6.81 | 6.82 |
| SD - inpaint | 5.99 | 6.03 | 6.93 | 6.95 |
| SD - controlnet | 4.26 | 4.29 | 4.92 | 4.93 |
| IF | 15.41 | 14.76 | ❌ | 22.95 |

|

||||

|

||||

### V100 (batch size: 16)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 1.66 | 1.66 | 1.92 | 1.90 |
| SD - img2img | 1.65 | 1.65 | 1.91 | 1.89 |
| SD - inpaint | 1.69 | 1.69 | 1.95 | 1.93 |
| SD - controlnet | 1.19 | 1.19 | OOM after warmup | 1.36 |
| IF | 5.43 | 5.29 | ❌ | 7.06 |

|

||||

|

||||

### T4 (batch size: 1)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 6.9 | 6.95 | 7.3 | 7.56 |
| SD - img2img | 6.84 | 6.99 | 7.04 | 7.55 |
| SD - inpaint | 6.91 | 6.7 | 7.01 | 7.37 |
| SD - controlnet | 4.89 | 4.86 | 5.35 | 5.48 |
| IF | 17.42 / <br>2.47 / <br>18.52 | 16.96 / <br>2.45 / <br>18.69 | ❌ | 24.63 / <br>2.47 / <br>23.39 |

|

||||

|

||||

### T4 (batch size: 4)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 1.79 | 1.79 | 2.03 | 1.99 |
| SD - img2img | 1.77 | 1.77 | 2.05 | 2.04 |
| SD - inpaint | 1.81 | 1.82 | 2.09 | 2.09 |
| SD - controlnet | 1.34 | 1.27 | 1.47 | 1.46 |
| IF | 5.79 | 5.61 | ❌ | 7.39 |

|

||||

|

||||

### T4 (batch size: 16)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 2.34s | 2.30s | OOM after 2nd iteration | 1.99s |
| SD - img2img | 2.35s | 2.31s | OOM after warmup | 2.00s |
| SD - inpaint | 2.30s | 2.26s | OOM after 2nd iteration | 1.95s |
| SD - controlnet | OOM after 2nd iteration | OOM after 2nd iteration | OOM after warmup | OOM after warmup |
| IF * | 1.44 | 1.44 | ❌ | 1.94 |

|

||||

|

||||

### RTX 3090 (batch size: 1)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 22.56 | 22.84 | 23.84 | 25.69 |
| SD - img2img | 22.25 | 22.61 | 24.1 | 25.83 |
| SD - inpaint | 22.22 | 22.54 | 24.26 | 26.02 |
| SD - controlnet | 16.03 | 16.33 | 17.38 | 18.56 |
| IF | 27.08 / <br>9.07 / <br>31.23 | 26.75 / <br>8.92 / <br>31.47 | ❌ | 68.08 / <br>11.16 / <br>65.29 |

|

||||

|

||||

### RTX 3090 (batch size: 4)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 6.46 | 6.35 | 7.29 | 7.3 |
| SD - img2img | 6.33 | 6.27 | 7.31 | 7.26 |
| SD - inpaint | 6.47 | 6.4 | 7.44 | 7.39 |
| SD - controlnet | 4.59 | 4.54 | 5.27 | 5.26 |
| IF | 16.81 | 16.62 | ❌ | 21.57 |

|

||||

|

||||

### RTX 3090 (batch size: 16)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 1.7 | 1.69 | 1.93 | 1.91 |
| SD - img2img | 1.68 | 1.67 | 1.93 | 1.9 |
| SD - inpaint | 1.72 | 1.71 | 1.97 | 1.94 |
| SD - controlnet | 1.23 | 1.22 | 1.4 | 1.38 |
| IF | 5.01 | 5.00 | ❌ | 6.33 |

|

||||

|

||||

### RTX 4090 (batch size: 1)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 40.5 | 41.89 | 44.65 | 49.81 |
| SD - img2img | 40.39 | 41.95 | 44.46 | 49.8 |
| SD - inpaint | 40.51 | 41.88 | 44.58 | 49.72 |
| SD - controlnet | 29.27 | 30.29 | 32.26 | 36.03 |
| IF | 69.71 / <br>18.78 / <br>85.49 | 69.13 / <br>18.80 / <br>85.56 | ❌ | 124.60 / <br>26.37 / <br>138.79 |

|

||||

|

||||

### RTX 4090 (batch size: 4)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 12.62 | 12.84 | 15.32 | 15.59 |
| SD - img2img | 12.61 | 12.79 | 15.35 | 15.66 |
| SD - inpaint | 12.65 | 12.81 | 15.3 | 15.58 |
| SD - controlnet | 9.1 | 9.25 | 11.03 | 11.22 |
| IF | 31.88 | 31.14 | ❌ | 43.92 |

|

||||

|

||||

### RTX 4090 (batch size: 16)

|

||||

|

||||

| **Pipeline** | **torch 2.0 - <br>no compile** | **torch nightly - <br>no compile** | **torch 2.0 - <br>compile** | **torch nightly - <br>compile** |
|:---:|:---:|:---:|:---:|:---:|
| SD - txt2img | 3.17 | 3.2 | 3.84 | 3.85 |
| SD - img2img | 3.16 | 3.2 | 3.84 | 3.85 |
| SD - inpaint | 3.17 | 3.2 | 3.85 | 3.85 |
| SD - controlnet | 2.23 | 2.3 | 2.7 | 2.75 |
| IF | 9.26 | 9.2 | ❌ | 13.31 |

|

||||

|

||||

## Notes

|

||||

|

||||

* Follow [this PR](https://github.com/huggingface/diffusers/pull/3313) for more details on the environment used for conducting the benchmarks.

|

||||

* For the IF pipeline and batch sizes > 1, we only used a batch size of >1 in the first IF pipeline for text-to-image generation and NOT for upscaling. So, that means the two upscaling pipelines received a batch size of 1.

|

||||

|

||||

*Thanks to [Horace He](https://github.com/Chillee) from the PyTorch team for their support in improving our support of `torch.compile()` in Diffusers.*

|

||||

|

||||


|

||||

54

docs/source/ko/training/adapt_a_model.mdx

Normal file


@@ -0,0 +1,54 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

|

||||

|

||||

# Adapt a model to a new task

Many diffusion systems share the same components, so a model pretrained for one task can be adapted to an entirely different task.

This guide shows how to adapt a pretrained text-to-image model for inpainting by initializing and modifying the architecture of a pretrained [`UNet2DConditionModel`].

|

||||

|

||||

## Configure UNet2DConditionModel parameters

A [`UNet2DConditionModel`] accepts 4 channels in the [input sample](https://huggingface.co/docs/diffusers/v0.16.0/en/api/models#diffusers.UNet2DConditionModel.in_channels) by default. For example, load a pretrained text-to-image model such as [`runwayml/stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) and check the number of `in_channels`:

```py
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5")
pipeline.unet.config["in_channels"]
4
```

|

||||

|

||||

Inpainting requires 9 channels in the input sample. You can check this value in a pretrained inpainting model such as [`runwayml/stable-diffusion-inpainting`](https://huggingface.co/runwayml/stable-diffusion-inpainting):

```py
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-inpainting")
pipeline.unet.config["in_channels"]
9
```

To adapt the text-to-image model for inpainting, you need to change the number of `in_channels` from 4 to 9.
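As a rough illustration of where the number 9 comes from: Stable Diffusion inpainting checkpoints are generally described as concatenating the 4-channel noisy latents, a 1-channel mask, and the 4-channel latents of the masked image along the channel axis. The sketch below only does that arithmetic. The dictionary keys are illustrative labels, not diffusers identifiers.

```python
# Illustrative channel bookkeeping for an inpainting UNet input.
# The key names are hypothetical labels, not diffusers identifiers.
INPAINT_INPUT_CHANNELS = {
    "noisy_latents": 4,         # the same latents a text-to-image UNet receives
    "mask": 1,                  # mask resized to the latent resolution
    "masked_image_latents": 4,  # VAE latents of the masked image
}


def total_in_channels(parts):
    """Sum the channel counts that are concatenated along the channel axis."""
    return sum(parts.values())


text2image_channels = INPAINT_INPUT_CHANNELS["noisy_latents"]
inpaint_channels = total_in_channels(INPAINT_INPUT_CHANNELS)
print(text2image_channels, inpaint_channels)
```

The two printed values correspond to the `in_channels` settings of the text-to-image and inpainting checkpoints checked above.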

|

||||

|

||||

Initialize a [`UNet2DConditionModel`] with the pretrained text-to-image model weights and change `in_channels` to 9. Changing the number of `in_channels` changes the size of the input layer, so you need to set `ignore_mismatched_sizes=True` and `low_cpu_mem_usage=False` to avoid a size mismatch error.

```py
from diffusers import UNet2DConditionModel

model_id = "runwayml/stable-diffusion-v1-5"
unet = UNet2DConditionModel.from_pretrained(
    model_id, subfolder="unet", in_channels=9, low_cpu_mem_usage=False, ignore_mismatched_sizes=True
)
```

The pretrained weights of the other components from the text-to-image model are initialized from their checkpoints, but the input channel weights (`conv_in.weight`) of the `unet` are randomly initialized. It is important to finetune the model for inpainting because otherwise the model returns noise.
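To see why the checkpoint weights cannot be reused for `conv_in`, it helps to compare weight shapes. The sketch below assumes a 3x3 convolution with 320 output channels, which matches the commonly published Stable Diffusion v1 UNet configuration but is an assumption here. `conv_weight_shape` and `can_load` are hypothetical helpers, not diffusers APIs.

```python
def conv_weight_shape(in_channels, out_channels=320, kernel_size=3):
    """Shape of a 2D convolution weight: (out, in, kernel_h, kernel_w)."""
    return (out_channels, in_channels, kernel_size, kernel_size)


def can_load(checkpoint_shape, model_shape):
    """A checkpoint tensor can be copied only if the shapes match exactly."""
    return checkpoint_shape == model_shape


checkpoint_shape = conv_weight_shape(in_channels=4)  # text-to-image checkpoint
model_shape = conv_weight_shape(in_channels=9)       # modified inpainting UNet

print(checkpoint_shape, model_shape, can_load(checkpoint_shape, model_shape))
```

Because the two shapes differ, this one layer is skipped during loading and keeps its fresh initialization, which is why the adapted model has to be finetuned before it produces anything useful.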

|

||||

@@ -273,7 +273,7 @@ from diffusers import DiffusionPipeline, UNet2DConditionModel

|

||||

from transformers import CLIPTextModel

|

||||

import torch

|

||||

|

||||

# 학습에 사용된 것과 동일한 인수(model, revision)로 파이프라인을 로드합니다.

|

||||

# 학습에 사용된 것과 동일한 인수(model, revision)로 파이프라인을 불러옵니다.

|

||||

model_id = "CompVis/stable-diffusion-v1-4"

|

||||

|

||||

unet = UNet2DConditionModel.from_pretrained("/sddata/dreambooth/daruma-v2-1/checkpoint-100/unet")

|

||||

@@ -294,7 +294,7 @@ If you have **`"accelerate<0.16.0"`** installed, you need to convert it to an in

|

||||

from accelerate import Accelerator

|

||||

from diffusers import DiffusionPipeline

|

||||

|

||||

# 학습에 사용된 것과 동일한 인수(model, revision)로 파이프라인을 로드합니다.

|

||||

# 학습에 사용된 것과 동일한 인수(model, revision)로 파이프라인을 불러옵니다.

|

||||

model_id = "CompVis/stable-diffusion-v1-4"

|

||||

pipeline = DiffusionPipeline.from_pretrained(model_id)

|

||||

|

||||

|

||||

@@ -102,7 +102,7 @@ accelerate launch train_dreambooth_lora.py \

|

||||

>>> pipe = StableDiffusionPipeline.from_pretrained(model_base, torch_dtype=torch.float16)

|

||||

```

|

||||

|

||||

*기본 모델의 가중치 위에* 파인튜닝된 DreamBooth 모델에서 LoRA 가중치를 로드한 다음, 더 빠른 추론을 위해 파이프라인을 GPU로 이동합니다. LoRA 가중치를 프리징된 사전 훈련된 모델 가중치와 병합할 때, 선택적으로 'scale' 매개변수로 어느 정도의 가중치를 병합할 지 조절할 수 있습니다:

|

||||

*기본 모델의 가중치 위에* 파인튜닝된 DreamBooth 모델에서 LoRA 가중치를 불러온 다음, 더 빠른 추론을 위해 파이프라인을 GPU로 이동합니다. LoRA 가중치를 프리징된 사전 훈련된 모델 가중치와 병합할 때, 선택적으로 'scale' 매개변수로 어느 정도의 가중치를 병합할 지 조절할 수 있습니다:

|

||||

|

||||

<Tip>

|

||||

|

||||

|

||||

73

docs/source/ko/training/overview.mdx

Normal file


@@ -0,0 +1,73 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

|

||||

|

||||

# 🧨 Diffusers Training Examples

This chapter walks through example code for a variety of use cases to show how to use the `diffusers` library effectively.

**Note**: If you are looking for official example code, take a look [here](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines)!

The examples covered here aim to be:

- **Self-contained** (easy dependency installation): Every dependency used by the example code can be installed with `pip install`. The packages are also listed in a `requirements.txt` file, so you can install them with `pip install -r requirements.txt`. Example: [train_unconditional.py](https://github.com/huggingface/diffusers/blob/main/examples/unconditional_image_generation/train_unconditional.py), [requirements.txt](https://github.com/huggingface/diffusers/blob/main/examples/unconditional_image_generation/requirements.txt)
- **Easy-to-tweak**: We try to cover as many use cases as possible, but keep in mind that examples are only examples. Simply copying and pasting the example code is unlikely to solve your specific problem, so expect to adapt some of the code to your situation and needs. For this reason, most training examples include both the data preprocessing code and the training code, so that you can modify them easily.
- **Beginner-friendly**: This chapter is meant to build a general understanding of diffusion models and the `diffusers` library. Recent state-of-the-art (SOTA) methods that we judge too difficult for beginners are therefore intentionally left out.
- **One-purpose-only**: Each example should cover exactly one task. Some tasks, such as image super-resolution and image modification, share a similar modeling process, but we find that one task per example is easier to understand.

|

||||

|

||||

|

||||

|

||||

We provide official examples that cover the most common diffusion tasks. *Official* examples are actively maintained by the `diffusers` maintainers, and we try to strictly follow the philosophy defined above. If you feel that another important example should exist, feel free to open a [Feature Request](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=) or directly a [Pull Request](https://github.com/huggingface/diffusers/compare). We always welcome them!

The training examples show how to pretrain or fine-tune diffusion models for a variety of tasks. The following examples are currently supported:

- [Unconditional Training](./unconditional_training)
- [Text-to-Image Training](./text2image)
- [Text Inversion](./text_inversion)
- [Dreambooth](./dreambooth)

If possible, install [xFormers](../optimization/xformers) for memory-efficient attention. It can speed up training and reduce memory usage.

|

||||

|

||||

| Task | 🤗 Accelerate | 🤗 Datasets | Colab |
|---|---|:---:|:---:|
| [**Unconditional Image Generation**](./unconditional_training) | ✅ | ✅ | [Open in Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/training_example.ipynb) |
| [**Text-to-Image fine-tuning**](./text2image) | ✅ | ✅ | - |
| [**Textual Inversion**](./text_inversion) | ✅ | - | [Open in Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb) |
| [**Dreambooth**](./dreambooth) | ✅ | - | [Open in Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb) |
| [**Training with LoRA**](./lora) | ✅ | - | - |
| [**ControlNet**](./controlnet) | ✅ | ✅ | - |
| [**InstructPix2Pix**](./instructpix2pix) | ✅ | ✅ | - |
| [**Custom Diffusion**](./custom_diffusion) | ✅ | ✅ | - |

|

||||

|

||||

|

||||

## Community

In addition to the official examples, we also provide **community examples**, which are maintained by our community. A community example can consist of a training example or an inference pipeline. The philosophy defined above is applied more leniently to community examples, and we cannot guarantee maintenance for every issue raised on them.

Examples that are useful but not yet popular, or that do not fit our philosophy, go into the [community examples](https://github.com/huggingface/diffusers/tree/main/examples/community) folder.

**Note**: Community examples can be a [great first contribution](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) for anyone who wants to contribute to `diffusers`.

|

||||

|

||||

## Important notes

To make sure the latest versions of the example scripts run successfully, you have to **install `diffusers` from source** and also install the dependencies that the examples require. To do this, create a new virtual environment and run the following commands:

```bash
git clone https://github.com/huggingface/diffusers
cd diffusers
pip install .
```

Then `cd` into the directory of the example you want to run and execute the following command:

```bash
pip install -r requirements.txt
```

|

||||

275

docs/source/ko/training/text_inversion.mdx

Normal file


@@ -0,0 +1,275 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

|

||||

|

||||

|

||||

|

||||

# Textual-Inversion

|

||||

|

||||

[[open-in-colab]]

|

||||

|

||||

[Textual Inversion](https://arxiv.org/abs/2208.01618) is a technique for capturing novel concepts from a small number of example images. It was originally demonstrated with [Latent Diffusion](https://github.com/CompVis/latent-diffusion), but has since been applied to other similar models such as [Stable Diffusion](https://huggingface.co/docs/diffusers/main/en/conceptual/stable_diffusion). The learned concepts can be used to better control the images generated by text-to-image pipelines. The technique learns new 'words' in the text encoder's embedding space, which can then be used within text prompts for personalized image generation.

|

||||

|

||||

|

||||

<small>By using just 3-5 images you can teach new concepts to a model such as Stable Diffusion for personalized image generation <a href="https://github.com/rinongal/textual_inversion">(image source)</a>.</small>

|

||||

|

||||

This guide shows how to train the [`runwayml/stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) model with Textual Inversion. All the Textual Inversion training scripts used in this guide can be found [here](https://github.com/huggingface/diffusers/tree/main/examples/textual_inversion); refer to that link if you want a closer look at how things work under the hood.

<Tip>

The [Stable Diffusion Textual Inversion Concepts Library](https://huggingface.co/sd-concepts-library) hosts trained Textual Inversion models made by the community. As more concepts are added over time, it should grow into a useful resource!

</Tip>

|

||||

|

||||

Before you begin, install the libraries required for training:

```bash
pip install diffusers accelerate transformers
```

Once the dependencies are installed, initialize a [🤗Accelerate](https://github.com/huggingface/accelerate/) environment:

```bash
accelerate config
```

To set up a default 🤗Accelerate environment without choosing any configurations:

```bash
accelerate config default
```

Or, if your environment doesn't support an interactive shell (a notebook, for example), you can use:

```py
from accelerate.utils import write_basic_config

write_basic_config()
```

Finally, install [xFormers](https://huggingface.co/docs/diffusers/main/en/training/optimization/xformers) to reduce memory usage with memory-efficient attention. Once xFormers is installed, add the `--enable_xformers_memory_efficient_attention` argument to the training script. xFormers is not supported for Flax.

|

||||

|

||||

## Upload the model to the Hub

To store your model on the Hub, add the following argument to the training script:

```bash
--push_to_hub
```

## Save and load checkpoints

It is a good idea to save checkpoints of your model regularly during training. That way, if training is interrupted for any reason, you can resume from a saved checkpoint. Passing the following argument to the training script saves the full training state as a checkpoint in a subfolder of `output_dir` every 500 steps:

```bash
--checkpointing_steps=500
```

To resume training from a saved checkpoint, pass the following argument to the training script with the specific checkpoint you want to resume from:

```bash
--resume_from_checkpoint="checkpoint-1500"
```
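As a small illustration of how the two flags relate: assuming checkpoint folders are named `checkpoint-<step>` (as the `checkpoint-1500` value above suggests), the sketch below lists the folders a run would write. `checkpoint_names` is a hypothetical helper, not part of the training script.

```python
def checkpoint_names(max_train_steps, checkpointing_steps):
    """Folder names written when a checkpoint is saved every N steps."""
    return [
        f"checkpoint-{step}"
        for step in range(checkpointing_steps, max_train_steps + 1, checkpointing_steps)
    ]


# Values taken from the flags used in this guide.
names = checkpoint_names(max_train_steps=3000, checkpointing_steps=500)
print(names)
```

Any of the listed names could then be passed to `--resume_from_checkpoint`, provided the run actually reached that step.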

|

||||

|

||||

## Finetuning

For the training dataset, download the [cat toy dataset](https://huggingface.co/datasets/diffusers/cat_toy_example) and store it in a directory. To use your own dataset, take a look at the [Create a dataset for training](https://huggingface.co/docs/diffusers/training/create_dataset) guide.

```py
from huggingface_hub import snapshot_download

local_dir = "./cat"
snapshot_download(
    "diffusers/cat_toy_example", local_dir=local_dir, repo_type="dataset", ignore_patterns=".gitattributes"
)
```

Set the `MODEL_NAME` environment variable to the model's repository ID (or the path to a directory containing the model weights) and pass it to the [`pretrained_model_name_or_path`](https://huggingface.co/docs/diffusers/en/api/diffusion_pipeline#diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path) argument. Set the `DATA_DIR` environment variable to the path of the directory containing the images.

Now you can launch the [training script](https://github.com/huggingface/diffusers/blob/main/examples/textual_inversion/textual_inversion.py). The script creates the following files and saves them to your repository:

- `learned_embeds.bin`
- `token_identifier.txt`
- `type_of_concept.txt`

<Tip>

💡 A full training run takes up to 1 hour on a single V100 GPU. While you wait for training to finish, feel free to read [how Textual Inversion works](https://huggingface.co/docs/diffusers/training/text_inversion#how-it-works) in the section below if you're curious!

</Tip>

|

||||

|

||||

<frameworkcontent>

|

||||

<pt>

|

||||

```bash
export MODEL_NAME="runwayml/stable-diffusion-v1-5"
export DATA_DIR="./cat"

accelerate launch textual_inversion.py \
  --pretrained_model_name_or_path=$MODEL_NAME \
  --train_data_dir=$DATA_DIR \
  --learnable_property="object" \
  --placeholder_token="<cat-toy>" --initializer_token="toy" \
  --resolution=512 \
  --train_batch_size=1 \
  --gradient_accumulation_steps=4 \
  --max_train_steps=3000 \
  --learning_rate=5.0e-04 --scale_lr \
  --lr_scheduler="constant" \
  --lr_warmup_steps=0 \
  --output_dir="textual_inversion_cat" \
  --push_to_hub
```
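The `--scale_lr` flag changes the learning rate that is actually used. The sketch below assumes the script multiplies the base rate by the gradient accumulation steps, the per-device batch size, and the number of processes. That is an assumption about the example script, so check it against the version you run. `effective_lr` and `effective_batch_size` are hypothetical helpers.

```python
def effective_batch_size(train_batch_size, gradient_accumulation_steps, num_processes=1):
    """Number of samples that contribute to one optimizer step."""
    return train_batch_size * gradient_accumulation_steps * num_processes


def effective_lr(base_lr, train_batch_size, gradient_accumulation_steps, num_processes=1):
    """Base learning rate scaled by the samples per optimizer step (assumed rule)."""
    return base_lr * effective_batch_size(
        train_batch_size, gradient_accumulation_steps, num_processes
    )


# Values from the single-GPU command above.
batch = effective_batch_size(train_batch_size=1, gradient_accumulation_steps=4)
lr = effective_lr(5.0e-04, train_batch_size=1, gradient_accumulation_steps=4)
print(batch, lr)
```

Under this assumption, moving the same command to more GPUs would raise the effective learning rate proportionally, which is worth keeping in mind when comparing runs.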

|

||||

|

||||

<Tip>

💡 To improve training performance, you can also consider representing the placeholder token (`<cat-toy>`) with multiple embedding vectors instead of a single one. This trick can help the model better capture the style (the concept mentioned above) of more complex images. To enable training multiple embedding vectors, pass the following option:

```bash
--num_vectors=5
```

</Tip>
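One way to picture multi-vector training is that the placeholder is expanded into several tokens, each with its own embedding vector. The suffix naming used below (`<cat-toy>_1`, `<cat-toy>_2`, and so on) is an assumption for illustration, and `expand_placeholder` is a hypothetical helper rather than a function from the training script.

```python
def expand_placeholder(placeholder_token, num_vectors):
    """Return one token per embedding vector; the first keeps the original name."""
    if num_vectors < 1:
        raise ValueError("num_vectors must be at least 1")
    extra = [f"{placeholder_token}_{i}" for i in range(1, num_vectors)]
    return [placeholder_token] + extra


tokens = expand_placeholder("<cat-toy>", num_vectors=5)

# A prompt that mentions the placeholder is rewritten to mention every token.
prompt = "A <cat-toy> backpack".replace("<cat-toy>", " ".join(tokens))
print(prompt)
```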

|

||||

</pt>

|

||||

<jax>

|

||||

|

||||

If you have access to TPUs, try the [Flax training script](https://github.com/huggingface/diffusers/blob/main/examples/textual_inversion/textual_inversion_flax.py) to train even faster (it also works on GPUs). With the same configuration, the Flax training script should be at least 70% faster than the PyTorch training script! ⚡️

Before you begin, install the Flax-specific dependencies:

```bash
pip install -U -r requirements_flax.txt
```

Set the `MODEL_NAME` environment variable to the model's repository ID (or the path to a directory containing the model weights) and pass it to the [`pretrained_model_name_or_path`](https://huggingface.co/docs/diffusers/en/api/diffusion_pipeline#diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path) argument.

Then you can launch the [training script](https://github.com/huggingface/diffusers/blob/main/examples/textual_inversion/textual_inversion_flax.py):

```bash
export MODEL_NAME="duongna/stable-diffusion-v1-4-flax"
export DATA_DIR="./cat"

python textual_inversion_flax.py \
  --pretrained_model_name_or_path=$MODEL_NAME \
  --train_data_dir=$DATA_DIR \
  --learnable_property="object" \
  --placeholder_token="<cat-toy>" --initializer_token="toy" \
  --resolution=512 \
  --train_batch_size=1 \
  --max_train_steps=3000 \
  --learning_rate=5.0e-04 --scale_lr \
  --output_dir="textual_inversion_cat" \
  --push_to_hub
```

|

||||

</jax>

|

||||

</frameworkcontent>

|

||||

|

||||

### Intermediate logging

If you want to track the model's training progress, you can save the images generated during training. Add the following arguments to the training script to enable intermediate logging:

- `validation_prompt`: the prompt used to generate samples (defaults to `None`, in which case intermediate logging is disabled)
- `num_validation_images`: the number of sample images to generate
- `validation_steps`: the number of steps between generating sample images from `validation_prompt`

```bash
--validation_prompt="A <cat-toy> backpack"
--num_validation_images=4
--validation_steps=100
```
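To get a feel for how much output these settings produce, the sketch below counts validation runs and images. It assumes validation is triggered whenever the global step is a multiple of `validation_steps`, and `validation_plan` is a hypothetical helper, not part of the script.

```python
def validation_plan(max_train_steps, validation_steps, num_validation_images):
    """Return the steps at which samples are generated and the total image count."""
    steps = [s for s in range(1, max_train_steps + 1) if s % validation_steps == 0]
    return steps, len(steps) * num_validation_images


# 3000 training steps (from the command above) with the logging flags shown here.
steps, total_images = validation_plan(
    max_train_steps=3000, validation_steps=100, num_validation_images=4
)
print(len(steps), total_images)
```

Each validation run also costs inference time, so a larger `validation_steps` value is a reasonable trade-off for long runs.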

|

||||

|

||||

## Inference

Once you have trained a model, you can use it for inference with the [`StableDiffusionPipeline`].

By default, the Textual Inversion script only saves the embedding vectors learned through Textual Inversion. These are the vectors that were added to the text encoder's embedding matrix.

|

||||

|

||||

<frameworkcontent>

|

||||

<pt>

|

||||

<Tip>

|

||||

|

||||

💡 The community has built a large library of Textual Inversion embedding vectors called [sd-concepts-library](https://huggingface.co/sd-concepts-library). Instead of training a Textual Inversion embedding from scratch, it is worth checking whether a suitable embedding has already been added to the library.

|

||||

|

||||

</Tip>

|

||||

|

||||

To load Textual Inversion embedding vectors, first load the model that was used to train them. Here we assume that [`runwayml/stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) was used:

```python
from diffusers import StableDiffusionPipeline
import torch

model_id = "runwayml/stable-diffusion-v1-5"
pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")
```

Next, load the Textual Inversion embedding vectors with the `TextualInversionLoaderMixin.load_textual_inversion` function. Here we load the embeddings of the earlier `<cat-toy>` example:

```python
pipe.load_textual_inversion("sd-concepts-library/cat-toy")
```

Now you can run the pipeline to check that the placeholder token (`<cat-toy>`) works:

```python
prompt = "A <cat-toy> backpack"

image = pipe(prompt, num_inference_steps=50).images[0]
image.save("cat-backpack.png")
```

`TextualInversionLoaderMixin.load_textual_inversion` can load not only embedding vectors saved in the Diffusers format, but also those saved in the [Automatic1111](https://github.com/AUTOMATIC1111/stable-diffusion-webui) format. To do so, first download the embedding vectors from [civitAI](https://civitai.com/models/3036?modelVersionId=8387) and then load them locally:

```python
pipe.load_textual_inversion("./charturnerv2.pt")
```

|

||||

</pt>

|

||||

<jax>

|

||||

|

||||

There is currently no `load_textual_inversion` function for Flax, so you have to make sure the Textual Inversion embedding vectors are saved as part of the model after training. The model can then be run like any other Flax model:

```python
import jax
import numpy as np
from flax.jax_utils import replicate
from flax.training.common_utils import shard
from diffusers import FlaxStableDiffusionPipeline

model_path = "path-to-your-trained-model"
pipeline, params = FlaxStableDiffusionPipeline.from_pretrained(model_path, dtype=jax.numpy.bfloat16)

prompt = "A <cat-toy> backpack"
prng_seed = jax.random.PRNGKey(0)
num_inference_steps = 50

num_samples = jax.device_count()
prompt = num_samples * [prompt]
prompt_ids = pipeline.prepare_inputs(prompt)

# shard inputs and rng
params = replicate(params)
prng_seed = jax.random.split(prng_seed, jax.device_count())
prompt_ids = shard(prompt_ids)

images = pipeline(prompt_ids, params, prng_seed, num_inference_steps, jit=True).images
images = pipeline.numpy_to_pil(np.asarray(images.reshape((num_samples,) + images.shape[-3:])))
# numpy_to_pil returns a list of PIL images (one per device here); save the first one
images[0].save("cat-backpack.png")
```
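The sharding and reshaping steps in the Flax example are easier to follow as plain shape arithmetic. The sketch below assumes a hypothetical host with 8 devices, 77-token prompts, and 512x512 RGB outputs. `shard_shape` and `flatten_images_shape` are illustrative helpers that only mimic the shapes involved; they are not JAX or Flax functions.

```python
def shard_shape(shape, num_devices):
    """Shape after splitting the leading batch axis evenly across devices."""
    batch = shape[0]
    if batch % num_devices != 0:
        raise ValueError("batch size must be divisible by the number of devices")
    return (num_devices, batch // num_devices) + tuple(shape[1:])


def flatten_images_shape(shape):
    """Merge every leading axis so that only (N, H, W, C) remains."""
    *leading, height, width, channels = shape
    count = 1
    for dim in leading:
        count *= dim
    return (count, height, width, channels)


num_devices = 8                                   # hypothetical device count
prompt_ids_shape = (num_devices, 77)              # one prompt per device
sharded = shard_shape(prompt_ids_shape, num_devices)
images_shape = (num_devices, 1, 512, 512, 3)      # per-device batches of images
flat = flatten_images_shape(images_shape)
print(sharded, flat)
```

The flattened shape corresponds to the `images.reshape((num_samples,) + images.shape[-3:])` call in the example, which is what lets the result be converted to a flat list of PIL images.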

|

||||

</jax>

|

||||

</frameworkcontent>

|

||||

|

||||

## 작동 방식

|

||||

|

||||

|

||||

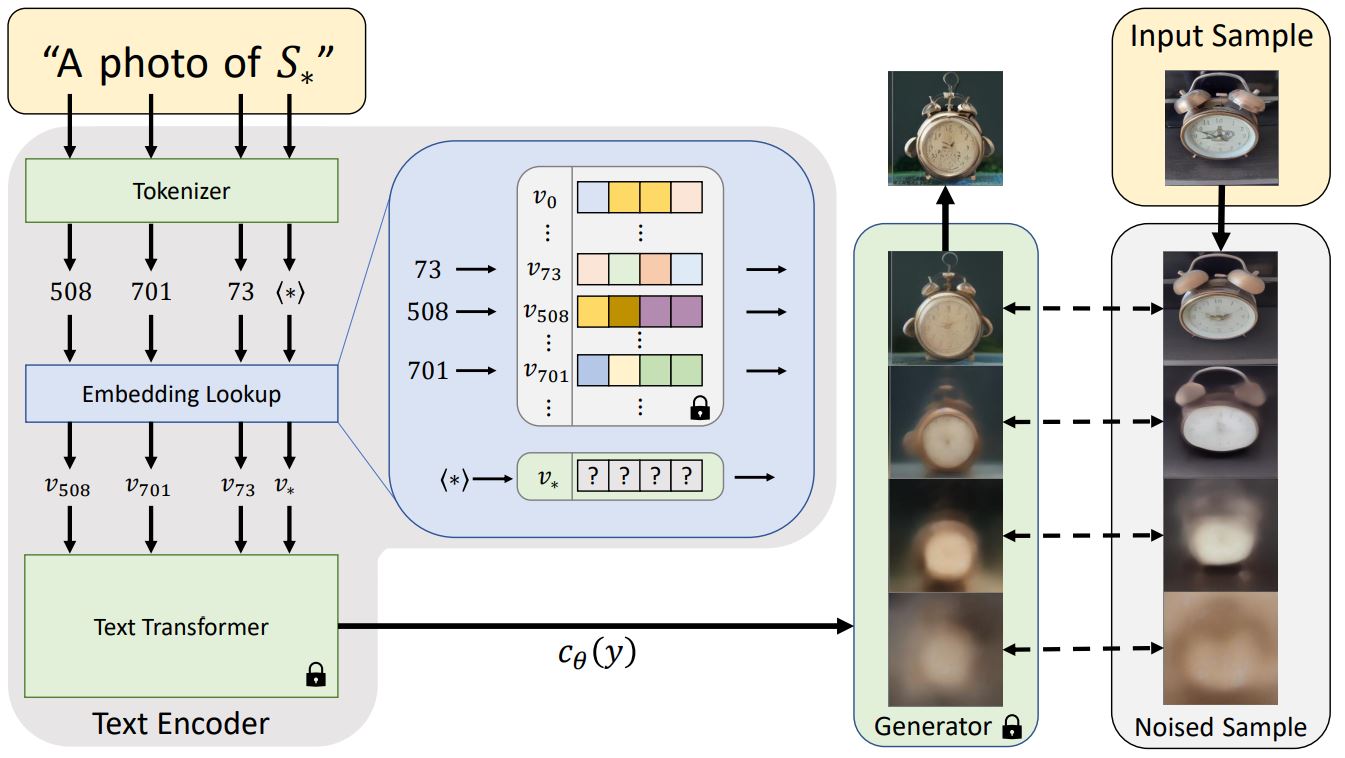

<small>Architecture overview from the Textual Inversion <a href="https://textual-inversion.github.io/">blog post.</a></small>

|

||||

|

||||

일반적으로 텍스트 프롬프트는 모델에 전달되기 전에 임베딩으로 토큰화됩니다. textual-inversion은 비슷한 작업을 수행하지만, 위 다이어그램의 특수 토큰 `S*`로부터 새로운 토큰 임베딩 `v*`를 학습합니다. 모델의 아웃풋은 디퓨전 모델을 조정하는 데 사용되며, 디퓨전 모델이 단 몇 개의 예제 이미지에서 신속하고 새로운 콘셉트를 이해하는 데 도움을 줍니다.

|

||||

|

||||

이를 위해 textual-inversion은 제너레이터 모델과 학습용 이미지의 노이즈 버전을 사용합니다. 제너레이터는 노이즈가 적은 버전의 이미지를 예측하려고 시도하며 토큰 임베딩 `v*`은 제너레이터의 성능에 따라 최적화됩니다. 토큰 임베딩이 새로운 콘셉트를 성공적으로 포착하면 디퓨전 모델에 더 유용한 정보를 제공하고 노이즈가 적은 더 선명한 이미지를 생성하는 데 도움이 됩니다. 이러한 최적화 프로세스는 일반적으로 다양한 프롬프트와 이미지에 수천 번에 노출됨으로써 이루어집니다.
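The core idea, that only the new token embedding is optimized while everything else stays frozen, can be shown with a toy example. The sketch below is not the real training loop. It uses 2-dimensional embeddings and a made-up squared-distance loss in place of the denoising loss, and every name and number in it is illustrative.

```python
# Toy 2-D "embedding table"; real text encoders use hundreds of dimensions.
embeddings = {"toy": [1.0, 0.0], "backpack": [0.0, 1.0]}
frozen_snapshot = {token: list(vec) for token, vec in embeddings.items()}

# Add the placeholder token, initialized from the initializer token ("toy").
embeddings["<cat-toy>"] = list(embeddings["toy"])

# Stand-in for the concept. In real training the signal comes from the
# denoising loss on the example images, not from a known target vector.
target = [0.6, 0.8]


def loss(vec):
    """Squared distance to the stand-in target."""
    return sum((v - t) ** 2 for v, t in zip(vec, target))


learning_rate = 0.1
initial_loss = loss(embeddings["<cat-toy>"])

for _ in range(100):
    vec = embeddings["<cat-toy>"]
    grad = [2 * (v - t) for v, t in zip(vec, target)]
    # Only the placeholder row is updated; every other row stays frozen.
    embeddings["<cat-toy>"] = [v - learning_rate * g for v, g in zip(vec, grad)]

final_loss = loss(embeddings["<cat-toy>"])
print(initial_loss, final_loss)
```

Because only one small vector is trained, the saved result is tiny compared with a full model checkpoint, which fits the `learned_embeds.bin` output described earlier.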

|

||||

|

||||

144

docs/source/ko/training/unconditional_training.mdx

Normal file


@@ -0,0 +1,144 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

|

||||

|

||||

# Unconditional image generation

Unlike text-to-image or image-to-image models, unconditional image generation is not conditioned on any text or image; it only generates images that resemble the training data distribution.

<iframe
	src="https://stevhliu-ddpm-butterflies-128.hf.space"
	frameborder="0"
	width="850"
	height="550"
></iframe>

|

||||

|

||||

|

||||

This guide explains how to train an unconditional image generation model on an existing dataset as well as on your own custom dataset. If you want to learn more about the training details, all the training scripts for unconditional image generation can be found [here](https://github.com/huggingface/diffusers/tree/main/examples/unconditional_image_generation).

Before running the script, install the required dependencies:

```bash
pip install diffusers[training] accelerate datasets
```

Next, initialize a 🤗 [Accelerate](https://github.com/huggingface/accelerate/) environment:

```bash
accelerate config
```

To set up a default 🤗 [Accelerate](https://github.com/huggingface/accelerate/) environment without choosing any configurations:

```bash
accelerate config default
```

If your environment doesn't support an interactive shell (a notebook, for example), you can use:

```py
from accelerate.utils import write_basic_config

write_basic_config()
```

|

||||

|

||||

## Upload the model to the Hub

You can upload your model to the Hub by adding the following argument to the training script:

```bash
--push_to_hub
```

## Save and load checkpoints

It is a good idea to save checkpoints regularly in case something goes wrong during training. To save a checkpoint, pass the following argument to the training script:

```bash
--checkpointing_steps=500
```

The full training state is saved in a subfolder of `output_dir` every 500 steps. You can load a checkpoint and resume training by passing the `--resume_from_checkpoint` argument to the training script:

```bash
--resume_from_checkpoint="checkpoint-1500"
```
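When several checkpoints exist, the most recent one is usually the one to resume from. The sketch below shows one way to find it, assuming folders follow the `checkpoint-<step>` naming seen above. `latest_checkpoint` is a hypothetical helper, and the temporary directory only stands in for a real `output_dir`.

```python
import os
import re
import tempfile


def latest_checkpoint(output_dir):
    """Return the 'checkpoint-<step>' folder with the highest step, or None."""
    pattern = re.compile(r"^checkpoint-(\d+)$")
    best = None
    for name in os.listdir(output_dir):
        match = pattern.match(name)
        if match and os.path.isdir(os.path.join(output_dir, name)):
            step = int(match.group(1))
            if best is None or step > best[0]:
                best = (step, name)
    return None if best is None else best[1]


# Simulate an output directory with three saved checkpoints.
with tempfile.TemporaryDirectory() as output_dir:
    for step in (500, 1000, 1500):
        os.mkdir(os.path.join(output_dir, f"checkpoint-{step}"))
    newest = latest_checkpoint(output_dir)

print(newest)
```

Comparing the step numbers as integers matters here: a plain string sort would rank `checkpoint-500` above `checkpoint-1500`.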

|

||||

|

||||

## Finetuning

You are now ready to launch the training script! Specify the name of the dataset to finetune on with the `--dataset_name` argument, and the model is saved to the path given in the `--output_dir` argument. To use your own dataset, see the [Create a dataset for training](create_dataset) guide.

The training script creates a `diffusion_pytorch_model.bin` file and saves it to your repository.

<Tip>

💡 A full training run takes 2 hours on 4 V100 GPUs.

</Tip>

For example, to finetune on the [Oxford Flowers](https://huggingface.co/datasets/huggan/flowers-102-categories) dataset:

```bash
accelerate launch train_unconditional.py \
  --dataset_name="huggan/flowers-102-categories" \
  --resolution=64 \
  --output_dir="ddpm-ema-flowers-64" \
  --train_batch_size=16 \
  --num_epochs=100 \
  --gradient_accumulation_steps=1 \
  --learning_rate=1e-4 \
  --lr_warmup_steps=500 \
  --mixed_precision=no \
  --push_to_hub
```

<div class="flex justify-center">
    <img src="https://user-images.githubusercontent.com/26864830/180248660-a0b143d0-b89a-42c5-8656-2ebf6ece7e52.png"/>
</div>

Or, to use the [Pokemon](https://huggingface.co/datasets/huggan/pokemon) dataset:

```bash
accelerate launch train_unconditional.py \
  --dataset_name="huggan/pokemon" \
  --resolution=64 \
  --output_dir="ddpm-ema-pokemon-64" \
  --train_batch_size=16 \
  --num_epochs=100 \
  --gradient_accumulation_steps=1 \
  --learning_rate=1e-4 \
  --lr_warmup_steps=500 \
  --mixed_precision=no \
  --push_to_hub
```

<div class="flex justify-center">
    <img src="https://user-images.githubusercontent.com/26864830/180248200-928953b4-db38-48db-b0c6-8b740fe6786f.png"/>
</div>
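Both commands above pass `--lr_warmup_steps=500` together with `--learning_rate=1e-4`. The sketch below shows what a linear warmup does to the learning rate during those first steps. The linear shape is an assumption, the decay that follows depends on the scheduler the script selects and is not modeled here, and `warmup_lr` is a hypothetical helper.

```python
def warmup_lr(step, base_lr=1e-4, warmup_steps=500):
    """Learning rate under a linear warmup; held at base_lr afterwards in this sketch."""
    if warmup_steps <= 0:
        return base_lr
    return base_lr * min(1.0, step / warmup_steps)


# Learning rate at the start, halfway through warmup, at its end, and later on.
schedule = [warmup_lr(step) for step in (0, 250, 500, 2000)]
print(schedule)
```

Starting from a small learning rate tends to make the first updates of a randomly initialized model less erratic, which is the usual motivation for a warmup phase.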

|

||||

|

||||

### Training with multiple GPUs

`accelerate` allows for seamless multi-GPU training. Follow the instructions [here](https://huggingface.co/docs/accelerate/basic_tutorials/launch) to run distributed training with `accelerate`. Here is an example command:

```bash
accelerate launch --mixed_precision="fp16" --multi_gpu train_unconditional.py \
  --dataset_name="huggan/pokemon" \
  --resolution=64 --center_crop --random_flip \
  --output_dir="ddpm-ema-pokemon-64" \
  --train_batch_size=16 \
  --num_epochs=100 \
  --gradient_accumulation_steps=1 \
  --use_ema \
  --learning_rate=1e-4 \
  --lr_warmup_steps=500 \
  --mixed_precision="fp16" \
  --logger="wandb" \
  --push_to_hub
```

|

||||

405

docs/source/ko/tutorials/basic_training.mdx

Normal file


@@ -0,0 +1,405 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

|

||||

|

||||

[[open-in-colab]]

|

||||

|

||||

|

||||

# Train a diffusion model

Unconditional image generation is a popular application of diffusion models that generates images resembling those in the training dataset. Typically, the best results are obtained by finetuning a pretrained model on a specific dataset. You can find many of these checkpoints on the [Hub](https://huggingface.co/search/full-text?q=unconditional-image-generation&type=model), but if you can't find one you like, you can always train your own!

This tutorial teaches you how to train a [`UNet2DModel`] on a subset of the [Smithsonian Butterflies](https://huggingface.co/datasets/huggan/smithsonian_butterflies_subset) dataset to generate your own 🦋 butterflies 🦋.

<Tip>

💡 This training tutorial is based on the [Training with 🧨 Diffusers](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/training_example.ipynb) notebook. For more details and context about diffusion models, such as how they work, check out the notebook!

</Tip>

|

||||

|

||||