diff --git a/docs/source/en/api/pipelines/pia.md b/docs/source/en/api/pipelines/pia.md

index 4793829aed..7bd480b49a 100644

--- a/docs/source/en/api/pipelines/pia.md

+++ b/docs/source/en/api/pipelines/pia.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Image-to-Video Generation with PIA (Personalized Image Animator)

diff --git a/docs/source/en/api/pipelines/pix2pix.md b/docs/source/en/api/pipelines/pix2pix.md

index d0b3bf32b8..20a74577c1 100644

--- a/docs/source/en/api/pipelines/pix2pix.md

+++ b/docs/source/en/api/pipelines/pix2pix.md

@@ -1,4 +1,4 @@

-

+

+# QwenImage

+

+Qwen-Image from the Qwen team is an image generation foundation model in the Qwen series that achieves significant advances in complex text rendering and precise image editing. Experiments show strong general capabilities in both image generation and editing, with exceptional performance in text rendering, especially for Chinese.

+

+Check out the model card [here](https://huggingface.co/Qwen/Qwen-Image) to learn more.

+

+

+

+Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

+

+

+

+## QwenImagePipeline

+

+[[autodoc]] QwenImagePipeline

+ - all

+ - __call__

+

+## QwenImagePipelineOutput

+

+[[autodoc]] pipelines.qwenimage.pipeline_output.QwenImagePipelineOutput

diff --git a/docs/source/en/api/pipelines/sana.md b/docs/source/en/api/pipelines/sana.md

index a66267c094..7491689fd8 100644

--- a/docs/source/en/api/pipelines/sana.md

+++ b/docs/source/en/api/pipelines/sana.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Self-Attention Guidance

[Improving Sample Quality of Diffusion Models Using Self-Attention Guidance](https://huggingface.co/papers/2210.00939) is by Susung Hong et al.

diff --git a/docs/source/en/api/pipelines/semantic_stable_diffusion.md b/docs/source/en/api/pipelines/semantic_stable_diffusion.md

index b9aacd3518..1ce44cf2de 100644

--- a/docs/source/en/api/pipelines/semantic_stable_diffusion.md

+++ b/docs/source/en/api/pipelines/semantic_stable_diffusion.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Semantic Guidance

Semantic Guidance for Diffusion Models was proposed in [SEGA: Instructing Text-to-Image Models using Semantic Guidance](https://huggingface.co/papers/2301.12247) and provides strong semantic control over image generation.

diff --git a/docs/source/en/api/pipelines/shap_e.md b/docs/source/en/api/pipelines/shap_e.md

index 3c1f939c1f..5e5af0656a 100644

--- a/docs/source/en/api/pipelines/shap_e.md

+++ b/docs/source/en/api/pipelines/shap_e.md

@@ -1,4 +1,4 @@

-

+

+

+

+# SkyReels-V2: Infinite-length Film Generative model

+

+[SkyReels-V2](https://huggingface.co/papers/2504.13074) by the SkyReels Team.

+

+*Recent advances in video generation have been driven by diffusion models and autoregressive frameworks, yet critical challenges persist in harmonizing prompt adherence, visual quality, motion dynamics, and duration: compromises in motion dynamics to enhance temporal visual quality, constrained video duration (5-10 seconds) to prioritize resolution, and inadequate shot-aware generation stemming from general-purpose MLLMs' inability to interpret cinematic grammar, such as shot composition, actor expressions, and camera motions. These intertwined limitations hinder realistic long-form synthesis and professional film-style generation. To address these limitations, we propose SkyReels-V2, an Infinite-length Film Generative Model, that synergizes Multi-modal Large Language Model (MLLM), Multi-stage Pretraining, Reinforcement Learning, and Diffusion Forcing Framework. Firstly, we design a comprehensive structural representation of video that combines the general descriptions by the Multi-modal LLM and the detailed shot language by sub-expert models. Aided with human annotation, we then train a unified Video Captioner, named SkyCaptioner-V1, to efficiently label the video data. Secondly, we establish progressive-resolution pretraining for the fundamental video generation, followed by a four-stage post-training enhancement: Initial concept-balanced Supervised Fine-Tuning (SFT) improves baseline quality; Motion-specific Reinforcement Learning (RL) training with human-annotated and synthetic distortion data addresses dynamic artifacts; Our diffusion forcing framework with non-decreasing noise schedules enables long-video synthesis in an efficient search space; Final high-quality SFT refines visual fidelity. All the code and models are available at [this https URL](https://github.com/SkyworkAI/SkyReels-V2).*

+

+You can find all the original SkyReels-V2 checkpoints under the [Skywork](https://huggingface.co/collections/Skywork/skyreels-v2-6801b1b93df627d441d0d0d9) organization.

+

+The following SkyReels-V2 models are supported in Diffusers:

+- [SkyReels-V2 DF 1.3B - 540P](https://huggingface.co/Skywork/SkyReels-V2-DF-1.3B-540P-Diffusers)

+- [SkyReels-V2 DF 14B - 540P](https://huggingface.co/Skywork/SkyReels-V2-DF-14B-540P-Diffusers)

+- [SkyReels-V2 DF 14B - 720P](https://huggingface.co/Skywork/SkyReels-V2-DF-14B-720P-Diffusers)

+- [SkyReels-V2 T2V 14B - 540P](https://huggingface.co/Skywork/SkyReels-V2-T2V-14B-540P-Diffusers)

+- [SkyReels-V2 T2V 14B - 720P](https://huggingface.co/Skywork/SkyReels-V2-T2V-14B-720P-Diffusers)

+- [SkyReels-V2 I2V 1.3B - 540P](https://huggingface.co/Skywork/SkyReels-V2-I2V-1.3B-540P-Diffusers)

+- [SkyReels-V2 I2V 14B - 540P](https://huggingface.co/Skywork/SkyReels-V2-I2V-14B-540P-Diffusers)

+- [SkyReels-V2 I2V 14B - 720P](https://huggingface.co/Skywork/SkyReels-V2-I2V-14B-720P-Diffusers)

+- [SkyReels-V2 FLF2V 1.3B - 540P](https://huggingface.co/Skywork/SkyReels-V2-FLF2V-1.3B-540P-Diffusers)

+

+> [!TIP]

+> Click on the SkyReels-V2 models in the right sidebar for more examples of video generation.

+

+### A _Visual_ Demonstration

+

+ An example with these parameters:

+ base_num_frames=97, num_frames=97, num_inference_steps=30, ar_step=5, causal_block_size=5

+

+ vae_scale_factor_temporal -> 4

+ num_latent_frames: (97-1)//vae_scale_factor_temporal+1 = 25 frames -> 5 blocks of 5 frames each

+

+ base_num_latent_frames = (97-1)//vae_scale_factor_temporal+1 = 25 → blocks = 25//5 = 5 blocks

+ This 5 blocks means the maximum context length of the model is 25 frames in the latent space.

+

+ Asynchronous Processing Timeline:

+ ┌─────────────────────────────────────────────────────────────────┐

+ │ Steps: 1 6 11 16 21 26 31 36 41 46 50 │

+ │ Block 1: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+ │ Block 2: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+ │ Block 3: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+ │ Block 4: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+ │ Block 5: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+ └─────────────────────────────────────────────────────────────────┘

+

+ For Long Videos (num_frames > base_num_frames):

+ base_num_frames acts as the "sliding window size" for processing long videos.

+

+ Example: 257-frame video with base_num_frames=97, overlap_history=17

+ ┌──── Iteration 1 (frames 1-97) ────┐

+ │ Processing window: 97 frames │ → 5 blocks, async processing

+ │ Generates: frames 1-97 │

+ └───────────────────────────────────┘

+ ┌────── Iteration 2 (frames 81-177) ──────┐

+ │ Processing window: 97 frames │

+ │ Overlap: 17 frames (81-97) from prev │ → 5 blocks, async processing

+ │ Generates: frames 98-177 │

+ └─────────────────────────────────────────┘

+ ┌────── Iteration 3 (frames 161-257) ──────┐

+ │ Processing window: 97 frames │

+ │ Overlap: 17 frames (161-177) from prev │ → 5 blocks, async processing

+ │ Generates: frames 178-257 │

+ └──────────────────────────────────────────┘

+

+ Each iteration independently runs the asynchronous processing with its own 5 blocks.

+ base_num_frames controls:

+ 1. Memory usage (larger window = more VRAM)

+ 2. Model context length (must match training constraints)

+ 3. Number of blocks per iteration (base_num_latent_frames // causal_block_size)

+

+ Each block takes 30 steps to complete denoising.

+ Block N starts at step: 1 + (N-1) x ar_step

+ Total steps: 30 + (5-1) x 5 = 50 steps

+

+

+ Synchronous mode (ar_step=0) would process all blocks/frames simultaneously:

+ ┌──────────────────────────────────────────────┐

+ │ Steps: 1 ... 30 │

+ │ All blocks: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+ └──────────────────────────────────────────────┘

+ Total steps: 30 steps

+

+

+ An example on how the step matrix is constructed for asynchronous processing:

+ Given the parameters: (num_inference_steps=30, flow_shift=8, num_frames=97, ar_step=5, causal_block_size=5)

+ - num_latent_frames = (97 frames - 1) // (4 temporal downsampling) + 1 = 25

+ - step_template = [999, 995, 991, 986, 980, 975, 969, 963, 956, 948,

+ 941, 932, 922, 912, 901, 888, 874, 859, 841, 822,

+ 799, 773, 743, 708, 666, 615, 551, 470, 363, 216]

+

+ The algorithm creates a 50x25 step_matrix where:

+ - Row 1: [999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999]

+ - Row 2: [995, 995, 995, 995, 995, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999]

+ - Row 3: [991, 991, 991, 991, 991, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999]

+ - ...

+ - Row 7: [969, 969, 969, 969, 969, 995, 995, 995, 995, 995, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999, 999]

+ - ...

+ - Row 21: [799, 799, 799, 799, 799, 888, 888, 888, 888, 888, 941, 941, 941, 941, 941, 975, 975, 975, 975, 975, 999, 999, 999, 999, 999]

+ - ...

+ - Row 35: [ 0, 0, 0, 0, 0, 216, 216, 216, 216, 216, 666, 666, 666, 666, 666, 822, 822, 822, 822, 822, 901, 901, 901, 901, 901]

+ - ...

+ - Row 42: [ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 551, 551, 551, 551, 551, 773, 773, 773, 773, 773]

+ - ...

+ - Row 50: [ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 216, 216, 216, 216, 216]

+

+ Detailed Row 6 Analysis:

+ - step_matrix[5]: [ 975, 975, 975, 975, 975, 999, 999, 999, 999, 999, 999, ..., 999]

+ - step_index[5]: [ 6, 6, 6, 6, 6, 1, 1, 1, 1, 1, 0, ..., 0]

+ - step_update_mask[5]: [True,True,True,True,True,True,True,True,True,True,False, ...,False]

+ - valid_interval[5]: (0, 25)

+

+ Key Pattern: Block i lags behind Block i-1 by exactly ar_step=5 timesteps, creating the

+ staggered "diffusion forcing" effect where later blocks condition on cleaner earlier blocks.

+

+### Text-to-Video Generation

+

+The example below demonstrates how to generate a video from text.

+

+

+

+

+Refer to the [Reduce memory usage](../../optimization/memory) guide for more details about the various memory saving techniques.

+

+From the original repo:

+>You can use --ar_step 5 to enable asynchronous inference. When asynchronous inference, --causal_block_size 5 is recommended while it is not supposed to be set for synchronous generation... Asynchronous inference will take more steps to diffuse the whole sequence which means it will be SLOWER than synchronous mode. In our experiments, asynchronous inference may improve the instruction following and visual consistent performance.

+

+```py

+# pip install ftfy

+import torch

+from diffusers import AutoModel, SkyReelsV2DiffusionForcingPipeline, UniPCMultistepScheduler

+from diffusers.utils import export_to_video

+

+vae = AutoModel.from_pretrained("Skywork/SkyReels-V2-DF-14B-540P-Diffusers", subfolder="vae", torch_dtype=torch.float32)

+transformer = AutoModel.from_pretrained("Skywork/SkyReels-V2-DF-14B-540P-Diffusers", subfolder="transformer", torch_dtype=torch.bfloat16)

+

+pipeline = SkyReelsV2DiffusionForcingPipeline.from_pretrained(

+ "Skywork/SkyReels-V2-DF-14B-540P-Diffusers",

+ vae=vae,

+ transformer=transformer,

+ torch_dtype=torch.bfloat16

+)

+flow_shift = 8.0 # 8.0 for T2V, 5.0 for I2V

+pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config, flow_shift=flow_shift)

+pipeline = pipeline.to("cuda")

+

+prompt = "A cat and a dog baking a cake together in a kitchen. The cat is carefully measuring flour, while the dog is stirring the batter with a wooden spoon. The kitchen is cozy, with sunlight streaming through the window."

+

+output = pipeline(

+ prompt=prompt,

+ num_inference_steps=30,

+ height=544, # 720 for 720P

+ width=960, # 1280 for 720P

+ num_frames=97,

+ base_num_frames=97, # 121 for 720P

+ ar_step=5, # Controls asynchronous inference (0 for synchronous mode)

+ causal_block_size=5, # Number of frames in each block for asynchronous processing

+ overlap_history=None, # Number of frames to overlap for smooth transitions in long videos; 17 for long video generations

+ addnoise_condition=20, # Improves consistency in long video generation

+).frames[0]

+export_to_video(output, "T2V.mp4", fps=24, quality=8)

+```

+

+

+

+

+### First-Last-Frame-to-Video Generation

+

+The example below demonstrates how to use the image-to-video pipeline to generate a video using a text description, a starting frame, and an ending frame.

+

+

+

+

+```python

+import numpy as np

+import torch

+import torchvision.transforms.functional as TF

+from diffusers import AutoencoderKLWan, SkyReelsV2DiffusionForcingImageToVideoPipeline, UniPCMultistepScheduler

+from diffusers.utils import export_to_video, load_image

+

+

+model_id = "Skywork/SkyReels-V2-DF-14B-720P-Diffusers"

+vae = AutoencoderKLWan.from_pretrained(model_id, subfolder="vae", torch_dtype=torch.float32)

+pipeline = SkyReelsV2DiffusionForcingImageToVideoPipeline.from_pretrained(

+ model_id, vae=vae, torch_dtype=torch.bfloat16

+)

+flow_shift = 5.0 # 8.0 for T2V, 5.0 for I2V

+pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config, flow_shift=flow_shift)

+pipeline.to("cuda")

+

+first_frame = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/flf2v_input_first_frame.png")

+last_frame = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/flf2v_input_last_frame.png")

+

+def aspect_ratio_resize(image, pipeline, max_area=720 * 1280):

+ aspect_ratio = image.height / image.width

+ mod_value = pipeline.vae_scale_factor_spatial * pipeline.transformer.config.patch_size[1]

+ height = round(np.sqrt(max_area * aspect_ratio)) // mod_value * mod_value

+ width = round(np.sqrt(max_area / aspect_ratio)) // mod_value * mod_value

+ image = image.resize((width, height))

+ return image, height, width

+

+def center_crop_resize(image, height, width):

+ # Calculate resize ratio to match first frame dimensions

+ resize_ratio = max(width / image.width, height / image.height)

+

+ # Resize the image

+ width = round(image.width * resize_ratio)

+ height = round(image.height * resize_ratio)

+ size = [width, height]

+ image = TF.center_crop(image, size)

+

+ return image, height, width

+

+first_frame, height, width = aspect_ratio_resize(first_frame, pipeline)

+if last_frame.size != first_frame.size:

+ last_frame, _, _ = center_crop_resize(last_frame, height, width)

+

+prompt = "CG animation style, a small blue bird takes off from the ground, flapping its wings. The bird's feathers are delicate, with a unique pattern on its chest. The background shows a blue sky with white clouds under bright sunshine. The camera follows the bird upward, capturing its flight and the vastness of the sky from a close-up, low-angle perspective."

+

+output = pipeline(

+ image=first_frame, last_image=last_frame, prompt=prompt, height=height, width=width, guidance_scale=5.0

+).frames[0]

+export_to_video(output, "output.mp4", fps=24, quality=8)

+```

+

+

+

+

+

+### Video-to-Video Generation

+

+

+

+

+`SkyReelsV2DiffusionForcingVideoToVideoPipeline` extends a given video.

+

+```python

+import numpy as np

+import torch

+import torchvision.transforms.functional as TF

+from diffusers import AutoencoderKLWan, SkyReelsV2DiffusionForcingVideoToVideoPipeline, UniPCMultistepScheduler

+from diffusers.utils import export_to_video, load_video

+

+

+model_id = "Skywork/SkyReels-V2-DF-14B-540P-Diffusers"

+vae = AutoencoderKLWan.from_pretrained(model_id, subfolder="vae", torch_dtype=torch.float32)

+pipeline = SkyReelsV2DiffusionForcingVideoToVideoPipeline.from_pretrained(

+ model_id, vae=vae, torch_dtype=torch.bfloat16

+)

+flow_shift = 5.0 # 8.0 for T2V, 5.0 for I2V

+pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config, flow_shift=flow_shift)

+pipeline.to("cuda")

+

+video = load_video("input_video.mp4")

+

+prompt = "CG animation style, a small blue bird takes off from the ground, flapping its wings. The bird's feathers are delicate, with a unique pattern on its chest. The background shows a blue sky with white clouds under bright sunshine. The camera follows the bird upward, capturing its flight and the vastness of the sky from a close-up, low-angle perspective."

+

+output = pipeline(

+ video=video, prompt=prompt, height=544, width=960, guidance_scale=5.0,

+ num_inference_steps=30, num_frames=257, base_num_frames=97#, ar_step=5, causal_block_size=5,

+).frames[0]

+export_to_video(output, "output.mp4", fps=24, quality=8)

+# Total frames will be the number of frames of given video + 257

+```

+

+

+

+

+

+## Notes

+

+- SkyReels-V2 supports LoRAs with [`~loaders.SkyReelsV2LoraLoaderMixin.load_lora_weights`].

+

+

+ Show example code

+

+ ```py

+ # pip install ftfy

+ import torch

+ from diffusers import AutoModel, SkyReelsV2DiffusionForcingPipeline

+ from diffusers.utils import export_to_video

+

+ vae = AutoModel.from_pretrained(

+ "Skywork/SkyReels-V2-DF-1.3B-540P-Diffusers", subfolder="vae", torch_dtype=torch.float32

+ )

+ pipeline = SkyReelsV2DiffusionForcingPipeline.from_pretrained(

+ "Skywork/SkyReels-V2-DF-1.3B-540P-Diffusers", vae=vae, torch_dtype=torch.bfloat16

+ )

+ pipeline.to("cuda")

+

+ pipeline.load_lora_weights("benjamin-paine/steamboat-willie-1.3b", adapter_name="steamboat-willie")

+ pipeline.set_adapters("steamboat-willie")

+

+ pipeline.enable_model_cpu_offload()

+

+ # use "steamboat willie style" to trigger the LoRA

+ prompt = """

+ steamboat willie style, golden era animation, The camera rushes from far to near in a low-angle shot,

+ revealing a white ferret on a log. It plays, leaps into the water, and emerges, as the camera zooms in

+ for a close-up. Water splashes berry bushes nearby, while moss, snow, and leaves blanket the ground.

+ Birch trees and a light blue sky frame the scene, with ferns in the foreground. Side lighting casts dynamic

+ shadows and warm highlights. Medium composition, front view, low angle, with depth of field.

+ """

+

+ output = pipeline(

+ prompt=prompt,

+ num_frames=97,

+ guidance_scale=6.0,

+ ).frames[0]

+ export_to_video(output, "output.mp4", fps=24)

+ ```

+

+

+

+

+## SkyReelsV2DiffusionForcingPipeline

+

+[[autodoc]] SkyReelsV2DiffusionForcingPipeline

+ - all

+ - __call__

+

+## SkyReelsV2DiffusionForcingImageToVideoPipeline

+

+[[autodoc]] SkyReelsV2DiffusionForcingImageToVideoPipeline

+ - all

+ - __call__

+

+## SkyReelsV2DiffusionForcingVideoToVideoPipeline

+

+[[autodoc]] SkyReelsV2DiffusionForcingVideoToVideoPipeline

+ - all

+ - __call__

+

+## SkyReelsV2Pipeline

+

+[[autodoc]] SkyReelsV2Pipeline

+ - all

+ - __call__

+

+## SkyReelsV2ImageToVideoPipeline

+

+[[autodoc]] SkyReelsV2ImageToVideoPipeline

+ - all

+ - __call__

+

+## SkyReelsV2PipelineOutput

+

+[[autodoc]] pipelines.skyreels_v2.pipeline_output.SkyReelsV2PipelineOutput

\ No newline at end of file

diff --git a/docs/source/en/api/pipelines/stable_audio.md b/docs/source/en/api/pipelines/stable_audio.md

index 3f689ba0ad..82763a52a9 100644

--- a/docs/source/en/api/pipelines/stable_audio.md

+++ b/docs/source/en/api/pipelines/stable_audio.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# GLIGEN (Grounded Language-to-Image Generation)

The GLIGEN model was created by researchers and engineers from [University of Wisconsin-Madison, Columbia University, and Microsoft](https://github.com/gligen/GLIGEN). The [`StableDiffusionGLIGENPipeline`] and [`StableDiffusionGLIGENTextImagePipeline`] can generate photorealistic images conditioned on grounding inputs. Along with text and bounding boxes with [`StableDiffusionGLIGENPipeline`], if input images are given, [`StableDiffusionGLIGENTextImagePipeline`] can insert objects described by text at the region defined by bounding boxes. Otherwise, it'll generate an image described by the caption/prompt and insert objects described by text at the region defined by bounding boxes. It's trained on COCO2014D and COCO2014CD datasets, and the model uses a frozen CLIP ViT-L/14 text encoder to condition itself on grounding inputs.

diff --git a/docs/source/en/api/pipelines/stable_diffusion/image_variation.md b/docs/source/en/api/pipelines/stable_diffusion/image_variation.md

index 57dd2f0d5b..7a50971fdf 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/image_variation.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/image_variation.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# K-Diffusion

[k-diffusion](https://github.com/crowsonkb/k-diffusion) is a popular library created by [Katherine Crowson](https://github.com/crowsonkb/). We provide `StableDiffusionKDiffusionPipeline` and `StableDiffusionXLKDiffusionPipeline` that allow you to run Stable DIffusion with samplers from k-diffusion.

diff --git a/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md b/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md

index 9abccd6e13..d5a15cb002 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Text-to-(RGB, depth)

diff --git a/docs/source/en/api/pipelines/stable_diffusion/overview.md b/docs/source/en/api/pipelines/stable_diffusion/overview.md

index 2598409121..7e6e16c347 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/overview.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/overview.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Safe Stable Diffusion

Safe Stable Diffusion was proposed in [Safe Latent Diffusion: Mitigating Inappropriate Degeneration in Diffusion Models](https://huggingface.co/papers/2211.05105) and mitigates inappropriate degeneration from Stable Diffusion models because they're trained on unfiltered web-crawled datasets. For instance Stable Diffusion may unexpectedly generate nudity, violence, images depicting self-harm, and otherwise offensive content. Safe Stable Diffusion is an extension of Stable Diffusion that drastically reduces this type of content.

diff --git a/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md b/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md

index bf7ce9d793..30e4379066 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md

@@ -1,4 +1,4 @@

-

-

-

-🧪 This pipeline is for research purposes only.

-

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

# Text-to-video

diff --git a/docs/source/en/api/pipelines/text_to_video_zero.md b/docs/source/en/api/pipelines/text_to_video_zero.md

index a84ce0be11..5fe3789d82 100644

--- a/docs/source/en/api/pipelines/text_to_video_zero.md

+++ b/docs/source/en/api/pipelines/text_to_video_zero.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Text2Video-Zero

diff --git a/docs/source/en/api/pipelines/unclip.md b/docs/source/en/api/pipelines/unclip.md

index 943cebdb28..8011a4b533 100644

--- a/docs/source/en/api/pipelines/unclip.md

+++ b/docs/source/en/api/pipelines/unclip.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# unCLIP

[Hierarchical Text-Conditional Image Generation with CLIP Latents](https://huggingface.co/papers/2204.06125) is by Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, Mark Chen. The unCLIP model in 🤗 Diffusers comes from kakaobrain's [karlo](https://github.com/kakaobrain/karlo).

diff --git a/docs/source/en/api/pipelines/unidiffuser.md b/docs/source/en/api/pipelines/unidiffuser.md

index 802aefea6b..7d767f2db5 100644

--- a/docs/source/en/api/pipelines/unidiffuser.md

+++ b/docs/source/en/api/pipelines/unidiffuser.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# UniDiffuser

diff --git a/docs/source/en/api/pipelines/value_guided_sampling.md b/docs/source/en/api/pipelines/value_guided_sampling.md

index 5aaee9090c..797847ee47 100644

--- a/docs/source/en/api/pipelines/value_guided_sampling.md

+++ b/docs/source/en/api/pipelines/value_guided_sampling.md

@@ -1,4 +1,4 @@

-

+

+# AutoPipelineBlocks

+

+

+

+🧪 **Experimental Feature**: Modular Diffusers is an experimental feature we are actively developing. The API may be subject to breaking changes.

+

+

+

+`AutoPipelineBlocks` is a subclass of `ModularPipelineBlocks`. It is a multi-block that automatically selects which sub-blocks to run based on the inputs provided at runtime, creating conditional workflows that adapt to different scenarios. The main purpose is convenience and portability - for developers, you can package everything into one workflow, making it easier to share and use.

+

+In this tutorial, we will show you how to create an `AutoPipelineBlocks` and learn more about how the conditional selection works.

+

+

+

+Other types of multi-blocks include [SequentialPipelineBlocks](sequential_pipeline_blocks.md) (for linear workflows) and [LoopSequentialPipelineBlocks](loop_sequential_pipeline_blocks.md) (for iterative workflows). For information on creating individual blocks, see the [PipelineBlock guide](pipeline_block.md).

+

+Additionally, like all `ModularPipelineBlocks`, `AutoPipelineBlocks` are definitions/specifications, not runnable pipelines. You need to convert them into a `ModularPipeline` to actually execute them. For information on creating and running pipelines, see the [Modular Pipeline guide](modular_pipeline.md).

+

+

+

+For example, you might want to support text-to-image and image-to-image tasks. Instead of creating two separate pipelines, you can create an `AutoPipelineBlocks` that automatically chooses the workflow based on whether an `image` input is provided.

+

+Let's see an example. We'll use the helper function from the [PipelineBlock guide](./pipeline_block.md) to create our blocks:

+

+**Helper Function**

+

+```py

+from diffusers.modular_pipelines import PipelineBlock, InputParam, OutputParam

+import torch

+

+def make_block(inputs=[], intermediate_inputs=[], intermediate_outputs=[], block_fn=None, description=None):

+ class TestBlock(PipelineBlock):

+ model_name = "test"

+

+ @property

+ def inputs(self):

+ return inputs

+

+ @property

+ def intermediate_inputs(self):

+ return intermediate_inputs

+

+ @property

+ def intermediate_outputs(self):

+ return intermediate_outputs

+

+ @property

+ def description(self):

+ return description if description is not None else ""

+

+ def __call__(self, components, state):

+ block_state = self.get_block_state(state)

+ if block_fn is not None:

+ block_state = block_fn(block_state, state)

+ self.set_block_state(state, block_state)

+ return components, state

+

+ return TestBlock

+```

+

+Now let's create a dummy `AutoPipelineBlocks` that includes dummy text-to-image, image-to-image, and inpaint pipelines.

+

+

+```py

+from diffusers.modular_pipelines import AutoPipelineBlocks

+

+# These are dummy blocks and we only focus on "inputs" for our purpose

+inputs = [InputParam(name="prompt")]

+# block_fn prints out which workflow is running so we can see the execution order at runtime

+block_fn = lambda x, y: print("running the text-to-image workflow")

+block_t2i_cls = make_block(inputs=inputs, block_fn=block_fn, description="I'm a text-to-image workflow!")

+

+inputs = [InputParam(name="prompt"), InputParam(name="image")]

+block_fn = lambda x, y: print("running the image-to-image workflow")

+block_i2i_cls = make_block(inputs=inputs, block_fn=block_fn, description="I'm a image-to-image workflow!")

+

+inputs = [InputParam(name="prompt"), InputParam(name="image"), InputParam(name="mask")]

+block_fn = lambda x, y: print("running the inpaint workflow")

+block_inpaint_cls = make_block(inputs=inputs, block_fn=block_fn, description="I'm a inpaint workflow!")

+

+class AutoImageBlocks(AutoPipelineBlocks):

+ # List of sub-block classes to choose from

+ block_classes = [block_inpaint_cls, block_i2i_cls, block_t2i_cls]

+ # Names for each block in the same order

+ block_names = ["inpaint", "img2img", "text2img"]

+ # Trigger inputs that determine which block to run

+ # - "mask" triggers inpaint workflow

+ # - "image" triggers img2img workflow (but only if mask is not provided)

+ # - if none of above, runs the text2img workflow (default)

+ block_trigger_inputs = ["mask", "image", None]

+ # Description is extremely important for AutoPipelineBlocks

+ @property

+ def description(self):

+ return (

+ "Pipeline generates images given different types of conditions!\n"

+ + "This is an auto pipeline block that works for text2img, img2img and inpainting tasks.\n"

+ + " - inpaint workflow is run when `mask` is provided.\n"

+ + " - img2img workflow is run when `image` is provided (but only when `mask` is not provided).\n"

+ + " - text2img workflow is run when neither `image` nor `mask` is provided.\n"

+ )

+

+# Create the blocks

+auto_blocks = AutoImageBlocks()

+# convert to pipeline

+auto_pipeline = auto_blocks.init_pipeline()

+```

+

+Now we have created an `AutoPipelineBlocks` that contains 3 sub-blocks. Notice the warning message at the top - this automatically appears in every `ModularPipelineBlocks` that contains `AutoPipelineBlocks` to remind end users that dynamic block selection happens at runtime.

+

+```py

+AutoImageBlocks(

+ Class: AutoPipelineBlocks

+

+ ====================================================================================================

+ This pipeline contains blocks that are selected at runtime based on inputs.

+ Trigger Inputs: ['mask', 'image']

+ ====================================================================================================

+

+

+ Description: Pipeline generates images given different types of conditions!

+ This is an auto pipeline block that works for text2img, img2img and inpainting tasks.

+ - inpaint workflow is run when `mask` is provided.

+ - img2img workflow is run when `image` is provided (but only when `mask` is not provided).

+ - text2img workflow is run when neither `image` nor `mask` is provided.

+

+

+

+ Sub-Blocks:

+ • inpaint [trigger: mask] (TestBlock)

+ Description: I'm a inpaint workflow!

+

+ • img2img [trigger: image] (TestBlock)

+ Description: I'm a image-to-image workflow!

+

+ • text2img [default] (TestBlock)

+ Description: I'm a text-to-image workflow!

+

+)

+```

+

+Check out the documentation with `print(auto_pipeline.doc)`:

+

+```py

+>>> print(auto_pipeline.doc)

+class AutoImageBlocks

+

+ Pipeline generates images given different types of conditions!

+ This is an auto pipeline block that works for text2img, img2img and inpainting tasks.

+ - inpaint workflow is run when `mask` is provided.

+ - img2img workflow is run when `image` is provided (but only when `mask` is not provided).

+ - text2img workflow is run when neither `image` nor `mask` is provided.

+

+ Inputs:

+

+ prompt (`None`, *optional*):

+

+ image (`None`, *optional*):

+

+ mask (`None`, *optional*):

+```

+

+There is a fundamental trade-off of AutoPipelineBlocks: it trades clarity for convenience. While it is really easy for packaging multiple workflows, it can become confusing without proper documentation. e.g. if we just throw a pipeline at you and tell you that it contains 3 sub-blocks and takes 3 inputs `prompt`, `image` and `mask`, and ask you to run an image-to-image workflow: if you don't have any prior knowledge on how these pipelines work, you would be pretty clueless, right?

+

+This pipeline we just made though, has a docstring that shows all available inputs and workflows and explains how to use each with different inputs. So it's really helpful for users. For example, it's clear that you need to pass `image` to run img2img. This is why the description field is absolutely critical for AutoPipelineBlocks. We highly recommend you to explain the conditional logic very well for each `AutoPipelineBlocks` you would make. We also recommend to always test individual pipelines first before packaging them into AutoPipelineBlocks.

+

+Let's run this auto pipeline with different inputs to see if the conditional logic works as described. Remember that we have added `print` in each `PipelineBlock`'s `__call__` method to print out its workflow name, so it should be easy to tell which one is running:

+

+```py

+>>> _ = auto_pipeline(image="image", mask="mask")

+running the inpaint workflow

+>>> _ = auto_pipeline(image="image")

+running the image-to-image workflow

+>>> _ = auto_pipeline(prompt="prompt")

+running the text-to-image workflow

+>>> _ = auto_pipeline(image="prompt", mask="mask")

+running the inpaint workflow

+```

+

+However, even with documentation, it can become very confusing when AutoPipelineBlocks are combined with other blocks. The complexity grows quickly when you have nested AutoPipelineBlocks or use them as sub-blocks in larger pipelines.

+

+Let's make another `AutoPipelineBlocks` - this one only contains one block, and it does not include `None` in its `block_trigger_inputs` (which corresponds to the default block to run when none of the trigger inputs are provided). This means this block will be skipped if the trigger input (`ip_adapter_image`) is not provided at runtime.

+

+```py

+from diffusers.modular_pipelines import SequentialPipelineBlocks, InsertableDict

+inputs = [InputParam(name="ip_adapter_image")]

+block_fn = lambda x, y: print("running the ip-adapter workflow")

+block_ipa_cls = make_block(inputs=inputs, block_fn=block_fn, description="I'm a IP-adapter workflow!")

+

+class AutoIPAdapter(AutoPipelineBlocks):

+ block_classes = [block_ipa_cls]

+ block_names = ["ip-adapter"]

+ block_trigger_inputs = ["ip_adapter_image"]

+ @property

+ def description(self):

+ return "Run IP Adapter step if `ip_adapter_image` is provided."

+```

+

+Now let's combine these 2 auto blocks together into a `SequentialPipelineBlocks`:

+

+```py

+auto_ipa_blocks = AutoIPAdapter()

+blocks_dict = InsertableDict()

+blocks_dict["ip-adapter"] = auto_ipa_blocks

+blocks_dict["image-generation"] = auto_blocks

+all_blocks = SequentialPipelineBlocks.from_blocks_dict(blocks_dict)

+pipeline = all_blocks.init_pipeline()

+```

+

+Let's take a look: now things get more confusing. In this particular example, you could still try to explain the conditional logic in the `description` field here - there are only 4 possible execution paths so it's doable. However, since this is a `SequentialPipelineBlocks` that could contain many more blocks, the complexity can quickly get out of hand as the number of blocks increases.

+

+```py

+>>> all_blocks

+SequentialPipelineBlocks(

+ Class: ModularPipelineBlocks

+

+ ====================================================================================================

+ This pipeline contains blocks that are selected at runtime based on inputs.

+ Trigger Inputs: ['image', 'mask', 'ip_adapter_image']

+ Use `get_execution_blocks()` with input names to see selected blocks (e.g. `get_execution_blocks('image')`).

+ ====================================================================================================

+

+

+ Description:

+

+

+ Sub-Blocks:

+ [0] ip-adapter (AutoIPAdapter)

+ Description: Run IP Adapter step if `ip_adapter_image` is provided.

+

+

+ [1] image-generation (AutoImageBlocks)

+ Description: Pipeline generates images given different types of conditions!

+ This is an auto pipeline block that works for text2img, img2img and inpainting tasks.

+ - inpaint workflow is run when `mask` is provided.

+ - img2img workflow is run when `image` is provided (but only when `mask` is not provided).

+ - text2img workflow is run when neither `image` nor `mask` is provided.

+

+

+)

+

+```

+

+This is when the `get_execution_blocks()` method comes in handy - it basically extracts a `SequentialPipelineBlocks` that only contains the blocks that are actually run based on your inputs.

+

+Let's try some examples:

+

+`mask`: we expect it to skip the first ip-adapter since `ip_adapter_image` is not provided, and then run the inpaint for the second block.

+

+```py

+>>> all_blocks.get_execution_blocks('mask')

+SequentialPipelineBlocks(

+ Class: ModularPipelineBlocks

+

+ Description:

+

+

+ Sub-Blocks:

+ [0] image-generation (TestBlock)

+ Description: I'm a inpaint workflow!

+

+)

+```

+

+Let's also actually run the pipeline to confirm:

+

+```py

+>>> _ = pipeline(mask="mask")

+skipping auto block: AutoIPAdapter

+running the inpaint workflow

+```

+

+Try a few more:

+

+```py

+print(f"inputs: ip_adapter_image:")

+blocks_select = all_blocks.get_execution_blocks('ip_adapter_image')

+print(f"expected_execution_blocks: {blocks_select}")

+print(f"actual execution blocks:")

+_ = pipeline(ip_adapter_image="ip_adapter_image", prompt="prompt")

+# expect to see ip-adapter + text2img

+

+print(f"inputs: image:")

+blocks_select = all_blocks.get_execution_blocks('image')

+print(f"expected_execution_blocks: {blocks_select}")

+print(f"actual execution blocks:")

+_ = pipeline(image="image", prompt="prompt")

+# expect to see img2img

+

+print(f"inputs: prompt:")

+blocks_select = all_blocks.get_execution_blocks('prompt')

+print(f"expected_execution_blocks: {blocks_select}")

+print(f"actual execution blocks:")

+_ = pipeline(prompt="prompt")

+# expect to see text2img (prompt is not a trigger input so fallback to default)

+

+print(f"inputs: mask + ip_adapter_image:")

+blocks_select = all_blocks.get_execution_blocks('mask','ip_adapter_image')

+print(f"expected_execution_blocks: {blocks_select}")

+print(f"actual execution blocks:")

+_ = pipeline(mask="mask", ip_adapter_image="ip_adapter_image")

+# expect to see ip-adapter + inpaint

+```

+

+In summary, `AutoPipelineBlocks` is a good tool for packaging multiple workflows into a single, convenient interface and it can greatly simplify the user experience. However, always provide clear descriptions explaining the conditional logic, test individual pipelines first before combining them, and use `get_execution_blocks()` to understand runtime behavior in complex compositions.

\ No newline at end of file

diff --git a/docs/source/en/modular_diffusers/components_manager.md b/docs/source/en/modular_diffusers/components_manager.md

new file mode 100644

index 0000000000..15b6c66b9b

--- /dev/null

+++ b/docs/source/en/modular_diffusers/components_manager.md

@@ -0,0 +1,514 @@

+

+

+# Components Manager

+

+

+

+🧪 **Experimental Feature**: This is an experimental feature we are actively developing. The API may be subject to breaking changes.

+

+

+

+The Components Manager is a central model registry and management system in diffusers. It lets you add models then reuse them across multiple pipelines and workflows. It tracks all models in one place with useful metadata such as model size, device placement and loaded adapters (LoRA, IP-Adapter). It has mechanisms in place to prevent duplicate model instances, enables memory-efficient sharing. Most significantly, it offers offloading that works across pipelines — unlike regular DiffusionPipeline offloading (i.e. `enable_model_cpu_offload` and `enable_sequential_cpu_offload`) which is limited to one pipeline with predefined sequences, the Components Manager automatically manages your device memory across all your models and workflows.

+

+

+## Basic Operations

+

+Let's start with the most basic operations. First, create a Components Manager:

+

+```py

+from diffusers import ComponentsManager

+comp = ComponentsManager()

+```

+

+Use the `add(name, component)` method to register a component. It returns a unique ID that combines the component name with the object's unique identifier (using Python's `id()` function):

+

+```py

+from diffusers import AutoModel

+text_encoder = AutoModel.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder")

+# Returns component_id like 'text_encoder_139917733042864'

+component_id = comp.add("text_encoder", text_encoder)

+```

+

+You can view all registered components and their metadata:

+

+```py

+>>> comp

+Components:

+===============================================================================================================================================

+Models:

+-----------------------------------------------------------------------------------------------------------------------------------------------

+Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection

+-----------------------------------------------------------------------------------------------------------------------------------------------

+text_encoder_139917733042864 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A

+-----------------------------------------------------------------------------------------------------------------------------------------------

+

+Additional Component Info:

+==================================================

+```

+

+And remove components using their unique ID:

+

+```py

+comp.remove("text_encoder_139917733042864")

+```

+

+## Duplicate Detection

+

+The Components Manager automatically detects and prevents duplicate model instances to save memory and avoid confusion. Let's walk through how this works in practice.

+

+When you try to add the same object twice, the manager will warn you and return the existing ID:

+

+```py

+>>> comp.add("text_encoder", text_encoder)

+'text_encoder_139917733042864'

+>>> comp.add("text_encoder", text_encoder)

+ComponentsManager: component 'text_encoder' already exists as 'text_encoder_139917733042864'

+'text_encoder_139917733042864'

+```

+

+Even if you add the same object under a different name, it will still be detected as a duplicate:

+

+```py

+>>> comp.add("clip", text_encoder)

+ComponentsManager: adding component 'clip' as 'clip_139917733042864', but it is duplicate of 'text_encoder_139917733042864'

+To remove a duplicate, call `components_manager.remove('

')`.

+'clip_139917733042864'

+```

+

+However, there's a more subtle case where duplicate detection becomes tricky. When you load the same model into different objects, the manager can't detect duplicates unless you use `ComponentSpec`. For example:

+

+```py

+>>> text_encoder_2 = AutoModel.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder")

+>>> comp.add("text_encoder", text_encoder_2)

+'text_encoder_139917732983664'

+```

+

+This creates a problem - you now have two copies of the same model consuming double the memory:

+

+```py

+>>> comp

+Components:

+===============================================================================================================================================

+Models:

+-----------------------------------------------------------------------------------------------------------------------------------------------

+Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection

+-----------------------------------------------------------------------------------------------------------------------------------------------

+text_encoder_139917733042864 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A

+clip_139917733042864 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A

+text_encoder_139917732983664 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A

+-----------------------------------------------------------------------------------------------------------------------------------------------

+

+Additional Component Info:

+==================================================

+```

+

+We recommend using `ComponentSpec` to load your models. Models loaded with `ComponentSpec` get tagged with a unique ID that encodes their loading parameters, allowing the Components Manager to detect when different objects represent the same underlying checkpoint:

+

+```py

+from diffusers import ComponentSpec, ComponentsManager

+from transformers import CLIPTextModel

+comp = ComponentsManager()

+

+# Create ComponentSpec for the first text encoder

+spec = ComponentSpec(name="text_encoder", repo="stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder", type_hint=AutoModel)

+# Create ComponentSpec for a duplicate text encoder (it is same checkpoint, from same repo/subfolder)

+spec_duplicated = ComponentSpec(name="text_encoder_duplicated", repo="stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder", type_hint=CLIPTextModel)

+

+# Load and add both components - the manager will detect they're the same model

+comp.add("text_encoder", spec.load())

+comp.add("text_encoder_duplicated", spec_duplicated.load())

+```

+

+Now the manager detects the duplicate and warns you:

+

+```out

+ComponentsManager: adding component 'text_encoder_duplicated_139917580682672', but it has duplicate load_id 'stabilityai/stable-diffusion-xl-base-1.0|text_encoder|null|null' with existing components: text_encoder_139918506246832. To remove a duplicate, call `components_manager.remove('')`.

+'text_encoder_duplicated_139917580682672'

+```

+

+Both models now show the same `load_id`, making it clear they're the same model:

+

+```py

+>>> comp

+Components:

+======================================================================================================================================================================================================

+Models:

+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection

+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+text_encoder_139918506246832 | CLIPTextModel | cpu | torch.float32 | 0.46 | stabilityai/stable-diffusion-xl-base-1.0|text_encoder|null|null | N/A

+text_encoder_duplicated_139917580682672 | CLIPTextModel | cpu | torch.float32 | 0.46 | stabilityai/stable-diffusion-xl-base-1.0|text_encoder|null|null | N/A

+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+

+Additional Component Info:

+==================================================

+```

+

+## Collections

+

+Collections are labels you can assign to components for better organization and management. You add a component under a collection by passing the `collection=` parameter when you add the component to the manager, i.e. `add(name, component, collection=...)`. Within each collection, only one component per name is allowed - if you add a second component with the same name, the first one is automatically removed.

+

+Here's how collections work in practice:

+

+```py

+comp = ComponentsManager()

+# Create ComponentSpec for the first UNet (SDXL base)

+spec = ComponentSpec(name="unet", repo="stabilityai/stable-diffusion-xl-base-1.0", subfolder="unet", type_hint=AutoModel)

+# Create ComponentSpec for a different UNet (Juggernaut-XL)

+spec2 = ComponentSpec(name="unet", repo="RunDiffusion/Juggernaut-XL-v9", subfolder="unet", type_hint=AutoModel, variant="fp16")

+

+# Add both UNets to the same collection - the second one will replace the first

+comp.add("unet", spec.load(), collection="sdxl")

+comp.add("unet", spec2.load(), collection="sdxl")

+```

+

+The manager automatically removes the old UNet and adds the new one:

+

+```out

+ComponentsManager: removing existing unet from collection 'sdxl': unet_139917723891888

+'unet_139917723893136'

+```

+

+Only one UNet remains in the collection:

+

+```py

+>>> comp

+Components:

+====================================================================================================================================================================

+Models:

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------

+Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------

+unet_139917723893136 | UNet2DConditionModel | cpu | torch.float32 | 9.56 | RunDiffusion/Juggernaut-XL-v9|unet|fp16|null | sdxl

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------

+

+Additional Component Info:

+==================================================

+```

+

+For example, in node-based systems, you can mark all models loaded from one node with the same collection label, automatically replace models when user loads new checkpoints under same name, batch delete all models in a collection when a node is removed.

+

+## Retrieving Components

+

+The Components Manager provides several methods to retrieve registered components.

+

+The `get_one()` method returns a single component and supports pattern matching for the `name` parameter. You can use:

+- exact matches like `comp.get_one(name="unet")`

+- wildcards like `comp.get_one(name="unet*")` for components starting with "unet"

+- exclusion patterns like `comp.get_one(name="!unet")` to exclude components named "unet"

+- OR patterns like `comp.get_one(name="unet|vae")` to match either "unet" OR "vae".

+

+Optionally, You can add collection and load_id as filters e.g. `comp.get_one(name="unet", collection="sdxl")`. If multiple components match, `get_one()` throws an error.

+

+Another useful method is `get_components_by_names()`, which takes a list of names and returns a dictionary mapping names to components. This is particularly helpful with modular pipelines since they provide lists of required component names, and the returned dictionary can be directly passed to `pipeline.update_components()`.

+

+```py

+# Get components by name list

+component_dict = comp.get_components_by_names(names=["text_encoder", "unet", "vae"])

+# Returns: {"text_encoder": component1, "unet": component2, "vae": component3}

+```

+

+## Using Components Manager with Modular Pipelines

+

+The Components Manager integrates seamlessly with Modular Pipelines. All you need to do is pass a Components Manager instance to `from_pretrained()` or `init_pipeline()` with an optional `collection` parameter:

+

+```py

+from diffusers import ModularPipeline, ComponentsManager

+comp = ComponentsManager()

+pipe = ModularPipeline.from_pretrained("YiYiXu/modular-demo-auto", components_manager=comp, collection="test1")

+```

+

+By default, modular pipelines don't load components immediately, so both the pipeline and Components Manager start empty:

+

+```py

+>>> comp

+Components:

+==================================================

+No components registered.

+==================================================

+```

+

+When you load components on the pipeline, they are automatically registered in the Components Manager:

+

+```py

+>>> pipe.load_components(names="unet")

+>>> comp

+Components:

+==============================================================================================================================================================

+Models:

+--------------------------------------------------------------------------------------------------------------------------------------------------------------

+Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection

+--------------------------------------------------------------------------------------------------------------------------------------------------------------

+unet_139917726686304 | UNet2DConditionModel | cpu | torch.float32 | 9.56 | SG161222/RealVisXL_V4.0|unet|null|null | test1

+--------------------------------------------------------------------------------------------------------------------------------------------------------------

+

+Additional Component Info:

+==================================================

+```

+

+Now let's load all default components and then create a second pipeline that reuses all components from the first one. We pass the same Components Manager to the second pipeline but with a different collection:

+

+```py

+# Load all default components

+>>> pipe.load_default_components()

+

+# Create a second pipeline using the same Components Manager but with a different collection

+>>> pipe2 = ModularPipeline.from_pretrained("YiYiXu/modular-demo-auto", components_manager=comp, collection="test2")

+```

+

+As mentioned earlier, `ModularPipeline` has a property `null_component_names` that returns a list of component names it needs to load. We can conveniently use this list with the `get_components_by_names` method on the Components Manager:

+

+```py

+# Get the list of components that pipe2 needs to load

+>>> pipe2.null_component_names

+['text_encoder', 'text_encoder_2', 'tokenizer', 'tokenizer_2', 'image_encoder', 'unet', 'vae', 'scheduler', 'controlnet']

+

+# Retrieve all required components from the Components Manager

+>>> comp_dict = comp.get_components_by_names(names=pipe2.null_component_names)

+

+# Update the pipeline with the retrieved components

+>>> pipe2.update_components(**comp_dict)

+```

+

+The warnings that follow are expected and indicate that the Components Manager is correctly identifying that these components already exist and will be reused rather than creating duplicates:

+

+```out

+ComponentsManager: component 'text_encoder' already exists as 'text_encoder_139917586016400'

+ComponentsManager: component 'text_encoder_2' already exists as 'text_encoder_2_139917699973424'

+ComponentsManager: component 'tokenizer' already exists as 'tokenizer_139917580599504'

+ComponentsManager: component 'tokenizer_2' already exists as 'tokenizer_2_139915763443904'

+ComponentsManager: component 'image_encoder' already exists as 'image_encoder_139917722468304'

+ComponentsManager: component 'unet' already exists as 'unet_139917580609632'

+ComponentsManager: component 'vae' already exists as 'vae_139917722459040'

+ComponentsManager: component 'scheduler' already exists as 'scheduler_139916266559408'

+ComponentsManager: component 'controlnet' already exists as 'controlnet_139917722454432'

+```

+

+

+The pipeline is now fully loaded:

+

+```py

+# null_component_names return empty list, meaning everything are loaded

+>>> pipe2.null_component_names

+[]

+```

+

+No new components were added to the Components Manager - we're reusing everything. All models are now associated with both `test1` and `test2` collections, showing that these components are shared across multiple pipelines:

+```py

+>>> comp

+Components:

+========================================================================================================================================================================================

+Models:

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+text_encoder_139917586016400 | CLIPTextModel | cpu | torch.float32 | 0.46 | SG161222/RealVisXL_V4.0|text_encoder|null|null | test1

+ | | | | | | test2

+text_encoder_2_139917699973424 | CLIPTextModelWithProjection | cpu | torch.float32 | 2.59 | SG161222/RealVisXL_V4.0|text_encoder_2|null|null | test1

+ | | | | | | test2

+unet_139917580609632 | UNet2DConditionModel | cpu | torch.float32 | 9.56 | SG161222/RealVisXL_V4.0|unet|null|null | test1

+ | | | | | | test2

+controlnet_139917722454432 | ControlNetModel | cpu | torch.float32 | 4.66 | diffusers/controlnet-canny-sdxl-1.0|null|null|null | test1

+ | | | | | | test2

+vae_139917722459040 | AutoencoderKL | cpu | torch.float32 | 0.31 | SG161222/RealVisXL_V4.0|vae|null|null | test1

+ | | | | | | test2

+image_encoder_139917722468304 | CLIPVisionModelWithProjection | cpu | torch.float32 | 6.87 | h94/IP-Adapter|sdxl_models/image_encoder|null|null | test1

+ | | | | | | test2

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+

+Other Components:

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+ID | Class | Collection

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+tokenizer_139917580599504 | CLIPTokenizer | test1

+ | | test2

+scheduler_139916266559408 | EulerDiscreteScheduler | test1

+ | | test2

+tokenizer_2_139915763443904 | CLIPTokenizer | test1

+ | | test2

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

+

+Additional Component Info:

+==================================================

+```

+

+

+## Automatic Memory Management

+

+The Components Manager provides a global offloading strategy across all models, regardless of which pipeline is using them:

+

+```py

+comp.enable_auto_cpu_offload(device="cuda")

+```

+

+When enabled, all models start on CPU. The manager moves models to the device right before they're used and moves other models back to CPU when GPU memory runs low. You can set your own rules for which models to offload first. This works smoothly as you add or remove components. Once it's on, you don't need to worry about device placement - you can focus on your workflow.

+

+

+

+## Practical Example: Building Modular Workflows with Component Reuse

+

+Now that we've covered the basics of the Components Manager, let's walk through a practical example that shows how to build workflows in a modular setting and use the Components Manager to reuse components across multiple pipelines. This example demonstrates the true power of Modular Diffusers by working with multiple pipelines that can share components.

+

+In this example, we'll generate latents from a text-to-image pipeline, then refine them with an image-to-image pipeline.

+

+Let's create a modular text-to-image workflow by separating it into three workflows: `text_blocks` for encoding prompts, `t2i_blocks` for generating latents, and `decoder_blocks` for creating final images.

+

+```py

+import torch

+from diffusers.modular_pipelines import SequentialPipelineBlocks

+from diffusers.modular_pipelines.stable_diffusion_xl import ALL_BLOCKS

+

+# Create modular blocks and separate text encoding and decoding steps

+t2i_blocks = SequentialPipelineBlocks.from_blocks_dict(ALL_BLOCKS["text2img"])

+text_blocks = t2i_blocks.sub_blocks.pop("text_encoder")

+decoder_blocks = t2i_blocks.sub_blocks.pop("decode")

+```

+

+Now we will convert them into runnalbe pipelines and set up the Components Manager with auto offloading and organize components under a "t2i" collection

+

+Since we now have 3 different workflows that share components, we create a separate pipeline that serves as a dedicated loader to load all the components, register them to the component manager, and then reuse them across different workflows.

+

+```py

+from diffusers import ComponentsManager, ModularPipeline

+

+# Set up Components Manager with auto offloading

+components = ComponentsManager()

+components.enable_auto_cpu_offload(device="cuda")

+

+# Create a new pipeline to load the components

+t2i_repo = "YiYiXu/modular-demo-auto"

+t2i_loader_pipe = ModularPipeline.from_pretrained(t2i_repo, components_manager=components, collection="t2i")

+

+# convert the 3 blocks into pipelines and attach the same components manager to all 3

+text_node = text_blocks.init_pipeline(t2i_repo, components_manager=components)

+decoder_node = decoder_blocks.init_pipeline(t2i_repo, components_manager=components)

+t2i_pipe = t2i_blocks.init_pipeline(t2i_repo, components_manager=components)

+```

+

+Load all components into the loader pipeline, they should all be automatically registered to Components Manager under the "t2i" collection:

+

+```py

+# Load all components (including IP-Adapter and ControlNet for later use)

+t2i_loader_pipe.load_default_components(torch_dtype=torch.float16)

+```

+

+Now distribute the loaded components to each pipeline:

+

+```py

+# Get VAE for decoder (using get_one since there's only one)

+vae = components.get_one(load_id="SG161222/RealVisXL_V4.0|vae|null|null")

+decoder_node.update_components(vae=vae)

+

+# Get text components for text node (using get_components_by_names for multiple components)

+text_components = components.get_components_by_names(text_node.null_component_names)

+text_node.update_components(**text_components)

+

+# Get remaining components for t2i pipeline

+t2i_components = components.get_components_by_names(t2i_pipe.null_component_names)

+t2i_pipe.update_components(**t2i_components)

+```

+

+Now we can generate images using our modular workflow:

+

+```py

+# Generate text embeddings

+prompt = "an astronaut"

+text_embeddings = text_node(prompt=prompt, output=["prompt_embeds","negative_prompt_embeds", "pooled_prompt_embeds", "negative_pooled_prompt_embeds"])

+

+# Generate latents and decode to image

+generator = torch.Generator(device="cuda").manual_seed(0)

+latents_t2i = t2i_pipe(**text_embeddings, num_inference_steps=25, generator=generator, output="latents")

+image = decoder_node(latents=latents_t2i, output="images")[0]

+image.save("modular_part2_t2i.png")

+```

+

+Let's add a LoRA:

+

+```py

+# Load LoRA weights

+>>> t2i_loader_pipe.load_lora_weights("CiroN2022/toy-face", weight_name="toy_face_sdxl.safetensors", adapter_name="toy_face")

+>>> components

+Components:

+============================================================================================================================================================

+...

+Additional Component Info:

+==================================================

+

+unet:

+ Adapters: ['toy_face']

+```

+

+You can see that the Components Manager tracks adapters metadata for all models it manages, and in our case, only Unet has lora loaded. This means we can reuse existing text embeddings.

+

+```py

+# Generate with LoRA (reusing existing text embeddings)

+generator = torch.Generator(device="cuda").manual_seed(0)

+latents_lora = t2i_pipe(**text_embeddings, num_inference_steps=25, generator=generator, output="latents")

+image = decoder_node(latents=latents_lora, output="images")[0]

+image.save("modular_part2_lora.png")

+```

+

+

+Now let's create a refiner pipeline that reuses components from our text-to-image workflow:

+

+```py

+# Create refiner blocks (removing image_encoder and decode since we work with latents)

+refiner_blocks = SequentialPipelineBlocks.from_blocks_dict(ALL_BLOCKS["img2img"])

+refiner_blocks.sub_blocks.pop("image_encoder")

+refiner_blocks.sub_blocks.pop("decode")

+

+# Create refiner pipeline with different repo and collection,

+# Attach the same component manager to it

+refiner_repo = "YiYiXu/modular_refiner"

+refiner_pipe = refiner_blocks.init_pipeline(refiner_repo, components_manager=components, collection="refiner")

+```

+

+We pass the **same Components Manager** (`components`) to the refiner pipeline, but with a **different collection** (`"refiner"`). This allows the refiner to access and reuse components from the "t2i" collection while organizing its own components (like the refiner UNet) under the "refiner" collection.

+

+```py

+# Load only the refiner UNet (different from t2i UNet)

+refiner_pipe.load_components(names="unet", torch_dtype=torch.float16)

+

+# Reuse components from t2i pipeline using pattern matching

+reuse_components = components.search_components("text_encoder_2|scheduler|vae|tokenizer_2")

+refiner_pipe.update_components(**reuse_components)

+```

+

+When we reuse components from the "t2i" collection, they automatically get added to the "refiner" collection as well. You can verify this by checking the Components Manager - you'll see components like `vae`, `scheduler`, etc. listed under both collections, indicating they're shared between workflows.

+

+Now we can refine any of our generated latents:

+

+```py

+# Refine all our different latents

+refined_latents = refiner_pipe(image_latents=latents_t2i, prompt=prompt, num_inference_steps=10, output="latents")

+refined_image = decoder_node(latents=refined_latents, output="images")[0]

+refined_image.save("modular_part2_t2i_refine_out.png")

+

+refined_latents = refiner_pipe(image_latents=latents_lora, prompt=prompt, num_inference_steps=10, output="latents")

+refined_image = decoder_node(latents=refined_latents, output="images")[0]

+refined_image.save("modular_part2_lora_refine_out.png")

+```

+

+

+Here are the results from our modular pipeline examples.

+

+#### Base Text-to-Image Generation

+| Base Text-to-Image | Base Text-to-Image (Refined) |

+|-------------------|------------------------------|

+|  |  |

+

+#### LoRA

+| LoRA | LoRA (Refined) |

+|-------------------|------------------------------|

+|  |  |

+

diff --git a/docs/source/en/modular_diffusers/end_to_end_guide.md b/docs/source/en/modular_diffusers/end_to_end_guide.md

new file mode 100644

index 0000000000..cb7b87552a

--- /dev/null

+++ b/docs/source/en/modular_diffusers/end_to_end_guide.md

@@ -0,0 +1,648 @@

+

+

+# End-to-End Developer Guide: Building with Modular Diffusers

+

+

+

+🧪 **Experimental Feature**: Modular Diffusers is an experimental feature we are actively developing. The API may be subject to breaking changes.

+

+

+

+

+In this tutorial we will walk through the process of adding a new pipeline to the modular framework using differential diffusion as our example. We'll cover the complete workflow from implementation to deployment: implementing the new pipeline, ensuring compatibility with existing tools, sharing the code on Hugging Face Hub, and deploying it as a UI node.

+

+We'll also demonstrate the 4-step framework process we use for implementing new basic pipelines in the modular system.

+

+1. **Start with an existing pipeline as a base**

+ - Identify which existing pipeline is most similar to the one you want to implement

+ - Determine what part of the pipeline needs modification

+

+2. **Build a working pipeline structure first**

+ - Assemble the complete pipeline structure

+ - Use existing blocks wherever possible

+ - For new blocks, create placeholders (e.g. you can copy from similar blocks and change the name) without implementing custom logic just yet

+

+3. **Set up an example**

+ - Create a simple inference script with expected inputs/outputs

+

+4. **Implement your custom logic and test incrementally**

+ - Add the custom logics the blocks you want to change

+ - Test incrementally, and inspect pipeline states and debug as needed

+

+Let's see how this works with the Differential Diffusion example.

+

+

+## Differential Diffusion Pipeline

+

+### Start with an existing pipeline

+

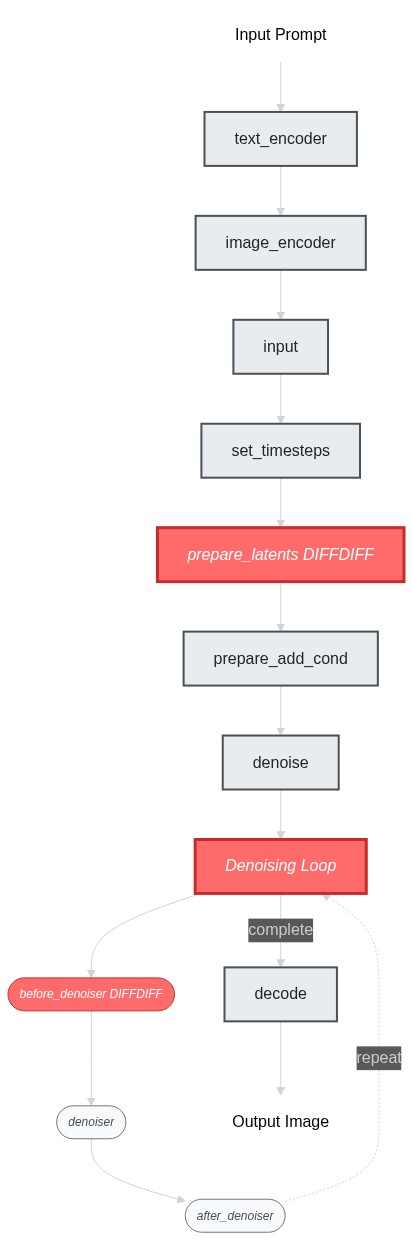

+Differential diffusion (https://differential-diffusion.github.io/) is an image-to-image workflow, so it makes sense for us to start with the preset of pipeline blocks used to build img2img pipeline (`IMAGE2IMAGE_BLOCKS`) and see how we can build this new pipeline with them.

+

+```py

+>>> from diffusers.modular_pipelines.stable_diffusion_xl import IMAGE2IMAGE_BLOCKS

+>>> IMAGE2IMAGE_BLOCKS = InsertableDict([

+... ("text_encoder", StableDiffusionXLTextEncoderStep),

+... ("image_encoder", StableDiffusionXLVaeEncoderStep),

+... ("input", StableDiffusionXLInputStep),

+... ("set_timesteps", StableDiffusionXLImg2ImgSetTimestepsStep),

+... ("prepare_latents", StableDiffusionXLImg2ImgPrepareLatentsStep),

+... ("prepare_add_cond", StableDiffusionXLImg2ImgPrepareAdditionalConditioningStep),

+... ("denoise", StableDiffusionXLDenoiseStep),

+... ("decode", StableDiffusionXLDecodeStep)

+... ])

+```

+

+Note that "denoise" (`StableDiffusionXLDenoiseStep`) is a `LoopSequentialPipelineBlocks` that contains 3 loop blocks (more on LoopSequentialPipelineBlocks [here](https://huggingface.co/docs/diffusers/modular_diffusers/write_own_pipeline_block#loopsequentialpipelineblocks))

+

+```py

+>>> denoise_blocks = IMAGE2IMAGE_BLOCKS["denoise"]()

+>>> print(denoise_blocks)

+```

+

+```out

+StableDiffusionXLDenoiseStep(

+ Class: StableDiffusionXLDenoiseLoopWrapper

+