diff --git a/docs/source/en/api/pipelines/controlnet_sdxl.md b/docs/source/en/api/pipelines/controlnet_sdxl.md
index 17312d135d..7a6b888b49 100644
--- a/docs/source/en/api/pipelines/controlnet_sdxl.md
+++ b/docs/source/en/api/pipelines/controlnet_sdxl.md
@@ -28,6 +28,124 @@ You can find some results below:
+## MultiControlNet
+
+You can compose multiple ControlNet conditionings from different image inputs to create a *MultiControlNet*. To get better results, it is often helpful to:
+
+1. mask conditionings so that they don't overlap (for example, mask the area of the canny image where the pose conditioning is located)
+2. experiment with the [`controlnet_conditioning_scale`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/controlnet#diffusers.StableDiffusionControlNetPipeline.__call__.controlnet_conditioning_scale) parameter to determine how much weight to assign to each conditioning input
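
In diffusers, each ControlNet's residuals are multiplied by its `controlnet_conditioning_scale`, and the scaled residuals from all ControlNets are summed before being added to the UNet's features. The list of scales therefore acts as a set of per-input weights. The NumPy sketch below shows only that weighted-sum arithmetic; `combine_residuals` and the toy arrays are hypothetical stand-ins, not diffusers code.

```python
import numpy as np


def combine_residuals(residuals, scales):
    """Scale each ControlNet residual by its conditioning scale and sum them.

    Hypothetical helper for illustration only; residuals must share one shape.
    """
    if len(residuals) != len(scales):
        raise ValueError("need exactly one scale per conditioning input")
    combined = np.zeros_like(residuals[0], dtype=np.float64)
    for residual, scale in zip(residuals, scales):
        combined += scale * residual
    return combined


# Toy residuals standing in for the pose and canny ControlNet outputs
pose_residual = np.ones((2, 2))
canny_residual = np.full((2, 2), 2.0)

# Every element is 1.0 * 1 + 0.5 * 2, so halving the canny scale halves its contribution
print(combine_residuals([pose_residual, canny_residual], [1.0, 0.5]))
```

In the pipeline itself you pass one scale per ControlNet, in the same order as the conditioning images (for example `controlnet_conditioning_scale=[1.0, 0.8]`).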

+

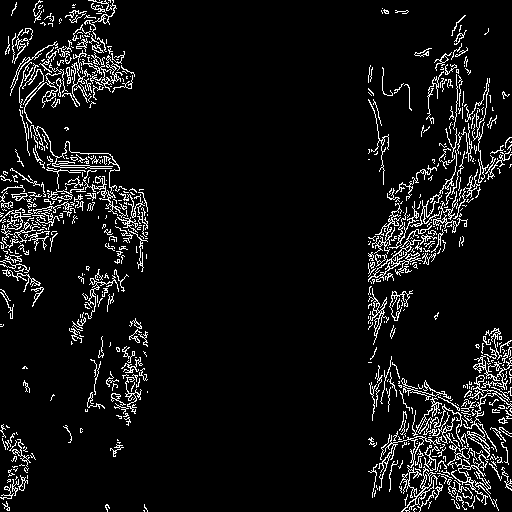

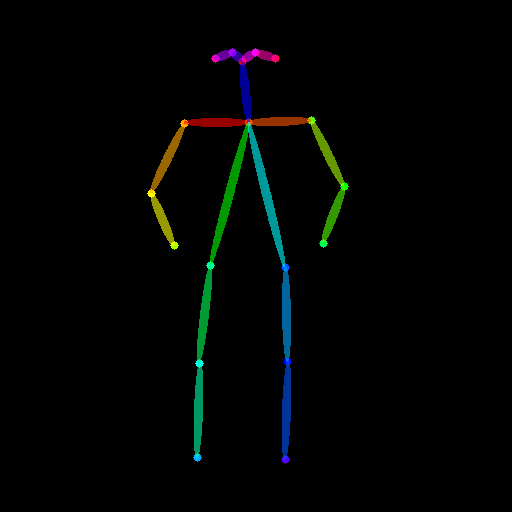

+In this example, you'll combine a canny image and a human pose estimation image to generate a new image.
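
Because the two conditionings should not overlap, a quick sanity check is to measure how many pixels are active in both images. This is a minimal NumPy sketch; `overlap_fraction` is a hypothetical helper, and the toy arrays assume the pose occupies the middle half of the columns, matching the masking used below.

```python
import numpy as np


def overlap_fraction(cond_a, cond_b):
    """Return the fraction of pixels that are non-zero in BOTH conditioning images.

    Hypothetical helper: accepts HxW or HxWxC arrays (or PIL images) with the
    same height and width.
    """
    a = np.asarray(cond_a)
    b = np.asarray(cond_b)
    if a.shape[:2] != b.shape[:2]:
        raise ValueError("conditioning images must have the same height and width")
    # A pixel counts as active if any of its channels is non-zero
    active_a = a.reshape(a.shape[0], a.shape[1], -1).any(axis=2)
    active_b = b.reshape(b.shape[0], b.shape[1], -1).any(axis=2)
    return float(np.logical_and(active_a, active_b).mean())


# Toy 4x8 conditionings: the pose occupies the middle half of the columns
height, width = 4, 8
middle = slice(width // 4, width // 4 + width // 2)

pose_like = np.zeros((height, width, 3), dtype=np.uint8)
pose_like[:, middle] = 255

canny_like = np.full((height, width), 255, dtype=np.uint8)
print(overlap_fraction(canny_like, pose_like))  # 0.5: unmasked edges cover the pose region

masked_canny = canny_like.copy()
masked_canny[:, middle] = 0
print(overlap_fraction(masked_canny, pose_like))  # 0.0: no overlap after masking
```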

+

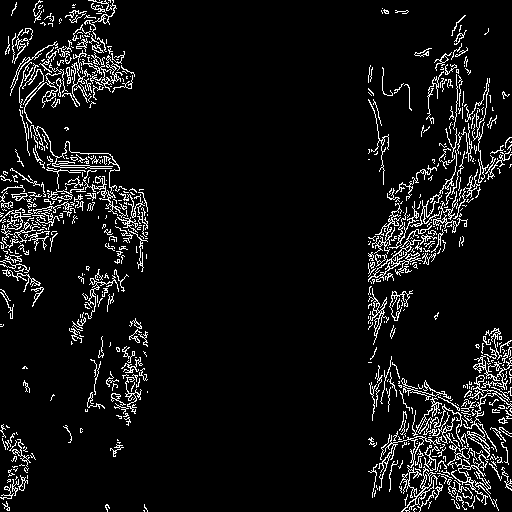

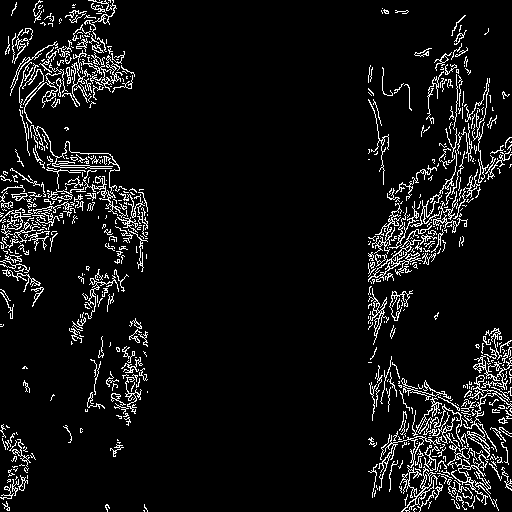

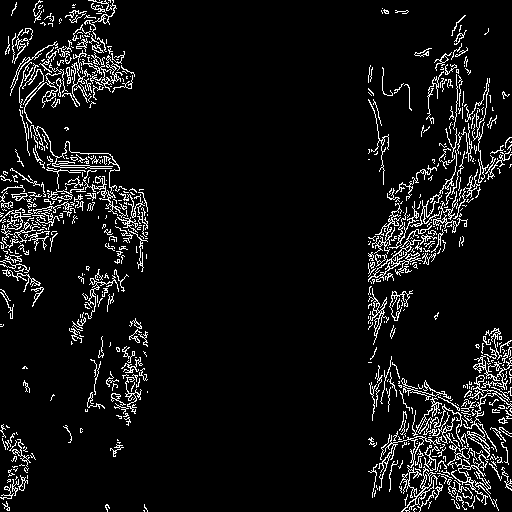

+Prepare the canny image conditioning:
+
+```py
+from diffusers.utils import load_image
+from PIL import Image
+import numpy as np
+import cv2
+
+canny_image = load_image(
+    "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/landscape.png"
+)
+canny_image = np.array(canny_image)
+
+# hysteresis thresholds for cv2.Canny
+low_threshold = 100
+high_threshold = 200
+
+canny_image = cv2.Canny(canny_image, low_threshold, high_threshold)
+
+# zero out the middle columns of the image where the pose will be overlaid
+zero_start = canny_image.shape[1] // 4
+zero_end = zero_start + canny_image.shape[1] // 2
+canny_image[:, zero_start:zero_end] = 0
+
+# replicate the single-channel edge map into 3 channels (RGB)
+canny_image = canny_image[:, :, None]
+canny_image = np.concatenate([canny_image, canny_image, canny_image], axis=2)
+canny_image = Image.fromarray(canny_image).resize((1024, 1024))
+```
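
To see exactly which columns the snippet above blanks out, here is the same masking and channel stacking applied to a small synthetic edge map. It is a NumPy-only sketch (no download and no `cv2` call), and `mask_middle_and_stack` is a hypothetical helper name, not part of diffusers.

```python
import numpy as np


def mask_middle_and_stack(edges):
    """Zero the middle half of the columns, then replicate the edge map to 3 channels.

    Hypothetical helper mirroring the masking and stacking steps above;
    `edges` is assumed to be a 2D uint8 array such as the output of cv2.Canny.
    """
    edges = edges.copy()  # leave the caller's array untouched
    zero_start = edges.shape[1] // 4
    zero_end = zero_start + edges.shape[1] // 2
    edges[:, zero_start:zero_end] = 0
    # (H, W) -> (H, W, 3); same result as concatenating three copies of edges[:, :, None] on axis 2
    return np.stack([edges, edges, edges], axis=2)


# Synthetic 4x8 edge map in which every pixel is an edge
edges = np.full((4, 8), 255, dtype=np.uint8)
rgb = mask_middle_and_stack(edges)

print(rgb.shape)  # (4, 8, 3)
print(rgb[0, :, 0])  # columns 2 through 5 are 0, the rest stay 255
```

For an image 1024 pixels wide, the same arithmetic blanks columns 256 through 767. Because the snippet masks before the final `.resize((1024, 1024))`, the band is computed from the source image's width.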

original image

+

+

+

+

canny image

+

+

+

+

+

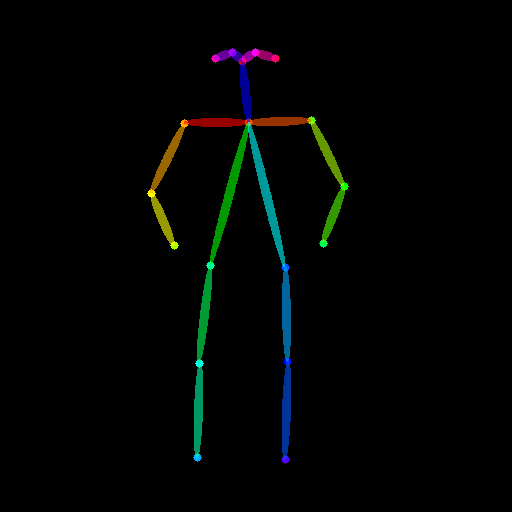

original image

+

+

+

+

human pose image

+

+

+

+

+

+  +

+  +

+  +
