diff --git a/docs/source/en/optimization/onnx.md b/docs/source/en/optimization/onnx.md

index 6f96ba0cc1..89ea435217 100644

--- a/docs/source/en/optimization/onnx.md

+++ b/docs/source/en/optimization/onnx.md

@@ -23,19 +23,20 @@ Install 🤗 Optimum with the following command for ONNX Runtime support:

pip install optimum["onnxruntime"]

```

-## Stable Diffusion Inference

+## Stable Diffusion

-To load an ONNX model and run inference with the ONNX Runtime, you need to replace [`StableDiffusionPipeline`] with `ORTStableDiffusionPipeline`. In case you want to load

-a PyTorch model and convert it to the ONNX format on-the-fly, you can set `export=True`.

+### Inference

+

+To load an ONNX model and run inference with ONNX Runtime, replace [`StableDiffusionPipeline`] with `ORTStableDiffusionPipeline`. To load a PyTorch model and convert it to the ONNX format on the fly, set `export=True`.

```python

from optimum.onnxruntime import ORTStableDiffusionPipeline

model_id = "runwayml/stable-diffusion-v1-5"

-pipe = ORTStableDiffusionPipeline.from_pretrained(model_id, export=True)

-prompt = "a photo of an astronaut riding a horse on mars"

-images = pipe(prompt).images[0]

-pipe.save_pretrained("./onnx-stable-diffusion-v1-5")

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id, export=True)

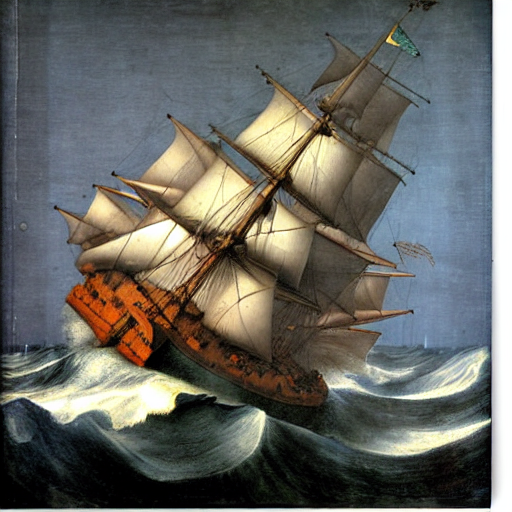

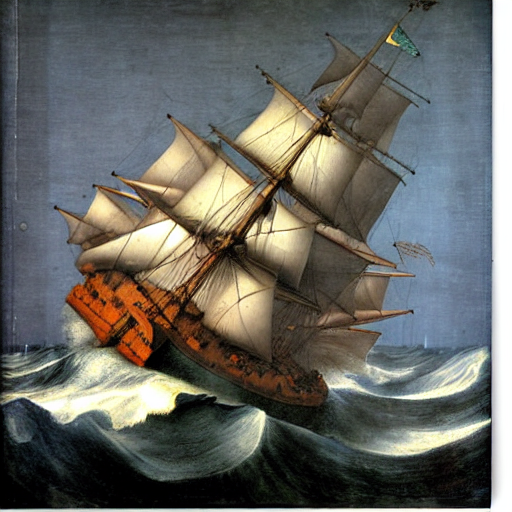

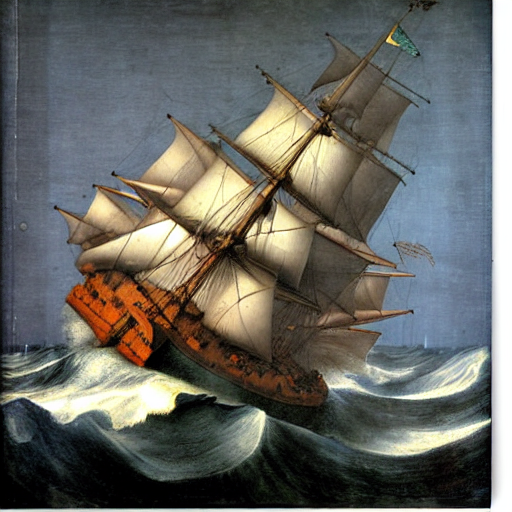

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

+pipeline.save_pretrained("./onnx-stable-diffusion-v1-5")

```

If you want to export the pipeline in the ONNX format offline and later use it for inference,

@@ -51,15 +52,57 @@ Then perform inference:

from optimum.onnxruntime import ORTStableDiffusionPipeline

model_id = "sd_v15_onnx"

-pipe = ORTStableDiffusionPipeline.from_pretrained(model_id)

-prompt = "a photo of an astronaut riding a horse on mars"

-images = pipe(prompt).images[0]

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id)

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

```

Notice that we didn't have to specify `export=True` above.

+
