diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index 53718f8d0e..2a9de8b3f2 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -206,6 +206,8 @@

title: InstructPix2Pix

- local: api/pipelines/kandinsky

title: Kandinsky

+ - local: api/pipelines/kandinsky_v22

+ title: Kandinsky 2.2

- local: api/pipelines/latent_diffusion

title: Latent Diffusion

- local: api/pipelines/panorama

diff --git a/docs/source/en/api/pipelines/kandinsky.mdx b/docs/source/en/api/pipelines/kandinsky.mdx

index 6b6c64a089..79c602b8bc 100644

--- a/docs/source/en/api/pipelines/kandinsky.mdx

+++ b/docs/source/en/api/pipelines/kandinsky.mdx

@@ -212,9 +212,9 @@ init_image = load_image(

"https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main" "/kandinsky/cat.png"

)

-mask = np.ones((768, 768), dtype=np.float32)

+mask = np.zeros((768, 768), dtype=np.float32)

# Let's mask out an area above the cat's head

-mask[:250, 250:-250] = 0

+mask[:250, 250:-250] = 1

out = pipe(

prompt,

@@ -276,208 +276,6 @@ image.save("starry_cat.png")

```

-

-### Text-to-Image Generation with ControlNet Conditioning

-

-In the following, we give a simple example of how to use [`KandinskyV22ControlnetPipeline`] to add control to the text-to-image generation with a depth image.

-

-First, let's take an image and extract its depth map.

-

-```python

-from diffusers.utils import load_image

-

-img = load_image(

- "https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main/kandinskyv22/cat.png"

-).resize((768, 768))

-```

-

-

-We can use the `depth-estimation` pipeline from transformers to process the image and retrieve its depth map.

-

-```python

-import torch

-import numpy as np

-

-from transformers import pipeline

-from diffusers.utils import load_image

-

-

-def make_hint(image, depth_estimator):

- image = depth_estimator(image)["depth"]

- image = np.array(image)

- image = image[:, :, None]

- image = np.concatenate([image, image, image], axis=2)

- detected_map = torch.from_numpy(image).float() / 255.0

- hint = detected_map.permute(2, 0, 1)

- return hint

-

-

-depth_estimator = pipeline("depth-estimation")

-hint = make_hint(img, depth_estimator).unsqueeze(0).half().to("cuda")

-```

-Now, we load the prior pipeline and the text-to-image controlnet pipeline

-

-```python

-from diffusers import KandinskyV22PriorPipeline, KandinskyV22ControlnetPipeline

-

-pipe_prior = KandinskyV22PriorPipeline.from_pretrained(

- "kandinsky-community/kandinsky-2-2-prior", torch_dtype=torch.float16

-)

-pipe_prior = pipe_prior.to("cuda")

-

-pipe = KandinskyV22ControlnetPipeline.from_pretrained(

- "kandinsky-community/kandinsky-2-2-controlnet-depth", torch_dtype=torch.float16

-)

-pipe = pipe.to("cuda")

-```

-

-We pass the prompt and negative prompt through the prior to generate image embeddings

-

-```python

-prompt = "A robot, 4k photo"

-

-negative_prior_prompt = "lowres, text, error, cropped, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, out of frame, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck, username, watermark, signature"

-

-generator = torch.Generator(device="cuda").manual_seed(43)

-image_emb, zero_image_emb = pipe_prior(

- prompt=prompt, negative_prompt=negative_prior_prompt, generator=generator

-).to_tuple()

-```

-

-Now we can pass the image embeddings and the depth image we extracted to the controlnet pipeline. With Kandinsky 2.2, only prior pipelines accept `prompt` input. You do not need to pass the prompt to the controlnet pipeline.

-

-```python

-images = pipe(

- image_embeds=image_emb,

- negative_image_embeds=zero_image_emb,

- hint=hint,

- num_inference_steps=50,

- generator=generator,

- height=768,

- width=768,

-).images

-

-images[0].save("robot_cat.png")

-```

-

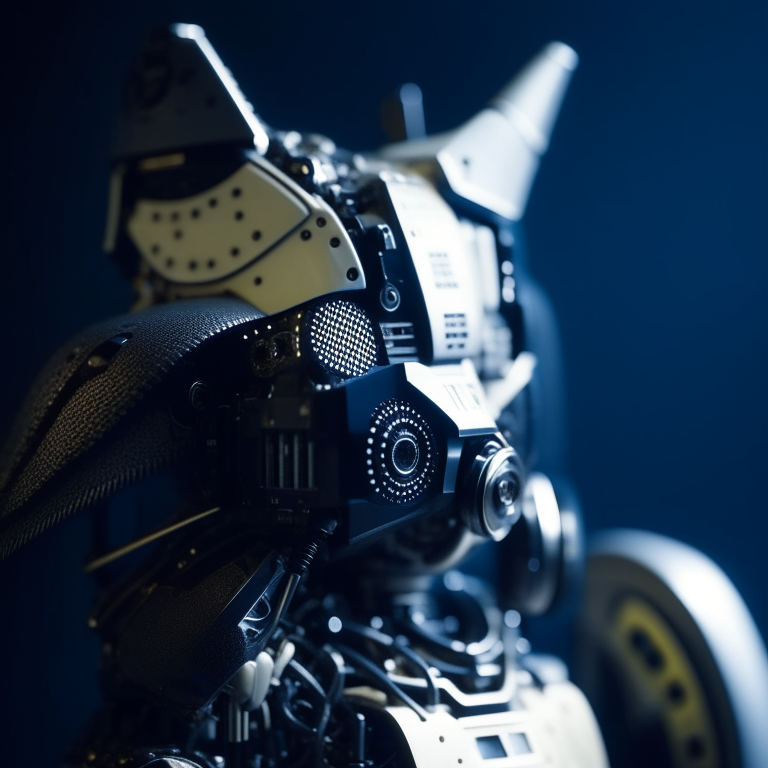

-The output image looks as follow:

-

-

-### Image-to-Image Generation with ControlNet Conditioning

-

-Kandinsky 2.2 also includes a [`KandinskyV22ControlnetImg2ImgPipeline`] that will allow you to add control to the image generation process with both the image and its depth map. This pipeline works really well with [`KandinskyV22PriorEmb2EmbPipeline`], which generates image embeddings based on both a text prompt and an image.

-

-For our robot cat example, we will pass the prompt and cat image together to the prior pipeline to generate an image embedding. We will then use that image embedding and the depth map of the cat to further control the image generation process.

-

-We can use the same cat image and its depth map from the last example.

-

-```python

-import torch

-import numpy as np

-

-from diffusers import KandinskyV22PriorEmb2EmbPipeline, KandinskyV22ControlnetImg2ImgPipeline

-from diffusers.utils import load_image

-from transformers import pipeline

-

-img = load_image(

- "https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main" "/kandinskyv22/cat.png"

-).resize((768, 768))

-

-

-def make_hint(image, depth_estimator):

- image = depth_estimator(image)["depth"]

- image = np.array(image)

- image = image[:, :, None]

- image = np.concatenate([image, image, image], axis=2)

- detected_map = torch.from_numpy(image).float() / 255.0

- hint = detected_map.permute(2, 0, 1)

- return hint

-

-

-depth_estimator = pipeline("depth-estimation")

-hint = make_hint(img, depth_estimator).unsqueeze(0).half().to("cuda")

-

-pipe_prior = KandinskyV22PriorEmb2EmbPipeline.from_pretrained(

- "kandinsky-community/kandinsky-2-2-prior", torch_dtype=torch.float16

-)

-pipe_prior = pipe_prior.to("cuda")

-

-pipe = KandinskyV22ControlnetImg2ImgPipeline.from_pretrained(

- "kandinsky-community/kandinsky-2-2-controlnet-depth", torch_dtype=torch.float16

-)

-pipe = pipe.to("cuda")

-

-prompt = "A robot, 4k photo"

-negative_prior_prompt = "lowres, text, error, cropped, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, out of frame, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck, username, watermark, signature"

-

-generator = torch.Generator(device="cuda").manual_seed(43)

-

-# run prior pipeline

-

-img_emb = pipe_prior(prompt=prompt, image=img, strength=0.85, generator=generator)

-negative_emb = pipe_prior(prompt=negative_prior_prompt, image=img, strength=1, generator=generator)

-

-# run controlnet img2img pipeline

-images = pipe(

- image=img,

- strength=0.5,

- image_embeds=img_emb.image_embeds,

- negative_image_embeds=negative_emb.image_embeds,

- hint=hint,

- num_inference_steps=50,

- generator=generator,

- height=768,

- width=768,

-).images

-

-images[0].save("robot_cat.png")

-```

-

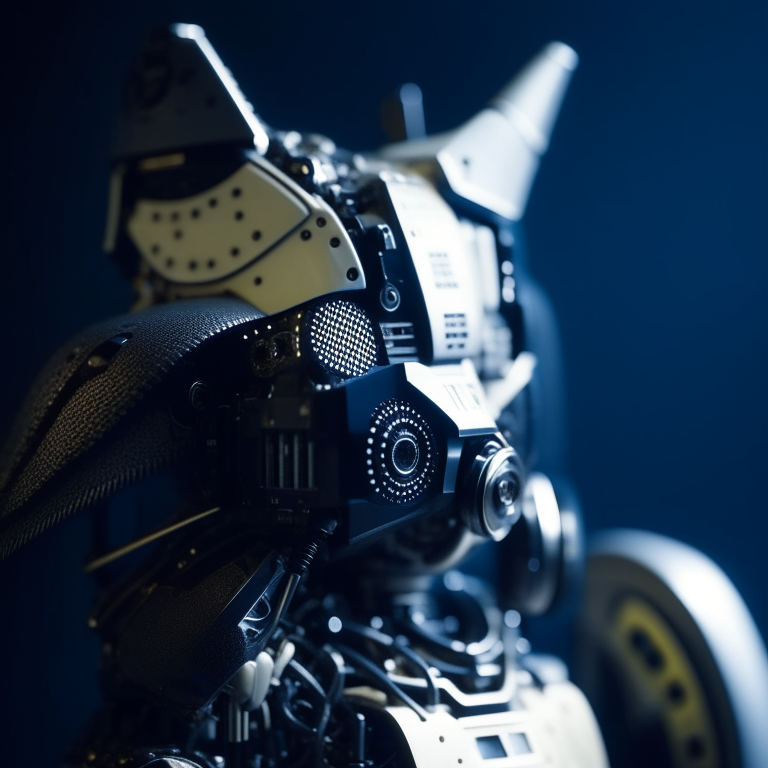

-Here is the output. Compared with the output from our text-to-image controlnet example, it kept a lot more cat facial details from the original image and worked into the robot style we asked for.

-

-

-

-## Kandinsky 2.2

-

-The Kandinsky 2.2 release includes robust new text-to-image models that support text-to-image generation, image-to-image generation, image interpolation, and text-guided image inpainting. The general workflow to perform these tasks using Kandinsky 2.2 is the same as in Kandinsky 2.1. First, you will need to use a prior pipeline to generate image embeddings based on your text prompt, and then use one of the image decoding pipelines to generate the output image. The only difference is that in Kandinsky 2.2, all of the decoding pipelines no longer accept the `prompt` input, and the image generation process is conditioned with only `image_embeds` and `negative_image_embeds`.

-

-Let's look at an example of how to perform text-to-image generation using Kandinsky 2.2.

-

-First, let's create the prior pipeline and text-to-image pipeline with Kandinsky 2.2 checkpoints.

-

-```python

-from diffusers import DiffusionPipeline

-import torch

-

-pipe_prior = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-2-prior", torch_dtype=torch.float16)

-pipe_prior.to("cuda")

-

-t2i_pipe = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", torch_dtype=torch.float16)

-t2i_pipe.to("cuda")

-```

-

-You can then use `pipe_prior` to generate image embeddings.

-

-```python

-prompt = "portrait of a women, blue eyes, cinematic"

-negative_prompt = "low quality, bad quality"

-

-image_embeds, negative_image_embeds = pipe_prior(prompt, guidance_scale=1.0).to_tuple()

-```

-

-Now you can pass these embeddings to the text-to-image pipeline. When using Kandinsky 2.2 you don't need to pass the `prompt` (but you do with the previous version, Kandinsky 2.1).

-

-```

-image = t2i_pipe(image_embeds=image_embeds, negative_image_embeds=negative_image_embeds, height=768, width=768).images[

- 0

-]

-image.save("portrait.png")

-```

-

-

-We used the text-to-image pipeline as an example, but the same process applies to all decoding pipelines in Kandinsky 2.2. For more information, please refer to our API section for each pipeline.

-

-

## Optimization

Running Kandinsky in inference requires running both a first prior pipeline: [`KandinskyPriorPipeline`]

@@ -530,64 +328,24 @@ t2i_pipe.unet = torch.compile(t2i_pipe.unet, mode="reduce-overhead", fullgraph=T

After compilation you should see a very fast inference time. For more information,

feel free to have a look at [Our PyTorch 2.0 benchmark](https://huggingface.co/docs/diffusers/main/en/optimization/torch2.0).

+

+

+To generate images with a single pipeline, you can use [`KandinskyCombinedPipeline`], [`KandinskyImg2ImgCombinedPipeline`], or [`KandinskyInpaintCombinedPipeline`].

+Each combined pipeline wraps the [`KandinskyPriorPipeline`] together with [`KandinskyPipeline`], [`KandinskyImg2ImgPipeline`], or [`KandinskyInpaintPipeline`], respectively, into a single

+pipeline for a simpler user experience.

+

+

+

## Available Pipelines:

| Pipeline | Tasks |

|---|---|

-| [pipeline_kandinsky2_2.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2.py) | *Text-to-Image Generation* |

| [pipeline_kandinsky.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky/pipeline_kandinsky.py) | *Text-to-Image Generation* |

-| [pipeline_kandinsky2_2_inpaint.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_inpaint.py) | *Image-Guided Image Generation* |

+| [pipeline_kandinsky_combined.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky/pipeline_kandinsky_combined.py) | *End-to-end Text-to-Image, Image-to-Image, Inpainting Generation* |

| [pipeline_kandinsky_inpaint.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky/pipeline_kandinsky_inpaint.py) | *Image-Guided Image Generation* |

-| [pipeline_kandinsky2_2_img2img.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_img2img.py) | *Image-Guided Image Generation* |

| [pipeline_kandinsky_img2img.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky/pipeline_kandinsky_img2img.py) | *Image-Guided Image Generation* |

-| [pipeline_kandinsky2_2_controlnet.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_controlnet.py) | *Image-Guided Image Generation* |

-| [pipeline_kandinsky2_2_controlnet_img2img.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_controlnet_img2img.py) | *Image-Guided Image Generation* |

-### KandinskyV22Pipeline

-

-[[autodoc]] KandinskyV22Pipeline

- - all

- - __call__

-

-### KandinskyV22ControlnetPipeline

-

-[[autodoc]] KandinskyV22ControlnetPipeline

- - all

- - __call__

-

-### KandinskyV22ControlnetImg2ImgPipeline

-

-[[autodoc]] KandinskyV22ControlnetImg2ImgPipeline

- - all

- - __call__

-

-### KandinskyV22Img2ImgPipeline

-

-[[autodoc]] KandinskyV22Img2ImgPipeline

- - all

- - __call__

-

-### KandinskyV22InpaintPipeline

-

-[[autodoc]] KandinskyV22InpaintPipeline

- - all

- - __call__

-

-### KandinskyV22PriorPipeline

-

-[[autodoc]] ## KandinskyV22PriorPipeline

- - all

- - __call__

- - interpolate

-

-### KandinskyV22PriorEmb2EmbPipeline

-

-[[autodoc]] KandinskyV22PriorEmb2EmbPipeline

- - all

- - __call__

- - interpolate

-

### KandinskyPriorPipeline

[[autodoc]] KandinskyPriorPipeline

@@ -612,3 +370,21 @@ feel free to have a look at [Our PyTorch 2.0 benchmark](https://huggingface.co/d

[[autodoc]] KandinskyInpaintPipeline

- all

- __call__

+

+### KandinskyCombinedPipeline

+

+[[autodoc]] KandinskyCombinedPipeline

+ - all

+ - __call__

+

+### KandinskyImg2ImgCombinedPipeline

+

+[[autodoc]] KandinskyImg2ImgCombinedPipeline

+ - all

+ - __call__

+

+### KandinskyInpaintCombinedPipeline

+

+[[autodoc]] KandinskyInpaintCombinedPipeline

+ - all

+ - __call__

diff --git a/docs/source/en/api/pipelines/kandinsky_v22.mdx b/docs/source/en/api/pipelines/kandinsky_v22.mdx

new file mode 100644

index 0000000000..074bc5b8d6

--- /dev/null

+++ b/docs/source/en/api/pipelines/kandinsky_v22.mdx

@@ -0,0 +1,342 @@

+

+

+# Kandinsky 2.2

+

+The Kandinsky 2.2 release includes robust new text-to-image models that support text-to-image generation, image-to-image generation, image interpolation, and text-guided image inpainting. The general workflow for these tasks is the same as in Kandinsky 2.1: first, use a prior pipeline to generate image embeddings from your text prompt, and then use one of the image decoding pipelines to generate the output image. The only difference is that in Kandinsky 2.2, none of the decoding pipelines accept a `prompt` input; image generation is conditioned only on `image_embeds` and `negative_image_embeds`.

+

+Let's look at an example of how to perform text-to-image generation using Kandinsky 2.2.

+

+First, let's create the prior pipeline and text-to-image pipeline with Kandinsky 2.2 checkpoints.

+

+```python

+from diffusers import DiffusionPipeline

+import torch

+

+pipe_prior = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-2-prior", torch_dtype=torch.float16)

+pipe_prior.to("cuda")

+

+t2i_pipe = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", torch_dtype=torch.float16)

+t2i_pipe.to("cuda")

+```

+

+You can then use `pipe_prior` to generate image embeddings.

+

+```python

+prompt = "portrait of a woman, blue eyes, cinematic"

+negative_prompt = "low quality, bad quality"

+

+image_embeds, negative_image_embeds = pipe_prior(prompt, negative_prompt, guidance_scale=1.0).to_tuple()

+```

+

+Now you can pass these embeddings to the text-to-image pipeline. With Kandinsky 2.2, you don't need to pass the `prompt` (unlike the previous version, Kandinsky 2.1).

+

+```python

+image = t2i_pipe(image_embeds=image_embeds, negative_image_embeds=negative_image_embeds, height=768, width=768).images[0]

+image.save("portrait.png")

+```

+

+

+We used the text-to-image pipeline as an example, but the same process applies to all decoding pipelines in Kandinsky 2.2. For more information, please refer to our API section for each pipeline.

+

+### Text-to-Image Generation with ControlNet Conditioning

+

+The following example shows how to use [`KandinskyV22ControlnetPipeline`] to add control to text-to-image generation with a depth image.

+

+First, let's take an image and extract its depth map.

+

+```python

+from diffusers.utils import load_image

+

+img = load_image(

+ "https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main/kandinskyv22/cat.png"

+).resize((768, 768))

+```

+

+

+We can use the `depth-estimation` pipeline from transformers to process the image and retrieve its depth map.

+

+```python

+import torch

+import numpy as np

+

+from transformers import pipeline

+from diffusers.utils import load_image

+

+

+def make_hint(image, depth_estimator):

+ image = depth_estimator(image)["depth"]

+ image = np.array(image)

+ image = image[:, :, None]

+ image = np.concatenate([image, image, image], axis=2)

+ detected_map = torch.from_numpy(image).float() / 255.0

+ hint = detected_map.permute(2, 0, 1)

+ return hint

+

+

+depth_estimator = pipeline("depth-estimation")

+hint = make_hint(img, depth_estimator).unsqueeze(0).half().to("cuda")

+```
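In the example above, `hint` is a batch of 3-channel images with values in [0, 1], which `make_hint` builds by repeating the single-channel depth map across channels. If you already have a depth map from another source, the NumPy-only sketch below reproduces the same layout without running a depth model. The helper name `depth_to_hint` is hypothetical, and it assumes an 8-bit single-channel depth map; convert its output with `torch.from_numpy(...).half().to("cuda")` before passing it as `hint`.

```python
import numpy as np


def depth_to_hint(depth: np.ndarray) -> np.ndarray:
    """Hypothetical helper: turn an 8-bit (H, W) depth map into a (1, 3, H, W) float32 array in [0, 1]."""
    depth = depth.astype(np.float32) / 255.0        # scale 0-255 to 0-1
    hint = np.repeat(depth[None, :, :], 3, axis=0)   # (3, H, W): same depth map on every channel
    return hint[None]                                # add a batch dimension -> (1, 3, H, W)


# Synthetic left-to-right gradient standing in for a real depth map, shape (64, 256)
fake_depth = np.tile(np.arange(256, dtype=np.uint8), (64, 1))
hint = depth_to_hint(fake_depth)
print(hint.shape)  # (1, 3, 64, 256)
```

Keeping the channel-first, batched layout means the array can be swapped in for the output of `make_hint` without further reshaping.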

+Next, load the prior pipeline and the text-to-image ControlNet pipeline:

+

+```python

+from diffusers import KandinskyV22PriorPipeline, KandinskyV22ControlnetPipeline

+

+pipe_prior = KandinskyV22PriorPipeline.from_pretrained(

+ "kandinsky-community/kandinsky-2-2-prior", torch_dtype=torch.float16

+)

+pipe_prior = pipe_prior.to("cuda")

+

+pipe = KandinskyV22ControlnetPipeline.from_pretrained(

+ "kandinsky-community/kandinsky-2-2-controlnet-depth", torch_dtype=torch.float16

+)

+pipe = pipe.to("cuda")

+```

+

+Pass the prompt and negative prompt through the prior to generate image embeddings:

+

+```python

+prompt = "A robot, 4k photo"

+

+negative_prior_prompt = "lowres, text, error, cropped, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, out of frame, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck, username, watermark, signature"

+

+generator = torch.Generator(device="cuda").manual_seed(43)

+image_emb, zero_image_emb = pipe_prior(

+ prompt=prompt, negative_prompt=negative_prior_prompt, generator=generator

+).to_tuple()

+```

+

+Now pass the image embeddings and the extracted depth map to the ControlNet pipeline. With Kandinsky 2.2, only the prior pipelines accept a `prompt` input, so you do not need to pass the prompt to the ControlNet pipeline.

+

+```python

+images = pipe(

+ image_embeds=image_emb,

+ negative_image_embeds=zero_image_emb,

+ hint=hint,

+ num_inference_steps=50,

+ generator=generator,

+ height=768,

+ width=768,

+).images

+

+images[0].save("robot_cat.png")

+```

+

+The output image looks as follows:

+

+

+### Image-to-Image Generation with ControlNet Conditioning

+

+Kandinsky 2.2 also includes a [`KandinskyV22ControlnetImg2ImgPipeline`] that lets you control image generation with both the image and its depth map. This pipeline works well with [`KandinskyV22PriorEmb2EmbPipeline`], which generates image embeddings based on both a text prompt and an image.

+

+For our robot cat example, we will pass the prompt and cat image together to the prior pipeline to generate an image embedding. We will then use that image embedding and the depth map of the cat to further control the image generation process.

+

+The code below recomputes the same cat image and depth map from the previous example so that it runs on its own.

+

+```python

+import torch

+import numpy as np

+

+from diffusers import KandinskyV22PriorEmb2EmbPipeline, KandinskyV22ControlnetImg2ImgPipeline

+from diffusers.utils import load_image

+from transformers import pipeline

+

+img = load_image(

+ "https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main" "/kandinskyv22/cat.png"

+).resize((768, 768))

+

+

+def make_hint(image, depth_estimator):

+ image = depth_estimator(image)["depth"]

+ image = np.array(image)

+ image = image[:, :, None]

+ image = np.concatenate([image, image, image], axis=2)

+ detected_map = torch.from_numpy(image).float() / 255.0

+ hint = detected_map.permute(2, 0, 1)

+ return hint

+

+

+depth_estimator = pipeline("depth-estimation")

+hint = make_hint(img, depth_estimator).unsqueeze(0).half().to("cuda")

+

+pipe_prior = KandinskyV22PriorEmb2EmbPipeline.from_pretrained(

+ "kandinsky-community/kandinsky-2-2-prior", torch_dtype=torch.float16

+)

+pipe_prior = pipe_prior.to("cuda")

+

+pipe = KandinskyV22ControlnetImg2ImgPipeline.from_pretrained(

+ "kandinsky-community/kandinsky-2-2-controlnet-depth", torch_dtype=torch.float16

+)

+pipe = pipe.to("cuda")

+

+prompt = "A robot, 4k photo"

+negative_prior_prompt = "lowres, text, error, cropped, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, out of frame, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck, username, watermark, signature"

+

+generator = torch.Generator(device="cuda").manual_seed(43)

+

+# run prior pipeline

+

+img_emb = pipe_prior(prompt=prompt, image=img, strength=0.85, generator=generator)

+negative_emb = pipe_prior(prompt=negative_prior_prompt, image=img, strength=1, generator=generator)

+

+# run controlnet img2img pipeline

+images = pipe(

+ image=img,

+ strength=0.5,

+ image_embeds=img_emb.image_embeds,

+ negative_image_embeds=negative_emb.image_embeds,

+ hint=hint,

+ num_inference_steps=50,

+ generator=generator,

+ height=768,

+ width=768,

+).images

+

+images[0].save("robot_cat.png")

+```

+

+Compared with the output of the text-to-image ControlNet example, this output keeps many more of the cat's facial details from the original image while still adopting the requested robot style.
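In the img2img call above, `strength=0.5` decides how much of the denoising schedule is actually run, and therefore how much of the original image is preserved. The sketch below reproduces the arithmetic of the `get_timesteps` helper used by diffusers img2img-style pipelines, as we understand it and assuming a first-order scheduler; `denoising_steps` is a hypothetical name, not part of the library.

```python
def denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Hypothetical helper: number of denoising steps an img2img-style pipeline runs."""
    # Only the last `strength` fraction of the schedule is used.
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    # Index of the first timestep that is actually run.
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start


print(denoising_steps(50, 0.5))  # 25
print(denoising_steps(50, 1.0))  # 50
```

With `num_inference_steps=50` and `strength=0.5`, only the last 25 steps run, starting from a noised version of the input image; `strength=1.0` runs the full schedule and largely discards the content of the input image.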

+

+

+

+## Optimization

+

+Running Kandinsky 2.2 in inference requires running both a prior pipeline, [`KandinskyV22PriorPipeline`],

+and an image decoding pipeline, which is one of [`KandinskyV22Pipeline`], [`KandinskyV22Img2ImgPipeline`], or [`KandinskyV22InpaintPipeline`].

+

+The bulk of the computation time is spent in the image decoding pipeline, so when optimizing the model,

+focus on the decoding pipeline.

+

+When running with PyTorch < 2.0, we strongly recommend using [`xformers`](https://github.com/facebookresearch/xformers)

+to speed up inference. You can enable it with:

+

+```py

+from diffusers import DiffusionPipeline

+import torch

+

+t2i_pipe = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", torch_dtype=torch.float16)

+t2i_pipe.enable_xformers_memory_efficient_attention()

+```

+

+When running on PyTorch >= 2.0, PyTorch's SDPA attention will automatically be used. For more information on

+PyTorch's SDPA, feel free to have a look at [this blog post](https://pytorch.org/blog/accelerated-diffusers-pt-20/).

+

+To have explicit control, you can also manually set the pipeline to use PyTorch 2.0's efficient attention:

+

+```py

+from diffusers.models.attention_processor import AttnAddedKVProcessor2_0

+

+t2i_pipe.unet.set_attn_processor(AttnAddedKVProcessor2_0())

+```

+

+The slowest and most memory-intensive attention processor is the default `AttnAddedKVProcessor` processor.

+We do **not** recommend using it except for testing purposes or cases where highly deterministic behavior is desired.

+You can set it with:

+

+```py

+from diffusers.models.attention_processor import AttnAddedKVProcessor

+

+t2i_pipe.unet.set_attn_processor(AttnAddedKVProcessor())

+```

+

+With PyTorch >= 2.0, you can also use Kandinsky with `torch.compile`, which, depending

+on your hardware, can significantly speed up inference once the model is compiled.

+To use Kandinsky with `torch.compile`, you can do:

+

+```py

+t2i_pipe.unet.to(memory_format=torch.channels_last)

+t2i_pipe.unet = torch.compile(t2i_pipe.unet, mode="reduce-overhead", fullgraph=True)

+```

+

+After compilation, you should see much faster inference. For more information,

+feel free to have a look at [Our PyTorch 2.0 benchmark](https://huggingface.co/docs/diffusers/main/en/optimization/torch2.0).

+

+

+

+To generate images with a single pipeline, you can use [`KandinskyV22CombinedPipeline`], [`KandinskyV22Img2ImgCombinedPipeline`], or [`KandinskyV22InpaintCombinedPipeline`].

+Each combined pipeline wraps the [`KandinskyV22PriorPipeline`] together with [`KandinskyV22Pipeline`], [`KandinskyV22Img2ImgPipeline`], or [`KandinskyV22InpaintPipeline`], respectively, into a single

+pipeline for a simpler user experience.
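Conceptually, a combined pipeline chains the two stages used throughout this page: the prior turns the prompt into `image_embeds` and `negative_image_embeds`, and the decoder turns those embeddings into an image. The sketch below illustrates only that data flow; `CombinedSketch`, `fake_prior`, and `fake_decoder` are hypothetical stand-ins that pass strings around, not the diffusers implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical signatures: real pipelines work on tensors and images, these use strings.
PriorFn = Callable[[str, str], Tuple[str, str]]
DecoderFn = Callable[[str, str], List[str]]


@dataclass
class CombinedSketch:
    """Hypothetical two-stage wrapper: text -> image embeddings -> image."""

    prior: PriorFn
    decoder: DecoderFn

    def __call__(self, prompt: str, negative_prompt: str = "") -> List[str]:
        # Stage 1: only the prior ever sees the text prompt.
        image_embeds, negative_image_embeds = self.prior(prompt, negative_prompt)
        # Stage 2: the decoder is conditioned on the embeddings alone.
        return self.decoder(image_embeds, negative_image_embeds)


def fake_prior(prompt: str, negative_prompt: str) -> Tuple[str, str]:
    return f"emb({prompt})", f"emb({negative_prompt})"


def fake_decoder(image_embeds: str, negative_image_embeds: str) -> List[str]:
    return [f"image<{image_embeds}|{negative_image_embeds}>"]


pipe = CombinedSketch(prior=fake_prior, decoder=fake_decoder)
print(pipe("a robot cat", "low quality"))  # ['image<emb(a robot cat)|emb(low quality)>']
```

This mirrors why the combined pipelines accept a `prompt` even though the Kandinsky 2.2 decoders do not: the prompt is consumed by the wrapped prior.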

+

+

+

+## Available Pipelines:

+

+| Pipeline | Tasks |

+|---|---|

+| [pipeline_kandinsky2_2.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2.py) | *Text-to-Image Generation* |

+| [pipeline_kandinsky2_2_combined.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_combined.py) | *End-to-end Text-to-Image, image-to-image, Inpainting Generation* |

+| [pipeline_kandinsky2_2_inpaint.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_inpaint.py) | *Image-Guided Image Generation* |

+| [pipeline_kandinsky2_2_img2img.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_img2img.py) | *Image-Guided Image Generation* |

+| [pipeline_kandinsky2_2_controlnet.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_controlnet.py) | *Image-Guided Image Generation* |

+| [pipeline_kandinsky2_2_controlnet_img2img.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/kandinsky2_2/pipeline_kandinsky2_2_controlnet_img2img.py) | *Image-Guided Image Generation* |

+

+

+### KandinskyV22Pipeline

+

+[[autodoc]] KandinskyV22Pipeline

+ - all

+ - __call__

+

+### KandinskyV22ControlnetPipeline

+

+[[autodoc]] KandinskyV22ControlnetPipeline

+ - all

+ - __call__

+

+### KandinskyV22ControlnetImg2ImgPipeline

+

+[[autodoc]] KandinskyV22ControlnetImg2ImgPipeline

+ - all

+ - __call__

+

+### KandinskyV22Img2ImgPipeline

+

+[[autodoc]] KandinskyV22Img2ImgPipeline

+ - all

+ - __call__

+

+### KandinskyV22InpaintPipeline

+

+[[autodoc]] KandinskyV22InpaintPipeline

+ - all

+ - __call__

+

+### KandinskyV22PriorPipeline

+

+[[autodoc]] KandinskyV22PriorPipeline

+ - all

+ - __call__

+ - interpolate

+

+### KandinskyV22PriorEmb2EmbPipeline

+

+[[autodoc]] KandinskyV22PriorEmb2EmbPipeline

+ - all

+ - __call__

+ - interpolate

+

+### KandinskyV22CombinedPipeline

+

+[[autodoc]] KandinskyV22CombinedPipeline

+ - all

+ - __call__

+

+### KandinskyV22Img2ImgCombinedPipeline

+

+[[autodoc]] KandinskyV22Img2ImgCombinedPipeline

+ - all

+ - __call__

+

+### KandinskyV22InpaintCombinedPipeline

+

+[[autodoc]] KandinskyV22InpaintCombinedPipeline

+ - all

+ - __call__

diff --git a/src/diffusers/__init__.py b/src/diffusers/__init__.py

index 149744b01d..2ccc9a8bc2 100644

--- a/src/diffusers/__init__.py

+++ b/src/diffusers/__init__.py

@@ -141,13 +141,19 @@ else:

IFPipeline,

IFSuperResolutionPipeline,

ImageTextPipelineOutput,

+ KandinskyCombinedPipeline,

+ KandinskyImg2ImgCombinedPipeline,

KandinskyImg2ImgPipeline,

+ KandinskyInpaintCombinedPipeline,

KandinskyInpaintPipeline,

KandinskyPipeline,

KandinskyPriorPipeline,

+ KandinskyV22CombinedPipeline,

KandinskyV22ControlnetImg2ImgPipeline,

KandinskyV22ControlnetPipeline,

+ KandinskyV22Img2ImgCombinedPipeline,

KandinskyV22Img2ImgPipeline,

+ KandinskyV22InpaintCombinedPipeline,

KandinskyV22InpaintPipeline,

KandinskyV22Pipeline,

KandinskyV22PriorEmb2EmbPipeline,

diff --git a/src/diffusers/pipelines/__init__.py b/src/diffusers/pipelines/__init__.py

index 896a9b8c91..a22da63731 100644

--- a/src/diffusers/pipelines/__init__.py

+++ b/src/diffusers/pipelines/__init__.py

@@ -61,15 +61,21 @@ else:

IFSuperResolutionPipeline,

)

from .kandinsky import (

+ KandinskyCombinedPipeline,

+ KandinskyImg2ImgCombinedPipeline,

KandinskyImg2ImgPipeline,

+ KandinskyInpaintCombinedPipeline,

KandinskyInpaintPipeline,

KandinskyPipeline,

KandinskyPriorPipeline,

)

from .kandinsky2_2 import (

+ KandinskyV22CombinedPipeline,

KandinskyV22ControlnetImg2ImgPipeline,

KandinskyV22ControlnetPipeline,

+ KandinskyV22Img2ImgCombinedPipeline,

KandinskyV22Img2ImgPipeline,

+ KandinskyV22InpaintCombinedPipeline,

KandinskyV22InpaintPipeline,

KandinskyV22Pipeline,

KandinskyV22PriorEmb2EmbPipeline,

diff --git a/src/diffusers/pipelines/auto_pipeline.py b/src/diffusers/pipelines/auto_pipeline.py

index c827231ada..66d306720a 100644

--- a/src/diffusers/pipelines/auto_pipeline.py

+++ b/src/diffusers/pipelines/auto_pipeline.py

@@ -24,8 +24,22 @@ from .controlnet import (

StableDiffusionXLControlNetPipeline,

)

from .deepfloyd_if import IFImg2ImgPipeline, IFInpaintingPipeline, IFPipeline

-from .kandinsky import KandinskyImg2ImgPipeline, KandinskyInpaintPipeline, KandinskyPipeline

-from .kandinsky2_2 import KandinskyV22Img2ImgPipeline, KandinskyV22InpaintPipeline, KandinskyV22Pipeline

+from .kandinsky import (

+ KandinskyCombinedPipeline,

+ KandinskyImg2ImgCombinedPipeline,

+ KandinskyImg2ImgPipeline,

+ KandinskyInpaintCombinedPipeline,

+ KandinskyInpaintPipeline,

+ KandinskyPipeline,

+)

+from .kandinsky2_2 import (

+ KandinskyV22CombinedPipeline,

+ KandinskyV22Img2ImgCombinedPipeline,

+ KandinskyV22Img2ImgPipeline,

+ KandinskyV22InpaintCombinedPipeline,

+ KandinskyV22InpaintPipeline,

+ KandinskyV22Pipeline,

+)

from .stable_diffusion import (

StableDiffusionImg2ImgPipeline,

StableDiffusionInpaintPipeline,

@@ -43,8 +57,8 @@ AUTO_TEXT2IMAGE_PIPELINES_MAPPING = OrderedDict(

("stable-diffusion", StableDiffusionPipeline),

("stable-diffusion-xl", StableDiffusionXLPipeline),

("if", IFPipeline),

- ("kandinsky", KandinskyPipeline),

- ("kandinsky22", KandinskyV22Pipeline),

+ ("kandinsky", KandinskyCombinedPipeline),

+ ("kandinsky22", KandinskyV22CombinedPipeline),

("stable-diffusion-controlnet", StableDiffusionControlNetPipeline),

("stable-diffusion-xl-controlnet", StableDiffusionXLControlNetPipeline),

]

@@ -55,8 +69,8 @@ AUTO_IMAGE2IMAGE_PIPELINES_MAPPING = OrderedDict(

("stable-diffusion", StableDiffusionImg2ImgPipeline),

("stable-diffusion-xl", StableDiffusionXLImg2ImgPipeline),

("if", IFImg2ImgPipeline),

- ("kandinsky", KandinskyImg2ImgPipeline),

- ("kandinsky22", KandinskyV22Img2ImgPipeline),

+ ("kandinsky", KandinskyImg2ImgCombinedPipeline),

+ ("kandinsky22", KandinskyV22Img2ImgCombinedPipeline),

("stable-diffusion-controlnet", StableDiffusionControlNetImg2ImgPipeline),

]

)

@@ -66,9 +80,28 @@ AUTO_INPAINT_PIPELINES_MAPPING = OrderedDict(

("stable-diffusion", StableDiffusionInpaintPipeline),

("stable-diffusion-xl", StableDiffusionXLInpaintPipeline),

("if", IFInpaintingPipeline),

+ ("kandinsky", KandinskyInpaintCombinedPipeline),

+ ("kandinsky22", KandinskyV22InpaintCombinedPipeline),

+ ("stable-diffusion-controlnet", StableDiffusionControlNetInpaintPipeline),

+ ]

+)

+

+_AUTO_TEXT2IMAGE_DECODER_PIPELINES_MAPPING = OrderedDict(

+ [

+ ("kandinsky", KandinskyPipeline),

+ ("kandinsky22", KandinskyV22Pipeline),

+ ]

+)

+_AUTO_IMAGE2IMAGE_DECODER_PIPELINES_MAPPING = OrderedDict(

+ [

+ ("kandinsky", KandinskyImg2ImgPipeline),

+ ("kandinsky22", KandinskyV22Img2ImgPipeline),

+ ]

+)

+_AUTO_INPAINT_DECODER_PIPELINES_MAPPING = OrderedDict(

+ [

("kandinsky", KandinskyInpaintPipeline),

("kandinsky22", KandinskyV22InpaintPipeline),

- ("stable-diffusion-controlnet", StableDiffusionControlNetInpaintPipeline),

]

)

@@ -76,10 +109,27 @@ SUPPORTED_TASKS_MAPPINGS = [

AUTO_TEXT2IMAGE_PIPELINES_MAPPING,

AUTO_IMAGE2IMAGE_PIPELINES_MAPPING,

AUTO_INPAINT_PIPELINES_MAPPING,

+ _AUTO_TEXT2IMAGE_DECODER_PIPELINES_MAPPING,

+ _AUTO_IMAGE2IMAGE_DECODER_PIPELINES_MAPPING,

+ _AUTO_INPAINT_DECODER_PIPELINES_MAPPING,

]

-def _get_task_class(mapping, pipeline_class_name):

+def _get_connected_pipeline(pipeline_cls):

+ # for now connected pipelines can only be loaded from decoder pipelines, such as kandinsky-community/kandinsky-2-2-decoder

+ if pipeline_cls in _AUTO_TEXT2IMAGE_DECODER_PIPELINES_MAPPING.values():

+ return _get_task_class(

+ AUTO_TEXT2IMAGE_PIPELINES_MAPPING, pipeline_cls.__name__, throw_error_if_not_exist=False

+ )

+ if pipeline_cls in _AUTO_IMAGE2IMAGE_DECODER_PIPELINES_MAPPING.values():

+ return _get_task_class(

+ AUTO_IMAGE2IMAGE_PIPELINES_MAPPING, pipeline_cls.__name__, throw_error_if_not_exist=False

+ )

+ if pipeline_cls in _AUTO_INPAINT_DECODER_PIPELINES_MAPPING.values():

+ return _get_task_class(AUTO_INPAINT_PIPELINES_MAPPING, pipeline_cls.__name__, throw_error_if_not_exist=False)

+

+

+def _get_task_class(mapping, pipeline_class_name, throw_error_if_not_exist: bool = True):

def get_model(pipeline_class_name):

for task_mapping in SUPPORTED_TASKS_MAPPINGS:

for model_name, pipeline in task_mapping.items():

@@ -92,7 +142,9 @@ def _get_task_class(mapping, pipeline_class_name):

task_class = mapping.get(model_name, None)

if task_class is not None:

return task_class

- raise ValueError(f"AutoPipeline can't find a pipeline linked to {pipeline_class_name} for {model_name}")

+

+ if throw_error_if_not_exist:

+ raise ValueError(f"AutoPipeline can't find a pipeline linked to {pipeline_class_name} for {model_name}")
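The lookup added above can be sketched in isolation. This is a simplified re-implementation that uses hypothetical stand-in classes instead of the real diffusers pipelines. It assumes that the part of `get_model` hidden by the hunk matches pipelines by class `__name__`. A decoder pipeline is first mapped to its model family ("kandinsky22"), then to the combined pipeline registered for that family. With `throw_error_if_not_exist=False`, an unknown class falls through and returns `None` rather than raising.

```python
from collections import OrderedDict


# Hypothetical stand-ins for the real diffusers classes, used only to show the lookup logic.
class KandinskyV22Pipeline: ...
class KandinskyV22CombinedPipeline: ...
class KandinskyV22Img2ImgPipeline: ...
class KandinskyV22Img2ImgCombinedPipeline: ...


# Task mappings: model family name -> pipeline class.
TEXT2IMAGE = OrderedDict([("kandinsky22", KandinskyV22CombinedPipeline)])
IMAGE2IMAGE = OrderedDict([("kandinsky22", KandinskyV22Img2ImgCombinedPipeline)])
TEXT2IMAGE_DECODER = OrderedDict([("kandinsky22", KandinskyV22Pipeline)])
IMAGE2IMAGE_DECODER = OrderedDict([("kandinsky22", KandinskyV22Img2ImgPipeline)])
SUPPORTED = [TEXT2IMAGE, IMAGE2IMAGE, TEXT2IMAGE_DECODER, IMAGE2IMAGE_DECODER]


def get_task_class(mapping, pipeline_class_name, throw_error_if_not_exist=True):
    # Find the model family that the given class name belongs to.
    model_name = None
    for task_mapping in SUPPORTED:
        for name, pipeline in task_mapping.items():
            if pipeline.__name__ == pipeline_class_name:
                model_name = name
                break
        if model_name is not None:
            break
    # Return the pipeline registered for that family in the requested task mapping.
    if model_name is not None and model_name in mapping:
        return mapping[model_name]
    if throw_error_if_not_exist:
        raise ValueError(f"can't find a pipeline linked to {pipeline_class_name}")
    return None


def get_connected_pipeline(pipeline_cls):
    # Only decoder pipelines have a connected (combined) pipeline.
    if pipeline_cls in TEXT2IMAGE_DECODER.values():
        return get_task_class(TEXT2IMAGE, pipeline_cls.__name__, throw_error_if_not_exist=False)
    if pipeline_cls in IMAGE2IMAGE_DECODER.values():
        return get_task_class(IMAGE2IMAGE, pipeline_cls.__name__, throw_error_if_not_exist=False)
    return None


print(get_connected_pipeline(KandinskyV22Pipeline).__name__)
```

Because the decoder mappings are private (`_AUTO_..._DECODER_PIPELINES_MAPPING`), only decoder checkpoints such as `kandinsky-community/kandinsky-2-2-decoder` resolve to a combined pipeline. The combined pipelines themselves map to `None`.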

def _get_signature_keys(obj):

@@ -336,7 +388,7 @@ class AutoPipelineForText2Image(ConfigMixin):

if len(missing_modules) > 0:

raise ValueError(

- f"Pipeline {text_2_image_cls} expected {expected_modules}, but only {set(passed_class_obj.keys()) + set(original_class_obj.keys())} were passed"

+ f"Pipeline {text_2_image_cls} expected {expected_modules}, but only {set(list(passed_class_obj.keys()) + list(original_class_obj.keys()))} were passed"

)

model = text_2_image_cls(**text_2_image_kwargs)

@@ -581,7 +633,7 @@ class AutoPipelineForImage2Image(ConfigMixin):

if len(missing_modules) > 0:

raise ValueError(

- f"Pipeline {image_2_image_cls} expected {expected_modules}, but only {set(passed_class_obj.keys()) + set(original_class_obj.keys())} were passed"

+ f"Pipeline {image_2_image_cls} expected {expected_modules}, but only {set(list(passed_class_obj.keys()) + list(original_class_obj.keys()))} were passed"

)

model = image_2_image_cls(**image_2_image_kwargs)

@@ -824,7 +876,7 @@ class AutoPipelineForInpainting(ConfigMixin):

if len(missing_modules) > 0:

raise ValueError(

- f"Pipeline {inpainting_cls} expected {expected_modules}, but only {set(passed_class_obj.keys()) + set(original_class_obj.keys())} were passed"

+ f"Pipeline {inpainting_cls} expected {expected_modules}, but only {set(list(passed_class_obj.keys()) + list(original_class_obj.keys()))} were passed"

)

model = inpainting_cls(**inpainting_kwargs)
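The three error-message hunks above fix the same bug. Python sets do not support `+`, so the old f-string raised a `TypeError` while it was building the `ValueError` message, and that `TypeError` hid the real "missing modules" error. The minimal sketch below uses made-up module dicts to reproduce the failure and check the patched expression.

```python
# Made-up component dicts standing in for passed_class_obj / original_class_obj.
passed = {"unet": 1, "scheduler": 2}
original = {"movq": 3}

# Old expression: `set + set` is not defined, so this raises TypeError.
try:
    set(passed.keys()) + set(original.keys())
except TypeError as err:
    old_error = err
else:
    old_error = None

# Patched expression: concatenate the key lists first, then deduplicate with set().
fixed = set(list(passed.keys()) + list(original.keys()))

# An equivalent spelling uses the set union operator.
union = set(passed) | set(original)

print(type(old_error).__name__, sorted(fixed))
```

The union form produces the same set and avoids the intermediate lists. The patch's form is equally correct.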

diff --git a/src/diffusers/pipelines/kandinsky/__init__.py b/src/diffusers/pipelines/kandinsky/__init__.py

index 09bb84e7fd..946d316490 100644

--- a/src/diffusers/pipelines/kandinsky/__init__.py

+++ b/src/diffusers/pipelines/kandinsky/__init__.py

@@ -12,6 +12,11 @@ except OptionalDependencyNotAvailable:

from ...utils.dummy_torch_and_transformers_objects import *

else:

from .pipeline_kandinsky import KandinskyPipeline

+ from .pipeline_kandinsky_combined import (

+ KandinskyCombinedPipeline,

+ KandinskyImg2ImgCombinedPipeline,

+ KandinskyInpaintCombinedPipeline,

+ )

from .pipeline_kandinsky_img2img import KandinskyImg2ImgPipeline

from .pipeline_kandinsky_inpaint import KandinskyInpaintPipeline

from .pipeline_kandinsky_prior import KandinskyPriorPipeline, KandinskyPriorPipelineOutput

diff --git a/src/diffusers/pipelines/kandinsky/pipeline_kandinsky.py b/src/diffusers/pipelines/kandinsky/pipeline_kandinsky.py

index 8e42119191..89afa0060e 100644

--- a/src/diffusers/pipelines/kandinsky/pipeline_kandinsky.py

+++ b/src/diffusers/pipelines/kandinsky/pipeline_kandinsky.py

@@ -12,7 +12,7 @@

# See the License for the specific language governing permissions and

# limitations under the License.

-from typing import List, Optional, Union

+from typing import Callable, List, Optional, Union

import torch

from transformers import (

@@ -269,6 +269,8 @@ class KandinskyPipeline(DiffusionPipeline):

generator: Optional[Union[torch.Generator, List[torch.Generator]]] = None,

latents: Optional[torch.FloatTensor] = None,

output_type: Optional[str] = "pil",

+ callback: Optional[Callable[[int, int, torch.FloatTensor], None]] = None,

+ callback_steps: int = 1,

return_dict: bool = True,

):

"""

@@ -309,6 +311,12 @@ class KandinskyPipeline(DiffusionPipeline):

output_type (`str`, *optional*, defaults to `"pil"`):

The output format of the generate image. Choose between: `"pil"` (`PIL.Image.Image`), `"np"`

(`np.array`) or `"pt"` (`torch.Tensor`).

+ callback (`Callable`, *optional*):

+ A function that is called every `callback_steps` steps during inference. The function is called with the

+ following arguments: `callback(step: int, timestep: int, latents: torch.FloatTensor)`.

+ callback_steps (`int`, *optional*, defaults to 1):

+ The frequency at which the `callback` function is called. If not specified, the callback is called at

+ every step.

return_dict (`bool`, *optional*, defaults to `True`):

Whether or not to return a [`~pipelines.ImagePipelineOutput`] instead of a plain tuple.

@@ -397,6 +405,10 @@ class KandinskyPipeline(DiffusionPipeline):

latents,

generator=generator,

).prev_sample

+

+ if callback is not None and i % callback_steps == 0:

+ callback(i, t, latents)

+

# post-processing

image = self.movq.decode(latents, force_not_quantize=True)["sample"]
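The new `callback` / `callback_steps` hook fires on every loop index `i` where `i % callback_steps == 0`. The first step therefore always triggers it. The sketch below is a toy stand-in: the "latents" are a plain float and the scheduler step is a multiplication, so that only the firing pattern is exercised. The timestep values are made up.

```python
from typing import Callable, List, Optional


def run_denoising_loop(
    timesteps: List[int],
    callback: Optional[Callable[[int, int, float], None]] = None,
    callback_steps: int = 1,
) -> float:
    # Toy stand-in for `self.scheduler.step(...)`: the "latents" are a float here.
    latents = 1.0
    for i, t in enumerate(timesteps):
        latents = latents * 0.9
        # Same condition as the patch: fire on loop indices divisible by callback_steps.
        if callback is not None and i % callback_steps == 0:
            callback(i, t, latents)
    return latents


calls = []
run_denoising_loop(
    list(range(999, -1, -100)),  # 10 made-up timesteps: 999, 899, ..., 99
    callback=lambda i, t, x: calls.append((i, t)),
    callback_steps=3,
)
print(calls)
```

The callback receives the loop index and the scheduler timestep, not a global step counter. With 10 steps and `callback_steps=3` it fires at indices 0, 3, 6 and 9.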

diff --git a/src/diffusers/pipelines/kandinsky/pipeline_kandinsky_combined.py b/src/diffusers/pipelines/kandinsky/pipeline_kandinsky_combined.py

new file mode 100644

index 0000000000..c7f439fbab

--- /dev/null

+++ b/src/diffusers/pipelines/kandinsky/pipeline_kandinsky_combined.py

@@ -0,0 +1,791 @@

+# Copyright 2023 The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+from typing import Callable, List, Optional, Union

+

+import PIL

+import torch

+from transformers import (

+ CLIPImageProcessor,

+ CLIPTextModelWithProjection,

+ CLIPTokenizer,

+ CLIPVisionModelWithProjection,

+ XLMRobertaTokenizer,

+)

+

+from ...models import PriorTransformer, UNet2DConditionModel, VQModel

+from ...schedulers import DDIMScheduler, DDPMScheduler, UnCLIPScheduler

+from ...utils import (

+ replace_example_docstring,

+)

+from ..pipeline_utils import DiffusionPipeline

+from .pipeline_kandinsky import KandinskyPipeline

+from .pipeline_kandinsky_img2img import KandinskyImg2ImgPipeline

+from .pipeline_kandinsky_inpaint import KandinskyInpaintPipeline

+from .pipeline_kandinsky_prior import KandinskyPriorPipeline

+from .text_encoder import MultilingualCLIP

+

+

+TEXT2IMAGE_EXAMPLE_DOC_STRING = """

+ Examples:

+ ```py

+ from diffusers import AutoPipelineForText2Image

+ import torch

+

+ pipe = AutoPipelineForText2Image.from_pretrained(

+ "kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16

+ )

+ pipe.enable_model_cpu_offload()

+

+ prompt = "A lion in galaxies, spirals, nebulae, stars, smoke, iridescent, intricate detail, octane render, 8k"

+

+ image = pipe(prompt=prompt, num_inference_steps=25).images[0]

+ ```

+"""

+

+IMAGE2IMAGE_EXAMPLE_DOC_STRING = """

+ Examples:

+ ```py

+ from diffusers import AutoPipelineForImage2Image

+ import torch

+ import requests

+ from io import BytesIO

+ from PIL import Image


+

+ pipe = AutoPipelineForImage2Image.from_pretrained(

+ "kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16

+ )

+ pipe.enable_model_cpu_offload()

+

+ prompt = "A fantasy landscape, Cinematic lighting"

+ negative_prompt = "low quality, bad quality"

+

+ url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

+

+ response = requests.get(url)

+ image = Image.open(BytesIO(response.content)).convert("RGB")

+ image.thumbnail((768, 768))

+

+ image = pipe(prompt=prompt, negative_prompt=negative_prompt, image=image, num_inference_steps=25).images[0]

+ ```

+"""

+

+INPAINT_EXAMPLE_DOC_STRING = """

+ Examples:

+ ```py

+ from diffusers import AutoPipelineForInpainting

+ from diffusers.utils import load_image

+ import torch

+ import numpy as np

+

+ pipe = AutoPipelineForInpainting.from_pretrained(

+ "kandinsky-community/kandinsky-2-1-inpaint", torch_dtype=torch.float16

+ )

+ pipe.enable_model_cpu_offload()

+

+ prompt = "A fantasy landscape, Cinematic lighting"

+ negative_prompt = "low quality, bad quality"

+

+ original_image = load_image(

+ "https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main" "/kandinsky/cat.png"

+ )

+

+ mask = np.zeros((768, 768), dtype=np.float32)

+ # Let's mask out an area above the cat's head

+ mask[:250, 250:-250] = 1

+

+ image = pipe(prompt=prompt, negative_prompt=negative_prompt, image=original_image, mask_image=mask, num_inference_steps=25).images[0]

+ ```

+"""
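The inpainting example builds its mask from zeros and sets the box above the cat's head to 1, which is also the convention adopted in the `kandinsky.mdx` hunk. This implies that, in this version, 1 marks the pixels to repaint and 0 marks the pixels to keep. Treat that as an inference from the diff rather than a documented contract. The plain-Python sketch below mirrors the NumPy slicing `mask[:250, 250:-250] = 1` without needing NumPy. `make_box_mask` is a hypothetical helper.

```python
def make_box_mask(height, width, top, bottom, left, right):
    # Hypothetical helper: start from all zeros (keep) and set the box to 1.0 (repaint).
    # Row/column bounds are half-open, like Python slices.
    mask = [[0.0] * width for _ in range(height)]
    for row in range(top, bottom):
        for col in range(left, right):
            mask[row][col] = 1.0
    return mask


# Equivalent of `mask[:250, 250:-250] = 1` on a 768x768 array.
mask = make_box_mask(768, 768, top=0, bottom=250, left=250, right=768 - 250)
print(sum(map(sum, mask)))
```

The box covers rows 0-249 and columns 250-517, so 250 x 268 pixels are marked for repainting.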

+

+

+class KandinskyCombinedPipeline(DiffusionPipeline):

+ """

+ Combined Pipeline for text-to-image generation using Kandinsky

+

+ This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

+ library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

+

+ Args:

+ text_encoder ([`MultilingualCLIP`]):

+ Frozen text-encoder.

+ tokenizer ([`XLMRobertaTokenizer`]):

+ Tokenizer of class [`XLMRobertaTokenizer`].

+ scheduler (Union[`DDIMScheduler`,`DDPMScheduler`]):

+ A scheduler to be used in combination with `unet` to generate image latents.

+ unet ([`UNet2DConditionModel`]):

+ Conditional U-Net architecture to denoise the image embedding.

+ movq ([`VQModel`]):

+ MoVQ Decoder to generate the image from the latents.

+ prior_prior ([`PriorTransformer`]):

+ The canonical unCLIP prior to approximate the image embedding from the text embedding.

+ prior_image_encoder ([`CLIPVisionModelWithProjection`]):

+ Frozen image-encoder.

+ prior_text_encoder ([`CLIPTextModelWithProjection`]):

+ Frozen text-encoder.

+ prior_tokenizer (`CLIPTokenizer`):

+ Tokenizer of class

+ [CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

+ prior_scheduler ([`UnCLIPScheduler`]):

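+ A scheduler to be used in combination with `prior` to generate the image embedding.

+ prior_image_processor ([`CLIPImageProcessor`]):

+ An image processor used to preprocess images for `prior_image_encoder`.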

+ """

+

+ _load_connected_pipes = True

+

+ def __init__(

+ self,

+ text_encoder: MultilingualCLIP,

+ tokenizer: XLMRobertaTokenizer,

+ unet: UNet2DConditionModel,

+ scheduler: Union[DDIMScheduler, DDPMScheduler],

+ movq: VQModel,

+ prior_prior: PriorTransformer,

+ prior_image_encoder: CLIPVisionModelWithProjection,

+ prior_text_encoder: CLIPTextModelWithProjection,

+ prior_tokenizer: CLIPTokenizer,

+ prior_scheduler: UnCLIPScheduler,

+ prior_image_processor: CLIPImageProcessor,

+ ):

+ super().__init__()

+

+ self.register_modules(

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ unet=unet,

+ scheduler=scheduler,

+ movq=movq,

+ prior_prior=prior_prior,

+ prior_image_encoder=prior_image_encoder,

+ prior_text_encoder=prior_text_encoder,

+ prior_tokenizer=prior_tokenizer,

+ prior_scheduler=prior_scheduler,

+ prior_image_processor=prior_image_processor,

+ )

+ self.prior_pipe = KandinskyPriorPipeline(

+ prior=prior_prior,

+ image_encoder=prior_image_encoder,

+ text_encoder=prior_text_encoder,

+ tokenizer=prior_tokenizer,

+ scheduler=prior_scheduler,

+ image_processor=prior_image_processor,

+ )

+ self.decoder_pipe = KandinskyPipeline(

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ unet=unet,

+ scheduler=scheduler,

+ movq=movq,

+ )

+

+ def enable_model_cpu_offload(self, gpu_id=0):

+ r"""

+ Offloads all models to CPU using accelerate, reducing memory usage with a low impact on performance. Compared

+ to `enable_sequential_cpu_offload`, this method moves one whole model at a time to the GPU when its `forward`

+ method is called, and the model remains in GPU until the next model runs. Memory savings are lower than with

+ `enable_sequential_cpu_offload`, but performance is much better due to the iterative execution of the `unet`.

+ """

+ self.prior_pipe.enable_model_cpu_offload(gpu_id=gpu_id)

+ self.decoder_pipe.enable_model_cpu_offload(gpu_id=gpu_id)

+

+ def progress_bar(self, iterable=None, total=None):

+ self.prior_pipe.progress_bar(iterable=iterable, total=total)

+ self.decoder_pipe.progress_bar(iterable=iterable, total=total)


+

+ def set_progress_bar_config(self, **kwargs):

+ self.prior_pipe.set_progress_bar_config(**kwargs)

+ self.decoder_pipe.set_progress_bar_config(**kwargs)

+

+ @torch.no_grad()

+ @replace_example_docstring(TEXT2IMAGE_EXAMPLE_DOC_STRING)

+ def __call__(

+ self,

+ prompt: Union[str, List[str]],

+ negative_prompt: Optional[Union[str, List[str]]] = None,

+ num_inference_steps: int = 100,

+ guidance_scale: float = 4.0,

+ num_images_per_prompt: int = 1,

+ height: int = 512,

+ width: int = 512,

+ prior_guidance_scale: float = 4.0,

+ prior_num_inference_steps: int = 25,

+ generator: Optional[Union[torch.Generator, List[torch.Generator]]] = None,

+ latents: Optional[torch.FloatTensor] = None,

+ output_type: Optional[str] = "pil",

+ callback: Optional[Callable[[int, int, torch.FloatTensor], None]] = None,

+ callback_steps: int = 1,

+ return_dict: bool = True,

+ ):

+ """

+ Function invoked when calling the pipeline for generation.

+

+ Args:

+ prompt (`str` or `List[str]`):

+ The prompt or prompts to guide the image generation.

+ negative_prompt (`str` or `List[str]`, *optional*):

+ The prompt or prompts not to guide the image generation. Ignored when not using guidance (i.e., ignored

+ if `guidance_scale` is less than `1`).

+ num_images_per_prompt (`int`, *optional*, defaults to 1):

+ The number of images to generate per prompt.

+ num_inference_steps (`int`, *optional*, defaults to 100):

+ The number of denoising steps. More denoising steps usually lead to a higher quality image at the

+ expense of slower inference.

+ height (`int`, *optional*, defaults to 512):

+ The height in pixels of the generated image.

+ width (`int`, *optional*, defaults to 512):

+ The width in pixels of the generated image.

+ prior_guidance_scale (`float`, *optional*, defaults to 4.0):

+ Guidance scale as defined in [Classifier-Free Diffusion Guidance](https://arxiv.org/abs/2207.12598).

+ `guidance_scale` is defined as `w` of equation 2. of [Imagen

+ Paper](https://arxiv.org/pdf/2205.11487.pdf). Guidance scale is enabled by setting `guidance_scale >

+ 1`. A higher guidance scale encourages the model to generate images that are closely linked to the text `prompt`,

+ usually at the expense of lower image quality.

+ prior_num_inference_steps (`int`, *optional*, defaults to 25):

+ The number of denoising steps. More denoising steps usually lead to a higher quality image at the

+ expense of slower inference.

+ guidance_scale (`float`, *optional*, defaults to 4.0):

+ Guidance scale as defined in [Classifier-Free Diffusion Guidance](https://arxiv.org/abs/2207.12598).

+ `guidance_scale` is defined as `w` of equation 2. of [Imagen

+ Paper](https://arxiv.org/pdf/2205.11487.pdf). Guidance scale is enabled by setting `guidance_scale >

+ 1`. A higher guidance scale encourages the model to generate images that are closely linked to the text `prompt`,

+ usually at the expense of lower image quality.

+ generator (`torch.Generator` or `List[torch.Generator]`, *optional*):

+ One or a list of [torch generator(s)](https://pytorch.org/docs/stable/generated/torch.Generator.html)

+ to make generation deterministic.

+ latents (`torch.FloatTensor`, *optional*):

+ Pre-generated noisy latents, sampled from a Gaussian distribution, to be used as inputs for image

+ generation. Can be used to tweak the same generation with different prompts. If not provided, a latents

+ tensor will be generated by sampling using the supplied random `generator`.

+ output_type (`str`, *optional*, defaults to `"pil"`):

+ The output format of the generated image. Choose between: `"pil"` (`PIL.Image.Image`), `"np"`

+ (`np.array`) or `"pt"` (`torch.Tensor`).

+ callback (`Callable`, *optional*):

+ A function that is called every `callback_steps` steps during inference. The function is called with the

+ following arguments: `callback(step: int, timestep: int, latents: torch.FloatTensor)`.

+ callback_steps (`int`, *optional*, defaults to 1):

+ The frequency at which the `callback` function is called. If not specified, the callback is called at

+ every step.

+ return_dict (`bool`, *optional*, defaults to `True`):

+ Whether or not to return a [`~pipelines.ImagePipelineOutput`] instead of a plain tuple.

+

+ Examples:

+

+ Returns:

+ [`~pipelines.ImagePipelineOutput`] or `tuple`

+ """

+ prior_outputs = self.prior_pipe(

+ prompt=prompt,

+ negative_prompt=negative_prompt,

+ num_images_per_prompt=num_images_per_prompt,

+ num_inference_steps=prior_num_inference_steps,

+ generator=generator,

+ latents=latents,

+ guidance_scale=prior_guidance_scale,

+ output_type="pt",

+ return_dict=False,

+ )

+ image_embeds = prior_outputs[0]

+ negative_image_embeds = prior_outputs[1]

+

+ prompt = [prompt] if not isinstance(prompt, (list, tuple)) else prompt

+

+ if len(prompt) < image_embeds.shape[0] and image_embeds.shape[0] % len(prompt) == 0:

+ prompt = (image_embeds.shape[0] // len(prompt)) * prompt

+

+ outputs = self.decoder_pipe(

+ prompt=prompt,

+ image_embeds=image_embeds,

+ negative_image_embeds=negative_image_embeds,

+ width=width,

+ height=height,

+ num_inference_steps=num_inference_steps,

+ generator=generator,

+ guidance_scale=guidance_scale,

+ output_type=output_type,

+ callback=callback,

+ callback_steps=callback_steps,

+ return_dict=return_dict,

+ )

+ return outputs
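`KandinskyCombinedPipeline.__call__` runs the prior first and then passes its `image_embeds` to the decoder. Because the prior expands the batch by `num_images_per_prompt`, the prompt list is repeated before the decoder call so that its length matches `image_embeds.shape[0]`. The sketch below isolates that broadcasting step, with an integer `batch_size` standing in for `image_embeds.shape[0]`. `broadcast_prompt` is a hypothetical name.

```python
from typing import List, Sequence, Union


def broadcast_prompt(prompt: Union[str, Sequence[str]], batch_size: int) -> List[str]:
    # Hypothetical helper mirroring the combined pipeline's logic:
    # wrap a single string in a list...
    prompt = [prompt] if not isinstance(prompt, (list, tuple)) else list(prompt)
    # ...then repeat the list when the embedding batch is a whole multiple of it.
    if len(prompt) < batch_size and batch_size % len(prompt) == 0:
        prompt = (batch_size // len(prompt)) * prompt
    return prompt


print(broadcast_prompt("a cat", 3))
print(broadcast_prompt(["a cat", "a dog"], 4))
```

List multiplication tiles the list (`["a cat", "a dog", "a cat", "a dog"]`) rather than interleaving it. When the batch size is not a whole multiple of the prompt count, the prompt list is passed through unchanged.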

+

+

+class KandinskyImg2ImgCombinedPipeline(DiffusionPipeline):

+ """

+ Combined Pipeline for image-to-image generation using Kandinsky

+

+ This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

+ library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

+

+ Args:

+ text_encoder ([`MultilingualCLIP`]):

+ Frozen text-encoder.

+ tokenizer ([`XLMRobertaTokenizer`]):

+ Tokenizer of class [`XLMRobertaTokenizer`].

+ scheduler (Union[`DDIMScheduler`,`DDPMScheduler`]):

+ A scheduler to be used in combination with `unet` to generate image latents.

+ unet ([`UNet2DConditionModel`]):

+ Conditional U-Net architecture to denoise the image embedding.

+ movq ([`VQModel`]):

+ MoVQ Decoder to generate the image from the latents.

+ prior_prior ([`PriorTransformer`]):

+ The canonical unCLIP prior to approximate the image embedding from the text embedding.

+ prior_image_encoder ([`CLIPVisionModelWithProjection`]):

+ Frozen image-encoder.

+ prior_text_encoder ([`CLIPTextModelWithProjection`]):

+ Frozen text-encoder.

+ prior_tokenizer (`CLIPTokenizer`):

+ Tokenizer of class

+ [CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

+ prior_scheduler ([`UnCLIPScheduler`]):

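+ A scheduler to be used in combination with `prior` to generate the image embedding.

+ prior_image_processor ([`CLIPImageProcessor`]):

+ An image processor used to preprocess images for `prior_image_encoder`.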

+ """

+

+ _load_connected_pipes = True

+

+ def __init__(

+ self,

+ text_encoder: MultilingualCLIP,

+ tokenizer: XLMRobertaTokenizer,

+ unet: UNet2DConditionModel,

+ scheduler: Union[DDIMScheduler, DDPMScheduler],

+ movq: VQModel,

+ prior_prior: PriorTransformer,

+ prior_image_encoder: CLIPVisionModelWithProjection,

+ prior_text_encoder: CLIPTextModelWithProjection,

+ prior_tokenizer: CLIPTokenizer,

+ prior_scheduler: UnCLIPScheduler,

+ prior_image_processor: CLIPImageProcessor,

+ ):

+ super().__init__()

+

+ self.register_modules(

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ unet=unet,

+ scheduler=scheduler,

+ movq=movq,

+ prior_prior=prior_prior,

+ prior_image_encoder=prior_image_encoder,

+ prior_text_encoder=prior_text_encoder,

+ prior_tokenizer=prior_tokenizer,

+ prior_scheduler=prior_scheduler,

+ prior_image_processor=prior_image_processor,

+ )

+ self.prior_pipe = KandinskyPriorPipeline(

+ prior=prior_prior,

+ image_encoder=prior_image_encoder,

+ text_encoder=prior_text_encoder,

+ tokenizer=prior_tokenizer,

+ scheduler=prior_scheduler,

+ image_processor=prior_image_processor,

+ )

+ self.decoder_pipe = KandinskyImg2ImgPipeline(

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ unet=unet,

+ scheduler=scheduler,

+ movq=movq,

+ )

+

+ def enable_model_cpu_offload(self, gpu_id=0):

+ r"""

+ Offloads all models to CPU using accelerate, reducing memory usage with a low impact on performance. Compared

+ to `enable_sequential_cpu_offload`, this method moves one whole model at a time to the GPU when its `forward`

+ method is called, and the model remains in GPU until the next model runs. Memory savings are lower than with

+ `enable_sequential_cpu_offload`, but performance is much better due to the iterative execution of the `unet`.

+ """

+ self.prior_pipe.enable_model_cpu_offload(gpu_id=gpu_id)

+ self.decoder_pipe.enable_model_cpu_offload(gpu_id=gpu_id)

+

+ def progress_bar(self, iterable=None, total=None):

+ self.prior_pipe.progress_bar(iterable=iterable, total=total)

+ self.decoder_pipe.progress_bar(iterable=iterable, total=total)


+

+ def set_progress_bar_config(self, **kwargs):

+ self.prior_pipe.set_progress_bar_config(**kwargs)

+ self.decoder_pipe.set_progress_bar_config(**kwargs)

+

+ @torch.no_grad()

+ @replace_example_docstring(IMAGE2IMAGE_EXAMPLE_DOC_STRING)

+ def __call__(

+ self,

+ prompt: Union[str, List[str]],

+ image: Union[torch.FloatTensor, PIL.Image.Image, List[torch.FloatTensor], List[PIL.Image.Image]],

+ negative_prompt: Optional[Union[str, List[str]]] = None,

+ num_inference_steps: int = 100,

+ guidance_scale: float = 4.0,

+ num_images_per_prompt: int = 1,

+ strength: float = 0.3,

+ height: int = 512,

+ width: int = 512,

+ prior_guidance_scale: float = 4.0,

+ prior_num_inference_steps: int = 25,

+ generator: Optional[Union[torch.Generator, List[torch.Generator]]] = None,

+ latents: Optional[torch.FloatTensor] = None,

+ output_type: Optional[str] = "pil",

+ callback: Optional[Callable[[int, int, torch.FloatTensor], None]] = None,

+ callback_steps: int = 1,

+ return_dict: bool = True,

+ ):

+ """

+ Function invoked when calling the pipeline for generation.

+

+ Args:

+ prompt (`str` or `List[str]`):

+ The prompt or prompts to guide the image generation.

+ image (`torch.FloatTensor`, `PIL.Image.Image`, `np.ndarray`, `List[torch.FloatTensor]`, `List[PIL.Image.Image]`, or `List[np.ndarray]`):

+ `Image`, or tensor representing an image batch, that will be used as the starting point for the

+ process. Can also accept image latents as `image`; if latents are passed directly, they will not be encoded

+ again.

+ negative_prompt (`str` or `List[str]`, *optional*):

+ The prompt or prompts not to guide the image generation. Ignored when not using guidance (i.e., ignored

+ if `guidance_scale` is less than `1`).

+ num_images_per_prompt (`int`, *optional*, defaults to 1):

+ The number of images to generate per prompt.

+ num_inference_steps (`int`, *optional*, defaults to 100):

+ The number of denoising steps. More denoising steps usually lead to a higher quality image at the

+ expense of slower inference.

+ height (`int`, *optional*, defaults to 512):

+ The height in pixels of the generated image.

+ width (`int`, *optional*, defaults to 512):

+ The width in pixels of the generated image.

+ strength (`float`, *optional*, defaults to 0.3):

+ Conceptually, indicates how much to transform the reference `image`. Must be between 0 and 1. `image`

+ will be used as a starting point, adding more noise to it the larger the `strength`. The number of

+ denoising steps depends on the amount of noise initially added. When `strength` is 1, added noise will

+ be maximum and the denoising process will run for the full number of iterations specified in

+ `num_inference_steps`. A value of 1, therefore, essentially ignores `image`.

+ prior_guidance_scale (`float`, *optional*, defaults to 4.0):

+ Guidance scale as defined in [Classifier-Free Diffusion Guidance](https://arxiv.org/abs/2207.12598).

+ `guidance_scale` is defined as `w` of equation 2. of [Imagen

+ Paper](https://arxiv.org/pdf/2205.11487.pdf). Guidance scale is enabled by setting `guidance_scale >

+ 1`. A higher guidance scale encourages the model to generate images that are closely linked to the text `prompt`,

+ usually at the expense of lower image quality.

+ prior_num_inference_steps (`int`, *optional*, defaults to 25):

+ The number of denoising steps. More denoising steps usually lead to a higher quality image at the

+ expense of slower inference.

+ guidance_scale (`float`, *optional*, defaults to 4.0):

+ Guidance scale as defined in [Classifier-Free Diffusion Guidance](https://arxiv.org/abs/2207.12598).

+ `guidance_scale` is defined as `w` of equation 2. of [Imagen

+ Paper](https://arxiv.org/pdf/2205.11487.pdf). Guidance scale is enabled by setting `guidance_scale >

+ 1`. A higher guidance scale encourages the model to generate images that are closely linked to the text `prompt`,

+ usually at the expense of lower image quality.

+ generator (`torch.Generator` or `List[torch.Generator]`, *optional*):

+ One or a list of [torch generator(s)](https://pytorch.org/docs/stable/generated/torch.Generator.html)

+ to make generation deterministic.

+ latents (`torch.FloatTensor`, *optional*):

+ Pre-generated noisy latents, sampled from a Gaussian distribution, to be used as inputs for image

+ generation. Can be used to tweak the same generation with different prompts. If not provided, a latents

+ tensor will be generated by sampling using the supplied random `generator`.

+ output_type (`str`, *optional*, defaults to `"pil"`):

+ The output format of the generated image. Choose between: `"pil"` (`PIL.Image.Image`), `"np"`

+ (`np.array`) or `"pt"` (`torch.Tensor`).

+ callback (`Callable`, *optional*):

+ A function that is called every `callback_steps` steps during inference. The function is called with the

+ following arguments: `callback(step: int, timestep: int, latents: torch.FloatTensor)`.

+ callback_steps (`int`, *optional*, defaults to 1):

+ The frequency at which the `callback` function is called. If not specified, the callback is called at

+ every step.

+ return_dict (`bool`, *optional*, defaults to `True`):

+ Whether or not to return a [`~pipelines.ImagePipelineOutput`] instead of a plain tuple.

+

+ Examples:

+

+ Returns:

+ [`~pipelines.ImagePipelineOutput`] or `tuple`

+ """

+ prior_outputs = self.prior_pipe(

+ prompt=prompt,

+ negative_prompt=negative_prompt,

+ num_images_per_prompt=num_images_per_prompt,

+ num_inference_steps=prior_num_inference_steps,

+ generator=generator,

+ latents=latents,

+ guidance_scale=prior_guidance_scale,

+ output_type="pt",

+ return_dict=False,

+ )

+ image_embeds = prior_outputs[0]

+ negative_image_embeds = prior_outputs[1]

+

+ prompt = [prompt] if not isinstance(prompt, (list, tuple)) else prompt

+ image = [image] if isinstance(image, PIL.Image.Image) else image

+

+ if len(prompt) < image_embeds.shape[0] and image_embeds.shape[0] % len(prompt) == 0:

+ prompt = (image_embeds.shape[0] // len(prompt)) * prompt

+

+ if (

+ isinstance(image, (list, tuple))

+ and len(image) < image_embeds.shape[0]

+ and image_embeds.shape[0] % len(image) == 0

+ ):

+ image = (image_embeds.shape[0] // len(image)) * image

+

+ outputs = self.decoder_pipe(

+ prompt=prompt,

+ image=image,

+ image_embeds=image_embeds,

+ negative_image_embeds=negative_image_embeds,

+ strength=strength,

+ width=width,

+ height=height,

+ num_inference_steps=num_inference_steps,

+ generator=generator,

+ guidance_scale=guidance_scale,

+ output_type=output_type,

+ callback=callback,

+ callback_steps=callback_steps,

+ return_dict=return_dict,

+ )

+ return outputs
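The `strength` docstring says that the number of denoising steps depends on how much noise is added to `image`. The sketch below shows the arithmetic, assuming `KandinskyImg2ImgPipeline` follows the `get_timesteps` pattern common to diffusers img2img pipelines. Under that assumption, only the last `int(num_inference_steps * strength)` steps of the schedule are run. `img2img_schedule` is a hypothetical helper that returns the starting index and the number of steps actually executed.

```python
def img2img_schedule(num_inference_steps: int, strength: float):
    # Hypothetical helper: steps' worth of noise to add, capped at the full schedule.
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    # Skip the earliest (noisiest) steps; only the tail of the schedule is denoised.
    t_start = max(num_inference_steps - init_timestep, 0)
    return t_start, num_inference_steps - t_start


# With this combined pipeline's defaults (100 steps, strength 0.3):
print(img2img_schedule(100, 0.3))
```

With the defaults, roughly 30 of the 100 decoder steps run. `strength=1.0` runs the full schedule, which matches the docstring's note that a value of 1 essentially ignores `image`.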

+

+

+class KandinskyInpaintCombinedPipeline(DiffusionPipeline):

+ """

+ Combined Pipeline for inpainting generation using Kandinsky

+

+ This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

+ library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

+

+ Args:

+ text_encoder ([`MultilingualCLIP`]):

+ Frozen text-encoder.

+ tokenizer ([`XLMRobertaTokenizer`]):

+ Tokenizer of class [`XLMRobertaTokenizer`].

+ scheduler (Union[`DDIMScheduler`,`DDPMScheduler`]):

+ A scheduler to be used in combination with `unet` to generate image latents.

+ unet ([`UNet2DConditionModel`]):

+ Conditional U-Net architecture to denoise the image embedding.

+ movq ([`VQModel`]):

+ MoVQ Decoder to generate the image from the latents.

+ prior_prior ([`PriorTransformer`]):

+ The canonical unCLIP prior to approximate the image embedding from the text embedding.

+ prior_image_encoder ([`CLIPVisionModelWithProjection`]):

+ Frozen image-encoder.

+ prior_text_encoder ([`CLIPTextModelWithProjection`]):

+ Frozen text-encoder.

+ prior_tokenizer (`CLIPTokenizer`):

+ Tokenizer of class

+ [CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

+ prior_scheduler ([`UnCLIPScheduler`]):

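+ A scheduler to be used in combination with `prior` to generate the image embedding.

+ prior_image_processor ([`CLIPImageProcessor`]):

+ An image processor used to preprocess images for `prior_image_encoder`.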

+ """

+

+ _load_connected_pipes = True

+

+ def __init__(

+ self,

+ text_encoder: MultilingualCLIP,

+ tokenizer: XLMRobertaTokenizer,

+ unet: UNet2DConditionModel,

+ scheduler: Union[DDIMScheduler, DDPMScheduler],

+ movq: VQModel,

+ prior_prior: PriorTransformer,

+ prior_image_encoder: CLIPVisionModelWithProjection,

+ prior_text_encoder: CLIPTextModelWithProjection,

+ prior_tokenizer: CLIPTokenizer,

+ prior_scheduler: UnCLIPScheduler,

+ prior_image_processor: CLIPImageProcessor,

+ ):

+ super().__init__()

+

+ self.register_modules(

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ unet=unet,

+ scheduler=scheduler,

+ movq=movq,

+ prior_prior=prior_prior,

+ prior_image_encoder=prior_image_encoder,

+ prior_text_encoder=prior_text_encoder,

+ prior_tokenizer=prior_tokenizer,

+ prior_scheduler=prior_scheduler,

+ prior_image_processor=prior_image_processor,

+ )

+ self.prior_pipe = KandinskyPriorPipeline(

+ prior=prior_prior,

+ image_encoder=prior_image_encoder,

+ text_encoder=prior_text_encoder,

+ tokenizer=prior_tokenizer,

+ scheduler=prior_scheduler,

+ image_processor=prior_image_processor,

+ )

+ self.decoder_pipe = KandinskyInpaintPipeline(

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ unet=unet,

+ scheduler=scheduler,

+ movq=movq,

+ )

+

+ def enable_model_cpu_offload(self, gpu_id=0):

+ r"""

+ Offloads all models to CPU using accelerate, reducing memory usage with a low impact on performance. Compared

+ to `enable_sequential_cpu_offload`, this method moves one whole model at a time to the GPU when its `forward`

+ method is called, and the model remains in GPU until the next model runs. Memory savings are lower than with

+ `enable_sequential_cpu_offload`, but performance is much better due to the iterative execution of the `unet`.

+ """

+ self.prior_pipe.enable_model_cpu_offload(gpu_id=gpu_id)

+ self.decoder_pipe.enable_model_cpu_offload(gpu_id=gpu_id)

+

+ def progress_bar(self, iterable=None, total=None):

+ self.prior_pipe.progress_bar(iterable=iterable, total=total)

+ self.decoder_pipe.progress_bar(iterable=iterable, total=total)

+

+ def set_progress_bar_config(self, **kwargs):

+ self.prior_pipe.set_progress_bar_config(**kwargs)

+ self.decoder_pipe.set_progress_bar_config(**kwargs)
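The combined pipeline holds no models of its own for offloading or progress reporting; it forwards those calls to its two sub-pipelines. A minimal sketch of that delegation pattern, using hypothetical stub classes (`SubPipe`, `CombinedPipe`) in place of `KandinskyPriorPipeline` and `KandinskyInpaintPipeline`. In this sketch each configuration call is forwarded to both stages (including `gpu_id`, which is an assumption of the sketch) and has no other side effects:

```python
class SubPipe:
    # Hypothetical stand-in for a prior or decoder sub-pipeline; it only
    # records which configuration calls it receives.
    def __init__(self, name):
        self.name = name
        self.progress_bar_config = {}
        self.offloaded_to = None

    def set_progress_bar_config(self, **kwargs):
        self.progress_bar_config.update(kwargs)

    def enable_model_cpu_offload(self, gpu_id=0):
        self.offloaded_to = gpu_id


class CombinedPipe:
    # Hypothetical stand-in for the combined pipeline.
    def __init__(self):
        self.prior_pipe = SubPipe("prior")
        self.decoder_pipe = SubPipe("decoder")

    def enable_model_cpu_offload(self, gpu_id=0):
        # Forward the requested device to both stages.
        self.prior_pipe.enable_model_cpu_offload(gpu_id=gpu_id)
        self.decoder_pipe.enable_model_cpu_offload(gpu_id=gpu_id)

    def set_progress_bar_config(self, **kwargs):
        # Only configure the progress bars; no offloading side effects.
        self.prior_pipe.set_progress_bar_config(**kwargs)
        self.decoder_pipe.set_progress_bar_config(**kwargs)


pipe = CombinedPipe()
pipe.set_progress_bar_config(disable=True)
pipe.enable_model_cpu_offload(gpu_id=1)
```

Keeping each forwarding method free of unrelated calls means configuring progress bars can never silently change device placement.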

+

+ @torch.no_grad()

+ @replace_example_docstring(INPAINT_EXAMPLE_DOC_STRING)

+ def __call__(

+ self,

+ prompt: Union[str, List[str]],

+ image: Union[torch.FloatTensor, PIL.Image.Image, List[torch.FloatTensor], List[PIL.Image.Image]],

+ mask_image: Union[torch.FloatTensor, PIL.Image.Image, List[torch.FloatTensor], List[PIL.Image.Image]],

+ negative_prompt: Optional[Union[str, List[str]]] = None,

+ num_inference_steps: int = 100,

+ guidance_scale: float = 4.0,

+ num_images_per_prompt: int = 1,

+ height: int = 512,

+ width: int = 512,

+ prior_guidance_scale: float = 4.0,

+ prior_num_inference_steps: int = 25,

+ generator: Optional[Union[torch.Generator, List[torch.Generator]]] = None,

+ latents: Optional[torch.FloatTensor] = None,

+ output_type: Optional[str] = "pil",

+ callback: Optional[Callable[[int, int, torch.FloatTensor], None]] = None,

+ callback_steps: int = 1,

+ return_dict: bool = True,

+ ):

+ """

+ Function invoked when calling the pipeline for generation.

+

+ Args:

+ prompt (`str` or `List[str]`):

+ The prompt or prompts to guide the image generation.

+ image (`torch.FloatTensor`, `PIL.Image.Image`, `np.ndarray`, `List[torch.FloatTensor]`, `List[PIL.Image.Image]`, or `List[np.ndarray]`):

+ `Image`, or tensor representing an image batch, that will be used as the starting point for the

+ process. Can also accept image latents as `image`; if latents are passed directly, they will not be encoded

+ again.

+ mask_image (`torch.FloatTensor`, `PIL.Image.Image`, `List[torch.FloatTensor]`, or `List[PIL.Image.Image]`):

+ Tensor representing an image batch, to mask `image`. White pixels in the mask will be repainted, while

+ black pixels will be preserved. If `mask_image` is a PIL image, it will be converted to a single

+ channel (luminance) before use. If it's a tensor, it should contain one color channel (L) instead of 3,

+ so the expected shape would be `(B, H, W, 1)`.

+ negative_prompt (`str` or `List[str]`, *optional*):

+ The prompt or prompts not to guide the image generation. Ignored when not using guidance (i.e., ignored

+ if `guidance_scale` is less than `1`).

+ num_images_per_prompt (`int`, *optional*, defaults to 1):

+ The number of images to generate per prompt.

+ num_inference_steps (`int`, *optional*, defaults to 100):

+ The number of denoising steps. More denoising steps usually lead to a higher quality image at the

+ expense of slower inference.

+ height (`int`, *optional*, defaults to 512):

+ The height in pixels of the generated image.

+ width (`int`, *optional*, defaults to 512):

+ The width in pixels of the generated image.

+ prior_guidance_scale (`float`, *optional*, defaults to 4.0):

+ Guidance scale for the prior, as defined in [Classifier-Free Diffusion Guidance](https://arxiv.org/abs/2207.12598).

+ `prior_guidance_scale` is defined as `w` of equation 2. of [Imagen

+ Paper](https://arxiv.org/pdf/2205.11487.pdf). Guidance scale is enabled by setting `prior_guidance_scale >

+ 1`. A higher guidance scale encourages the prior to produce image embeddings that are closely linked to the

+ text `prompt`, usually at the expense of lower image quality.

+ prior_num_inference_steps (`int`, *optional*, defaults to 25):

+ The number of denoising steps for the prior. More denoising steps usually lead to a higher quality image

+ embedding at the expense of slower inference.

+ guidance_scale (`float`, *optional*, defaults to 4.0):

+ Guidance scale as defined in [Classifier-Free Diffusion Guidance](https://arxiv.org/abs/2207.12598).

+ `guidance_scale` is defined as `w` of equation 2. of [Imagen

+ Paper](https://arxiv.org/pdf/2205.11487.pdf). Guidance scale is enabled by setting `guidance_scale >

+ 1`. A higher guidance scale encourages the model to generate images that are closely linked to the text `prompt`,

+ usually at the expense of lower image quality.

+ generator (`torch.Generator` or `List[torch.Generator]`, *optional*):

+ One or a list of [torch generator(s)](https://pytorch.org/docs/stable/generated/torch.Generator.html)

+ to make generation deterministic.

+ latents (`torch.FloatTensor`, *optional*):

+ Pre-generated noisy latents, sampled from a Gaussian distribution, to be used as inputs for image

+ generation. Can be used to tweak the same generation with different prompts. If not provided, a latents

+ tensor will be generated by sampling using the supplied random `generator`.

+ output_type (`str`, *optional*, defaults to `"pil"`):

+ The output format of the generated image. Choose between: `"pil"` (`PIL.Image.Image`), `"np"`

+ (`np.array`) or `"pt"` (`torch.Tensor`).

+ callback (`Callable`, *optional*):

+ A function that is called every `callback_steps` steps during inference. The function is called with the

+ following arguments: `callback(step: int, timestep: int, latents: torch.FloatTensor)`.

+ callback_steps (`int`, *optional*, defaults to 1):

+ The frequency at which the `callback` function is called. If not specified, the callback is called at

+ every step.

+ return_dict (`bool`, *optional*, defaults to `True`):

+ Whether or not to return a [`~pipelines.ImagePipelineOutput`] instead of a plain tuple.

+

+ Examples:

+

+ Returns:

+ [`~pipelines.ImagePipelineOutput`] or `tuple`

+ """
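The docstring's mask convention (white pixels are repainted, black pixels are preserved) means a NumPy mask should be 1 where new content is wanted and 0 everywhere else. A minimal sketch, assuming a 768x768 image and a hypothetical region above the subject's head; the final blend only illustrates the convention and is not how the pipeline combines latents internally:

```python
import numpy as np

# 1.0 (white) = repaint, 0.0 (black) = keep the original pixels.
mask = np.zeros((768, 768), dtype=np.float32)
# Hypothetical region above the subject's head to be repainted.
mask[:250, 250:-250] = 1

# Illustrative blend with dummy images: where mask == 1 take the generated
# pixels, where mask == 0 keep the original ones.
original = np.full((768, 768, 3), 0.2, dtype=np.float32)
generated = np.full((768, 768, 3), 0.9, dtype=np.float32)
blended = mask[..., None] * generated + (1.0 - mask[..., None]) * original
```

Starting from `np.zeros` and setting only the target region to 1 keeps the default behavior "preserve everything", which is the safer failure mode if the region is mis-specified.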

+ prior_outputs = self.prior_pipe(

+ prompt=prompt,

+ negative_prompt=negative_prompt,

+ num_images_per_prompt=num_images_per_prompt,

+ num_inference_steps=prior_num_inference_steps,

+ generator=generator,

+ latents=latents,

+ guidance_scale=prior_guidance_scale,

+ output_type="pt",

+ return_dict=False,

+ )

+ image_embeds = prior_outputs[0]

+ negative_image_embeds = prior_outputs[1]
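The prior returns both `image_embeds` and `negative_image_embeds`; in the Kandinsky decoders the negative embeddings serve as the unconditional input for classifier-free guidance. The combination rule behind `guidance_scale` (equation 2 of the Imagen paper cited in the docstring) can be sketched as follows. The helper name and the dummy arrays are hypothetical, and the pipeline's own code may batch and split the two predictions differently:

```python
import numpy as np


def classifier_free_guidance(noise_pred_uncond, noise_pred_text, guidance_scale):
    # Hypothetical helper: eps = eps_uncond + w * (eps_text - eps_uncond),
    # which equals w * eps_text + (1 - w) * eps_uncond.
    return noise_pred_uncond + guidance_scale * (noise_pred_text - noise_pred_uncond)


# Dummy noise predictions: one conditioned on negative_image_embeds,
# one conditioned on image_embeds.
uncond = np.zeros((1, 4, 8, 8), dtype=np.float32)
cond = np.ones((1, 4, 8, 8), dtype=np.float32)
guided = classifier_free_guidance(uncond, cond, guidance_scale=4.0)
```

With `guidance_scale = 1` the result reduces to the conditional prediction, which is why guidance only takes effect for values above 1.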

+

+ prompt = [prompt] if not isinstance(prompt, (list, tuple)) else prompt

+ image = [image] if isinstance(image, PIL.Image.Image) else image

+ mask_image = [mask_image] if isinstance(mask_image, PIL.Image.Image) else mask_image

+

+ if len(prompt) < image_embeds.shape[0] and image_embeds.shape[0] % len(prompt) == 0:

+ prompt = (image_embeds.shape[0] // len(prompt)) * prompt
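The prompt normalization above wraps a single prompt in a list and, when the prior produced more embeddings than there are prompts (and the count divides evenly), repeats the whole prompt list to match. A minimal sketch with a hypothetical helper, `broadcast_prompts`, where `num_embeds` stands in for `image_embeds.shape[0]`; unlike the original it always returns a list:

```python
def broadcast_prompts(prompt, num_embeds):
    # Wrap a single prompt in a list (tuples are converted to lists here
    # for simplicity).
    prompts = [prompt] if not isinstance(prompt, (list, tuple)) else list(prompt)
    # Tile the whole list when num_embeds is an exact multiple of the number
    # of prompts; otherwise leave it unchanged.
    if len(prompts) < num_embeds and num_embeds % len(prompts) == 0:
        prompts = (num_embeds // len(prompts)) * prompts
    return prompts


print(broadcast_prompts("a cat", 3))             # ['a cat', 'a cat', 'a cat']
print(broadcast_prompts(["a cat", "a dog"], 4))  # ['a cat', 'a dog', 'a cat', 'a dog']
print(broadcast_prompts(["a cat", "a dog"], 3))  # ['a cat', 'a dog']
```

Note that list multiplication tiles the prompts (`a, b, a, b`) rather than interleaving them (`a, a, b, b`).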

+

+ if (