-

-

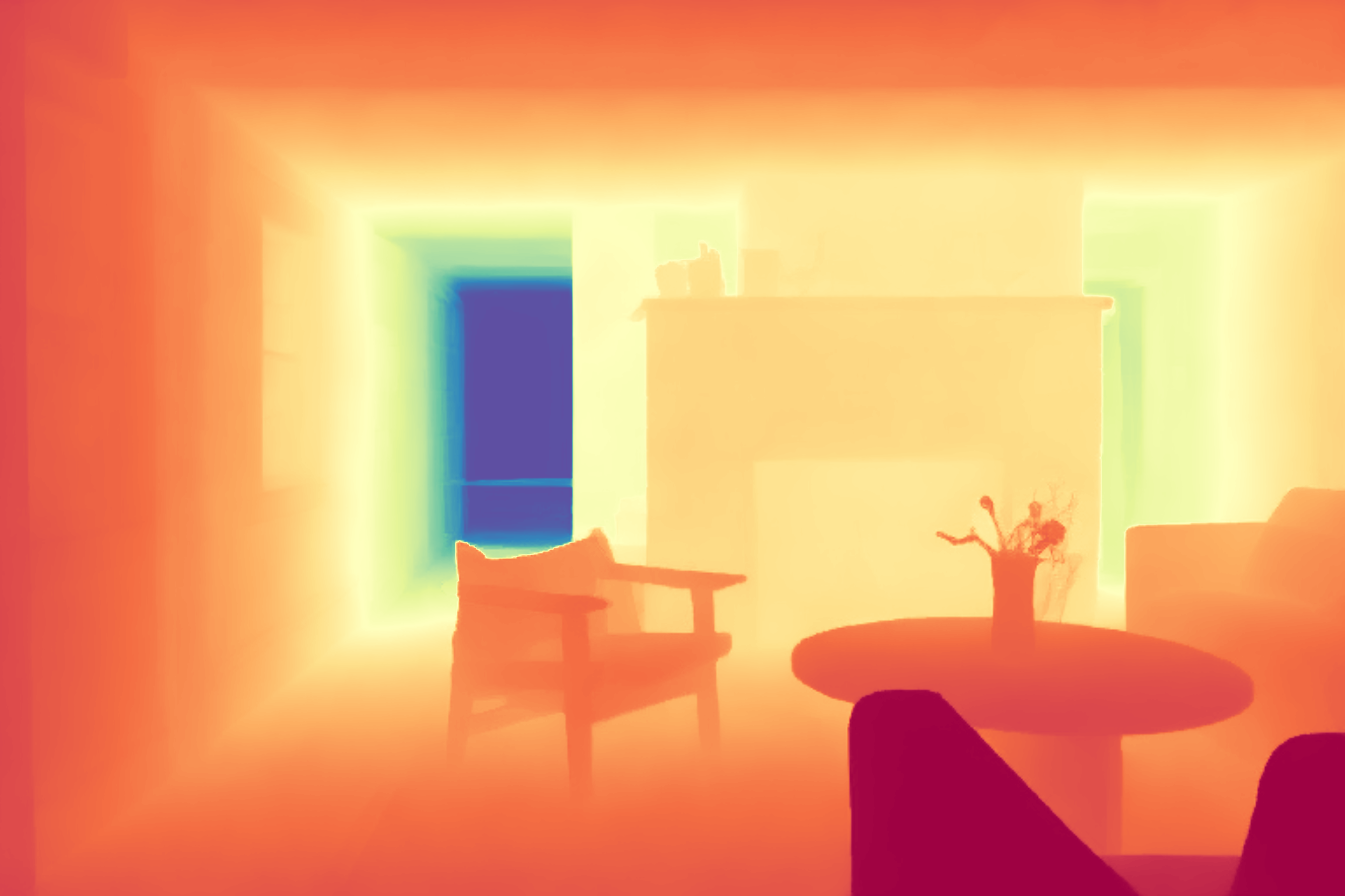

"A photo of a banana-shaped couch in a living room"

+

+

+

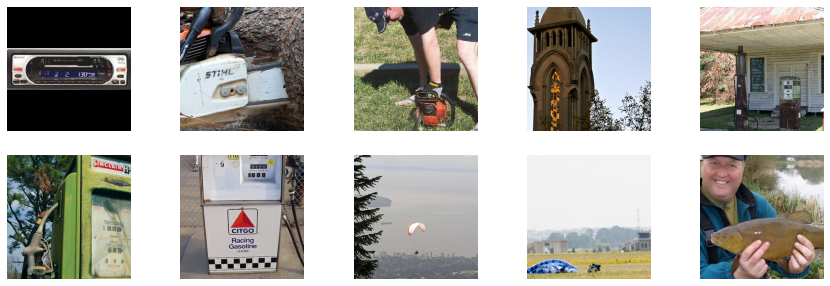

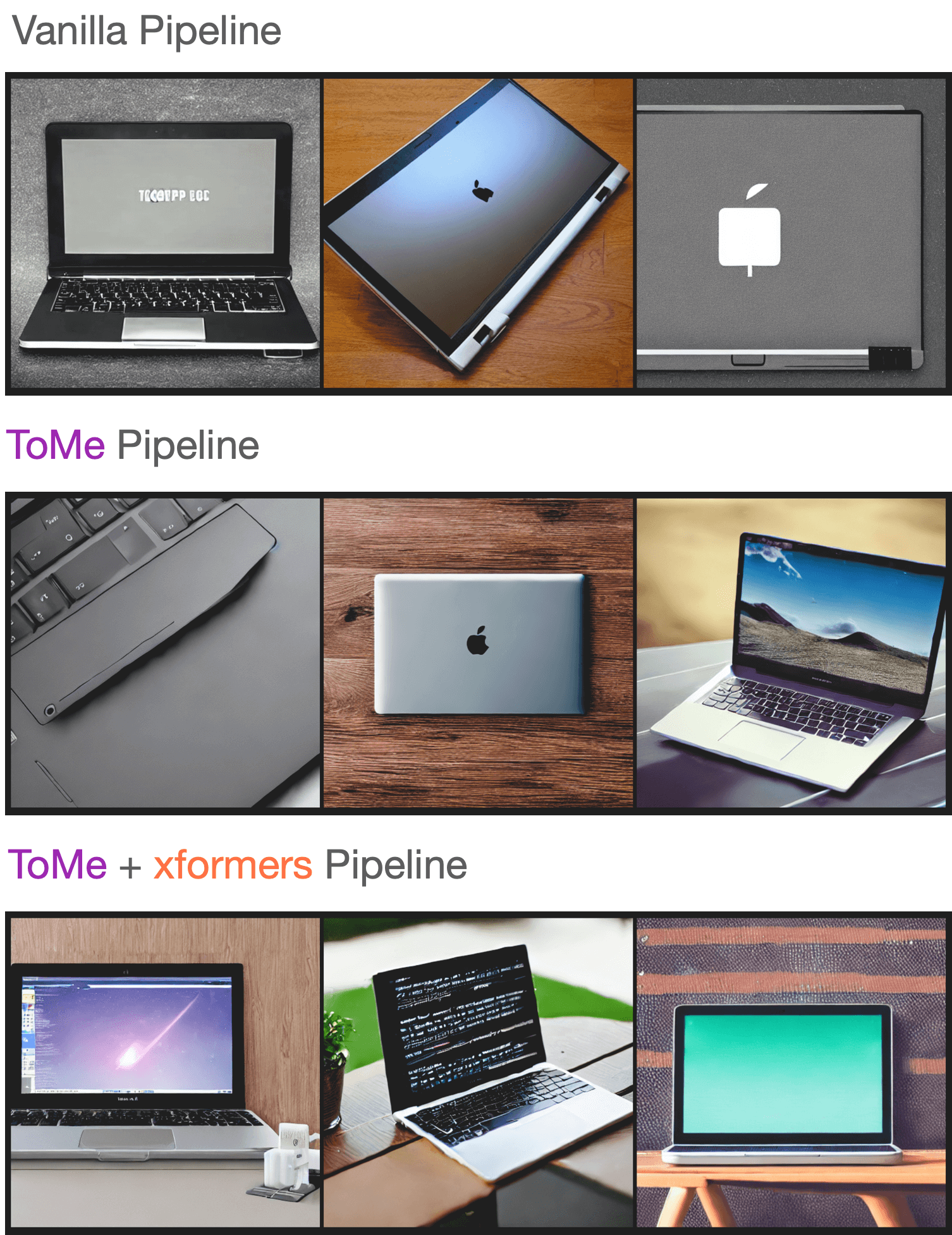

A cute cat lounges on a leaf in a pool during a peaceful summer afternoon, in lofi art style, illustration.

-

-

-

"A vibrant yellow banana-shaped couch sits in a cozy living room, its curve cradling a pile of colorful cushions. on the wooden floor, a patterned rug adds a touch of eclectic charm, and a potted plant sits in the corner, reaching towards the sunlight filtering through the windows"

+

+

+

A cute cat lounges on a floating leaf in a sparkling pool during a peaceful summer afternoon. Clear reflections ripple across the water, with sunlight casting soft, smooth highlights. The illustration is detailed and polished, with elegant lines and harmonious colors, evoking a relaxing, serene, and whimsical lofi mood, anime-inspired and visually comforting.

-## Prompt enhancing with GPT2

-

-Prompt enhancing is a technique for quickly improving prompt quality without spending too much effort constructing one. It uses a model like GPT2 pretrained on Stable Diffusion text prompts to automatically enrich a prompt with additional important keywords to generate high-quality images.

-

-The technique works by curating a list of specific keywords and forcing the model to generate those words to enhance the original prompt. This way, your prompt can be "a cat" and GPT2 can enhance the prompt to "cinematic film still of a cat basking in the sun on a roof in Turkey, highly detailed, high budget hollywood movie, cinemascope, moody, epic, gorgeous, film grain quality sharp focus beautiful detailed intricate stunning amazing epic".

+Be specific and add context. Use photography terms like lens type, focal length, camera angles, and depth of field.

> [!TIP]

-> You should also use a [*offset noise*](https://www.crosslabs.org//blog/diffusion-with-offset-noise) LoRA to improve the contrast in bright and dark images and create better lighting overall. This [LoRA](https://hf.co/stabilityai/stable-diffusion-xl-base-1.0/blob/main/sd_xl_offset_example-lora_1.0.safetensors) is available from [stabilityai/stable-diffusion-xl-base-1.0](https://hf.co/stabilityai/stable-diffusion-xl-base-1.0).

-

-Start by defining certain styles and a list of words (you can check out a more comprehensive list of [words](https://hf.co/LykosAI/GPT-Prompt-Expansion-Fooocus-v2/blob/main/positive.txt) and [styles](https://github.com/lllyasviel/Fooocus/tree/main/sdxl_styles) used by Fooocus) to enhance a prompt with.

-

-```py

-import torch

-from transformers import GenerationConfig, GPT2LMHeadModel, GPT2Tokenizer, LogitsProcessor, LogitsProcessorList

-from diffusers import StableDiffusionXLPipeline

-

-styles = {

- "cinematic": "cinematic film still of {prompt}, highly detailed, high budget hollywood movie, cinemascope, moody, epic, gorgeous, film grain",

- "anime": "anime artwork of {prompt}, anime style, key visual, vibrant, studio anime, highly detailed",

- "photographic": "cinematic photo of {prompt}, 35mm photograph, film, professional, 4k, highly detailed",

- "comic": "comic of {prompt}, graphic illustration, comic art, graphic novel art, vibrant, highly detailed",

- "lineart": "line art drawing {prompt}, professional, sleek, modern, minimalist, graphic, line art, vector graphics",

- "pixelart": " pixel-art {prompt}, low-res, blocky, pixel art style, 8-bit graphics",

-}

-

-words = [

- "aesthetic", "astonishing", "beautiful", "breathtaking", "composition", "contrasted", "epic", "moody", "enhanced",

- "exceptional", "fascinating", "flawless", "glamorous", "glorious", "illumination", "impressive", "improved",

- "inspirational", "magnificent", "majestic", "hyperrealistic", "smooth", "sharp", "focus", "stunning", "detailed",

- "intricate", "dramatic", "high", "quality", "perfect", "light", "ultra", "highly", "radiant", "satisfying",

- "soothing", "sophisticated", "stylish", "sublime", "terrific", "touching", "timeless", "wonderful", "unbelievable",

- "elegant", "awesome", "amazing", "dynamic", "trendy",

-]

-```

-

-You may have noticed in the `words` list, there are certain words that can be paired together to create something more meaningful. For example, the words "high" and "quality" can be combined to create "high quality". Let's pair these words together and remove the words that can't be paired.

-

-```py

-word_pairs = ["highly detailed", "high quality", "enhanced quality", "perfect composition", "dynamic light"]

-

-def find_and_order_pairs(s, pairs):

- words = s.split()

- found_pairs = []

- for pair in pairs:

- pair_words = pair.split()

- if pair_words[0] in words and pair_words[1] in words:

- found_pairs.append(pair)

- words.remove(pair_words[0])

- words.remove(pair_words[1])

-

- for word in words[:]:

- for pair in pairs:

- if word in pair.split():

- words.remove(word)

- break

- ordered_pairs = ", ".join(found_pairs)

- remaining_s = ", ".join(words)

- return ordered_pairs, remaining_s

-```

-

-Next, implement a custom [`~transformers.LogitsProcessor`] class that assigns tokens in the `words` list a value of 0 and assigns tokens not in the `words` list a negative value so they aren't picked during generation. This way, generation is biased towards words in the `words` list. After a word from the list is used, it is also assigned a negative value so it isn't picked again.

-

-```py

-class CustomLogitsProcessor(LogitsProcessor):

- def __init__(self, bias):

- super().__init__()

- self.bias = bias

-

- def __call__(self, input_ids, scores):

- if len(input_ids.shape) == 2:

- last_token_id = input_ids[0, -1]

- self.bias[last_token_id] = -1e10

- return scores + self.bias

-

-word_ids = [tokenizer.encode(word, add_prefix_space=True)[0] for word in words]

-bias = torch.full((tokenizer.vocab_size,), -float("Inf")).to("cuda")

-bias[word_ids] = 0

-processor = CustomLogitsProcessor(bias)

-processor_list = LogitsProcessorList([processor])

-```

-

-Combine the prompt and the `cinematic` style prompt defined in the `styles` dictionary earlier.

-

-```py

-prompt = "a cat basking in the sun on a roof in Turkey"

-style = "cinematic"

-

-prompt = styles[style].format(prompt=prompt)

-prompt

-"cinematic film still of a cat basking in the sun on a roof in Turkey, highly detailed, high budget hollywood movie, cinemascope, moody, epic, gorgeous, film grain"

-```

-

-Load a GPT2 tokenizer and model from the [Gustavosta/MagicPrompt-Stable-Diffusion](https://huggingface.co/Gustavosta/MagicPrompt-Stable-Diffusion) checkpoint (this specific checkpoint is trained to generate prompts) to enhance the prompt.

-

-```py

-tokenizer = GPT2Tokenizer.from_pretrained("Gustavosta/MagicPrompt-Stable-Diffusion")

-model = GPT2LMHeadModel.from_pretrained("Gustavosta/MagicPrompt-Stable-Diffusion", torch_dtype=torch.float16).to(

- "cuda"

-)

-model.eval()

-

-inputs = tokenizer(prompt, return_tensors="pt").to("cuda")

-token_count = inputs["input_ids"].shape[1]

-max_new_tokens = 50 - token_count

-

-generation_config = GenerationConfig(

- penalty_alpha=0.7,

- top_k=50,

- eos_token_id=model.config.eos_token_id,

- pad_token_id=model.config.eos_token_id,

- pad_token=model.config.pad_token_id,

- do_sample=True,

-)

-

-with torch.no_grad():

- generated_ids = model.generate(

- input_ids=inputs["input_ids"],

- attention_mask=inputs["attention_mask"],

- max_new_tokens=max_new_tokens,

- generation_config=generation_config,

- logits_processor=proccesor_list,

- )

-```

-

-Then you can combine the input prompt and the generated prompt. Feel free to take a look at what the generated prompt (`generated_part`) is, the word pairs that were found (`pairs`), and the remaining words (`words`). This is all packed together in the `enhanced_prompt`.

-

-```py

-output_tokens = [tokenizer.decode(generated_id, skip_special_tokens=True) for generated_id in generated_ids]

-input_part, generated_part = output_tokens[0][: len(prompt)], output_tokens[0][len(prompt) :]

-pairs, words = find_and_order_pairs(generated_part, word_pairs)

-formatted_generated_part = pairs + ", " + words

-enhanced_prompt = input_part + ", " + formatted_generated_part

-enhanced_prompt

-["cinematic film still of a cat basking in the sun on a roof in Turkey, highly detailed, high budget hollywood movie, cinemascope, moody, epic, gorgeous, film grain quality sharp focus beautiful detailed intricate stunning amazing epic"]

-```

-

-Finally, load a pipeline and the offset noise LoRA with a *low weight* to generate an image with the enhanced prompt.

-

-```py

-pipeline = StableDiffusionXLPipeline.from_pretrained(

- "RunDiffusion/Juggernaut-XL-v9", torch_dtype=torch.float16, variant="fp16"

-).to("cuda")

-

-pipeline.load_lora_weights(

- "stabilityai/stable-diffusion-xl-base-1.0",

- weight_name="sd_xl_offset_example-lora_1.0.safetensors",

- adapter_name="offset",

-)

-pipeline.set_adapters(["offset"], adapter_weights=[0.2])

-

-image = pipeline(

- enhanced_prompt,

- width=1152,

- height=896,

- guidance_scale=7.5,

- num_inference_steps=25,

-).images[0]

-image

-```

-

-

-

-

-

"a cat basking in the sun on a roof in Turkey"

-

-

-

-

"cinematic film still of a cat basking in the sun on a roof in Turkey, highly detailed, high budget hollywood movie, cinemascope, moody, epic, gorgeous, film grain"

-

-

+> Try a [prompt enhancer](https://huggingface.co/models?sort=downloads&search=prompt+enhancer) to help improve your prompt structure.

## Prompt weighting

-Prompt weighting provides a way to emphasize or de-emphasize certain parts of a prompt, allowing for more control over the generated image. A prompt can include several concepts, which gets turned into contextualized text embeddings. The embeddings are used by the model to condition its cross-attention layers to generate an image (read the Stable Diffusion [blog post](https://huggingface.co/blog/stable_diffusion) to learn more about how it works).

+Prompt weighting makes some words stronger and others weaker. It scales attention scores so you control how much influence each concept has.

-Prompt weighting works by increasing or decreasing the scale of the text embedding vector that corresponds to its concept in the prompt because you may not necessarily want the model to focus on all concepts equally. The easiest way to prepare the prompt embeddings is to use [Stable Diffusion Long Prompt Weighted Embedding](https://github.com/xhinker/sd_embed) (sd_embed). Once you have the prompt-weighted embeddings, you can pass them to any pipeline that has a [prompt_embeds](https://huggingface.co/docs/diffusers/en/api/pipelines/stable_diffusion/text2img#diffusers.StableDiffusionPipeline.__call__.prompt_embeds) (and optionally [negative_prompt_embeds](https://huggingface.co/docs/diffusers/en/api/pipelines/stable_diffusion/text2img#diffusers.StableDiffusionPipeline.__call__.negative_prompt_embeds)) parameter, such as [`StableDiffusionPipeline`], [`StableDiffusionControlNetPipeline`], and [`StableDiffusionXLPipeline`].

+Diffusers handles this through `prompt_embeds` and `pooled_prompt_embeds` arguments which take scaled text embedding vectors. Use the [sd_embed](https://github.com/xhinker/sd_embed) library to generate these embeddings. It also supports longer prompts.

-

-

-If your favorite pipeline doesn't have a `prompt_embeds` parameter, please open an [issue](https://github.com/huggingface/diffusers/issues/new/choose) so we can add it!

-

-

-

-This guide will show you how to weight your prompts with sd_embed.

-

-Before you begin, make sure you have the latest version of sd_embed installed:

-

-```bash

-pip install git+https://github.com/xhinker/sd_embed.git@main

-```

-

-For this example, let's use [`StableDiffusionXLPipeline`].

+> [!NOTE]

+> The sd_embed library only supports Stable Diffusion, Stable Diffusion XL, Stable Diffusion 3, Stable Cascade, and Flux. Prompt weighting doesn't necessarily help for newer models like Flux which already has very good prompt adherence.

```py

-from diffusers import StableDiffusionXLPipeline, UniPCMultistepScheduler

-import torch

-

-pipe = StableDiffusionXLPipeline.from_pretrained("Lykon/dreamshaper-xl-1-0", torch_dtype=torch.float16)

-pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

-pipe.to("cuda")

+!uv pip install git+https://github.com/xhinker/sd_embed.git@main

```

-To upweight or downweight a concept, surround the text with parentheses. More parentheses applies a heavier weight on the text. You can also append a numerical multiplier to the text to indicate how much you want to increase or decrease its weights by.

+Format weighted text with numerical multipliers or parentheses. More parentheses mean stronger weighting.

| format | multiplier |

|---|---|

-| `(hippo)` | increase by 1.1x |

-| `((hippo))` | increase by 1.21x |

-| `(hippo:1.5)` | increase by 1.5x |

-| `(hippo:0.5)` | decrease by 4x |

+| `(cat)` | increase by 1.1x |

+| `((cat))` | increase by 1.21x |

+| `(cat:1.5)` | increase by 1.5x |

+| `(cat:0.5)` | decrease by 4x |

-Create a prompt and use a combination of parentheses and numerical multipliers to upweight various text.

+Create a weighted prompt and pass it to [get_weighted_text_embeddings_sdxl](https://github.com/xhinker/sd_embed/blob/4a47f71150a22942fa606fb741a1c971d95ba56f/src/sd_embed/embedding_funcs.py#L405) to generate embeddings.

+

+> [!TIP]

+> You could also pass negative prompts to `negative_prompt_embeds` and `negative_pooled_prompt_embeds`.

```py

+import torch

+from diffusers import DiffusionPipeline

from sd_embed.embedding_funcs import get_weighted_text_embeddings_sdxl

-prompt = """A whimsical and creative image depicting a hybrid creature that is a mix of a waffle and a hippopotamus.

-This imaginative creature features the distinctive, bulky body of a hippo,

-but with a texture and appearance resembling a golden-brown, crispy waffle.

-The creature might have elements like waffle squares across its skin and a syrup-like sheen.

-It's set in a surreal environment that playfully combines a natural water habitat of a hippo with elements of a breakfast table setting,

-possibly including oversized utensils or plates in the background.

-The image should evoke a sense of playful absurdity and culinary fantasy.

-"""

-

-neg_prompt = """\

-skin spots,acnes,skin blemishes,age spot,(ugly:1.2),(duplicate:1.2),(morbid:1.21),(mutilated:1.2),\

-(tranny:1.2),mutated hands,(poorly drawn hands:1.5),blurry,(bad anatomy:1.2),(bad proportions:1.3),\

-extra limbs,(disfigured:1.2),(missing arms:1.2),(extra legs:1.2),(fused fingers:1.5),\

-(too many fingers:1.5),(unclear eyes:1.2),lowers,bad hands,missing fingers,extra digit,\

-bad hands,missing fingers,(extra arms and legs),(worst quality:2),(low quality:2),\

-(normal quality:2),lowres,((monochrome)),((grayscale))

-"""

-```

-

-Use the `get_weighted_text_embeddings_sdxl` function to generate the prompt embeddings and the negative prompt embeddings. It'll also generated the pooled and negative pooled prompt embeddings since you're using the SDXL model.

-

-> [!TIP]

-> You can safely ignore the error message below about the token index length exceeding the models maximum sequence length. All your tokens will be used in the embedding process.

->

-> ```

-> Token indices sequence length is longer than the specified maximum sequence length for this model

-> ```

-

-```py

-(

- prompt_embeds,

- prompt_neg_embeds,

- pooled_prompt_embeds,

- negative_pooled_prompt_embeds

-) = get_weighted_text_embeddings_sdxl(

- pipe,

- prompt=prompt,

- neg_prompt=neg_prompt

+pipeline = DiffusionPipeline.from_pretrained(

+ "Lykon/dreamshaper-xl-1-0", torch_dtype=torch.bfloat16, device_map="cuda"

)

-image = pipe(

- prompt_embeds=prompt_embeds,

- negative_prompt_embeds=prompt_neg_embeds,

- pooled_prompt_embeds=pooled_prompt_embeds,

- negative_pooled_prompt_embeds=negative_pooled_prompt_embeds,

- num_inference_steps=30,

- height=1024,

- width=1024 + 512,

- guidance_scale=4.0,

- generator=torch.Generator("cuda").manual_seed(2)

-).images[0]

-image

+prompt = """

+A (cute cat:1.4) lounges on a (floating leaf:1.2) in a (sparkling pool:1.1) during a peaceful summer afternoon.

+Gentle ripples reflect pastel skies, while (sunlight:1.1) casts soft highlights. The illustration is smooth and polished

+with elegant, sketchy lines and subtle gradients, evoking a ((whimsical, nostalgic, dreamy lofi atmosphere:2.0)),

+(anime-inspired:1.6), calming, comforting, and visually serene.

+"""

+

+prompt_embeds, _, pooled_prompt_embeds, *_ = get_weighted_text_embeddings_sdxl(pipeline, prompt=prompt)

+```

+

+Pass the embeddings to `prompt_embeds` and `pooled_prompt_embeds` to generate your image.

+

+```py

+image = pipeline(prompt_embeds=prompt_embeds, pooled_prompt_embeds=pooled_prompt_embeds).images[0]

```

-

+

-> [!TIP]

-> Refer to the [sd_embed](https://github.com/xhinker/sd_embed) repository for additional details about long prompt weighting for FLUX.1, Stable Cascade, and Stable Diffusion 1.5.

-

-### Textual inversion

-

-[Textual inversion](../training/text_inversion) is a technique for learning a specific concept from some images which you can use to generate new images conditioned on that concept.

-

-Create a pipeline and use the [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] function to load the textual inversion embeddings (feel free to browse the [Stable Diffusion Conceptualizer](https://huggingface.co/spaces/sd-concepts-library/stable-diffusion-conceptualizer) for 100+ trained concepts):

-

-```py

-import torch

-from diffusers import StableDiffusionPipeline

-

-pipe = StableDiffusionPipeline.from_pretrained(

- "stable-diffusion-v1-5/stable-diffusion-v1-5",

- torch_dtype=torch.float16,

-).to("cuda")

-pipe.load_textual_inversion("sd-concepts-library/midjourney-style")

-```

-

-Add the `

` text to the prompt to trigger the textual inversion.

-

-```py

-from sd_embed.embedding_funcs import get_weighted_text_embeddings_sd15

-

-prompt = """ A whimsical and creative image depicting a hybrid creature that is a mix of a waffle and a hippopotamus.

-This imaginative creature features the distinctive, bulky body of a hippo,

-but with a texture and appearance resembling a golden-brown, crispy waffle.

-The creature might have elements like waffle squares across its skin and a syrup-like sheen.

-It's set in a surreal environment that playfully combines a natural water habitat of a hippo with elements of a breakfast table setting,

-possibly including oversized utensils or plates in the background.

-The image should evoke a sense of playful absurdity and culinary fantasy.

-"""

-

-neg_prompt = """\

-skin spots,acnes,skin blemishes,age spot,(ugly:1.2),(duplicate:1.2),(morbid:1.21),(mutilated:1.2),\

-(tranny:1.2),mutated hands,(poorly drawn hands:1.5),blurry,(bad anatomy:1.2),(bad proportions:1.3),\

-extra limbs,(disfigured:1.2),(missing arms:1.2),(extra legs:1.2),(fused fingers:1.5),\

-(too many fingers:1.5),(unclear eyes:1.2),lowers,bad hands,missing fingers,extra digit,\

-bad hands,missing fingers,(extra arms and legs),(worst quality:2),(low quality:2),\

-(normal quality:2),lowres,((monochrome)),((grayscale))

-"""

-```

-

-Use the `get_weighted_text_embeddings_sd15` function to generate the prompt embeddings and the negative prompt embeddings.

-

-```py

-(

- prompt_embeds,

- prompt_neg_embeds,

-) = get_weighted_text_embeddings_sd15(

- pipe,

- prompt=prompt,

- neg_prompt=neg_prompt

-)

-

-image = pipe(

- prompt_embeds=prompt_embeds,

- negative_prompt_embeds=prompt_neg_embeds,

- height=768,

- width=896,

- guidance_scale=4.0,

- generator=torch.Generator("cuda").manual_seed(2)

-).images[0]

-image

-```

-

-

-

-

-

-

+> [!TIP]

+> 💡 `strength`는 입력 이미지에 추가되는 노이즈의 양을 제어하는 0.0에서 1.0 사이의 값입니다. 1.0에 가까운 값은 다양한 변형을 허용하지만 입력 이미지와 의미적으로 일치하지 않는 이미지를 생성합니다.

프롬프트를 정의하고(지브리 스타일(Ghibli-style)에 맞게 조정된 이 체크포인트의 경우 프롬프트 앞에 `ghibli style` 토큰을 붙여야 합니다) 파이프라인을 실행합니다:

diff --git a/docs/source/ko/using-diffusers/inpaint.md b/docs/source/ko/using-diffusers/inpaint.md

index adf1251176..cefb892186 100644

--- a/docs/source/ko/using-diffusers/inpaint.md

+++ b/docs/source/ko/using-diffusers/inpaint.md

@@ -59,11 +59,8 @@ image = pipe(prompt=prompt, image=init_image, mask_image=mask_image).images[0]

:-------------------------:|:-------------------------:|:-------------------------:|-------------------------:|

+> [!WARNING]

+> 이전의 실험적인 인페인팅 구현에서는 품질이 낮은 다른 프로세스를 사용했습니다. 이전 버전과의 호환성을 보장하기 위해 새 모델이 포함되지 않은 사전학습된 파이프라인을 불러오면 이전 인페인팅 방법이 계속 적용됩니다.

아래 Space에서 이미지 인페인팅을 직접 해보세요!

diff --git a/docs/source/ko/using-diffusers/kandinsky.md b/docs/source/ko/using-diffusers/kandinsky.md

index cc554c67f9..8eff8f5629 100644

--- a/docs/source/ko/using-diffusers/kandinsky.md

+++ b/docs/source/ko/using-diffusers/kandinsky.md

@@ -31,15 +31,12 @@ Kandinsky 모델은 일련의 다국어 text-to-image 생성 모델입니다. Ka

#!pip install -q diffusers transformers accelerate

```

-

+> [!WARNING]

+> Kandinsky 2.1과 2.2의 사용법은 매우 유사합니다! 유일한 차이점은 Kandinsky 2.2는 latents를 디코딩할 때 `프롬프트`를 입력으로 받지 않는다는 것입니다. 대신, Kandinsky 2.2는 디코딩 중에는 `image_embeds`만 받아들입니다.

+>

+>

+>

+> Kandinsky 3는 더 간결한 아키텍처를 가지고 있으며 prior 모델이 필요하지 않습니다. 즉, [Stable Diffusion XL](sdxl)과 같은 다른 diffusion 모델과 사용법이 동일합니다.

## Text-to-image

@@ -321,20 +318,17 @@ make_image_grid([original_image.resize((512, 512)), image.resize((512, 512))], r

## Inpainting

-

+> [!WARNING]

+> ⚠️ Kandinsky 모델은 이제 검은색 픽셀 대신 ⬜️ **흰색 픽셀**을 사용하여 마스크 영역을 표현합니다. 프로덕션에서 [`KandinskyInpaintPipeline`]을 사용하는 경우 흰색 픽셀을 사용하도록 마스크를 변경해야 합니다:

+>

+> ```py

+> # PIL 입력에 대해

+> import PIL.ImageOps

+> mask = PIL.ImageOps.invert(mask)

+>

+> # PyTorch와 NumPy 입력에 대해

+> mask = 1 - mask

+> ```

인페인팅에서는 원본 이미지, 원본 이미지에서 대체할 영역의 마스크, 인페인팅할 내용에 대한 텍스트 프롬프트가 필요합니다. Prior 파이프라인을 불러옵니다:

@@ -565,11 +559,8 @@ image

## ControlNet

-

+> [!WARNING]

+> ⚠️ ControlNet은 Kandinsky 2.2에서만 지원됩니다!

ControlNet을 사용하면 depth map이나 edge detection와 같은 추가 입력을 통해 사전학습된 large diffusion 모델을 conditioning할 수 있습니다. 예를 들어, 모델이 depth map의 구조를 이해하고 보존할 수 있도록 깊이 맵으로 Kandinsky 2.2를 conditioning할 수 있습니다.

diff --git a/docs/source/ko/using-diffusers/loading.md b/docs/source/ko/using-diffusers/loading.md

index 3d6b7634b4..2160acacc2 100644

--- a/docs/source/ko/using-diffusers/loading.md

+++ b/docs/source/ko/using-diffusers/loading.md

@@ -30,11 +30,8 @@ diffusion 모델의 훈련과 추론에 필요한 모든 것은 [`DiffusionPipel

## Diffusion 파이프라인

-

+> [!TIP]

+> 💡 [`DiffusionPipeline`] 클래스가 동작하는 방식에 보다 자세한 내용이 궁금하다면, [DiffusionPipeline explained](#diffusionpipeline에-대해-알아보기) 섹션을 확인해보세요.

[`DiffusionPipeline`] 클래스는 diffusion 모델을 [허브](https://huggingface.co/models?library=diffusers)로부터 불러오는 가장 심플하면서 보편적인 방식입니다. [`DiffusionPipeline.from_pretrained`] 메서드는 적합한 파이프라인 클래스를 자동으로 탐지하고, 필요한 구성요소(configuration)와 가중치(weight) 파일들을 다운로드하고 캐싱한 다음, 해당 파이프라인 인스턴스를 반환합니다.

@@ -175,11 +172,8 @@ Variant란 일반적으로 다음과 같은 체크포인트들을 의미합니

- `torch.float16`과 같이 정밀도는 더 낮지만, 용량 역시 더 작은 부동소수점 타입의 가중치를 사용하는 체크포인트. *(다만 이와 같은 variant의 경우, 추가적인 훈련과 CPU환경에서의 구동이 불가능합니다.)*

- Non-EMA 가중치를 사용하는 체크포인트. *(Non-EMA 가중치의 경우, 파인 튜닝 단계에서 사용하는 것이 권장되는데, 추론 단계에선 사용하지 않는 것이 권장됩니다.)*

-

+> [!TIP]

+> 💡 모델 구조는 동일하지만 서로 다른 학습 환경에서 서로 다른 데이터셋으로 학습된 체크포인트들이 있을 경우, 해당 체크포인트들은 variant 단계가 아닌 리포지토리 단계에서 분리되어 관리되어야 합니다. (즉, 해당 체크포인트들은 서로 다른 리포지토리에서 따로 관리되어야 합니다. 예시: [`stable-diffusion-v1-4`], [`stable-diffusion-v1-5`]).

| **checkpoint type** | **weight name** | **argument for loading weights** |

| ------------------- | ----------------------------------- | -------------------------------- |

diff --git a/docs/source/ko/using-diffusers/loading_adapters.md b/docs/source/ko/using-diffusers/loading_adapters.md

index f0d085bc6a..e7ae116575 100644

--- a/docs/source/ko/using-diffusers/loading_adapters.md

+++ b/docs/source/ko/using-diffusers/loading_adapters.md

@@ -18,11 +18,8 @@ specific language governing permissions and limitations under the License.

이 가이드에서는 DreamBooth, textual inversion 및 LoRA 가중치를 불러오는 방법을 설명합니다.

-

+> [!TIP]

+> 사용할 체크포인트와 임베딩은 [Stable Diffusion Conceptualizer](https://huggingface.co/spaces/sd-concepts-library/stable-diffusion-conceptualizer), [LoRA the Explorer](https://huggingface.co/spaces/multimodalart/LoraTheExplorer), [Diffusers Models Gallery](https://huggingface.co/spaces/huggingface-projects/diffusers-gallery)에서 찾아보시기 바랍니다.

## DreamBooth

@@ -101,11 +98,8 @@ image

[Low-Rank Adaptation (LoRA)](https://huggingface.co/papers/2106.09685)은 속도가 빠르고 파일 크기가 (수백 MB로) 작기 때문에 널리 사용되는 학습 기법입니다. 이 가이드의 다른 방법과 마찬가지로, LoRA는 몇 장의 이미지만으로 새로운 스타일을 학습하도록 모델을 학습시킬 수 있습니다. 이는 diffusion 모델에 새로운 가중치를 삽입한 다음 전체 모델 대신 새로운 가중치만 학습시키는 방식으로 작동합니다. 따라서 LoRA를 더 빠르게 학습시키고 더 쉽게 저장할 수 있습니다.

-

+> [!TIP]

+> LoRA는 다른 학습 방법과 함께 사용할 수 있는 매우 일반적인 학습 기법입니다. 예를 들어, DreamBooth와 LoRA로 모델을 학습하는 것이 일반적입니다. 또한 새롭고 고유한 이미지를 생성하기 위해 여러 개의 LoRA를 불러오고 병합하는 것이 점점 더 일반화되고 있습니다. 병합은 이 불러오기 가이드의 범위를 벗어나므로 자세한 내용은 심층적인 [LoRA 병합](merge_loras) 가이드에서 확인할 수 있습니다.

LoRA는 다른 모델과 함께 사용해야 합니다:

@@ -184,11 +178,8 @@ pipe.set_adapters("my_adapter", scales)

이는 여러 어댑터에서도 작동합니다. 방법은 [이 가이드](https://huggingface.co/docs/diffusers/tutorials/using_peft_for_inference#customize-adapters-strength)를 참조하세요.

-

+> [!WARNING]

+> 현재 [`~loaders.LoraLoaderMixin.set_adapters`]는 어텐션 가중치의 스케일링만 지원합니다. LoRA에 다른 부분(예: resnets or down-/upsamplers)이 있는 경우 1.0의 스케일을 유지합니다.

### Kohya와 TheLastBen

@@ -222,14 +213,11 @@ image = pipeline(prompt).images[0]

image

```

-

+> [!WARNING]

+> Kohya LoRA를 🤗 Diffusers와 함께 사용할 때 몇 가지 제한 사항이 있습니다:

+>

+> - [여기](https://github.com/huggingface/diffusers/pull/4287/#issuecomment-1655110736)에 설명된 여러 가지 이유로 인해 이미지가 ComfyUI와 같은 UI에서 생성된 이미지와 다르게 보일 수 있습니다.

+> - [LyCORIS 체크포인트](https://github.com/KohakuBlueleaf/LyCORIS)가 완전히 지원되지 않습니다. [`~loaders.LoraLoaderMixin.load_lora_weights`] 메서드는 LoRA 및 LoCon 모듈로 LyCORIS 체크포인트를 불러올 수 있지만, Hada 및 LoKR은 지원되지 않습니다.

+

+ +

+ -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- +

+ +

+ +

+ -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

- -

-  -

-  -

-  -

-  -

-  -

-  -

- -

- -

- logo next to the file on the Hub.

-Use the [`~loaders.FromSingleFileMixin.from_single_file`] method to load a model with all the weights stored in a single safetensors file.

-

-```py

-from diffusers import StableDiffusionPipeline

-

-pipeline = StableDiffusionPipeline.from_single_file(

- "https://huggingface.co/WarriorMama777/OrangeMixs/blob/main/Models/AbyssOrangeMix/AbyssOrangeMix.safetensors"

-)

-```

-

-

logo next to the file on the Hub.

-Use the [`~loaders.FromSingleFileMixin.from_single_file`] method to load a model with all the weights stored in a single safetensors file.

-

-```py

-from diffusers import StableDiffusionPipeline

-

-pipeline = StableDiffusionPipeline.from_single_file(

- "https://huggingface.co/WarriorMama777/OrangeMixs/blob/main/Models/AbyssOrangeMix/AbyssOrangeMix.safetensors"

-)

-```

-

- -

- -

-  -

-  -

- -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  +

+  +

+  +

+  +

+  +

+  -

-  +

+  -

-  -

-  -

-  +

+  +

+  +

+  -

-  +

+  -

-  +

+  -

-  -

-  +

+

-

- -

-

|

|  | ***Face of a yellow cat, high resolution, sitting on a park bench*** |

| ***Face of a yellow cat, high resolution, sitting on a park bench*** |  |

-

|

- +

+无论您选择以何种方式贡献,我们都致力于成为一个开放、友好、善良的社区。请阅读我们的[行为准则](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md),并在互动时注意遵守。我们也建议您了解指导本项目的[伦理准则](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines),并请您遵循同样的透明度和责任原则。

+

+我们高度重视社区的反馈,所以如果您认为自己有能帮助改进库的有价值反馈,请不要犹豫说出来——每条消息、评论、issue和拉取请求(PR)都会被阅读和考虑。

+

+## 概述

+

+您可以通过多种方式做出贡献,从在issue和讨论区回答问题,到向核心库添加新的diffusion模型。

+

+下面我们按难度升序列出不同的贡献方式,所有方式对社区都很有价值:

+

+* 1. 在[Diffusers讨论论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers)或[Discord](https://discord.gg/G7tWnz98XR)上提问和回答问题

+* 2. 在[GitHub Issues标签页](https://github.com/huggingface/diffusers/issues/new/choose)提交新issue,或在[GitHub Discussions标签页](https://github.com/huggingface/diffusers/discussions/new/choose)发起新讨论

+* 3. 在[GitHub Issues标签页](https://github.com/huggingface/diffusers/issues)解答issue,或在[GitHub Discussions标签页](https://github.com/huggingface/diffusers/discussions)参与讨论

+* 4. 解决标记为"Good first issue"的简单问题,详见[此处](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22)

+* 5. 参与[文档](https://github.com/huggingface/diffusers/tree/main/docs/source)建设

+* 6. 贡献[社区Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples)

+* 7. 完善[示例代码](https://github.com/huggingface/diffusers/tree/main/examples)

+* 8. 解决标记为"Good second issue"的中等难度问题,详见[此处](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22)

+* 9. 添加新pipeline/模型/调度器,参见["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22)和["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22)类issue。此类贡献请先阅读[设计哲学](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md)

+

+重申:**所有贡献对社区都具有重要价值。**下文将详细说明各类贡献方式。

+

+对于4-9类贡献,您需要提交PR(拉取请求),具体操作详见[如何提交PR](#how-to-open-a-pr)章节。

+

+### 1. 在Diffusers讨论区或Discord提问与解答

+

+任何与Diffusers库相关的问题或讨论都可以发布在[官方论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/)或[Discord频道](https://discord.gg/G7tWnz98XR),包括但不限于:

+- 分享训练/推理实验报告

+- 展示个人项目

+- 咨询非官方训练示例

+- 项目提案

+- 通用反馈

+- 论文解读

+- 基于Diffusers库的个人项目求助

+- 一般性问题

+- 关于diffusion模型的伦理讨论

+- ...

+

+论坛/Discord上的每个问题都能促使社区公开分享知识,很可能帮助未来遇到相同问题的初学者。请务必提出您的疑问。

+同样地,通过回答问题您也在为社区创造公共知识文档,这种贡献极具价值。

+

+**请注意**:提问/回答时投入的精力越多,产生的公共知识质量就越高。精心构建的问题与专业解答能形成高质量知识库,而表述不清的问题则可能降低讨论价值。

+

+低质量的问题或回答会降低公共知识库的整体质量。

+简而言之,高质量的问题或回答应具备*精确性*、*简洁性*、*相关性*、*易于理解*、*可访问性*和*格式规范/表述清晰*等特质。更多详情请参阅[如何提交优质议题](#how-to-write-a-good-issue)章节。

+

+**关于渠道的说明**:

+[*论坛*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63)的内容能被谷歌等搜索引擎更好地收录,且帖子按热度而非时间排序,便于查找历史问答。此外,论坛内容更容易被直接链接引用。

+而*Discord*采用即时聊天模式,适合快速交流。虽然在Discord上可能更快获得解答,但信息会随时间淹没,且难以回溯历史讨论。因此我们强烈建议在论坛发布优质问答,以构建可持续的社区知识库。若Discord讨论产生有价值结论,建议将成果整理发布至论坛以惠及更多读者。

+

+### 2. 在GitHub议题页提交新议题

+

+🧨 Diffusers库的稳健性离不开用户的问题反馈,感谢您的报错。

+

+请注意:GitHub议题仅限处理与Diffusers库代码直接相关的技术问题、错误报告、功能请求或库设计反馈。

+简言之,**与Diffusers库代码(含文档)无关**的内容应发布至[论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63)或[Discord](https://discord.gg/G7tWnz98XR)。

+

+**提交新议题时请遵循以下准则**:

+- 确认是否已有类似议题(使用GitHub议题页的搜索栏)

+- 请勿在现有议题下追加新问题。若存在高度关联议题,应新建议题并添加相关链接

+- 确保使用英文提交。非英语用户可通过[DeepL](https://www.deepl.com/translator)等免费工具翻译

+- 检查升级至最新Diffusers版本是否能解决问题。提交前请确认`python -c "import diffusers; print(diffusers.__version__)"`显示的版本号不低于最新版本

+- 记请记住,你在提交新issue时投入的精力越多,得到的回答质量就越高,Diffusers项目的整体issue质量也会越好。

+

+新issue通常包含以下内容:

+

+#### 2.1 可复现的最小化错误报告

+

+错误报告应始终包含可复现的代码片段,并尽可能简洁明了。具体而言:

+- 尽量缩小问题范围,**不要直接粘贴整个代码文件**

+- 规范代码格式

+- 除Diffusers依赖库外,不要包含其他外部库

+- **务必**提供环境信息:可在终端运行`diffusers-cli env`命令,然后将显示的信息复制到issue中

+- 详细说明问题。如果读者不清楚问题所在及其影响,就无法解决问题

+- **确保**读者能以最小成本复现问题。如果代码片段因缺少库或未定义变量而无法运行,读者将无法提供帮助。请确保提供的可复现代码尽可能精简,可直接复制到Python shell运行

+- 如需特定模型/数据集复现问题,请确保读者能获取这些资源。可将模型/数据集上传至[Hub](https://huggingface.co)便于下载。尽量保持模型和数据集体积最小化,降低复现难度

+

+更多信息请参阅[如何撰写优质issue](#how-to-write-a-good-issue)章节。

+

+提交错误报告请点击[此处](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&projects=&template=bug-report.yml)。

+

+#### 2.2 功能请求

+

+优质的功能请求应包含以下要素:

+

+1. 首先说明动机:

+* 是否与库的使用痛点相关?若是,请解释原因,最好提供演示问题的代码片段

+* 是否因项目需求产生?我们很乐意了解详情!

+* 是否是你已实现且认为对社区有价值的功能?请说明它为你解决了什么问题

+2. 用**完整段落**描述功能特性

+3. 提供**代码片段**演示预期用法

+4. 如涉及论文,请附上链接

+5. 可补充任何有助于理解的辅助材料(示意图、截图等)

+

+提交功能请求请点击[此处](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=)。

+

+#### 2.3 设计反馈

+

+关于库设计的反馈(无论正面还是负面)能极大帮助核心维护者打造更友好的库。要了解当前设计理念,请参阅[此文档](https://huggingface.co/docs/diffusers/conceptual/philosophy)如果您认为某个设计选择与当前理念不符,请说明原因及改进建议。如果某个设计选择因过度遵循理念而限制了使用场景,也请解释原因并提出调整方案。

+若某个设计对您特别实用,请同样留下备注——这对未来的设计决策极具参考价值。

+

+您可通过[此链接](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=)提交设计反馈。

+

+#### 2.4 技术问题

+

+技术问题主要涉及库代码的实现逻辑或特定功能模块的作用。提问时请务必:

+- 附上相关代码链接

+- 详细说明难以理解的具体原因

+

+技术问题提交入口:[点击此处](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml)

+

+#### 2.5 新模型/调度器/pipeline提案

+

+若diffusion模型社区发布了您希望集成到Diffusers库的新模型、pipeline或调度器,请提供以下信息:

+* 简要说明并附论文或发布链接

+* 开源实现链接(如有)

+* 模型权重下载链接(如已公开)

+

+若您愿意参与开发,请告知我们以便指导。另请尝试通过GitHub账号标记原始组件作者。

+

+提案提交地址:[新建请求](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml)

+

+### 3. 解答GitHub问题

+

+回答GitHub问题可能需要Diffusers的技术知识,但我们鼓励所有人尝试参与——即使您对答案不完全正确。高质量回答的建议:

+- 保持简洁精炼

+- 严格聚焦问题本身

+- 提供代码/论文等佐证材料

+- 优先用代码说话:若代码片段能解决问题,请提供完整可复现代码

+

+许多问题可能存在离题、重复或无关情况。您可以通过以下方式协助维护者:

+- 引导提问者精确描述问题

+- 标记重复issue并附原链接

+- 推荐用户至[论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63)或[Discord](https://discord.gg/G7tWnz98XR)

+

+在确认提交的Bug报告正确且需要修改源代码后,请继续阅读以下章节内容。

+

+以下所有贡献都需要提交PR(拉取请求)。具体操作步骤详见[如何提交PR](#how-to-open-a-pr)章节。

+

+### 4. 修复"Good first issue"类问题

+

+标有[Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22)标签的问题通常已说明解决方案建议,便于修复。若该问题尚未关闭且您想尝试解决,只需留言"我想尝试解决这个问题"。通常有三种情况:

+- a.) 问题描述已提出解决方案。若您认可该方案,可直接提交PR或草稿PR进行修复

+- b.) 问题描述未提出解决方案。您可询问修复建议,Diffusers团队会尽快回复。若有成熟解决方案,也可直接提交PR

+- c.) 已有PR但问题未关闭。若原PR停滞,可新开PR并关联原PR(开源社区常见现象)。若PR仍活跃,您可通过建议、审查或协作等方式帮助原作者

+

+### 5. 文档贡献

+

+优秀库**必然**拥有优秀文档!官方文档是新用户的首要接触点,因此文档贡献具有**极高价值**。贡献形式包括:

+- 修正拼写/语法错误

+- 修复文档字符串格式错误(如显示异常或链接失效)

+- 修正文档字符串中张量的形状/维度描述

+- 优化晦涩或错误的说明

+- 更新过时代码示例

+- 文档翻译

+

+[官方文档页面](https://huggingface.co/docs/diffusers/index)所有内容均属可修改范围,对应[文档源文件](https://github.com/huggingface/diffusers/tree/main/docs/source)可进行编辑。修改前请查阅[验证说明](https://github.com/huggingface/diffusers/tree/main/docs)。

+

+### 6. 贡献社区流程

+

+> [!TIP]

+> 阅读[社区流程](../using-diffusers/custom_pipeline_overview#community-pipelines)指南了解GitHub与Hugging Face Hub社区流程的区别。若想了解我们设立社区流程的原因,请查看GitHub Issue [#841](https://github.com/huggingface/diffusers/issues/841)(简而言之,我们无法维护diffusion模型所有可能的推理使用方式,但也不希望限制社区构建这些流程)。

+

+贡献社区流程是向社区分享创意与成果的绝佳方式。您可以在[`DiffusionPipeline`]基础上构建流程,任何人都能通过设置`custom_pipeline`参数加载使用。本节将指导您创建一个简单的"单步"流程——UNet仅执行单次前向传播并调用调度器一次。

+

+1. 为社区流程创建one_step_unet.py文件。只要用户已安装相关包,该文件可包含任意所需包。确保仅有一个继承自[`DiffusionPipeline`]的流程类,用于从Hub加载模型权重和调度器配置。在`__init__`函数中添加UNet和调度器。

+

+ 同时添加`register_modules`函数,确保您的流程及其组件可通过[`~DiffusionPipeline.save_pretrained`]保存。

+

+```py

+from diffusers import DiffusionPipeline

+import torch

+

+class UnetSchedulerOneForwardPipeline(DiffusionPipeline):

+ def __init__(self, unet, scheduler):

+ super().__init__()

+

+ self.register_modules(unet=unet, scheduler=scheduler)

+```

+

+2. 在前向传播中(建议定义为`__call__`),可添加任意功能。对于"单步"流程,创建随机图像并通过设置`timestep=1`调用UNet和调度器一次。

+

+```py

+ from diffusers import DiffusionPipeline

+ import torch

+

+ class UnetSchedulerOneForwardPipeline(DiffusionPipeline):

+ def __init__(self, unet, scheduler):

+ super().__init__()

+

+ self.register_modules(unet=unet, scheduler=scheduler)

+

+ def __call__(self):

+ image = torch.randn(

+ (1, self.unet.config.in_channels, self.unet.config.sample_size, self.unet.config.sample_size),

+ )

+ timestep = 1

+

+ model_output = self.unet(image, timestep).sample

+ scheduler_output = self.scheduler.step(model_output, timestep, image).prev_sample

+

+ return scheduler_output

+```

+

+现在您可以通过传入UNet和调度器来运行流程,若流程结构相同也可加载预训练权重。

+

+```python

+from diffusers import DDPMScheduler, UNet2DModel

+

+scheduler = DDPMScheduler()

+unet = UNet2DModel()

+

+pipeline = UnetSchedulerOneForwardPipeline(unet=unet, scheduler=scheduler)

+output = pipeline()

+# 加载预训练权重

+pipeline = UnetSchedulerOneForwardPipeline.from_pretrained("google/ddpm-cifar10-32", use_safetensors=True)

+output = pipeline()

+```

+

+您可以选择将pipeline作为GitHub社区pipeline或Hub社区pipeline进行分享。

+

+

+

+无论您选择以何种方式贡献,我们都致力于成为一个开放、友好、善良的社区。请阅读我们的[行为准则](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md),并在互动时注意遵守。我们也建议您了解指导本项目的[伦理准则](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines),并请您遵循同样的透明度和责任原则。

+

+我们高度重视社区的反馈,所以如果您认为自己有能帮助改进库的有价值反馈,请不要犹豫说出来——每条消息、评论、issue和拉取请求(PR)都会被阅读和考虑。

+

+## 概述

+

+您可以通过多种方式做出贡献,从在issue和讨论区回答问题,到向核心库添加新的diffusion模型。

+

+下面我们按难度升序列出不同的贡献方式,所有方式对社区都很有价值:

+

+* 1. 在[Diffusers讨论论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers)或[Discord](https://discord.gg/G7tWnz98XR)上提问和回答问题

+* 2. 在[GitHub Issues标签页](https://github.com/huggingface/diffusers/issues/new/choose)提交新issue,或在[GitHub Discussions标签页](https://github.com/huggingface/diffusers/discussions/new/choose)发起新讨论

+* 3. 在[GitHub Issues标签页](https://github.com/huggingface/diffusers/issues)解答issue,或在[GitHub Discussions标签页](https://github.com/huggingface/diffusers/discussions)参与讨论

+* 4. 解决标记为"Good first issue"的简单问题,详见[此处](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22)

+* 5. 参与[文档](https://github.com/huggingface/diffusers/tree/main/docs/source)建设

+* 6. 贡献[社区Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples)

+* 7. 完善[示例代码](https://github.com/huggingface/diffusers/tree/main/examples)

+* 8. 解决标记为"Good second issue"的中等难度问题,详见[此处](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22)

+* 9. 添加新pipeline/模型/调度器,参见["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22)和["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22)类issue。此类贡献请先阅读[设计哲学](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md)

+

+重申:**所有贡献对社区都具有重要价值。**下文将详细说明各类贡献方式。

+

+对于4-9类贡献,您需要提交PR(拉取请求),具体操作详见[如何提交PR](#how-to-open-a-pr)章节。

+

+### 1. 在Diffusers讨论区或Discord提问与解答

+

+任何与Diffusers库相关的问题或讨论都可以发布在[官方论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/)或[Discord频道](https://discord.gg/G7tWnz98XR),包括但不限于:

+- 分享训练/推理实验报告

+- 展示个人项目

+- 咨询非官方训练示例

+- 项目提案

+- 通用反馈

+- 论文解读

+- 基于Diffusers库的个人项目求助

+- 一般性问题

+- 关于diffusion模型的伦理讨论

+- ...

+

+论坛/Discord上的每个问题都能促使社区公开分享知识,很可能帮助未来遇到相同问题的初学者。请务必提出您的疑问。

+同样地,通过回答问题您也在为社区创造公共知识文档,这种贡献极具价值。

+

+**请注意**:提问/回答时投入的精力越多,产生的公共知识质量就越高。精心构建的问题与专业解答能形成高质量知识库,而表述不清的问题则可能降低讨论价值。

+

+低质量的问题或回答会降低公共知识库的整体质量。

+简而言之,高质量的问题或回答应具备*精确性*、*简洁性*、*相关性*、*易于理解*、*可访问性*和*格式规范/表述清晰*等特质。更多详情请参阅[如何提交优质议题](#how-to-write-a-good-issue)章节。

+

+**关于渠道的说明**:

+[*论坛*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63)的内容能被谷歌等搜索引擎更好地收录,且帖子按热度而非时间排序,便于查找历史问答。此外,论坛内容更容易被直接链接引用。

+而*Discord*采用即时聊天模式,适合快速交流。虽然在Discord上可能更快获得解答,但信息会随时间淹没,且难以回溯历史讨论。因此我们强烈建议在论坛发布优质问答,以构建可持续的社区知识库。若Discord讨论产生有价值结论,建议将成果整理发布至论坛以惠及更多读者。

+

+### 2. 在GitHub议题页提交新议题

+

+🧨 Diffusers库的稳健性离不开用户的问题反馈,感谢您的报错。

+

+请注意:GitHub议题仅限处理与Diffusers库代码直接相关的技术问题、错误报告、功能请求或库设计反馈。

+简言之,**与Diffusers库代码(含文档)无关**的内容应发布至[论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63)或[Discord](https://discord.gg/G7tWnz98XR)。

+

+**提交新议题时请遵循以下准则**:

+- 确认是否已有类似议题(使用GitHub议题页的搜索栏)

+- 请勿在现有议题下追加新问题。若存在高度关联议题,应新建议题并添加相关链接

+- 确保使用英文提交。非英语用户可通过[DeepL](https://www.deepl.com/translator)等免费工具翻译

+- 检查升级至最新Diffusers版本是否能解决问题。提交前请确认`python -c "import diffusers; print(diffusers.__version__)"`显示的版本号不低于最新版本

+- 记请记住,你在提交新issue时投入的精力越多,得到的回答质量就越高,Diffusers项目的整体issue质量也会越好。

+

+新issue通常包含以下内容:

+

+#### 2.1 可复现的最小化错误报告

+

+错误报告应始终包含可复现的代码片段,并尽可能简洁明了。具体而言:

+- 尽量缩小问题范围,**不要直接粘贴整个代码文件**

+- 规范代码格式

+- 除Diffusers依赖库外,不要包含其他外部库

+- **务必**提供环境信息:可在终端运行`diffusers-cli env`命令,然后将显示的信息复制到issue中

+- 详细说明问题。如果读者不清楚问题所在及其影响,就无法解决问题

+- **确保**读者能以最小成本复现问题。如果代码片段因缺少库或未定义变量而无法运行,读者将无法提供帮助。请确保提供的可复现代码尽可能精简,可直接复制到Python shell运行

+- 如需特定模型/数据集复现问题,请确保读者能获取这些资源。可将模型/数据集上传至[Hub](https://huggingface.co)便于下载。尽量保持模型和数据集体积最小化,降低复现难度

+

+更多信息请参阅[如何撰写优质issue](#how-to-write-a-good-issue)章节。

+

+提交错误报告请点击[此处](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&projects=&template=bug-report.yml)。

+

+#### 2.2 功能请求

+

+优质的功能请求应包含以下要素:

+

+1. 首先说明动机:

+* 是否与库的使用痛点相关?若是,请解释原因,最好提供演示问题的代码片段

+* 是否因项目需求产生?我们很乐意了解详情!

+* 是否是你已实现且认为对社区有价值的功能?请说明它为你解决了什么问题

+2. 用**完整段落**描述功能特性

+3. 提供**代码片段**演示预期用法

+4. 如涉及论文,请附上链接

+5. 可补充任何有助于理解的辅助材料(示意图、截图等)

+

+提交功能请求请点击[此处](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=)。

+

+#### 2.3 设计反馈

+

+关于库设计的反馈(无论正面还是负面)能极大帮助核心维护者打造更友好的库。要了解当前设计理念,请参阅[此文档](https://huggingface.co/docs/diffusers/conceptual/philosophy)如果您认为某个设计选择与当前理念不符,请说明原因及改进建议。如果某个设计选择因过度遵循理念而限制了使用场景,也请解释原因并提出调整方案。

+若某个设计对您特别实用,请同样留下备注——这对未来的设计决策极具参考价值。

+

+您可通过[此链接](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=)提交设计反馈。

+

+#### 2.4 技术问题

+

+技术问题主要涉及库代码的实现逻辑或特定功能模块的作用。提问时请务必:

+- 附上相关代码链接

+- 详细说明难以理解的具体原因

+

+技术问题提交入口:[点击此处](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml)

+

+#### 2.5 新模型/调度器/pipeline提案

+

+若diffusion模型社区发布了您希望集成到Diffusers库的新模型、pipeline或调度器,请提供以下信息:

+* 简要说明并附论文或发布链接

+* 开源实现链接(如有)

+* 模型权重下载链接(如已公开)

+

+若您愿意参与开发,请告知我们以便指导。另请尝试通过GitHub账号标记原始组件作者。

+

+提案提交地址:[新建请求](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml)

+

+### 3. 解答GitHub问题

+

+回答GitHub问题可能需要Diffusers的技术知识,但我们鼓励所有人尝试参与——即使您对答案不完全正确。高质量回答的建议:

+- 保持简洁精炼

+- 严格聚焦问题本身

+- 提供代码/论文等佐证材料

+- 优先用代码说话:若代码片段能解决问题,请提供完整可复现代码

+

+许多问题可能存在离题、重复或无关情况。您可以通过以下方式协助维护者:

+- 引导提问者精确描述问题

+- 标记重复issue并附原链接

+- 推荐用户至[论坛](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63)或[Discord](https://discord.gg/G7tWnz98XR)

+

+在确认提交的Bug报告正确且需要修改源代码后,请继续阅读以下章节内容。

+

+以下所有贡献都需要提交PR(拉取请求)。具体操作步骤详见[如何提交PR](#how-to-open-a-pr)章节。

+

+### 4. 修复"Good first issue"类问题

+

+标有[Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22)标签的问题通常已说明解决方案建议,便于修复。若该问题尚未关闭且您想尝试解决,只需留言"我想尝试解决这个问题"。通常有三种情况:

+- a.) 问题描述已提出解决方案。若您认可该方案,可直接提交PR或草稿PR进行修复

+- b.) 问题描述未提出解决方案。您可询问修复建议,Diffusers团队会尽快回复。若有成熟解决方案,也可直接提交PR

+- c.) 已有PR但问题未关闭。若原PR停滞,可新开PR并关联原PR(开源社区常见现象)。若PR仍活跃,您可通过建议、审查或协作等方式帮助原作者

+

+### 5. 文档贡献

+

+优秀库**必然**拥有优秀文档!官方文档是新用户的首要接触点,因此文档贡献具有**极高价值**。贡献形式包括:

+- 修正拼写/语法错误

+- 修复文档字符串格式错误(如显示异常或链接失效)

+- 修正文档字符串中张量的形状/维度描述

+- 优化晦涩或错误的说明

+- 更新过时代码示例

+- 文档翻译

+

+[官方文档页面](https://huggingface.co/docs/diffusers/index)所有内容均属可修改范围,对应[文档源文件](https://github.com/huggingface/diffusers/tree/main/docs/source)可进行编辑。修改前请查阅[验证说明](https://github.com/huggingface/diffusers/tree/main/docs)。

+

+### 6. 贡献社区流程

+

+> [!TIP]

+> 阅读[社区流程](../using-diffusers/custom_pipeline_overview#community-pipelines)指南了解GitHub与Hugging Face Hub社区流程的区别。若想了解我们设立社区流程的原因,请查看GitHub Issue [#841](https://github.com/huggingface/diffusers/issues/841)(简而言之,我们无法维护diffusion模型所有可能的推理使用方式,但也不希望限制社区构建这些流程)。

+

+贡献社区流程是向社区分享创意与成果的绝佳方式。您可以在[`DiffusionPipeline`]基础上构建流程,任何人都能通过设置`custom_pipeline`参数加载使用。本节将指导您创建一个简单的"单步"流程——UNet仅执行单次前向传播并调用调度器一次。

+

+1. 为社区流程创建one_step_unet.py文件。只要用户已安装相关包,该文件可包含任意所需包。确保仅有一个继承自[`DiffusionPipeline`]的流程类,用于从Hub加载模型权重和调度器配置。在`__init__`函数中添加UNet和调度器。

+

+ 同时添加`register_modules`函数,确保您的流程及其组件可通过[`~DiffusionPipeline.save_pretrained`]保存。

+

+```py

+from diffusers import DiffusionPipeline

+import torch

+

+class UnetSchedulerOneForwardPipeline(DiffusionPipeline):

+ def __init__(self, unet, scheduler):

+ super().__init__()

+

+ self.register_modules(unet=unet, scheduler=scheduler)

+```

+

+2. 在前向传播中(建议定义为`__call__`),可添加任意功能。对于"单步"流程,创建随机图像并通过设置`timestep=1`调用UNet和调度器一次。

+

+```py

+ from diffusers import DiffusionPipeline

+ import torch

+

+ class UnetSchedulerOneForwardPipeline(DiffusionPipeline):

+ def __init__(self, unet, scheduler):

+ super().__init__()

+

+ self.register_modules(unet=unet, scheduler=scheduler)

+

+ def __call__(self):

+ image = torch.randn(

+ (1, self.unet.config.in_channels, self.unet.config.sample_size, self.unet.config.sample_size),

+ )

+ timestep = 1

+

+ model_output = self.unet(image, timestep).sample

+ scheduler_output = self.scheduler.step(model_output, timestep, image).prev_sample

+

+ return scheduler_output

+```

+

+现在您可以通过传入UNet和调度器来运行流程,若流程结构相同也可加载预训练权重。

+

+```python

+from diffusers import DDPMScheduler, UNet2DModel

+

+scheduler = DDPMScheduler()

+unet = UNet2DModel()

+

+pipeline = UnetSchedulerOneForwardPipeline(unet=unet, scheduler=scheduler)

+output = pipeline()

+# 加载预训练权重

+pipeline = UnetSchedulerOneForwardPipeline.from_pretrained("google/ddpm-cifar10-32", use_safetensors=True)

+output = pipeline()

+```

+

+您可以选择将pipeline作为GitHub社区pipeline或Hub社区pipeline进行分享。

+

+

+

+ +

+ +

+ +

+ 徽章的管道表示模型可以利用 Apple silicon 设备上的 MPS 后端进行更快的推理。欢迎提交 [Pull Request](https://github.com/huggingface/diffusers/compare) 来为缺少此徽章的管道添加它。

+

+🤗 Diffusers 与 Apple silicon(M1/M2 芯片)兼容,使用 PyTorch 的 [`mps`](https://pytorch.org/docs/stable/notes/mps.html) 设备,该设备利用 Metal 框架来发挥 MacOS 设备上 GPU 的性能。您需要具备:

+

+- 配备 Apple silicon(M1/M2)硬件的 macOS 计算机

+- macOS 12.6 或更高版本(推荐 13.0 或更高)

+- arm64 版本的 Python

+- [PyTorch 2.0](https://pytorch.org/get-started/locally/)(推荐)或 1.13(支持 `mps` 的最低版本)

+

+`mps` 后端使用 PyTorch 的 `.to()` 接口将 Stable Diffusion 管道移动到您的 M1 或 M2 设备上:

+

+```python

+from diffusers import DiffusionPipeline

+

+pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5")

+pipe = pipe.to("mps")

+

+# 如果您的计算机内存小于 64 GB,推荐使用

+pipe.enable_attention_slicing()

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+image

+```

+

+> [!WARNING]

+> PyTorch [mps](https://pytorch.org/docs/stable/notes/mps.html) 后端不支持大小超过 `2**32` 的 NDArray。如果您遇到此问题,请提交 [Issue](https://github.com/huggingface/diffusers/issues/new/choose) 以便我们调查。

+

+如果您使用 **PyTorch 1.13**,您需要通过管道进行一次额外的"预热"传递。这是一个临时解决方法,用于解决首次推理传递产生的结果与后续传递略有不同的问题。您只需要执行此传递一次,并且在仅进行一次推理步骤后可以丢弃结果。

+

+```diff

+ from diffusers import DiffusionPipeline

+

+ pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5").to("mps")

+ pipe.enable_attention_slicing()

+

+ prompt = "a photo of an astronaut riding a horse on mars"

+ # 如果 PyTorch 版本是 1.13,进行首次"预热"传递

++ _ = pipe(prompt, num_inference_steps=1)

+

+ # 预热传递后,结果与 CPU 设备上的结果匹配。

+ image = pipe(prompt).images[0]

+```

+

+## 故障排除

+

+本节列出了使用 `mps` 后端时的一些常见问题及其解决方法。

+

+### 注意力切片

+

+M1/M2 性能对内存压力非常敏感。当发生这种情况时,系统会自动交换内存,这会显著降低性能。

+

+为了防止这种情况发生,我们建议使用*注意力切片*来减少推理过程中的内存压力并防止交换。这在您的计算机系统内存少于 64GB 或生成非标准分辨率(大于 512×512 像素)的图像时尤其相关。在您的管道上调用 [`~DiffusionPipeline.enable_attention_slicing`] 函数:

+

+```py

+from diffusers import DiffusionPipeline

+import torch

+

+pipeline = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, variant="fp16", use_safetensors=True).to("mps")

+pipeline.enable_attention_slicing()

+```

+

+注意力切片将昂贵的注意力操作分多个步骤执行,而不是一次性完成。在没有统一内存的计算机中,它通常能提高约 20% 的性能,但我们观察到在大多数 Apple 芯片计算机中,除非您有 64GB 或更多 RAM,否则性能会*更好*。

+

+### 批量推理

+

+批量生成多个提示可能会导致崩溃或无法可靠工作。如果是这种情况,请尝试迭代而不是批量处理。

\ No newline at end of file

diff --git a/docs/source/zh/optimization/neuron.md b/docs/source/zh/optimization/neuron.md

new file mode 100644

index 0000000000..99d807a88c

--- /dev/null

+++ b/docs/source/zh/optimization/neuron.md

@@ -0,0 +1,56 @@

+

+

+# AWS Neuron

+

+Diffusers 功能可在 [AWS Inf2 实例](https://aws.amazon.com/ec2/instance-types/inf2/)上使用,这些是由 [Neuron 机器学习加速器](https://aws.amazon.com/machine-learning/inferentia/)驱动的 EC2 实例。这些实例旨在提供更好的计算性能(更高的吞吐量、更低的延迟)和良好的成本效益,使其成为 AWS 用户将扩散模型部署到生产环境的良好选择。

+

+[Optimum Neuron](https://huggingface.co/docs/optimum-neuron/en/index) 是 Hugging Face 库与 AWS 加速器之间的接口,包括 AWS [Trainium](https://aws.amazon.com/machine-learning/trainium/) 和 AWS [Inferentia](https://aws.amazon.com/machine-learning/inferentia/)。它支持 Diffusers 中的许多功能,并具有类似的 API,因此如果您已经熟悉 Diffusers,学习起来更容易。一旦您创建了 AWS Inf2 实例,请安装 Optimum Neuron。

+

+```bash

+python -m pip install --upgrade-strategy eager optimum[neuronx]

+```

+

+> [!TIP]

+> 我们提供预构建的 [Hugging Face Neuron 深度学习 AMI](https://aws.amazon.com/marketplace/pp/prodview-gr3e6yiscria2)(DLAMI)和用于 Amazon SageMaker 的 Optimum Neuron 容器。建议正确设置您的环境。

+

+下面的示例演示了如何在 inf2.8xlarge 实例上使用 Stable Diffusion XL 模型生成图像(一旦模型编译完成,您可以切换到更便宜的 inf2.xlarge 实例)。要生成一些图像,请使用 [`~optimum.neuron.NeuronStableDiffusionXLPipeline`] 类,该类类似于 Diffusers 中的 [`StableDiffusionXLPipeline`] 类。

+

+与 Diffusers 不同,您需要将管道中的模型编译为 Neuron 格式,即 `.neuron`。运行以下命令将模型导出为 `.neuron` 格式。

+

+```bash

+optimum-cli export neuron --model stabilityai/stable-diffusion-xl-base-1.0 \

+ --batch_size 1 \

+ --height 1024 `# 生成图像的高度(像素),例如 768, 1024` \

+ --width 1024 `# 生成图像的宽度(像素),例如 768, 1024` \

+ --num_images_per_prompt 1 `# 每个提示生成的图像数量,默认为 1` \

+ --auto_cast matmul `# 仅转换矩阵乘法操作` \

+ --auto_cast_type bf16 `# 将操作从 FP32 转换为 BF16` \

+ sd_neuron_xl/

+```

+

+现在使用预编译的 SDXL 模型生成一些图像。

+

+```python

+>>> from optimum.neuron import Neu

+ronStableDiffusionXLPipeline

+

+>>> stable_diffusion_xl = NeuronStableDiffusionXLPipeline.from_pretrained("sd_neuron_xl/")

+>>> prompt = "a pig with wings flying in floating US dollar banknotes in the air, skyscrapers behind, warm color palette, muted colors, detailed, 8k"

+>>> image = stable_diffusion_xl(prompt).images[0]

+```

+

+

徽章的管道表示模型可以利用 Apple silicon 设备上的 MPS 后端进行更快的推理。欢迎提交 [Pull Request](https://github.com/huggingface/diffusers/compare) 来为缺少此徽章的管道添加它。

+

+🤗 Diffusers 与 Apple silicon(M1/M2 芯片)兼容,使用 PyTorch 的 [`mps`](https://pytorch.org/docs/stable/notes/mps.html) 设备,该设备利用 Metal 框架来发挥 MacOS 设备上 GPU 的性能。您需要具备:

+

+- 配备 Apple silicon(M1/M2)硬件的 macOS 计算机

+- macOS 12.6 或更高版本(推荐 13.0 或更高)

+- arm64 版本的 Python

+- [PyTorch 2.0](https://pytorch.org/get-started/locally/)(推荐)或 1.13(支持 `mps` 的最低版本)

+

+`mps` 后端使用 PyTorch 的 `.to()` 接口将 Stable Diffusion 管道移动到您的 M1 或 M2 设备上:

+

+```python

+from diffusers import DiffusionPipeline

+

+pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5")

+pipe = pipe.to("mps")

+

+# 如果您的计算机内存小于 64 GB,推荐使用

+pipe.enable_attention_slicing()

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+image

+```

+

+> [!WARNING]

+> PyTorch [mps](https://pytorch.org/docs/stable/notes/mps.html) 后端不支持大小超过 `2**32` 的 NDArray。如果您遇到此问题,请提交 [Issue](https://github.com/huggingface/diffusers/issues/new/choose) 以便我们调查。

+

+如果您使用 **PyTorch 1.13**,您需要通过管道进行一次额外的"预热"传递。这是一个临时解决方法,用于解决首次推理传递产生的结果与后续传递略有不同的问题。您只需要执行此传递一次,并且在仅进行一次推理步骤后可以丢弃结果。

+

+```diff

+ from diffusers import DiffusionPipeline

+

+ pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5").to("mps")

+ pipe.enable_attention_slicing()

+

+ prompt = "a photo of an astronaut riding a horse on mars"

+ # 如果 PyTorch 版本是 1.13,进行首次"预热"传递

++ _ = pipe(prompt, num_inference_steps=1)

+

+ # 预热传递后,结果与 CPU 设备上的结果匹配。

+ image = pipe(prompt).images[0]

+```

+

+## 故障排除

+

+本节列出了使用 `mps` 后端时的一些常见问题及其解决方法。

+

+### 注意力切片

+

+M1/M2 性能对内存压力非常敏感。当发生这种情况时,系统会自动交换内存,这会显著降低性能。

+

+为了防止这种情况发生,我们建议使用*注意力切片*来减少推理过程中的内存压力并防止交换。这在您的计算机系统内存少于 64GB 或生成非标准分辨率(大于 512×512 像素)的图像时尤其相关。在您的管道上调用 [`~DiffusionPipeline.enable_attention_slicing`] 函数:

+

+```py

+from diffusers import DiffusionPipeline

+import torch

+

+pipeline = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, variant="fp16", use_safetensors=True).to("mps")

+pipeline.enable_attention_slicing()

+```

+

+注意力切片将昂贵的注意力操作分多个步骤执行,而不是一次性完成。在没有统一内存的计算机中,它通常能提高约 20% 的性能,但我们观察到在大多数 Apple 芯片计算机中,除非您有 64GB 或更多 RAM,否则性能会*更好*。

+

+### 批量推理

+

+批量生成多个提示可能会导致崩溃或无法可靠工作。如果是这种情况,请尝试迭代而不是批量处理。

\ No newline at end of file

diff --git a/docs/source/zh/optimization/neuron.md b/docs/source/zh/optimization/neuron.md

new file mode 100644

index 0000000000..99d807a88c

--- /dev/null

+++ b/docs/source/zh/optimization/neuron.md

@@ -0,0 +1,56 @@

+

+

+# AWS Neuron

+

+Diffusers 功能可在 [AWS Inf2 实例](https://aws.amazon.com/ec2/instance-types/inf2/)上使用,这些是由 [Neuron 机器学习加速器](https://aws.amazon.com/machine-learning/inferentia/)驱动的 EC2 实例。这些实例旨在提供更好的计算性能(更高的吞吐量、更低的延迟)和良好的成本效益,使其成为 AWS 用户将扩散模型部署到生产环境的良好选择。

+

+[Optimum Neuron](https://huggingface.co/docs/optimum-neuron/en/index) 是 Hugging Face 库与 AWS 加速器之间的接口,包括 AWS [Trainium](https://aws.amazon.com/machine-learning/trainium/) 和 AWS [Inferentia](https://aws.amazon.com/machine-learning/inferentia/)。它支持 Diffusers 中的许多功能,并具有类似的 API,因此如果您已经熟悉 Diffusers,学习起来更容易。一旦您创建了 AWS Inf2 实例,请安装 Optimum Neuron。

+

+```bash

+python -m pip install --upgrade-strategy eager optimum[neuronx]

+```

+

+> [!TIP]

+> 我们提供预构建的 [Hugging Face Neuron 深度学习 AMI](https://aws.amazon.com/marketplace/pp/prodview-gr3e6yiscria2)(DLAMI)和用于 Amazon SageMaker 的 Optimum Neuron 容器。建议正确设置您的环境。

+

+下面的示例演示了如何在 inf2.8xlarge 实例上使用 Stable Diffusion XL 模型生成图像(一旦模型编译完成,您可以切换到更便宜的 inf2.xlarge 实例)。要生成一些图像,请使用 [`~optimum.neuron.NeuronStableDiffusionXLPipeline`] 类,该类类似于 Diffusers 中的 [`StableDiffusionXLPipeline`] 类。

+

+与 Diffusers 不同,您需要将管道中的模型编译为 Neuron 格式,即 `.neuron`。运行以下命令将模型导出为 `.neuron` 格式。

+

+```bash

+optimum-cli export neuron --model stabilityai/stable-diffusion-xl-base-1.0 \

+ --batch_size 1 \

+ --height 1024 `# 生成图像的高度(像素),例如 768, 1024` \

+ --width 1024 `# 生成图像的宽度(像素),例如 768, 1024` \

+ --num_images_per_prompt 1 `# 每个提示生成的图像数量,默认为 1` \

+ --auto_cast matmul `# 仅转换矩阵乘法操作` \

+ --auto_cast_type bf16 `# 将操作从 FP32 转换为 BF16` \

+ sd_neuron_xl/

+```

+

+现在使用预编译的 SDXL 模型生成一些图像。

+

+```python

+>>> from optimum.neuron import Neu

+ronStableDiffusionXLPipeline

+

+>>> stable_diffusion_xl = NeuronStableDiffusionXLPipeline.from_pretrained("sd_neuron_xl/")

+>>> prompt = "a pig with wings flying in floating US dollar banknotes in the air, skyscrapers behind, warm color palette, muted colors, detailed, 8k"

+>>> image = stable_diffusion_xl(prompt).images[0]

+```

+

+ +

+欢迎查看Optimum Neuron [文档](https://huggingface.co/docs/optimum-neuron/en/inference_tutorials/stable_diffusion#generate-images-with-stable-diffusion-models-on-aws-inferentia)中更多不同用例的指南和示例!

\ No newline at end of file

diff --git a/docs/source/zh/optimization/onnx.md b/docs/source/zh/optimization/onnx.md

new file mode 100644

index 0000000000..b70510d51b

--- /dev/null

+++ b/docs/source/zh/optimization/onnx.md

@@ -0,0 +1,79 @@

+

+

+# ONNX Runtime

+

+🤗 [Optimum](https://github.com/huggingface/optimum) 提供了兼容 ONNX Runtime 的 Stable Diffusion 流水线。您需要运行以下命令安装支持 ONNX Runtime 的 🤗 Optimum:

+

+```bash

+pip install -q optimum["onnxruntime"]

+```

+

+本指南将展示如何使用 ONNX Runtime 运行 Stable Diffusion 和 Stable Diffusion XL (SDXL) 流水线。

+

+## Stable Diffusion

+

+要加载并运行推理,请使用 [`~optimum.onnxruntime.ORTStableDiffusionPipeline`]。若需加载 PyTorch 模型并实时转换为 ONNX 格式,请设置 `export=True`:

+

+```python

+from optimum.onnxruntime import ORTStableDiffusionPipeline

+

+model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id, export=True)

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

+pipeline.save_pretrained("./onnx-stable-diffusion-v1-5")

+```

+

+> [!WARNING]

+> 当前批量生成多个提示可能会占用过高内存。在问题修复前,建议采用迭代方式而非批量处理。

+

+如需离线导出 ONNX 格式流水线供后续推理使用,请使用 [`optimum-cli export`](https://huggingface.co/docs/optimum/main/en/exporters/onnx/usage_guides/export_a_model#exporting-a-model-to-onnx-using-the-cli) 命令:

+

+```bash

+optimum-cli export onnx --model stable-diffusion-v1-5/stable-diffusion-v1-5 sd_v15_onnx/

+```

+

+随后进行推理时(无需再次指定 `export=True`):

+

+```python

+from optimum.onnxruntime import ORTStableDiffusionPipeline

+

+model_id = "sd_v15_onnx"

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id)

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

+```

+

+

+

+欢迎查看Optimum Neuron [文档](https://huggingface.co/docs/optimum-neuron/en/inference_tutorials/stable_diffusion#generate-images-with-stable-diffusion-models-on-aws-inferentia)中更多不同用例的指南和示例!

\ No newline at end of file

diff --git a/docs/source/zh/optimization/onnx.md b/docs/source/zh/optimization/onnx.md

new file mode 100644

index 0000000000..b70510d51b

--- /dev/null

+++ b/docs/source/zh/optimization/onnx.md

@@ -0,0 +1,79 @@

+

+

+# ONNX Runtime

+

+🤗 [Optimum](https://github.com/huggingface/optimum) 提供了兼容 ONNX Runtime 的 Stable Diffusion 流水线。您需要运行以下命令安装支持 ONNX Runtime 的 🤗 Optimum:

+

+```bash

+pip install -q optimum["onnxruntime"]

+```

+

+本指南将展示如何使用 ONNX Runtime 运行 Stable Diffusion 和 Stable Diffusion XL (SDXL) 流水线。

+

+## Stable Diffusion

+

+要加载并运行推理,请使用 [`~optimum.onnxruntime.ORTStableDiffusionPipeline`]。若需加载 PyTorch 模型并实时转换为 ONNX 格式,请设置 `export=True`:

+

+```python

+from optimum.onnxruntime import ORTStableDiffusionPipeline

+

+model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id, export=True)

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

+pipeline.save_pretrained("./onnx-stable-diffusion-v1-5")

+```

+

+> [!WARNING]

+> 当前批量生成多个提示可能会占用过高内存。在问题修复前,建议采用迭代方式而非批量处理。

+

+如需离线导出 ONNX 格式流水线供后续推理使用,请使用 [`optimum-cli export`](https://huggingface.co/docs/optimum/main/en/exporters/onnx/usage_guides/export_a_model#exporting-a-model-to-onnx-using-the-cli) 命令:

+

+```bash

+optimum-cli export onnx --model stable-diffusion-v1-5/stable-diffusion-v1-5 sd_v15_onnx/

+```

+

+随后进行推理时(无需再次指定 `export=True`):

+

+```python

+from optimum.onnxruntime import ORTStableDiffusionPipeline

+

+model_id = "sd_v15_onnx"

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id)

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

+```

+

+ +

+ +

+ +

+ +

+ +

+  +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ -

- -

- -

- -

- -

- -

- -

- +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+  +

+  +

+  +

+