diff --git a/docs/source/en/optimization/fp16.md b/docs/source/en/optimization/fp16.md

index 97a1f5830a..010b721536 100644

--- a/docs/source/en/optimization/fp16.md

+++ b/docs/source/en/optimization/fp16.md

@@ -150,6 +150,24 @@ pipeline(prompt, num_inference_steps=30).images[0]

Compilation is slow the first time, but once compiled, it is significantly faster. Try to only use the compiled pipeline on the same type of inference operations. Calling the compiled pipeline on a different image size retriggers compilation which is slow and inefficient.

+### Regional compilation

+

+[Regional compilation](https://docs.pytorch.org/tutorials/recipes/regional_compilation.html) reduces the cold start compilation time by only compiling a specific repeated region (or block) of the model instead of the entire model. The compiler reuses the cached and compiled code for the other blocks.

+

+[Accelerate](https://huggingface.co/docs/accelerate/index) provides the [compile_regions](https://github.com/huggingface/accelerate/blob/273799c85d849a1954a4f2e65767216eb37fa089/src/accelerate/utils/other.py#L78) method for automatically compiling the repeated blocks of a `nn.Module` sequentially. The rest of the model is compiled separately.

+

+```py

+# pip install -U accelerate

+import torch

+from diffusers import StableDiffusionXLPipeline

+from accelerate.utils import compile regions

+

+pipeline = StableDiffusionXLPipeline.from_pretrained(

+ "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16

+).to("cuda")

+pipeline.unet = compile_regions(pipeline.unet, mode="reduce-overhead", fullgraph=True)

+```

+

### Graph breaks

It is important to specify `fullgraph=True` in torch.compile to ensure there are no graph breaks in the underlying model. This allows you to take advantage of torch.compile without any performance degradation. For the UNet and VAE, this changes how you access the return variables.

@@ -170,6 +188,12 @@ The `step()` function is [called](https://github.com/huggingface/diffusers/blob/

In general, the `sigmas` should [stay on the CPU](https://github.com/huggingface/diffusers/blob/35a969d297cba69110d175ee79c59312b9f49e1e/src/diffusers/schedulers/scheduling_euler_discrete.py#L240) to avoid the communication sync and latency.

+### Benchmarks

+

+Refer to the [diffusers/benchmarks](https://huggingface.co/datasets/diffusers/benchmarks) dataset to see inference latency and memory usage data for compiled pipelines.

+

+The [diffusers-torchao](https://github.com/sayakpaul/diffusers-torchao#benchmarking-results) repository also contains benchmarking results for compiled versions of Flux and CogVideoX.

+

## Dynamic quantization

[Dynamic quantization](https://pytorch.org/tutorials/recipes/recipes/dynamic_quantization.html) improves inference speed by reducing precision to enable faster math operations. This particular type of quantization determines how to scale the activations based on the data at runtime rather than using a fixed scaling factor. As a result, the scaling factor is more accurately aligned with the data.

diff --git a/docs/source/en/optimization/habana.md b/docs/source/en/optimization/habana.md

index 86a0cf0ba0..69964f3244 100644

--- a/docs/source/en/optimization/habana.md

+++ b/docs/source/en/optimization/habana.md

@@ -10,67 +10,22 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

specific language governing permissions and limitations under the License.

-->

-# Habana Gaudi

+# Intel Gaudi

-🤗 Diffusers is compatible with Habana Gaudi through 🤗 [Optimum](https://huggingface.co/docs/optimum/habana/usage_guides/stable_diffusion). Follow the [installation](https://docs.habana.ai/en/latest/Installation_Guide/index.html) guide to install the SynapseAI and Gaudi drivers, and then install Optimum Habana:

+The Intel Gaudi AI accelerator family includes [Intel Gaudi 1](https://habana.ai/products/gaudi/), [Intel Gaudi 2](https://habana.ai/products/gaudi2/), and [Intel Gaudi 3](https://habana.ai/products/gaudi3/). Each server is equipped with 8 devices, known as Habana Processing Units (HPUs), providing 128GB of memory on Gaudi 3, 96GB on Gaudi 2, and 32GB on the first-gen Gaudi. For more details on the underlying hardware architecture, check out the [Gaudi Architecture](https://docs.habana.ai/en/latest/Gaudi_Overview/Gaudi_Architecture.html) overview.

-```bash

-python -m pip install --upgrade-strategy eager optimum[habana]

+Diffusers pipelines can take advantage of HPU acceleration, even if a pipeline hasn't been added to [Optimum for Intel Gaudi](https://huggingface.co/docs/optimum/main/en/habana/index) yet, with the [GPU Migration Toolkit](https://docs.habana.ai/en/latest/PyTorch/PyTorch_Model_Porting/GPU_Migration_Toolkit/GPU_Migration_Toolkit.html).

+

+Call `.to("hpu")` on your pipeline to move it to a HPU device as shown below for Flux:

+```py

+import torch

+from diffusers import DiffusionPipeline

+

+pipeline = DiffusionPipeline.from_pretrained("black-forest-labs/FLUX.1-schnell", torch_dtype=torch.bfloat16)

+pipeline.to("hpu")

+

+image = pipeline("An image of a squirrel in Picasso style").images[0]

```

-To generate images with Stable Diffusion 1 and 2 on Gaudi, you need to instantiate two instances:

-

-- [`~optimum.habana.diffusers.GaudiStableDiffusionPipeline`], a pipeline for text-to-image generation.

-- [`~optimum.habana.diffusers.GaudiDDIMScheduler`], a Gaudi-optimized scheduler.

-

-When you initialize the pipeline, you have to specify `use_habana=True` to deploy it on HPUs and to get the fastest possible generation, you should enable **HPU graphs** with `use_hpu_graphs=True`.

-

-Finally, specify a [`~optimum.habana.GaudiConfig`] which can be downloaded from the [Habana](https://huggingface.co/Habana) organization on the Hub.

-

-```python

-from optimum.habana import GaudiConfig

-from optimum.habana.diffusers import GaudiDDIMScheduler, GaudiStableDiffusionPipeline

-

-model_name = "stabilityai/stable-diffusion-2-base"

-scheduler = GaudiDDIMScheduler.from_pretrained(model_name, subfolder="scheduler")

-pipeline = GaudiStableDiffusionPipeline.from_pretrained(

- model_name,

- scheduler=scheduler,

- use_habana=True,

- use_hpu_graphs=True,

- gaudi_config="Habana/stable-diffusion-2",

-)

-```

-

-Now you can call the pipeline to generate images by batches from one or several prompts:

-

-```python

-outputs = pipeline(

- prompt=[

- "High quality photo of an astronaut riding a horse in space",

- "Face of a yellow cat, high resolution, sitting on a park bench",

- ],

- num_images_per_prompt=10,

- batch_size=4,

-)

-```

-

-For more information, check out 🤗 Optimum Habana's [documentation](https://huggingface.co/docs/optimum/habana/usage_guides/stable_diffusion) and the [example](https://github.com/huggingface/optimum-habana/tree/main/examples/stable-diffusion) provided in the official GitHub repository.

-

-## Benchmark

-

-We benchmarked Habana's first-generation Gaudi and Gaudi2 with the [Habana/stable-diffusion](https://huggingface.co/Habana/stable-diffusion) and [Habana/stable-diffusion-2](https://huggingface.co/Habana/stable-diffusion-2) Gaudi configurations (mixed precision bf16/fp32) to demonstrate their performance.

-

-For [Stable Diffusion v1.5](https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5) on 512x512 images:

-

-| | Latency (batch size = 1) | Throughput |

-| ---------------------- |:------------------------:|:---------------------------:|

-| first-generation Gaudi | 3.80s | 0.308 images/s (batch size = 8) |

-| Gaudi2 | 1.33s | 1.081 images/s (batch size = 8) |

-

-For [Stable Diffusion v2.1](https://huggingface.co/stabilityai/stable-diffusion-2-1) on 768x768 images:

-

-| | Latency (batch size = 1) | Throughput |

-| ---------------------- |:------------------------:|:-------------------------------:|

-| first-generation Gaudi | 10.2s | 0.108 images/s (batch size = 4) |

-| Gaudi2 | 3.17s | 0.379 images/s (batch size = 8) |

+> [!TIP]

+> For Gaudi-optimized diffusion pipeline implementations, we recommend using [Optimum for Intel Gaudi](https://huggingface.co/docs/optimum/main/en/habana/index).

diff --git a/docs/source/en/optimization/memory.md b/docs/source/en/optimization/memory.md

index 5b3bfe650d..6b853a7a08 100644

--- a/docs/source/en/optimization/memory.md

+++ b/docs/source/en/optimization/memory.md

@@ -295,6 +295,13 @@ pipeline.transformer.enable_group_offload(onload_device=onload_device, offload_d

The `low_cpu_mem_usage` parameter can be set to `True` to reduce CPU memory usage when using streams during group offloading. It is best for `leaf_level` offloading and when CPU memory is bottlenecked. Memory is saved by creating pinned tensors on the fly instead of pre-pinning them. However, this may increase overall execution time.

+

+

+The offloading strategies can be combined with [quantization](../quantization/overview.md) to enable further memory savings. For image generation, combining [quantization and model offloading](#model-offloading) can often give the best trade-off between quality, speed, and memory. However, for video generation, as the models are more

+compute-bound, [group-offloading](#group-offloading) tends to be better. Group offloading provides considerable benefits when weight transfers can be overlapped with computation (must use streams). When applying group offloading with quantization on image generation models at typical resolutions (1024x1024, for example), it is usually not possible to *fully* overlap weight transfers if the compute kernel finishes faster, making it communication bound between CPU/GPU (due to device synchronizations).

+

+

+

## Layerwise casting

Layerwise casting stores weights in a smaller data format (for example, `torch.float8_e4m3fn` and `torch.float8_e5m2`) to use less memory and upcasts those weights to a higher precision like `torch.float16` or `torch.bfloat16` for computation. Certain layers (normalization and modulation related weights) are skipped because storing them in fp8 can degrade generation quality.

diff --git a/docs/source/en/optimization/tome.md b/docs/source/en/optimization/tome.md

index 3e574efbfe..f379bc97f4 100644

--- a/docs/source/en/optimization/tome.md

+++ b/docs/source/en/optimization/tome.md

@@ -93,4 +93,4 @@ To reproduce this benchmark, feel free to use this [script](https://gist.github.

| | | 2 | OOM | 13 | 10.78 |

| | | 1 | OOM | 6.66 | 5.54 |

-As seen in the tables above, the speed-up from `tomesd` becomes more pronounced for larger image resolutions. It is also interesting to note that with `tomesd`, it is possible to run the pipeline on a higher resolution like 1024x1024. You may be able to speed-up inference even more with [`torch.compile`](torch2.0).

+As seen in the tables above, the speed-up from `tomesd` becomes more pronounced for larger image resolutions. It is also interesting to note that with `tomesd`, it is possible to run the pipeline on a higher resolution like 1024x1024. You may be able to speed-up inference even more with [`torch.compile`](fp16#torchcompile).

diff --git a/docs/source/en/optimization/torch2.0.md b/docs/source/en/optimization/torch2.0.md

deleted file mode 100644

index 01ea00310a..0000000000

--- a/docs/source/en/optimization/torch2.0.md

+++ /dev/null

@@ -1,421 +0,0 @@

-

-

-# PyTorch 2.0

-

-🤗 Diffusers supports the latest optimizations from [PyTorch 2.0](https://pytorch.org/get-started/pytorch-2.0/) which include:

-

-1. A memory-efficient attention implementation, scaled dot product attention, without requiring any extra dependencies such as xFormers.

-2. [`torch.compile`](https://pytorch.org/tutorials/intermediate/torch_compile_tutorial.html), a just-in-time (JIT) compiler to provide an extra performance boost when individual models are compiled.

-

-Both of these optimizations require PyTorch 2.0 or later and 🤗 Diffusers > 0.13.0.

-

-```bash

-pip install --upgrade torch diffusers

-```

-

-## Scaled dot product attention

-

-[`torch.nn.functional.scaled_dot_product_attention`](https://pytorch.org/docs/master/generated/torch.nn.functional.scaled_dot_product_attention) (SDPA) is an optimized and memory-efficient attention (similar to xFormers) that automatically enables several other optimizations depending on the model inputs and GPU type. SDPA is enabled by default if you're using PyTorch 2.0 and the latest version of 🤗 Diffusers, so you don't need to add anything to your code.

-

-However, if you want to explicitly enable it, you can set a [`DiffusionPipeline`] to use [`~models.attention_processor.AttnProcessor2_0`]:

-

-```diff

- import torch

- from diffusers import DiffusionPipeline

-+ from diffusers.models.attention_processor import AttnProcessor2_0

-

- pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True).to("cuda")

-+ pipe.unet.set_attn_processor(AttnProcessor2_0())

-

- prompt = "a photo of an astronaut riding a horse on mars"

- image = pipe(prompt).images[0]

-```

-

-SDPA should be as fast and memory efficient as `xFormers`; check the [benchmark](#benchmark) for more details.

-

-In some cases - such as making the pipeline more deterministic or converting it to other formats - it may be helpful to use the vanilla attention processor, [`~models.attention_processor.AttnProcessor`]. To revert to [`~models.attention_processor.AttnProcessor`], call the [`~UNet2DConditionModel.set_default_attn_processor`] function on the pipeline:

-

-```diff

- import torch

- from diffusers import DiffusionPipeline

-

- pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True).to("cuda")

-+ pipe.unet.set_default_attn_processor()

-

- prompt = "a photo of an astronaut riding a horse on mars"

- image = pipe(prompt).images[0]

-```

-

-## torch.compile

-

-The `torch.compile` function can often provide an additional speed-up to your PyTorch code. In 🤗 Diffusers, it is usually best to wrap the UNet with `torch.compile` because it does most of the heavy lifting in the pipeline.

-

-```python

-from diffusers import DiffusionPipeline

-import torch

-

-pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True).to("cuda")

-pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

-images = pipe(prompt, num_inference_steps=steps, num_images_per_prompt=batch_size).images[0]

-```

-

-Depending on GPU type, `torch.compile` can provide an *additional speed-up* of **5-300x** on top of SDPA! If you're using more recent GPU architectures such as Ampere (A100, 3090), Ada (4090), and Hopper (H100), `torch.compile` is able to squeeze even more performance out of these GPUs.

-

-Compilation requires some time to complete, so it is best suited for situations where you prepare your pipeline once and then perform the same type of inference operations multiple times. For example, calling the compiled pipeline on a different image size triggers compilation again which can be expensive.

-

-For more information and different options about `torch.compile`, refer to the [`torch_compile`](https://pytorch.org/tutorials/intermediate/torch_compile_tutorial.html) tutorial.

-

-> [!TIP]

-> Learn more about other ways PyTorch 2.0 can help optimize your model in the [Accelerate inference of text-to-image diffusion models](../tutorials/fast_diffusion) tutorial.

-

-## Benchmark

-

-We conducted a comprehensive benchmark with PyTorch 2.0's efficient attention implementation and `torch.compile` across different GPUs and batch sizes for five of our most used pipelines. The code is benchmarked on 🤗 Diffusers v0.17.0.dev0 to optimize `torch.compile` usage (see [here](https://github.com/huggingface/diffusers/pull/3313) for more details).

-

-Expand the dropdown below to find the code used to benchmark each pipeline:

-

-

-

-### Stable Diffusion text-to-image

-

-```python

-from diffusers import DiffusionPipeline

-import torch

-

-path = "stable-diffusion-v1-5/stable-diffusion-v1-5"

-

-run_compile = True # Set True / False

-

-pipe = DiffusionPipeline.from_pretrained(path, torch_dtype=torch.float16, use_safetensors=True)

-pipe = pipe.to("cuda")

-pipe.unet.to(memory_format=torch.channels_last)

-

-if run_compile:

- print("Run torch compile")

- pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

-

-prompt = "ghibli style, a fantasy landscape with castles"

-

-for _ in range(3):

- images = pipe(prompt=prompt).images

-```

-

-### Stable Diffusion image-to-image

-

-```python

-from diffusers import StableDiffusionImg2ImgPipeline

-from diffusers.utils import load_image

-import torch

-

-url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

-

-init_image = load_image(url)

-init_image = init_image.resize((512, 512))

-

-path = "stable-diffusion-v1-5/stable-diffusion-v1-5"

-

-run_compile = True # Set True / False

-

-pipe = StableDiffusionImg2ImgPipeline.from_pretrained(path, torch_dtype=torch.float16, use_safetensors=True)

-pipe = pipe.to("cuda")

-pipe.unet.to(memory_format=torch.channels_last)

-

-if run_compile:

- print("Run torch compile")

- pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

-

-prompt = "ghibli style, a fantasy landscape with castles"

-

-for _ in range(3):

- image = pipe(prompt=prompt, image=init_image).images[0]

-```

-

-### Stable Diffusion inpainting

-

-```python

-from diffusers import StableDiffusionInpaintPipeline

-from diffusers.utils import load_image

-import torch

-

-img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

-mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

-

-init_image = load_image(img_url).resize((512, 512))

-mask_image = load_image(mask_url).resize((512, 512))

-

-path = "runwayml/stable-diffusion-inpainting"

-

-run_compile = True # Set True / False

-

-pipe = StableDiffusionInpaintPipeline.from_pretrained(path, torch_dtype=torch.float16, use_safetensors=True)

-pipe = pipe.to("cuda")

-pipe.unet.to(memory_format=torch.channels_last)

-

-if run_compile:

- print("Run torch compile")

- pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

-

-prompt = "ghibli style, a fantasy landscape with castles"

-

-for _ in range(3):

- image = pipe(prompt=prompt, image=init_image, mask_image=mask_image).images[0]

-```

-

-### ControlNet

-

-```python

-from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

-from diffusers.utils import load_image

-import torch

-

-url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

-

-init_image = load_image(url)

-init_image = init_image.resize((512, 512))

-

-path = "stable-diffusion-v1-5/stable-diffusion-v1-5"

-

-run_compile = True # Set True / False

-controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16, use_safetensors=True)

-pipe = StableDiffusionControlNetPipeline.from_pretrained(

- path, controlnet=controlnet, torch_dtype=torch.float16, use_safetensors=True

-)

-

-pipe = pipe.to("cuda")

-pipe.unet.to(memory_format=torch.channels_last)

-pipe.controlnet.to(memory_format=torch.channels_last)

-

-if run_compile:

- print("Run torch compile")

- pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

- pipe.controlnet = torch.compile(pipe.controlnet, mode="reduce-overhead", fullgraph=True)

-

-prompt = "ghibli style, a fantasy landscape with castles"

-

-for _ in range(3):

- image = pipe(prompt=prompt, image=init_image).images[0]

-```

-

-### DeepFloyd IF text-to-image + upscaling

-

-```python

-from diffusers import DiffusionPipeline

-import torch

-

-run_compile = True # Set True / False

-

-pipe_1 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-M-v1.0", variant="fp16", text_encoder=None, torch_dtype=torch.float16, use_safetensors=True)

-pipe_1.to("cuda")

-pipe_2 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-II-M-v1.0", variant="fp16", text_encoder=None, torch_dtype=torch.float16, use_safetensors=True)

-pipe_2.to("cuda")

-pipe_3 = DiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-x4-upscaler", torch_dtype=torch.float16, use_safetensors=True)

-pipe_3.to("cuda")

-

-

-pipe_1.unet.to(memory_format=torch.channels_last)

-pipe_2.unet.to(memory_format=torch.channels_last)

-pipe_3.unet.to(memory_format=torch.channels_last)

-

-if run_compile:

- pipe_1.unet = torch.compile(pipe_1.unet, mode="reduce-overhead", fullgraph=True)

- pipe_2.unet = torch.compile(pipe_2.unet, mode="reduce-overhead", fullgraph=True)

- pipe_3.unet = torch.compile(pipe_3.unet, mode="reduce-overhead", fullgraph=True)

-

-prompt = "the blue hulk"

-

-prompt_embeds = torch.randn((1, 2, 4096), dtype=torch.float16)

-neg_prompt_embeds = torch.randn((1, 2, 4096), dtype=torch.float16)

-

-for _ in range(3):

- image_1 = pipe_1(prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images

- image_2 = pipe_2(image=image_1, prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images

- image_3 = pipe_3(prompt=prompt, image=image_1, noise_level=100).images

-```

-

-

-The graph below highlights the relative speed-ups for the [`StableDiffusionPipeline`] across five GPU families with PyTorch 2.0 and `torch.compile` enabled. The benchmarks for the following graphs are measured in *number of iterations/second*.

-

-

-

-To give you an even better idea of how this speed-up holds for the other pipelines, consider the following

-graph for an A100 with PyTorch 2.0 and `torch.compile`:

-

-

-

-In the following tables, we report our findings in terms of the *number of iterations/second*.

-

-### A100 (batch size: 1)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 21.66 | 23.13 | 44.03 | 49.74 |

-| SD - img2img | 21.81 | 22.40 | 43.92 | 46.32 |

-| SD - inpaint | 22.24 | 23.23 | 43.76 | 49.25 |

-| SD - controlnet | 15.02 | 15.82 | 32.13 | 36.08 |

-| IF | 20.21 /

13.84 /

24.00 | 20.12 /

13.70 /

24.03 | ❌ | 97.34 /

27.23 /

111.66 |

-| SDXL - txt2img | 8.64 | 9.9 | - | - |

-

-### A100 (batch size: 4)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 11.6 | 13.12 | 14.62 | 17.27 |

-| SD - img2img | 11.47 | 13.06 | 14.66 | 17.25 |

-| SD - inpaint | 11.67 | 13.31 | 14.88 | 17.48 |

-| SD - controlnet | 8.28 | 9.38 | 10.51 | 12.41 |

-| IF | 25.02 | 18.04 | ❌ | 48.47 |

-| SDXL - txt2img | 2.44 | 2.74 | - | - |

-

-### A100 (batch size: 16)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 3.04 | 3.6 | 3.83 | 4.68 |

-| SD - img2img | 2.98 | 3.58 | 3.83 | 4.67 |

-| SD - inpaint | 3.04 | 3.66 | 3.9 | 4.76 |

-| SD - controlnet | 2.15 | 2.58 | 2.74 | 3.35 |

-| IF | 8.78 | 9.82 | ❌ | 16.77 |

-| SDXL - txt2img | 0.64 | 0.72 | - | - |

-

-### V100 (batch size: 1)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 18.99 | 19.14 | 20.95 | 22.17 |

-| SD - img2img | 18.56 | 19.18 | 20.95 | 22.11 |

-| SD - inpaint | 19.14 | 19.06 | 21.08 | 22.20 |

-| SD - controlnet | 13.48 | 13.93 | 15.18 | 15.88 |

-| IF | 20.01 /

9.08 /

23.34 | 19.79 /

8.98 /

24.10 | ❌ | 55.75 /

11.57 /

57.67 |

-

-### V100 (batch size: 4)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 5.96 | 5.89 | 6.83 | 6.86 |

-| SD - img2img | 5.90 | 5.91 | 6.81 | 6.82 |

-| SD - inpaint | 5.99 | 6.03 | 6.93 | 6.95 |

-| SD - controlnet | 4.26 | 4.29 | 4.92 | 4.93 |

-| IF | 15.41 | 14.76 | ❌ | 22.95 |

-

-### V100 (batch size: 16)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 1.66 | 1.66 | 1.92 | 1.90 |

-| SD - img2img | 1.65 | 1.65 | 1.91 | 1.89 |

-| SD - inpaint | 1.69 | 1.69 | 1.95 | 1.93 |

-| SD - controlnet | 1.19 | 1.19 | OOM after warmup | 1.36 |

-| IF | 5.43 | 5.29 | ❌ | 7.06 |

-

-### T4 (batch size: 1)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 6.9 | 6.95 | 7.3 | 7.56 |

-| SD - img2img | 6.84 | 6.99 | 7.04 | 7.55 |

-| SD - inpaint | 6.91 | 6.7 | 7.01 | 7.37 |

-| SD - controlnet | 4.89 | 4.86 | 5.35 | 5.48 |

-| IF | 17.42 /

2.47 /

18.52 | 16.96 /

2.45 /

18.69 | ❌ | 24.63 /

2.47 /

23.39 |

-| SDXL - txt2img | 1.15 | 1.16 | - | - |

-

-### T4 (batch size: 4)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 1.79 | 1.79 | 2.03 | 1.99 |

-| SD - img2img | 1.77 | 1.77 | 2.05 | 2.04 |

-| SD - inpaint | 1.81 | 1.82 | 2.09 | 2.09 |

-| SD - controlnet | 1.34 | 1.27 | 1.47 | 1.46 |

-| IF | 5.79 | 5.61 | ❌ | 7.39 |

-| SDXL - txt2img | 0.288 | 0.289 | - | - |

-

-### T4 (batch size: 16)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 2.34s | 2.30s | OOM after 2nd iteration | 1.99s |

-| SD - img2img | 2.35s | 2.31s | OOM after warmup | 2.00s |

-| SD - inpaint | 2.30s | 2.26s | OOM after 2nd iteration | 1.95s |

-| SD - controlnet | OOM after 2nd iteration | OOM after 2nd iteration | OOM after warmup | OOM after warmup |

-| IF * | 1.44 | 1.44 | ❌ | 1.94 |

-| SDXL - txt2img | OOM | OOM | - | - |

-

-### RTX 3090 (batch size: 1)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 22.56 | 22.84 | 23.84 | 25.69 |

-| SD - img2img | 22.25 | 22.61 | 24.1 | 25.83 |

-| SD - inpaint | 22.22 | 22.54 | 24.26 | 26.02 |

-| SD - controlnet | 16.03 | 16.33 | 17.38 | 18.56 |

-| IF | 27.08 /

9.07 /

31.23 | 26.75 /

8.92 /

31.47 | ❌ | 68.08 /

11.16 /

65.29 |

-

-### RTX 3090 (batch size: 4)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 6.46 | 6.35 | 7.29 | 7.3 |

-| SD - img2img | 6.33 | 6.27 | 7.31 | 7.26 |

-| SD - inpaint | 6.47 | 6.4 | 7.44 | 7.39 |

-| SD - controlnet | 4.59 | 4.54 | 5.27 | 5.26 |

-| IF | 16.81 | 16.62 | ❌ | 21.57 |

-

-### RTX 3090 (batch size: 16)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 1.7 | 1.69 | 1.93 | 1.91 |

-| SD - img2img | 1.68 | 1.67 | 1.93 | 1.9 |

-| SD - inpaint | 1.72 | 1.71 | 1.97 | 1.94 |

-| SD - controlnet | 1.23 | 1.22 | 1.4 | 1.38 |

-| IF | 5.01 | 5.00 | ❌ | 6.33 |

-

-### RTX 4090 (batch size: 1)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 40.5 | 41.89 | 44.65 | 49.81 |

-| SD - img2img | 40.39 | 41.95 | 44.46 | 49.8 |

-| SD - inpaint | 40.51 | 41.88 | 44.58 | 49.72 |

-| SD - controlnet | 29.27 | 30.29 | 32.26 | 36.03 |

-| IF | 69.71 /

18.78 /

85.49 | 69.13 /

18.80 /

85.56 | ❌ | 124.60 /

26.37 /

138.79 |

-| SDXL - txt2img | 6.8 | 8.18 | - | - |

-

-### RTX 4090 (batch size: 4)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 12.62 | 12.84 | 15.32 | 15.59 |

-| SD - img2img | 12.61 | 12,.79 | 15.35 | 15.66 |

-| SD - inpaint | 12.65 | 12.81 | 15.3 | 15.58 |

-| SD - controlnet | 9.1 | 9.25 | 11.03 | 11.22 |

-| IF | 31.88 | 31.14 | ❌ | 43.92 |

-| SDXL - txt2img | 2.19 | 2.35 | - | - |

-

-### RTX 4090 (batch size: 16)

-

-| **Pipeline** | **torch 2.0 -

no compile** | **torch nightly -

no compile** | **torch 2.0 -

compile** | **torch nightly -

compile** |

-|:---:|:---:|:---:|:---:|:---:|

-| SD - txt2img | 3.17 | 3.2 | 3.84 | 3.85 |

-| SD - img2img | 3.16 | 3.2 | 3.84 | 3.85 |

-| SD - inpaint | 3.17 | 3.2 | 3.85 | 3.85 |

-| SD - controlnet | 2.23 | 2.3 | 2.7 | 2.75 |

-| IF | 9.26 | 9.2 | ❌ | 13.31 |

-| SDXL - txt2img | 0.52 | 0.53 | - | - |

-

-## Notes

-

-* Follow this [PR](https://github.com/huggingface/diffusers/pull/3313) for more details on the environment used for conducting the benchmarks.

-* For the DeepFloyd IF pipeline where batch sizes > 1, we only used a batch size of > 1 in the first IF pipeline for text-to-image generation and NOT for upscaling. That means the two upscaling pipelines received a batch size of 1.

-

-*Thanks to [Horace He](https://github.com/Chillee) from the PyTorch team for their support in improving our support of `torch.compile()` in Diffusers.*

diff --git a/docs/source/en/optimization/xdit.md b/docs/source/en/optimization/xdit.md

index 33ff8dc255..ecf4563568 100644

--- a/docs/source/en/optimization/xdit.md

+++ b/docs/source/en/optimization/xdit.md

@@ -2,7 +2,7 @@

[xDiT](https://github.com/xdit-project/xDiT) is an inference engine designed for the large scale parallel deployment of Diffusion Transformers (DiTs). xDiT provides a suite of efficient parallel approaches for Diffusion Models, as well as GPU kernel accelerations.

-There are four parallel methods supported in xDiT, including [Unified Sequence Parallelism](https://arxiv.org/abs/2405.07719), [PipeFusion](https://arxiv.org/abs/2405.14430), CFG parallelism and data parallelism. The four parallel methods in xDiT can be configured in a hybrid manner, optimizing communication patterns to best suit the underlying network hardware.

+There are four parallel methods supported in xDiT, including [Unified Sequence Parallelism](https://huggingface.co/papers/2405.07719), [PipeFusion](https://huggingface.co/papers/2405.14430), CFG parallelism and data parallelism. The four parallel methods in xDiT can be configured in a hybrid manner, optimizing communication patterns to best suit the underlying network hardware.

Optimization orthogonal to parallelization focuses on accelerating single GPU performance. In addition to utilizing well-known Attention optimization libraries, we leverage compilation acceleration technologies such as torch.compile and onediff.

@@ -116,6 +116,6 @@ More detailed performance metric can be found on our [github page](https://githu

[xDiT-project](https://github.com/xdit-project/xDiT)

-[USP: A Unified Sequence Parallelism Approach for Long Context Generative AI](https://arxiv.org/abs/2405.07719)

+[USP: A Unified Sequence Parallelism Approach for Long Context Generative AI](https://huggingface.co/papers/2405.07719)

-[PipeFusion: Displaced Patch Pipeline Parallelism for Inference of Diffusion Transformer Models](https://arxiv.org/abs/2405.14430)

\ No newline at end of file

+[PipeFusion: Displaced Patch Pipeline Parallelism for Inference of Diffusion Transformer Models](https://huggingface.co/papers/2405.14430)

\ No newline at end of file

diff --git a/docs/source/en/quantization/overview.md b/docs/source/en/quantization/overview.md

index 93323f86c7..cc5a7e2891 100644

--- a/docs/source/en/quantization/overview.md

+++ b/docs/source/en/quantization/overview.md

@@ -13,29 +13,120 @@ specific language governing permissions and limitations under the License.

# Quantization

-Quantization techniques focus on representing data with less information while also trying to not lose too much accuracy. This often means converting a data type to represent the same information with fewer bits. For example, if your model weights are stored as 32-bit floating points and they're quantized to 16-bit floating points, this halves the model size which makes it easier to store and reduces memory-usage. Lower precision can also speedup inference because it takes less time to perform calculations with fewer bits.

+Quantization focuses on representing data with fewer bits while also trying to preserve the precision of the original data. This often means converting a data type to represent the same information with fewer bits. For example, if your model weights are stored as 32-bit floating points and they're quantized to 16-bit floating points, this halves the model size which makes it easier to store and reduces memory usage. Lower precision can also speedup inference because it takes less time to perform calculations with fewer bits.

-

+Diffusers supports multiple quantization backends to make large diffusion models like [Flux](../api/pipelines/flux) more accessible. This guide shows how to use the [`~quantizers.PipelineQuantizationConfig`] class to quantize a pipeline during its initialization from a pretrained or non-quantized checkpoint.

-Interested in adding a new quantization method to Diffusers? Refer to the [Contribute new quantization method guide](https://huggingface.co/docs/transformers/main/en/quantization/contribute) to learn more about adding a new quantization method.

+## Pipeline-level quantization

-

+There are two ways you can use [`~quantizers.PipelineQuantizationConfig`] depending on the level of control you want over the quantization specifications of each model in the pipeline.

-

+- for more basic and simple use cases, you only need to define the `quant_backend`, `quant_kwargs`, and `components_to_quantize`

+- for more granular quantization control, provide a `quant_mapping` that provides the quantization specifications for the individual model components

-If you are new to the quantization field, we recommend you to check out these beginner-friendly courses about quantization in collaboration with DeepLearning.AI:

+### Simple quantization

-* [Quantization Fundamentals with Hugging Face](https://www.deeplearning.ai/short-courses/quantization-fundamentals-with-hugging-face/)

-* [Quantization in Depth](https://www.deeplearning.ai/short-courses/quantization-in-depth/)

+Initialize [`~quantizers.PipelineQuantizationConfig`] with the following parameters.

-

+- `quant_backend` specifies which quantization backend to use. Currently supported backends include: `bitsandbytes_4bit`, `bitsandbytes_8bit`, `gguf`, `quanto`, and `torchao`.

+- `quant_kwargs` contains the specific quantization arguments to use.

+- `components_to_quantize` specifies which components of the pipeline to quantize. Typically, you should quantize the most compute intensive components like the transformer. The text encoder is another component to consider quantizing if a pipeline has more than one such as [`FluxPipeline`]. The example below quantizes the T5 text encoder in [`FluxPipeline`] while keeping the CLIP model intact.

-## When to use what?

+```py

+import torch

+from diffusers import DiffusionPipeline

+from diffusers.quantizers import PipelineQuantizationConfig

-Diffusers currently supports the following quantization methods.

-- [BitsandBytes](./bitsandbytes)

-- [TorchAO](./torchao)

-- [GGUF](./gguf)

-- [Quanto](./quanto.md)

+pipeline_quant_config = PipelineQuantizationConfig(

+ quant_backend="bitsandbytes_4bit",

+ quant_kwargs={"load_in_4bit": True, "bnb_4bit_quant_type": "nf4", "bnb_4bit_compute_dtype": torch.bfloat16},

+ components_to_quantize=["transformer", "text_encoder_2"],

+)

+```

-[This resource](https://huggingface.co/docs/transformers/main/en/quantization/overview#when-to-use-what) provides a good overview of the pros and cons of different quantization techniques.

+Pass the `pipeline_quant_config` to [`~DiffusionPipeline.from_pretrained`] to quantize the pipeline.

+

+```py

+pipe = DiffusionPipeline.from_pretrained(

+ "black-forest-labs/FLUX.1-dev",

+ quantization_config=pipeline_quant_config,

+ torch_dtype=torch.bfloat16,

+).to("cuda")

+

+image = pipe("photo of a cute dog").images[0]

+```

+

+### quant_mapping

+

+The `quant_mapping` argument provides more flexible options for how to quantize each individual component in a pipeline, like combining different quantization backends.

+

+Initialize [`~quantizers.PipelineQuantizationConfig`] and pass a `quant_mapping` to it. The `quant_mapping` allows you to specify the quantization options for each component in the pipeline such as the transformer and text encoder.

+

+The example below uses two quantization backends, [`~quantizers.QuantoConfig`] and [`transformers.BitsAndBytesConfig`], for the transformer and text encoder.

+

+```py

+import torch

+from diffusers import DiffusionPipeline

+from diffusers import BitsAndBytesConfig as DiffusersBitsAndBytesConfig

+from diffusers.quantizers.quantization_config import QuantoConfig

+from diffusers.quantizers import PipelineQuantizationConfig

+from transformers import BitsAndBytesConfig as TransformersBitsAndBytesConfig

+

+pipeline_quant_config = PipelineQuantizationConfig(

+ quant_mapping={

+ "transformer": QuantoConfig(weights_dtype="int8"),

+ "text_encoder_2": TransformersBitsAndBytesConfig(

+ load_in_4bit=True, compute_dtype=torch.bfloat16

+ ),

+ }

+)

+```

+

+There is a separate bitsandbytes backend in [Transformers](https://huggingface.co/docs/transformers/main_classes/quantization#transformers.BitsAndBytesConfig). You need to import and use [`transformers.BitsAndBytesConfig`] for components that come from Transformers. For example, `text_encoder_2` in [`FluxPipeline`] is a [`~transformers.T5EncoderModel`] from Transformers so you need to use [`transformers.BitsAndBytesConfig`] instead of [`diffusers.BitsAndBytesConfig`].

+

+> [!TIP]

+> Use the [simple quantization](#simple-quantization) method above if you don't want to manage these distinct imports or aren't sure where each pipeline component comes from.

+

+```py

+import torch

+from diffusers import DiffusionPipeline

+from diffusers import BitsAndBytesConfig as DiffusersBitsAndBytesConfig

+from diffusers.quantizers import PipelineQuantizationConfig

+from transformers import BitsAndBytesConfig as TransformersBitsAndBytesConfig

+

+pipeline_quant_config = PipelineQuantizationConfig(

+ quant_mapping={

+ "transformer": DiffusersBitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.bfloat16),

+ "text_encoder_2": TransformersBitsAndBytesConfig(

+ load_in_4bit=True, compute_dtype=torch.bfloat16

+ ),

+ }

+)

+```

+

+Pass the `pipeline_quant_config` to [`~DiffusionPipeline.from_pretrained`] to quantize the pipeline.

+

+```py

+pipe = DiffusionPipeline.from_pretrained(

+ "black-forest-labs/FLUX.1-dev",

+ quantization_config=pipeline_quant_config,

+ torch_dtype=torch.bfloat16,

+).to("cuda")

+

+image = pipe("photo of a cute dog").images[0]

+```

+

+## Resources

+

+Check out the resources below to learn more about quantization.

+

+- If you are new to quantization, we recommend checking out the following beginner-friendly courses in collaboration with DeepLearning.AI.

+

+ - [Quantization Fundamentals with Hugging Face](https://www.deeplearning.ai/short-courses/quantization-fundamentals-with-hugging-face/)

+ - [Quantization in Depth](https://www.deeplearning.ai/short-courses/quantization-in-depth/)

+

+- Refer to the [Contribute new quantization method guide](https://huggingface.co/docs/transformers/main/en/quantization/contribute) if you're interested in adding a new quantization method.

+

+- The Transformers quantization [Overview](https://huggingface.co/docs/transformers/quantization/overview#when-to-use-what) provides an overview of the pros and cons of different quantization backends.

+

+- Read the [Exploring Quantization Backends in Diffusers](https://huggingface.co/blog/diffusers-quantization) blog post for a brief introduction to each quantization backend, how to choose a backend, and combining quantization with other memory optimizations.

\ No newline at end of file

diff --git a/docs/source/en/quantization/torchao.md b/docs/source/en/quantization/torchao.md

index 3ccca02825..95b30a6e01 100644

--- a/docs/source/en/quantization/torchao.md

+++ b/docs/source/en/quantization/torchao.md

@@ -56,7 +56,7 @@ image = pipe(

image.save("output.png")

```

-TorchAO is fully compatible with [torch.compile](./optimization/torch2.0#torchcompile), setting it apart from other quantization methods. This makes it easy to speed up inference with just one line of code.

+TorchAO is fully compatible with [torch.compile](../optimization/fp16#torchcompile), setting it apart from other quantization methods. This makes it easy to speed up inference with just one line of code.

```python

# In the above code, add the following after initializing the transformer

@@ -91,7 +91,7 @@ The quantization methods supported are as follows:

Some quantization methods are aliases (for example, `int8wo` is the commonly used shorthand for `int8_weight_only`). This allows using the quantization methods described in the torchao docs as-is, while also making it convenient to remember their shorthand notations.

-Refer to the official torchao documentation for a better understanding of the available quantization methods and the exhaustive list of configuration options available.

+Refer to the [official torchao documentation](https://docs.pytorch.org/ao/stable/index.html) for a better understanding of the available quantization methods and the exhaustive list of configuration options available.

## Serializing and Deserializing quantized models

@@ -155,5 +155,5 @@ transformer.load_state_dict(state_dict, strict=True, assign=True)

## Resources

-- [TorchAO Quantization API](https://github.com/pytorch/ao/blob/main/torchao/quantization/README.md)

+- [TorchAO Quantization API](https://docs.pytorch.org/ao/stable/index.html)

- [Diffusers-TorchAO examples](https://github.com/sayakpaul/diffusers-torchao)

diff --git a/docs/source/en/stable_diffusion.md b/docs/source/en/stable_diffusion.md

index fc20d259f5..77610114ec 100644

--- a/docs/source/en/stable_diffusion.md

+++ b/docs/source/en/stable_diffusion.md

@@ -256,6 +256,6 @@ make_image_grid(images, 2, 2)

In this tutorial, you learned how to optimize a [`DiffusionPipeline`] for computational and memory efficiency as well as improving the quality of generated outputs. If you're interested in making your pipeline even faster, take a look at the following resources:

-- Learn how [PyTorch 2.0](./optimization/torch2.0) and [`torch.compile`](https://pytorch.org/docs/stable/generated/torch.compile.html) can yield 5 - 300% faster inference speed. On an A100 GPU, inference can be up to 50% faster!

+- Learn how [PyTorch 2.0](./optimization/fp16) and [`torch.compile`](https://pytorch.org/docs/stable/generated/torch.compile.html) can yield 5 - 300% faster inference speed. On an A100 GPU, inference can be up to 50% faster!

- If you can't use PyTorch 2, we recommend you install [xFormers](./optimization/xformers). Its memory-efficient attention mechanism works great with PyTorch 1.13.1 for faster speed and reduced memory consumption.

- Other optimization techniques, such as model offloading, are covered in [this guide](./optimization/fp16).

diff --git a/docs/source/en/training/ddpo.md b/docs/source/en/training/ddpo.md

index a4538fe070..8ea797f804 100644

--- a/docs/source/en/training/ddpo.md

+++ b/docs/source/en/training/ddpo.md

@@ -12,6 +12,6 @@ specific language governing permissions and limitations under the License.

# Reinforcement learning training with DDPO

-You can fine-tune Stable Diffusion on a reward function via reinforcement learning with the 🤗 TRL library and 🤗 Diffusers. This is done with the Denoising Diffusion Policy Optimization (DDPO) algorithm introduced by Black et al. in [Training Diffusion Models with Reinforcement Learning](https://arxiv.org/abs/2305.13301), which is implemented in 🤗 TRL with the [`~trl.DDPOTrainer`].

+You can fine-tune Stable Diffusion on a reward function via reinforcement learning with the 🤗 TRL library and 🤗 Diffusers. This is done with the Denoising Diffusion Policy Optimization (DDPO) algorithm introduced by Black et al. in [Training Diffusion Models with Reinforcement Learning](https://huggingface.co/papers/2305.13301), which is implemented in 🤗 TRL with the [`~trl.DDPOTrainer`].

For more information, check out the [`~trl.DDPOTrainer`] API reference and the [Finetune Stable Diffusion Models with DDPO via TRL](https://huggingface.co/blog/trl-ddpo) blog post.

\ No newline at end of file

diff --git a/docs/source/en/training/lora.md b/docs/source/en/training/lora.md

index c1f81c48b8..7237879436 100644

--- a/docs/source/en/training/lora.md

+++ b/docs/source/en/training/lora.md

@@ -87,7 +87,7 @@ Lastly, if you want to train a model on your own dataset, take a look at the [Cr

-The following sections highlight parts of the training script that are important for understanding how to modify it, but it doesn't cover every aspect of the script in detail. If you're interested in learning more, feel free to read through the [script](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/text_to_image_lora.py) and let us know if you have any questions or concerns.

+The following sections highlight parts of the training script that are important for understanding how to modify it, but it doesn't cover every aspect of the script in detail. If you're interested in learning more, feel free to read through the [script](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image_lora.py) and let us know if you have any questions or concerns.

diff --git a/docs/source/en/training/overview.md b/docs/source/en/training/overview.md

index 5396afc0b8..bcd855ccb5 100644

--- a/docs/source/en/training/overview.md

+++ b/docs/source/en/training/overview.md

@@ -59,5 +59,5 @@ pip install -r requirements_sdxl.txt

To speedup training and reduce memory-usage, we recommend:

-- using PyTorch 2.0 or higher to automatically use [scaled dot product attention](../optimization/torch2.0#scaled-dot-product-attention) during training (you don't need to make any changes to the training code)

+- using PyTorch 2.0 or higher to automatically use [scaled dot product attention](../optimization/fp16#scaled-dot-product-attention) during training (you don't need to make any changes to the training code)

- installing [xFormers](../optimization/xformers) to enable memory-efficient attention

\ No newline at end of file

diff --git a/docs/source/en/tutorials/fast_diffusion.md b/docs/source/en/tutorials/fast_diffusion.md

deleted file mode 100644

index 0f1133dc2d..0000000000

--- a/docs/source/en/tutorials/fast_diffusion.md

+++ /dev/null

@@ -1,322 +0,0 @@

-

-

-# Accelerate inference of text-to-image diffusion models

-

-Diffusion models are slower than their GAN counterparts because of the iterative and sequential reverse diffusion process. There are several techniques that can address this limitation such as progressive timestep distillation ([LCM LoRA](../using-diffusers/inference_with_lcm_lora)), model compression ([SSD-1B](https://huggingface.co/segmind/SSD-1B)), and reusing adjacent features of the denoiser ([DeepCache](../optimization/deepcache)).

-

-However, you don't necessarily need to use these techniques to speed up inference. With PyTorch 2 alone, you can accelerate the inference latency of text-to-image diffusion pipelines by up to 3x. This tutorial will show you how to progressively apply the optimizations found in PyTorch 2 to reduce inference latency. You'll use the [Stable Diffusion XL (SDXL)](../using-diffusers/sdxl) pipeline in this tutorial, but these techniques are applicable to other text-to-image diffusion pipelines too.

-

-Make sure you're using the latest version of Diffusers:

-

-```bash

-pip install -U diffusers

-```

-

-Then upgrade the other required libraries too:

-

-```bash

-pip install -U transformers accelerate peft

-```

-

-Install [PyTorch nightly](https://pytorch.org/) to benefit from the latest and fastest kernels:

-

-```bash

-pip3 install --pre torch --index-url https://download.pytorch.org/whl/nightly/cu121

-```

-

-> [!TIP]

-> The results reported below are from a 80GB 400W A100 with its clock rate set to the maximum.

-> If you're interested in the full benchmarking code, take a look at [huggingface/diffusion-fast](https://github.com/huggingface/diffusion-fast).

-

-

-## Baseline

-

-Let's start with a baseline. Disable reduced precision and the [`scaled_dot_product_attention` (SDPA)](../optimization/torch2.0#scaled-dot-product-attention) function which is automatically used by Diffusers:

-

-```python

-from diffusers import StableDiffusionXLPipeline

-

-# Load the pipeline in full-precision and place its model components on CUDA.

-pipe = StableDiffusionXLPipeline.from_pretrained(

- "stabilityai/stable-diffusion-xl-base-1.0"

-).to("cuda")

-

-# Run the attention ops without SDPA.

-pipe.unet.set_default_attn_processor()

-pipe.vae.set_default_attn_processor()

-

-prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"

-image = pipe(prompt, num_inference_steps=30).images[0]

-```

-

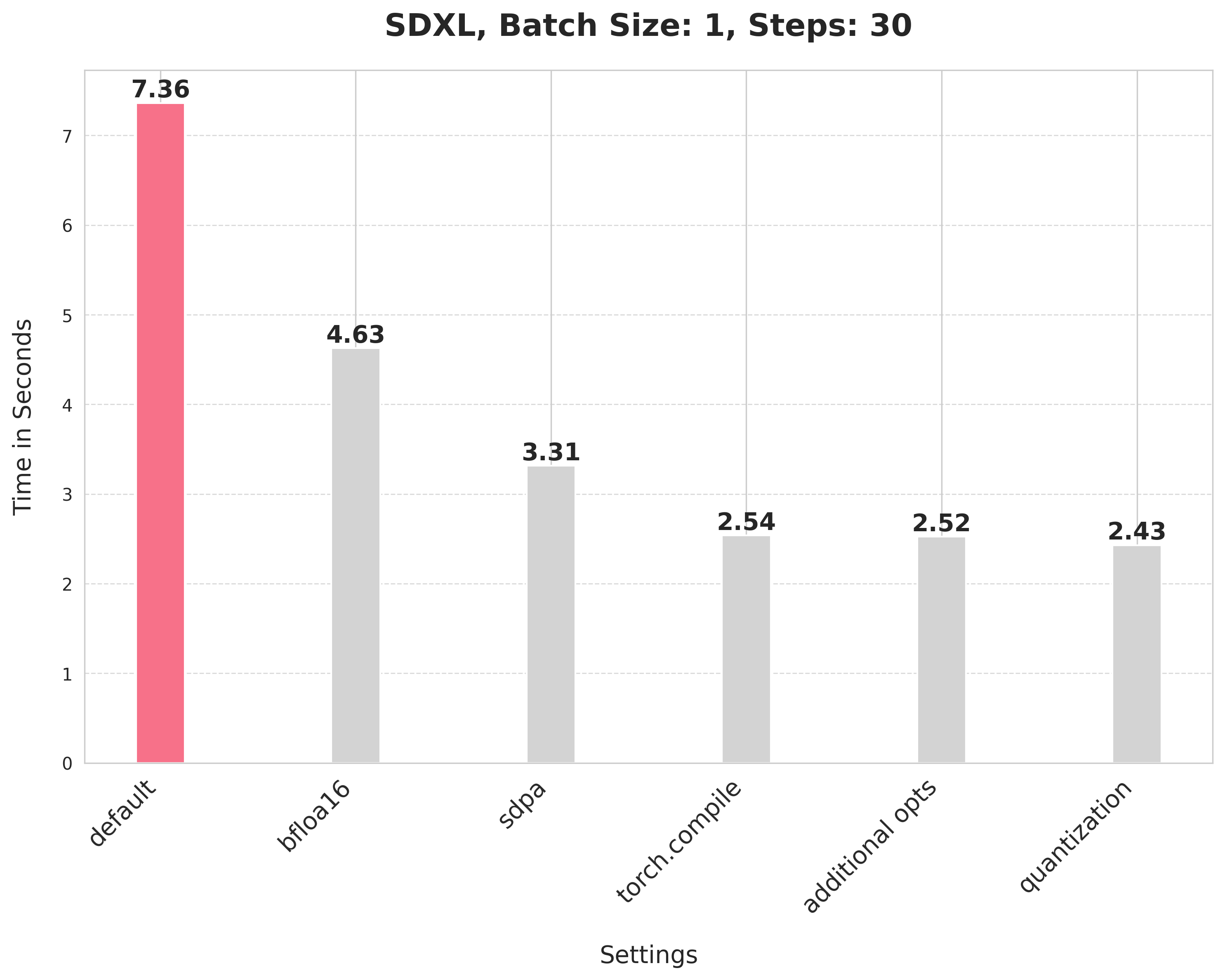

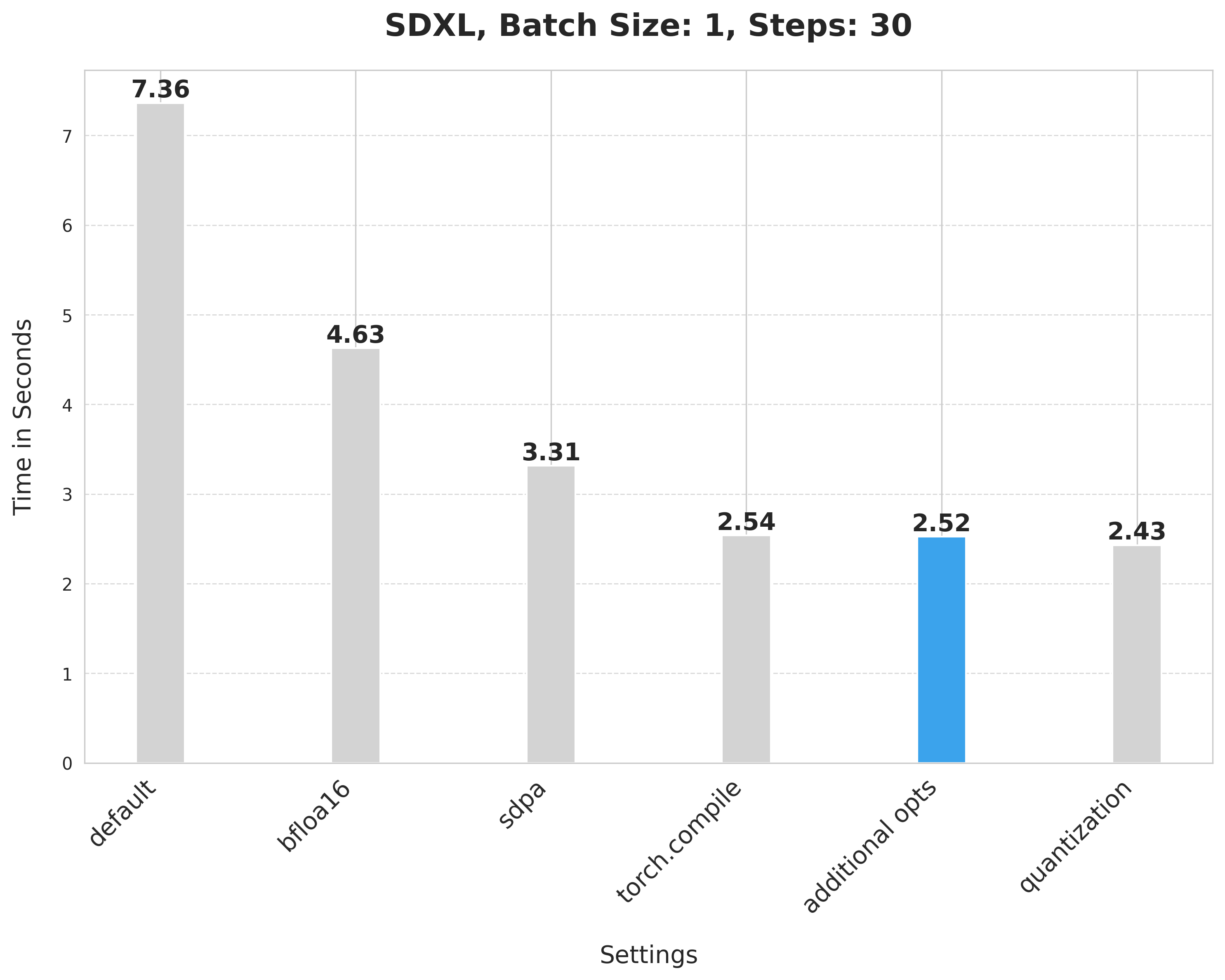

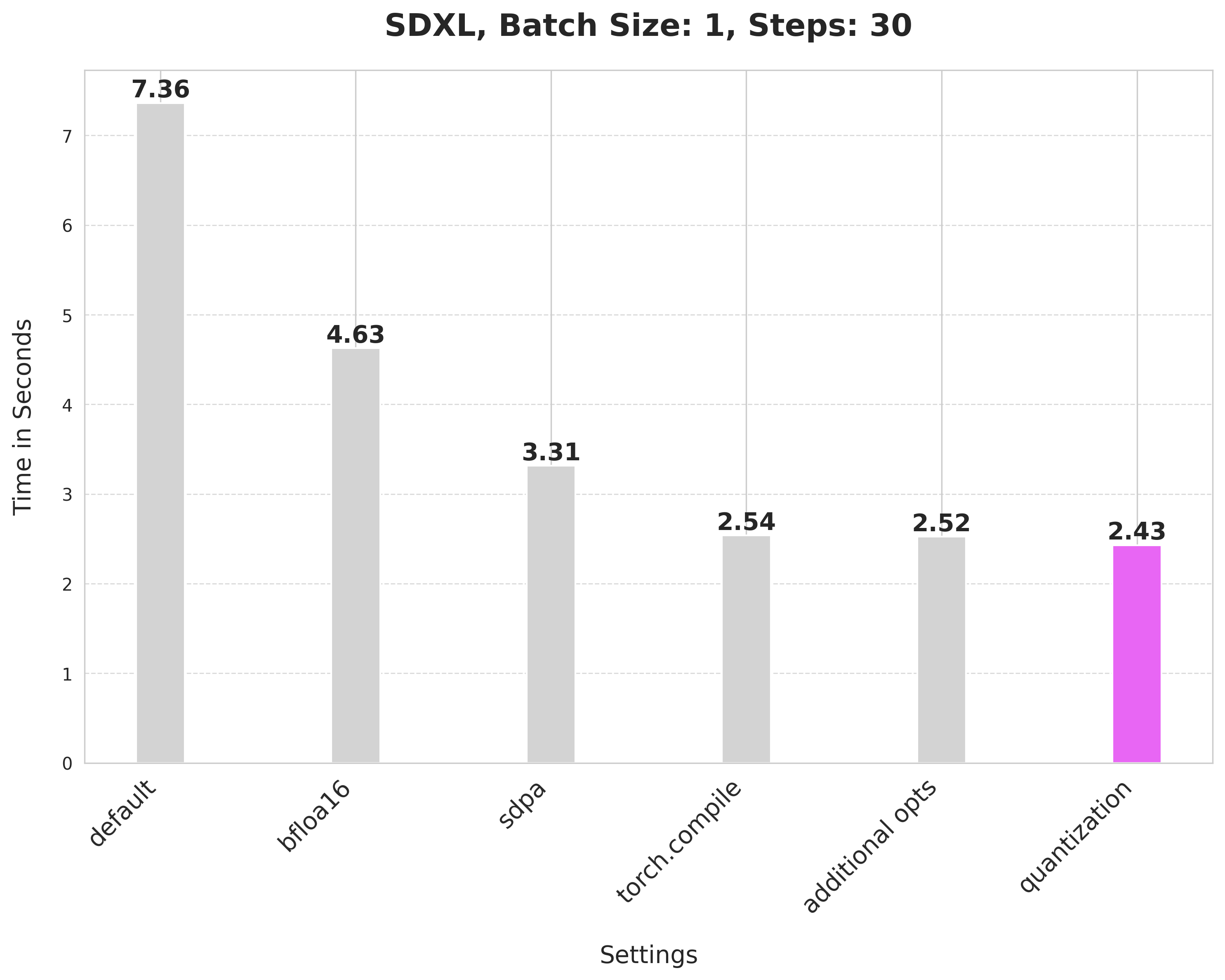

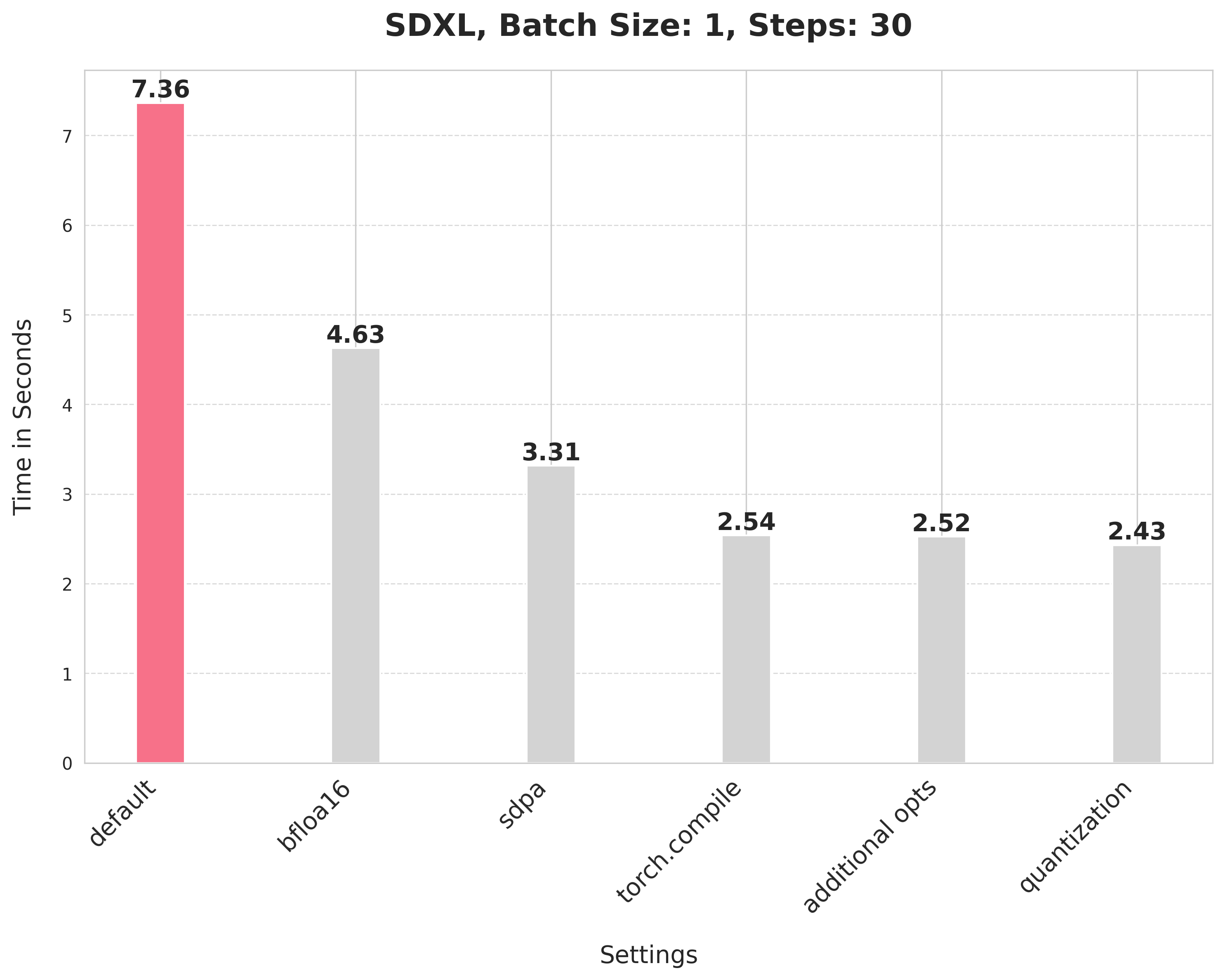

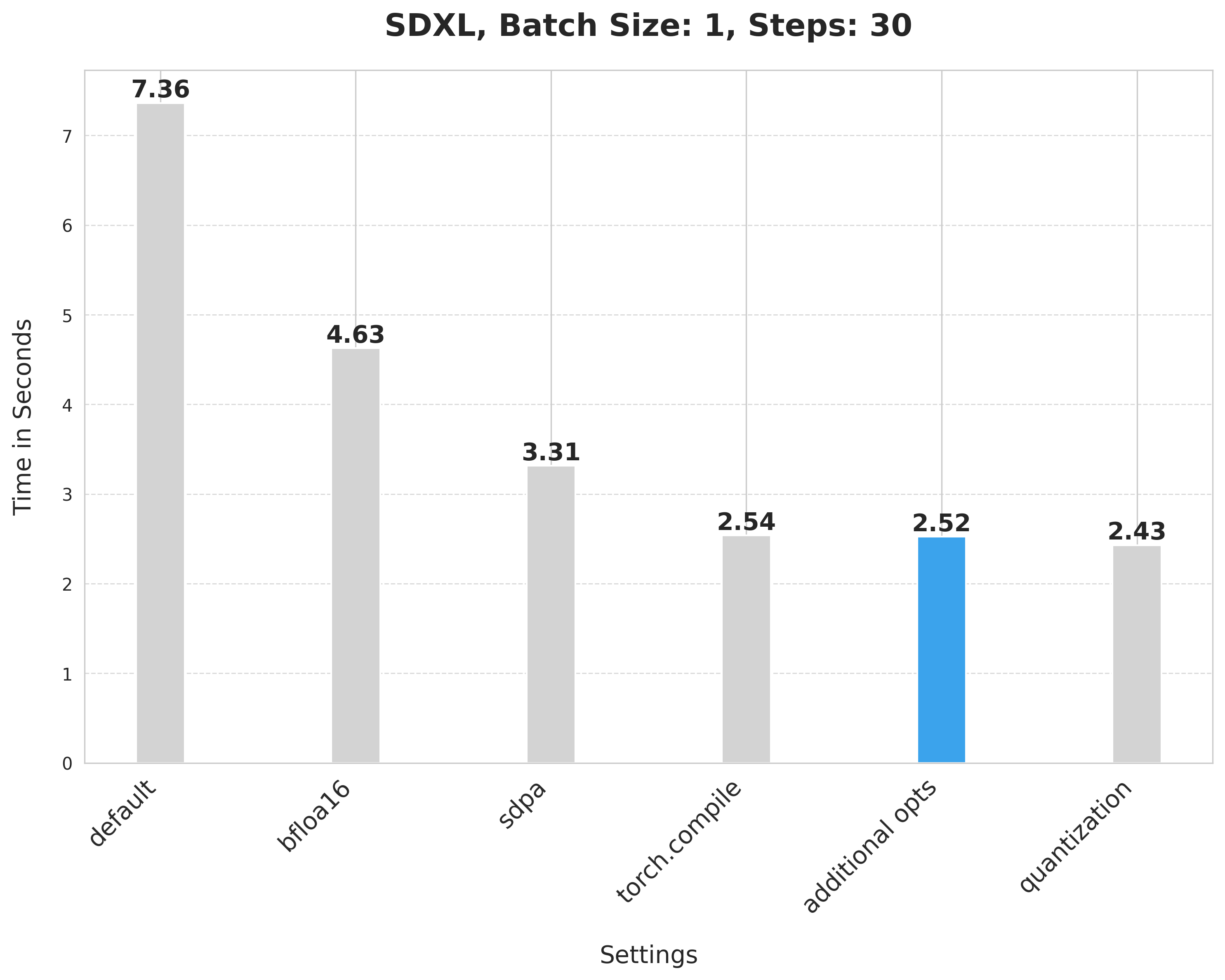

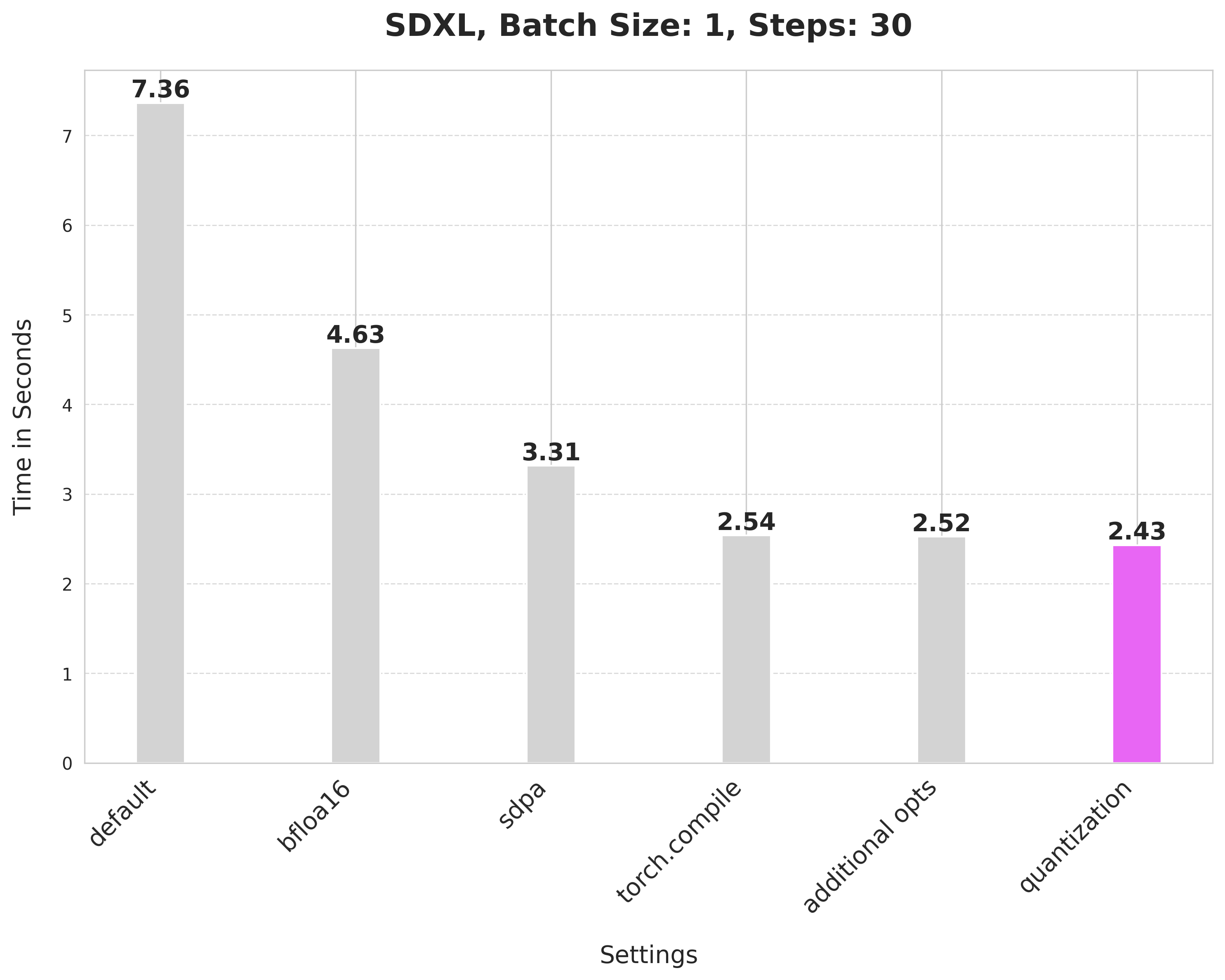

-This default setup takes 7.36 seconds.

-

-

-

-

-

-## bfloat16

-

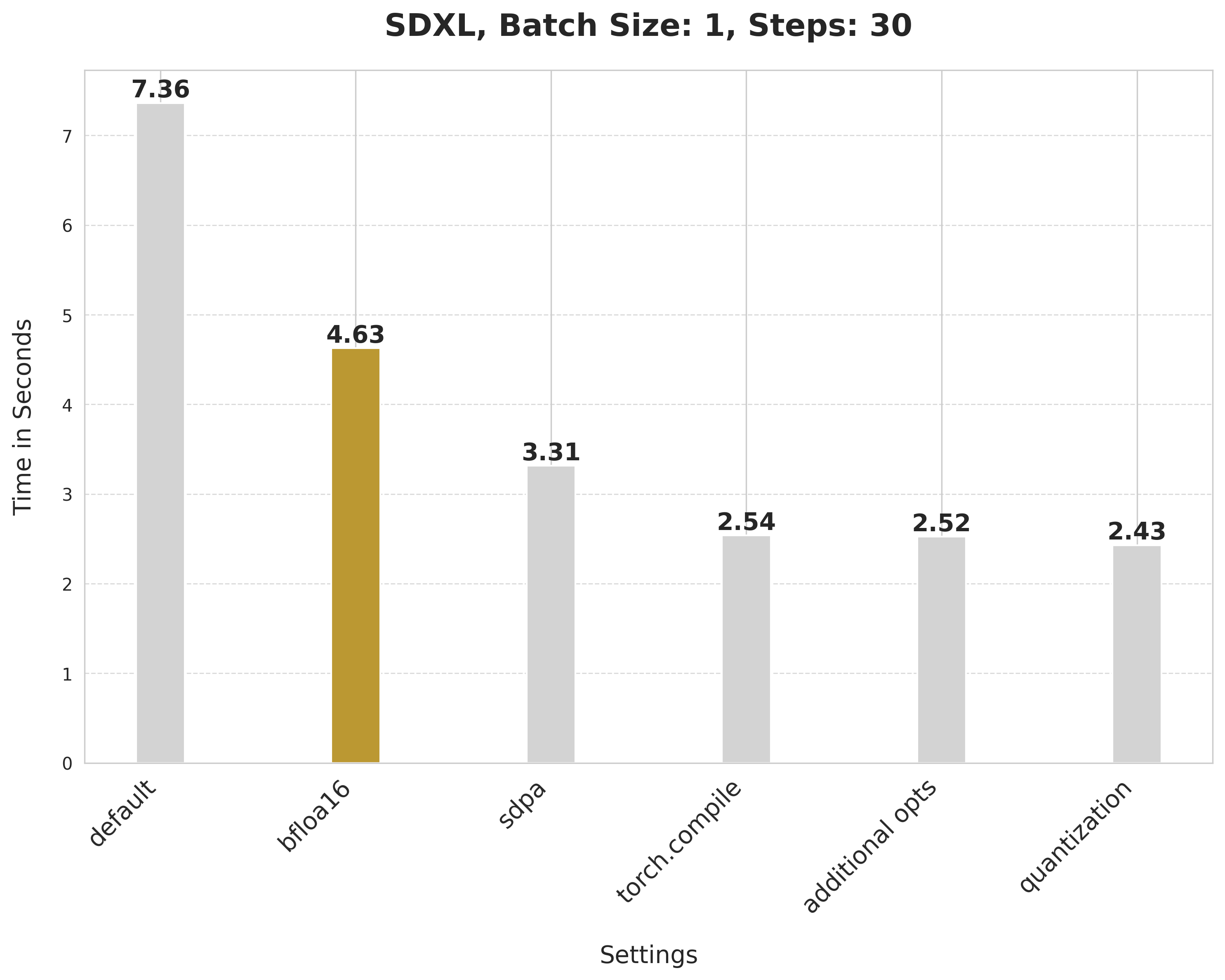

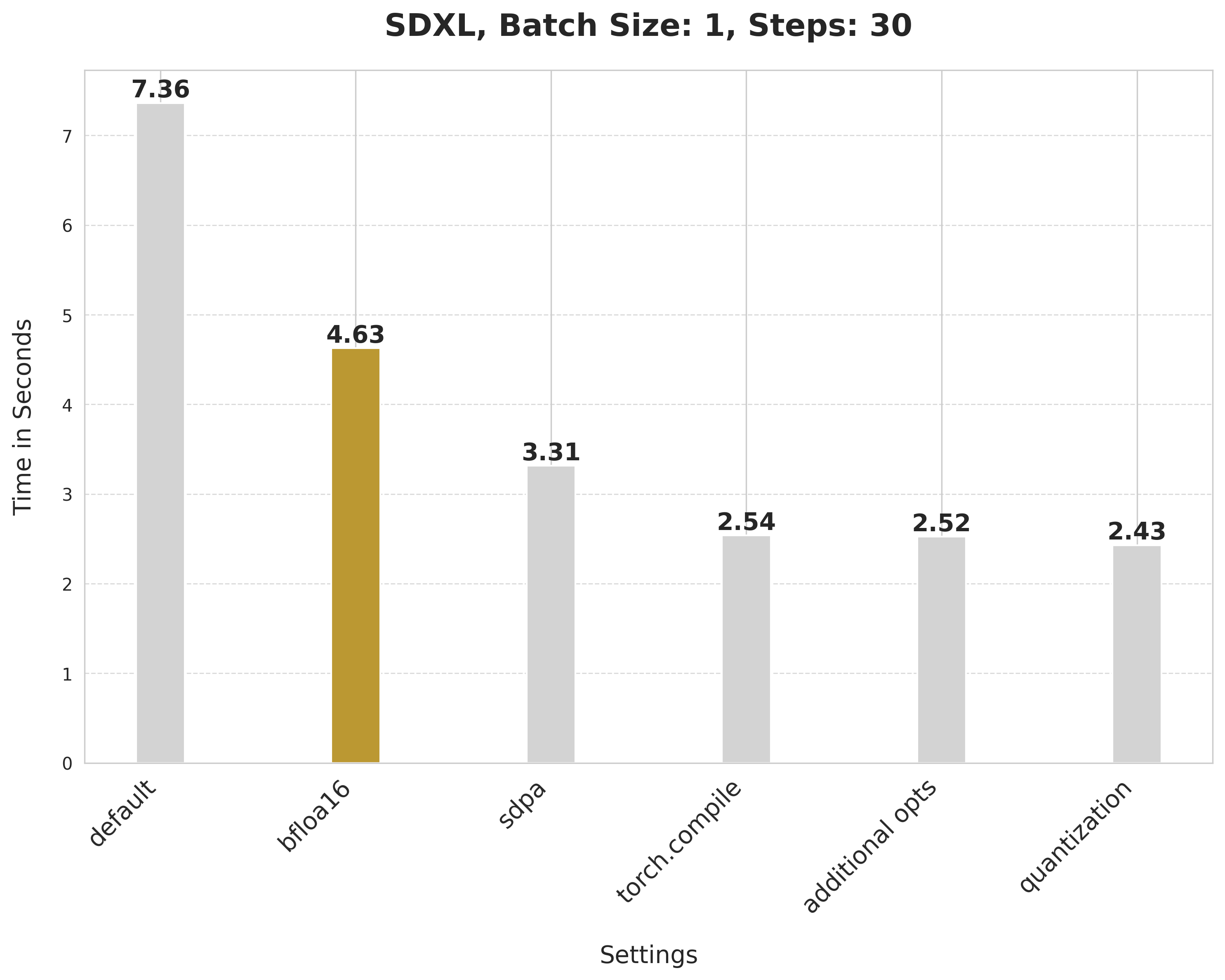

-Enable the first optimization, reduced precision or more specifically bfloat16. There are several benefits of using reduced precision:

-

-* Using a reduced numerical precision (such as float16 or bfloat16) for inference doesn’t affect the generation quality but significantly improves latency.

-* The benefits of using bfloat16 compared to float16 are hardware dependent, but modern GPUs tend to favor bfloat16.

-* bfloat16 is much more resilient when used with quantization compared to float16, but more recent versions of the quantization library ([torchao](https://github.com/pytorch-labs/ao)) we used don't have numerical issues with float16.

-

-```python

-from diffusers import StableDiffusionXLPipeline

-import torch

-

-pipe = StableDiffusionXLPipeline.from_pretrained(

- "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.bfloat16

-).to("cuda")

-

-# Run the attention ops without SDPA.

-pipe.unet.set_default_attn_processor()

-pipe.vae.set_default_attn_processor()

-

-prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"

-image = pipe(prompt, num_inference_steps=30).images[0]

-```

-

-bfloat16 reduces the latency from 7.36 seconds to 4.63 seconds.

-

-

-

-

-

-

-

-In our later experiments with float16, recent versions of torchao do not incur numerical problems from float16.

-

-

-

-Take a look at the [Speed up inference](../optimization/fp16) guide to learn more about running inference with reduced precision.

-

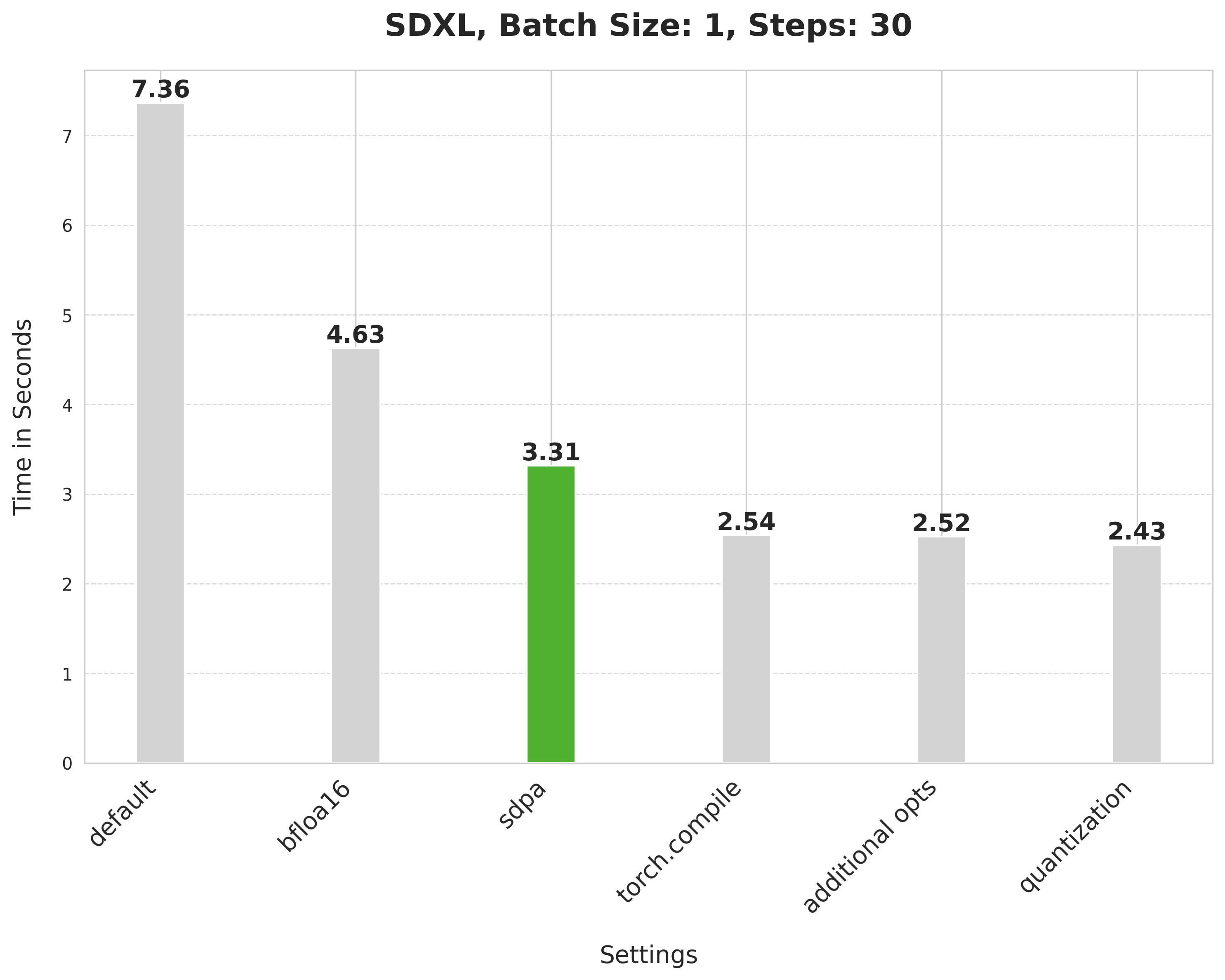

-## SDPA

-

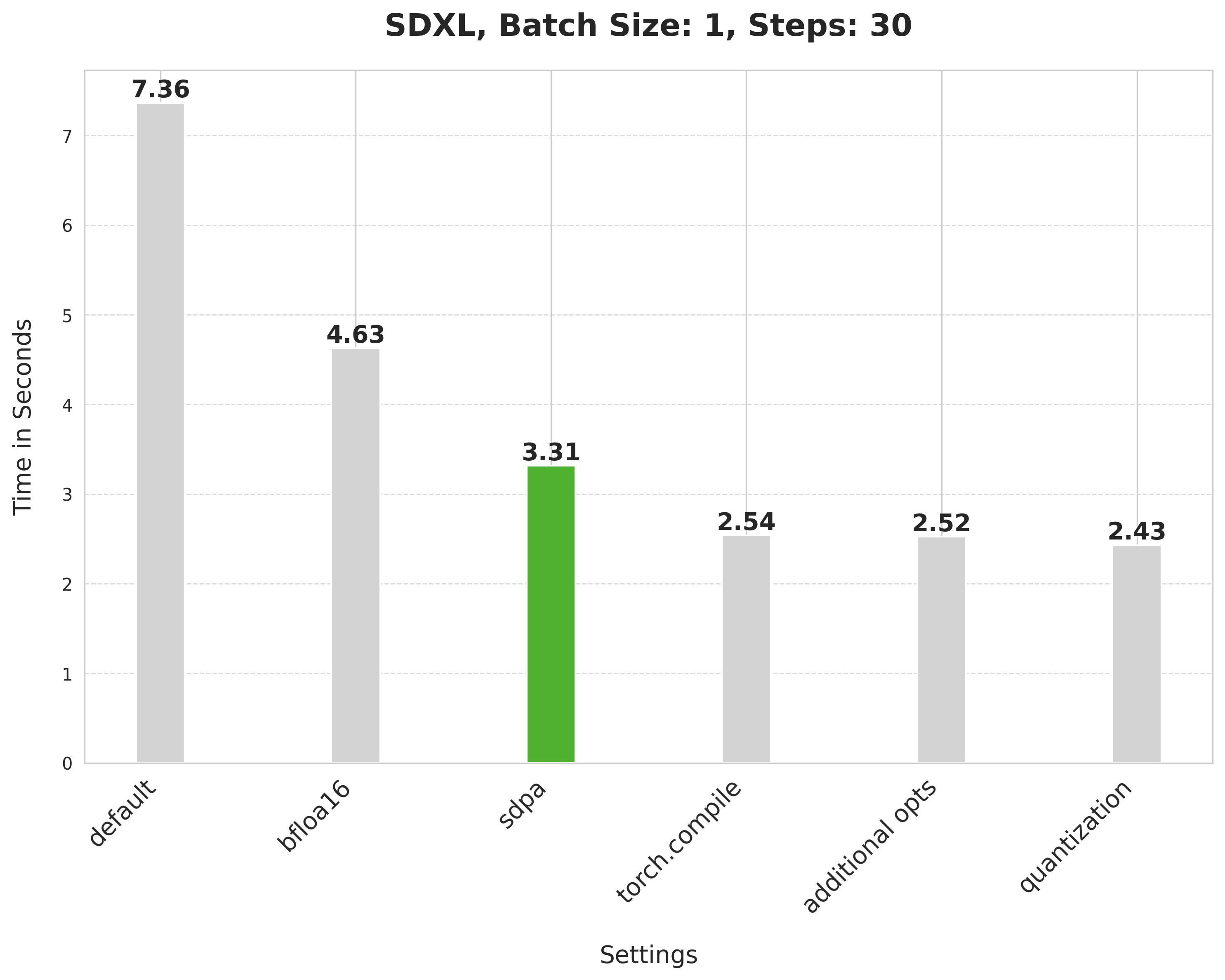

-Attention blocks are intensive to run. But with PyTorch's [`scaled_dot_product_attention`](../optimization/torch2.0#scaled-dot-product-attention) function, it is a lot more efficient. This function is used by default in Diffusers so you don't need to make any changes to the code.

-

-```python

-from diffusers import StableDiffusionXLPipeline

-import torch

-

-pipe = StableDiffusionXLPipeline.from_pretrained(

- "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.bfloat16

-).to("cuda")

-

-prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"

-image = pipe(prompt, num_inference_steps=30).images[0]

-```

-

-Scaled dot product attention improves the latency from 4.63 seconds to 3.31 seconds.

-

-

-

-

-

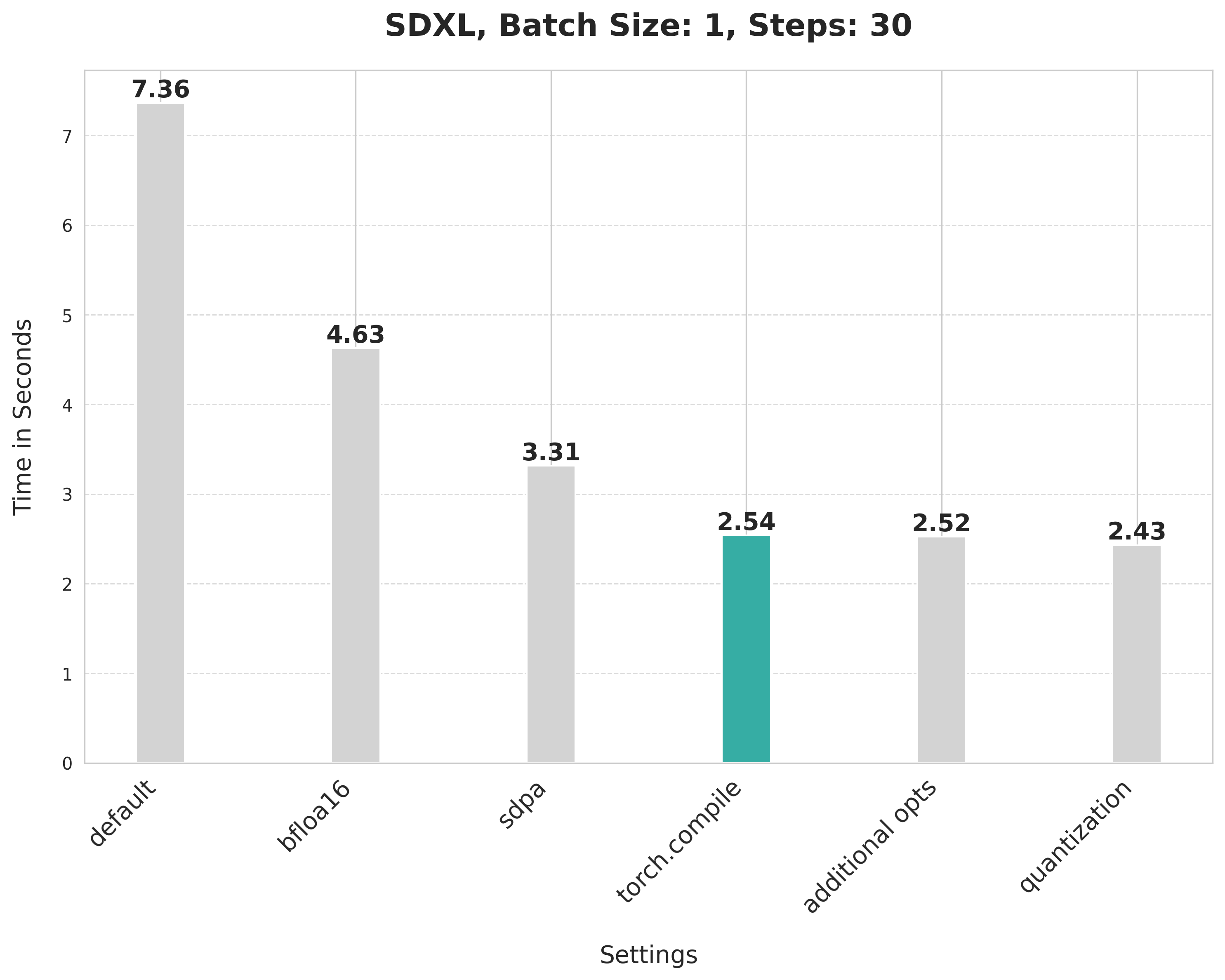

-## torch.compile

-

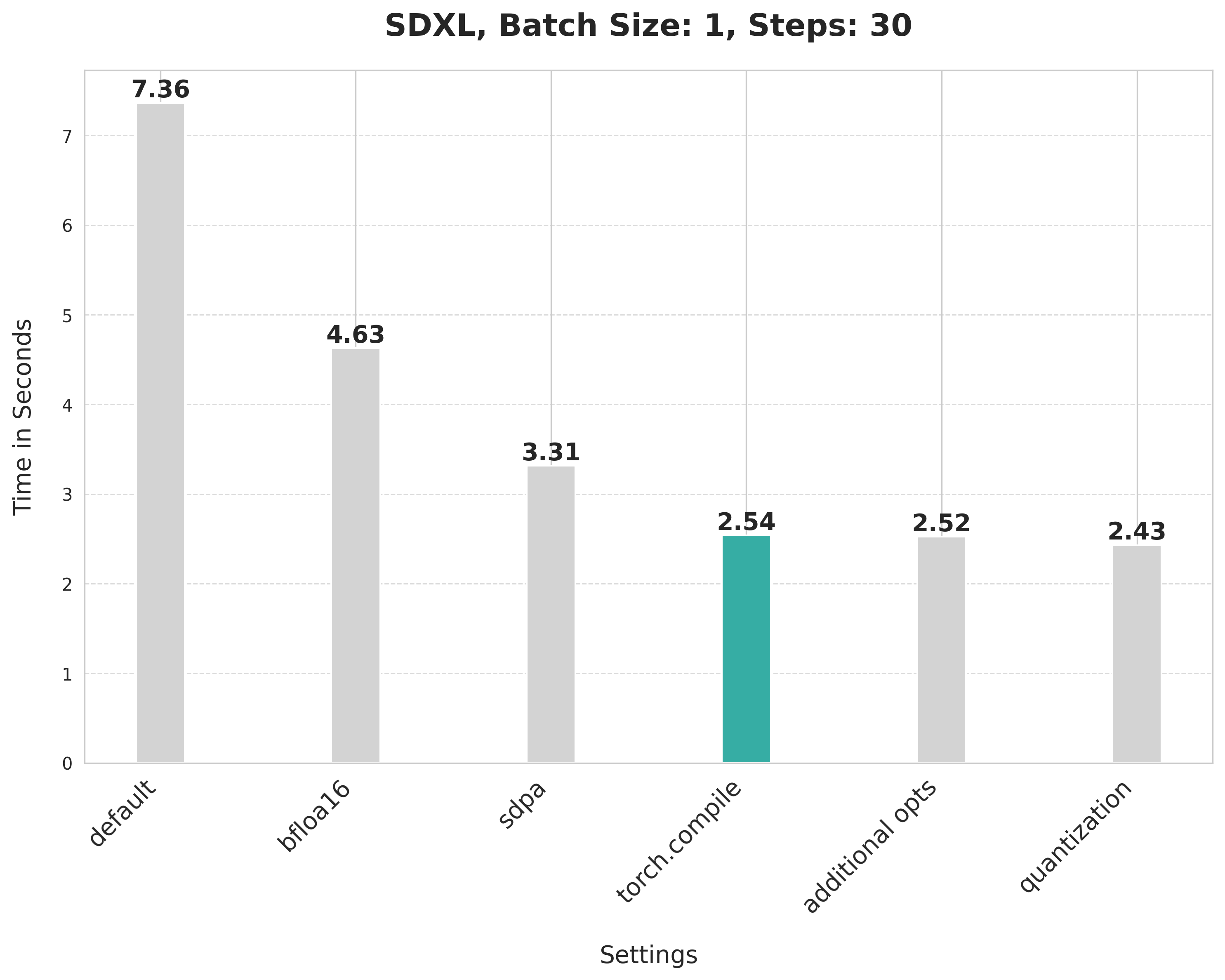

-PyTorch 2 includes `torch.compile` which uses fast and optimized kernels. In Diffusers, the UNet and VAE are usually compiled because these are the most compute-intensive modules. First, configure a few compiler flags (refer to the [full list](https://github.com/pytorch/pytorch/blob/main/torch/_inductor/config.py) for more options):

-

-```python

-from diffusers import StableDiffusionXLPipeline

-import torch

-

-torch._inductor.config.conv_1x1_as_mm = True

-torch._inductor.config.coordinate_descent_tuning = True

-torch._inductor.config.epilogue_fusion = False

-torch._inductor.config.coordinate_descent_check_all_directions = True

-```

-

-It is also important to change the UNet and VAE's memory layout to "channels_last" when compiling them to ensure maximum speed.

-

-```python

-pipe.unet.to(memory_format=torch.channels_last)

-pipe.vae.to(memory_format=torch.channels_last)

-```

-

-Now compile and perform inference:

-

-```python

-# Compile the UNet and VAE.

-pipe.unet = torch.compile(pipe.unet, mode="max-autotune", fullgraph=True)

-pipe.vae.decode = torch.compile(pipe.vae.decode, mode="max-autotune", fullgraph=True)

-

-prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"

-

-# First call to `pipe` is slow, subsequent ones are faster.

-image = pipe(prompt, num_inference_steps=30).images[0]

-```

-

-`torch.compile` offers different backends and modes. For maximum inference speed, use "max-autotune" for the inductor backend. “max-autotune” uses CUDA graphs and optimizes the compilation graph specifically for latency. CUDA graphs greatly reduces the overhead of launching GPU operations by using a mechanism to launch multiple GPU operations through a single CPU operation.

-

-Using SDPA attention and compiling both the UNet and VAE cuts the latency from 3.31 seconds to 2.54 seconds.

-

-

-

-

-

-> [!TIP]

-> From PyTorch 2.3.1, you can control the caching behavior of `torch.compile()`. This is particularly beneficial for compilation modes like `"max-autotune"` which performs a grid-search over several compilation flags to find the optimal configuration. Learn more in the [Compile Time Caching in torch.compile](https://pytorch.org/tutorials/recipes/torch_compile_caching_tutorial.html) tutorial.

-

-### Prevent graph breaks

-

-Specifying `fullgraph=True` ensures there are no graph breaks in the underlying model to take full advantage of `torch.compile` without any performance degradation. For the UNet and VAE, this means changing how you access the return variables.

-

-```diff

-- latents = unet(

-- latents, timestep=timestep, encoder_hidden_states=prompt_embeds

--).sample

-

-+ latents = unet(

-+ latents, timestep=timestep, encoder_hidden_states=prompt_embeds, return_dict=False

-+)[0]

-```

-

-### Remove GPU sync after compilation

-

-During the iterative reverse diffusion process, the `step()` function is [called](https://github.com/huggingface/diffusers/blob/1d686bac8146037e97f3fd8c56e4063230f71751/src/diffusers/pipelines/stable_diffusion_xl/pipeline_stable_diffusion_xl.py#L1228) on the scheduler each time after the denoiser predicts the less noisy latent embeddings. Inside `step()`, the `sigmas` variable is [indexed](https://github.com/huggingface/diffusers/blob/1d686bac8146037e97f3fd8c56e4063230f71751/src/diffusers/schedulers/scheduling_euler_discrete.py#L476) which when placed on the GPU, causes a communication sync between the CPU and GPU. This introduces latency and it becomes more evident when the denoiser has already been compiled.

-

-But if the `sigmas` array always [stays on the CPU](https://github.com/huggingface/diffusers/blob/35a969d297cba69110d175ee79c59312b9f49e1e/src/diffusers/schedulers/scheduling_euler_discrete.py#L240), the CPU and GPU sync doesn’t occur and you don't get any latency. In general, any CPU and GPU communication sync should be none or be kept to a bare minimum because it can impact inference latency.

-

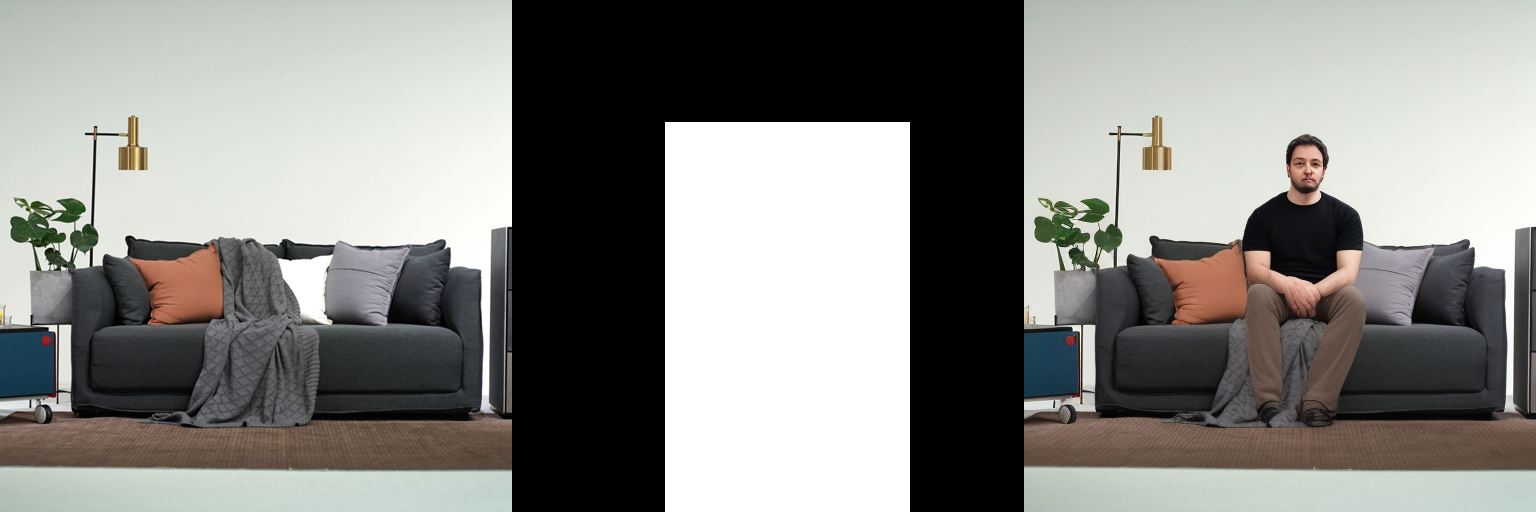

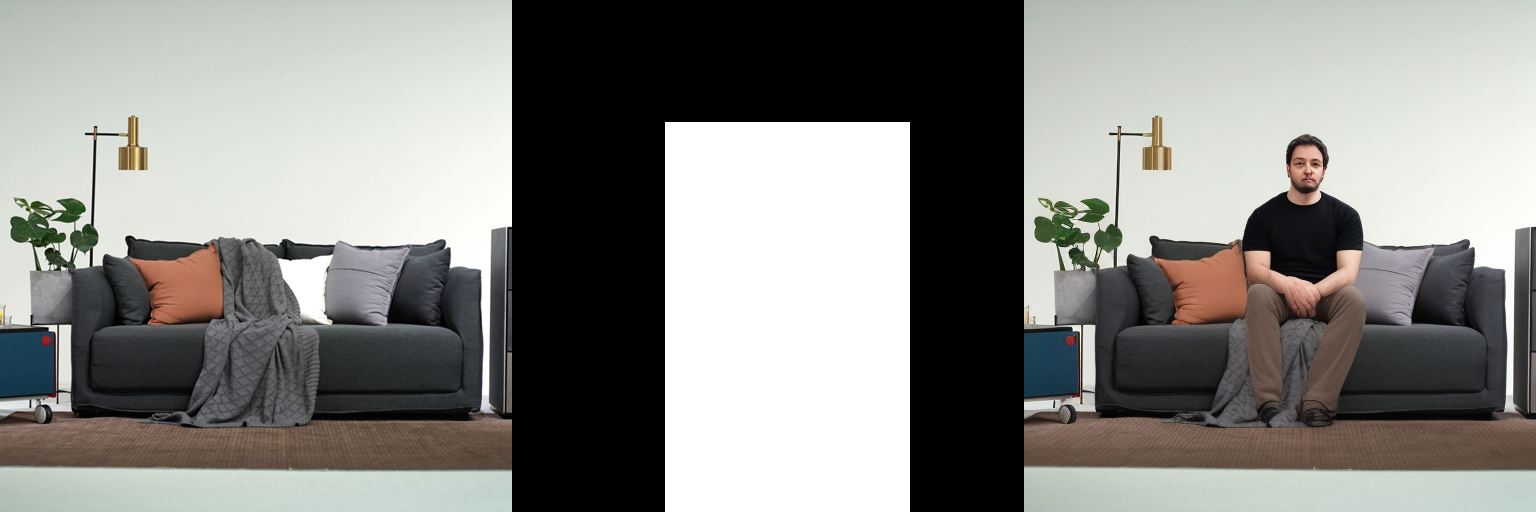

-## Combine the attention block's projection matrices

-

-The UNet and VAE in SDXL use Transformer-like blocks which consists of attention blocks and feed-forward blocks.

-

-In an attention block, the input is projected into three sub-spaces using three different projection matrices – Q, K, and V. These projections are performed separately on the input. But we can horizontally combine the projection matrices into a single matrix and perform the projection in one step. This increases the size of the matrix multiplications of the input projections and improves the impact of quantization.

-

-You can combine the projection matrices with just a single line of code:

-

-```python

-pipe.fuse_qkv_projections()

-```

-

-This provides a minor improvement from 2.54 seconds to 2.52 seconds.

-

-

-

-

-

-

-

-Support for [`~StableDiffusionXLPipeline.fuse_qkv_projections`] is limited and experimental. It's not available for many non-Stable Diffusion pipelines such as [Kandinsky](../using-diffusers/kandinsky). You can refer to this [PR](https://github.com/huggingface/diffusers/pull/6179) to get an idea about how to enable this for the other pipelines.

-

-

-

-## Dynamic quantization

-

-You can also use the ultra-lightweight PyTorch quantization library, [torchao](https://github.com/pytorch-labs/ao) (commit SHA `54bcd5a10d0abbe7b0c045052029257099f83fd9`), to apply [dynamic int8 quantization](https://pytorch.org/tutorials/recipes/recipes/dynamic_quantization.html) to the UNet and VAE. Quantization adds additional conversion overhead to the model that is hopefully made up for by faster matmuls (dynamic quantization). If the matmuls are too small, these techniques may degrade performance.

-

-First, configure all the compiler tags:

-

-```python

-from diffusers import StableDiffusionXLPipeline

-import torch

-

-# Notice the two new flags at the end.

-torch._inductor.config.conv_1x1_as_mm = True

-torch._inductor.config.coordinate_descent_tuning = True

-torch._inductor.config.epilogue_fusion = False

-torch._inductor.config.coordinate_descent_check_all_directions = True

-torch._inductor.config.force_fuse_int_mm_with_mul = True

-torch._inductor.config.use_mixed_mm = True

-```

-

-Certain linear layers in the UNet and VAE don’t benefit from dynamic int8 quantization. You can filter out those layers with the [`dynamic_quant_filter_fn`](https://github.com/huggingface/diffusion-fast/blob/0f169640b1db106fe6a479f78c1ed3bfaeba3386/utils/pipeline_utils.py#L16) shown below.

-

-```python

-def dynamic_quant_filter_fn(mod, *args):

- return (

- isinstance(mod, torch.nn.Linear)

- and mod.in_features > 16

- and (mod.in_features, mod.out_features)

- not in [

- (1280, 640),

- (1920, 1280),

- (1920, 640),

- (2048, 1280),

- (2048, 2560),

- (2560, 1280),

- (256, 128),

- (2816, 1280),

- (320, 640),

- (512, 1536),

- (512, 256),

- (512, 512),

- (640, 1280),

- (640, 1920),

- (640, 320),

- (640, 5120),

- (640, 640),

- (960, 320),

- (960, 640),

- ]

- )

-

-

-def conv_filter_fn(mod, *args):

- return (

- isinstance(mod, torch.nn.Conv2d) and mod.kernel_size == (1, 1) and 128 in [mod.in_channels, mod.out_channels]

- )

-```

-

-Finally, apply all the optimizations discussed so far:

-

-```python

-# SDPA + bfloat16.

-pipe = StableDiffusionXLPipeline.from_pretrained(

- "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.bfloat16

-).to("cuda")

-

-# Combine attention projection matrices.

-pipe.fuse_qkv_projections()

-

-# Change the memory layout.

-pipe.unet.to(memory_format=torch.channels_last)

-pipe.vae.to(memory_format=torch.channels_last)

-```

-

-Since dynamic quantization is only limited to the linear layers, convert the appropriate pointwise convolution layers into linear layers to maximize its benefit.

-

-```python

-from torchao import swap_conv2d_1x1_to_linear

-

-swap_conv2d_1x1_to_linear(pipe.unet, conv_filter_fn)

-swap_conv2d_1x1_to_linear(pipe.vae, conv_filter_fn)

-```

-

-Apply dynamic quantization:

-

-```python

-from torchao import apply_dynamic_quant

-

-apply_dynamic_quant(pipe.unet, dynamic_quant_filter_fn)

-apply_dynamic_quant(pipe.vae, dynamic_quant_filter_fn)

-```

-

-Finally, compile and perform inference:

-

-```python

-pipe.unet = torch.compile(pipe.unet, mode="max-autotune", fullgraph=True)

-pipe.vae.decode = torch.compile(pipe.vae.decode, mode="max-autotune", fullgraph=True)

-

-prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"

-image = pipe(prompt, num_inference_steps=30).images[0]

-```

-

-Applying dynamic quantization improves the latency from 2.52 seconds to 2.43 seconds.

-

-

-

-

diff --git a/docs/source/en/tutorials/using_peft_for_inference.md b/docs/source/en/tutorials/using_peft_for_inference.md

index f17113ecb8..7199361d5e 100644

--- a/docs/source/en/tutorials/using_peft_for_inference.md

+++ b/docs/source/en/tutorials/using_peft_for_inference.md

@@ -103,7 +103,7 @@ pipeline("A cute cnmt eating a slice of pizza, stunning color scheme, masterpiec

## torch.compile

-[torch.compile](../optimization/torch2.0#torchcompile) speeds up inference by compiling the PyTorch model to use optimized kernels. Before compiling, the LoRA weights need to be fused into the base model and unloaded first.

+[torch.compile](../optimization/fp16#torchcompile) speeds up inference by compiling the PyTorch model to use optimized kernels. Before compiling, the LoRA weights need to be fused into the base model and unloaded first.

```py

import torch

diff --git a/docs/source/en/using-diffusers/conditional_image_generation.md b/docs/source/en/using-diffusers/conditional_image_generation.md

index b58b3b74b9..0afbcaabe8 100644

--- a/docs/source/en/using-diffusers/conditional_image_generation.md

+++ b/docs/source/en/using-diffusers/conditional_image_generation.md

@@ -303,7 +303,7 @@ There are many types of conditioning inputs you can use, and 🤗 Diffusers supp

Diffusion models are large, and the iterative nature of denoising an image is computationally expensive and intensive. But this doesn't mean you need access to powerful - or even many - GPUs to use them. There are many optimization techniques for running diffusion models on consumer and free-tier resources. For example, you can load model weights in half-precision to save GPU memory and increase speed or offload the entire model to the GPU to save even more memory.

-PyTorch 2.0 also supports a more memory-efficient attention mechanism called [*scaled dot product attention*](../optimization/torch2.0#scaled-dot-product-attention) that is automatically enabled if you're using PyTorch 2.0. You can combine this with [`torch.compile`](https://pytorch.org/tutorials/intermediate/torch_compile_tutorial.html) to speed your code up even more:

+PyTorch 2.0 also supports a more memory-efficient attention mechanism called [*scaled dot product attention*](../optimization/fp16#scaled-dot-product-attention) that is automatically enabled if you're using PyTorch 2.0. You can combine this with [`torch.compile`](https://pytorch.org/tutorials/intermediate/torch_compile_tutorial.html) to speed your code up even more:

```py

from diffusers import AutoPipelineForText2Image

@@ -313,4 +313,4 @@ pipeline = AutoPipelineForText2Image.from_pretrained("stable-diffusion-v1-5/stab

pipeline.unet = torch.compile(pipeline.unet, mode="reduce-overhead", fullgraph=True)

```

-For more tips on how to optimize your code to save memory and speed up inference, read the [Memory and speed](../optimization/fp16) and [Torch 2.0](../optimization/torch2.0) guides.

+For more tips on how to optimize your code to save memory and speed up inference, read the [Accelerate inference](../optimization/fp16) and [Reduce memory usage](../optimization/memory) guides.

diff --git a/docs/source/en/using-diffusers/controlling_generation.md b/docs/source/en/using-diffusers/controlling_generation.md

index c1320dce2a..5d1956ce2c 100644

--- a/docs/source/en/using-diffusers/controlling_generation.md

+++ b/docs/source/en/using-diffusers/controlling_generation.md

@@ -65,14 +65,14 @@ For convenience, we provide a table to denote which methods are inference-only a

| [Fabric](#fabric) | ✅ | ❌ | |

## InstructPix2Pix

-[Paper](https://arxiv.org/abs/2211.09800)

+[Paper](https://huggingface.co/papers/2211.09800)

[InstructPix2Pix](../api/pipelines/pix2pix) is fine-tuned from Stable Diffusion to support editing input images. It takes as inputs an image and a prompt describing an edit, and it outputs the edited image.

InstructPix2Pix has been explicitly trained to work well with [InstructGPT](https://openai.com/blog/instruction-following/)-like prompts.

## Pix2Pix Zero

-[Paper](https://arxiv.org/abs/2302.03027)

+[Paper](https://huggingface.co/papers/2302.03027)

[Pix2Pix Zero](../api/pipelines/pix2pix_zero) allows modifying an image so that one concept or subject is translated to another one while preserving general image semantics.

@@ -104,7 +104,7 @@ apply Pix2Pix Zero to any of the available Stable Diffusion models.

## Attend and Excite

-[Paper](https://arxiv.org/abs/2301.13826)

+[Paper](https://huggingface.co/papers/2301.13826)

[Attend and Excite](../api/pipelines/attend_and_excite) allows subjects in the prompt to be faithfully represented in the final image.

@@ -114,7 +114,7 @@ Like Pix2Pix Zero, Attend and Excite also involves a mini optimization loop (lea

## Semantic Guidance (SEGA)

-[Paper](https://arxiv.org/abs/2301.12247)

+[Paper](https://huggingface.co/papers/2301.12247)

[SEGA](../api/pipelines/semantic_stable_diffusion) allows applying or removing one or more concepts from an image. The strength of the concept can also be controlled. I.e. the smile concept can be used to incrementally increase or decrease the smile of a portrait.

@@ -124,7 +124,7 @@ Unlike Pix2Pix Zero or Attend and Excite, SEGA directly interacts with the diffu

## Self-attention Guidance (SAG)

-[Paper](https://arxiv.org/abs/2210.00939)

+[Paper](https://huggingface.co/papers/2210.00939)

[Self-attention Guidance](../api/pipelines/self_attention_guidance) improves the general quality of images.

@@ -140,7 +140,7 @@ It conditions on a monocular depth estimate of the original image.

## MultiDiffusion Panorama

-[Paper](https://arxiv.org/abs/2302.08113)

+[Paper](https://huggingface.co/papers/2302.08113)

[MultiDiffusion Panorama](../api/pipelines/panorama) defines a new generation process over a pre-trained diffusion model. This process binds together multiple diffusion generation methods that can be readily applied to generate high quality and diverse images. Results adhere to user-provided controls, such as desired aspect ratio (e.g., panorama), and spatial guiding signals, ranging from tight segmentation masks to bounding boxes.

MultiDiffusion Panorama allows to generate high-quality images at arbitrary aspect ratios (e.g., panoramas).

@@ -157,13 +157,13 @@ In addition to pre-trained models, Diffusers has training scripts for fine-tunin

## Textual Inversion

-[Paper](https://arxiv.org/abs/2208.01618)

+[Paper](https://huggingface.co/papers/2208.01618)

[Textual Inversion](../training/text_inversion) fine-tunes a model to teach it about a new concept. I.e. a few pictures of a style of artwork can be used to generate images in that style.

## ControlNet

-[Paper](https://arxiv.org/abs/2302.05543)

+[Paper](https://huggingface.co/papers/2302.05543)

[ControlNet](../api/pipelines/controlnet) is an auxiliary network which adds an extra condition.

There are 8 canonical pre-trained ControlNets trained on different conditionings such as edge detection, scribbles,

@@ -176,7 +176,7 @@ input.

## Custom Diffusion

-[Paper](https://arxiv.org/abs/2212.04488)

+[Paper](https://huggingface.co/papers/2212.04488)

[Custom Diffusion](../training/custom_diffusion) only fine-tunes the cross-attention maps of a pre-trained

text-to-image diffusion model. It also allows for additionally performing Textual Inversion. It supports

@@ -186,7 +186,7 @@ concept(s) of interest.

## Model Editing

-[Paper](https://arxiv.org/abs/2303.08084)

+[Paper](https://huggingface.co/papers/2303.08084)

The [text-to-image model editing pipeline](../api/pipelines/model_editing) helps you mitigate some of the incorrect implicit assumptions a pre-trained text-to-image

diffusion model might make about the subjects present in the input prompt. For example, if you prompt Stable Diffusion to generate images for "A pack of roses", the roses in the generated images

@@ -194,14 +194,14 @@ are more likely to be red. This pipeline helps you change that assumption.

## DiffEdit

-[Paper](https://arxiv.org/abs/2210.11427)

+[Paper](https://huggingface.co/papers/2210.11427)

[DiffEdit](../api/pipelines/diffedit) allows for semantic editing of input images along with

input prompts while preserving the original input images as much as possible.

## T2I-Adapter

-[Paper](https://arxiv.org/abs/2302.08453)

+[Paper](https://huggingface.co/papers/2302.08453)

[T2I-Adapter](../api/pipelines/stable_diffusion/adapter) is an auxiliary network which adds an extra condition.

There are 8 canonical pre-trained adapters trained on different conditionings such as edge detection, sketch,

@@ -209,7 +209,7 @@ depth maps, and semantic segmentations.

## Fabric

-[Paper](https://arxiv.org/abs/2307.10159)

+[Paper](https://huggingface.co/papers/2307.10159)

[Fabric](https://github.com/huggingface/diffusers/tree/442017ccc877279bcf24fbe92f92d3d0def191b6/examples/community#stable-diffusion-fabric-pipeline) is a training-free

approach applicable to a wide range of popular diffusion models, which exploits

diff --git a/docs/source/en/using-diffusers/custom_pipeline_overview.md b/docs/source/en/using-diffusers/custom_pipeline_overview.md

index 11d1173267..c5359fd0bc 100644

--- a/docs/source/en/using-diffusers/custom_pipeline_overview.md

+++ b/docs/source/en/using-diffusers/custom_pipeline_overview.md

@@ -18,7 +18,7 @@ specific language governing permissions and limitations under the License.

> [!TIP] Take a look at GitHub Issue [#841](https://github.com/huggingface/diffusers/issues/841) for more context about why we're adding community pipelines to help everyone easily share their work without being slowed down.

-Community pipelines are any [`DiffusionPipeline`] class that are different from the original paper implementation (for example, the [`StableDiffusionControlNetPipeline`] corresponds to the [Text-to-Image Generation with ControlNet Conditioning](https://arxiv.org/abs/2302.05543) paper). They provide additional functionality or extend the original implementation of a pipeline.

+Community pipelines are any [`DiffusionPipeline`] class that are different from the original paper implementation (for example, the [`StableDiffusionControlNetPipeline`] corresponds to the [Text-to-Image Generation with ControlNet Conditioning](https://huggingface.co/papers/2302.05543) paper). They provide additional functionality or extend the original implementation of a pipeline.

There are many cool community pipelines like [Marigold Depth Estimation](https://github.com/huggingface/diffusers/tree/main/examples/community#marigold-depth-estimation) or [InstantID](https://github.com/huggingface/diffusers/tree/main/examples/community#instantid-pipeline), and you can find all the official community pipelines [here](https://github.com/huggingface/diffusers/tree/main/examples/community).

diff --git a/docs/source/en/using-diffusers/img2img.md b/docs/source/en/using-diffusers/img2img.md

index d9902081fd..3175477f33 100644

--- a/docs/source/en/using-diffusers/img2img.md

+++ b/docs/source/en/using-diffusers/img2img.md

@@ -35,7 +35,7 @@ pipeline.enable_xformers_memory_efficient_attention()

-You'll notice throughout the guide, we use [`~DiffusionPipeline.enable_model_cpu_offload`] and [`~DiffusionPipeline.enable_xformers_memory_efficient_attention`], to save memory and increase inference speed. If you're using PyTorch 2.0, then you don't need to call [`~DiffusionPipeline.enable_xformers_memory_efficient_attention`] on your pipeline because it'll already be using PyTorch 2.0's native [scaled-dot product attention](../optimization/torch2.0#scaled-dot-product-attention).